When comparing checkpoints (steps, methods, etc.), what parameters do you guys use on XYZ? Only seed and checkpoint?

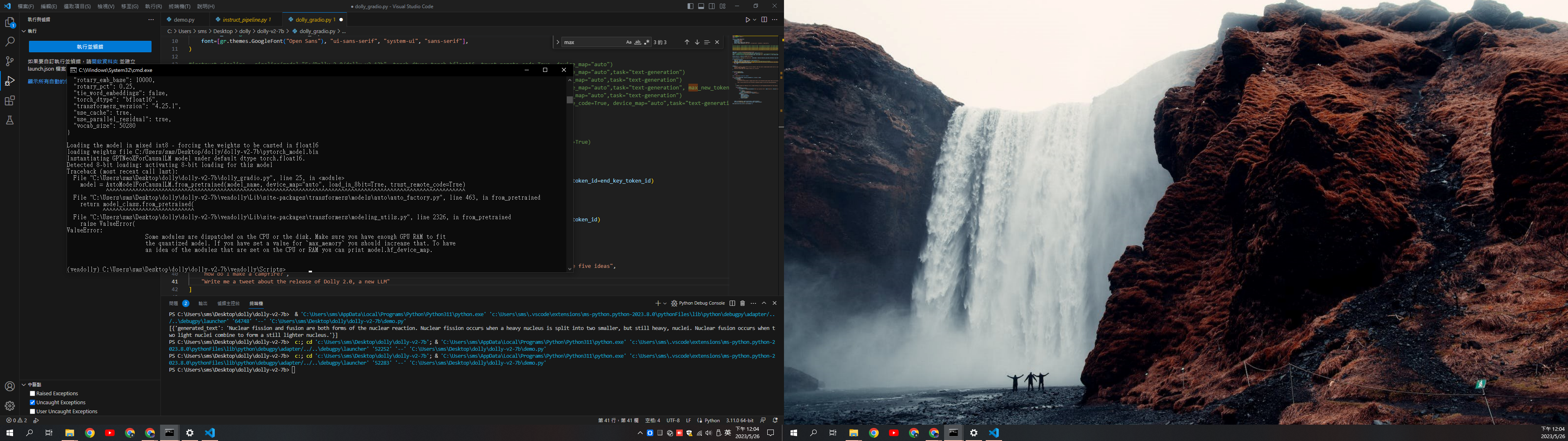

Traceback (most recent call last):
  File "/workspace/kohya_ss/train_network.py", line 783, in <module>
    train(args)
  File "/workspace/kohya_ss/train_network.py", line 146, in train
    text_encoder, vae, unet, _ = train_util.load_target_model(args, weight_dtype, accelerator)
  File "/workspace/kohya_ss/library/train_util.py", line 3023, in load_target_model
    text_encoder, vae, unet, load_stable_diffusion_format = _load_target_model(
  File "/workspace/kohya_ss/library/train_util.py", line 2989, in _load_target_model

subprocess.CalledProcessError: Command '['C:\\Stable-Diffusion\\kohya_ss\\venv\\Scripts\\python.exe', 'train_network.py', '--enable_bucket', '--pretrained_model_name_or_path=runwayml/stable-diffusion-v1-5', '--train_data_dir=C:/Stable-Diffusion/Emma/Process lora/Emma lora/image/', '--resolution=512,512', '--output_dir=C:/Stable-Diffusion/Emma/Process lora/Emma lora/model', '--logging_dir=C:/Stable-Diffusion/Emma/Process lora/Emma lora/log', '--network_alpha=1', '--save_model_as=safetensors', '--network_module=networks.lora', '--text_encoder_lr=5e-5', '--unet_lr=0.0001', '--network_dim=8', '--network_weights=C:/Users/archi/Downloads/LoraLowVRAMSettings.json', '--output_name=last', '--lr_scheduler_num_cycles=1', '--learning_rate=0.0001', '--lr_scheduler=cosine', '--lr_warmup_steps=150', '--train_batch_size=1', '--max_train_steps=1496', '--save_every_n_epochs=1', '--mixed_precision=fp16', '--save_precision=fp16', '--seed=1234', '--cache_latents', '--bucket_reso_steps=64', '--mem_eff_attn', '--gradient_checkpointing', '--xformers', '--use_8bit_adam', '--bucket_no_upscale']' returned non-zero exit status 1.
