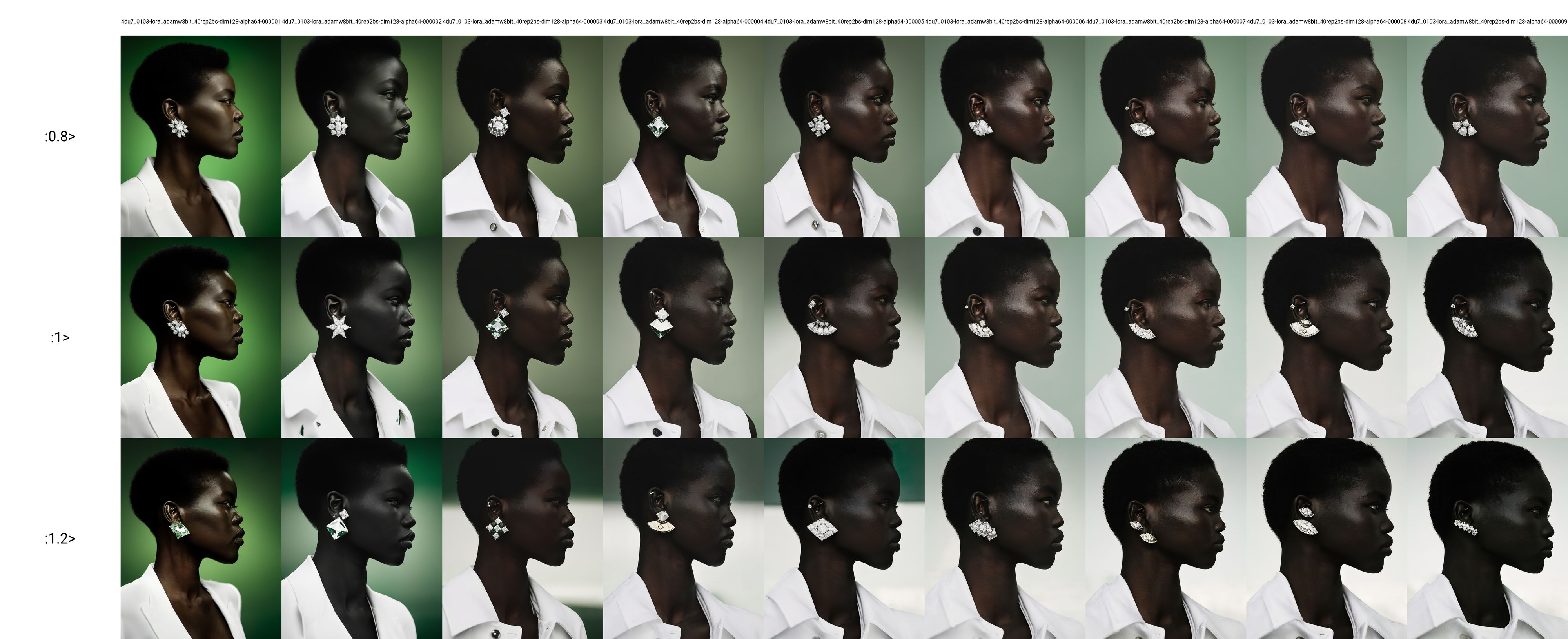

I got pretty impressive results with batch size 2, which sped up SDXL AdamW8bit training by 30%, and batch size 4 by 60%. For me it's somewhere in there. I still need to check flexibility, but I'm sure there are other experimental variables to test. Here's a dataset of 12 images and an XY plot with batch size 2 up to 9 epochs (5000 steps): 5 hours, vs 9 hours at batch size 1.