How to make it public

I am using a mobile device

No, Colab cannot be used

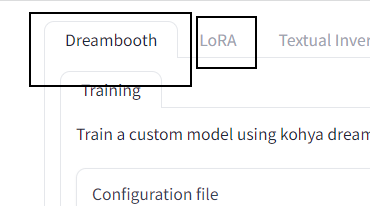

It should only say Lora at the top

1024 sdxl fail again

------------------------------------------------------------

Failures:

[1]:

time : 2023-10-10_15:03:15

host : 19fe5077aa6e

rank : 1 (local_rank: 1)

exitcode : 2 (pid: 1098)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

------------------------------------------------------------

Root Cause (first observed failure):

[0]:

time : 2023-10-10_15:03:15

host : 19fe5077aa6e

rank : 0 (local_rank: 0)

exitcode : 2 (pid: 1097)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

train_network.py: error: argument --max_data_loader_n_workers: expected one argument

train_network.py: error: argument --max_data_loader_n_workers: expected one argument

Traceback (most recent call last):

File "/opt/conda/bin/accelerate", line 8, in <module>

sys.exit(main())

File "/opt/conda/lib/python3.10/site-packages/accelerate/commands/accelerate_cli.py", line 47, in main

args.func(args)

File "/opt/conda/lib/python3.10/site-packages/accelerate/commands/launch.py", line 977, in launch_command

multi_gpu_launcher(args)

File "/opt/conda/lib/python3.10/site-packages/accelerate/commands/launch.py", line 646, in multi_gpu_launcher

distrib_run.run(args)

File "/opt/conda/lib/python3.10/site-packages/torch/distributed/run.py", line 785, in run

elastic_launch(

File "/opt/conda/lib/python3.10/site-packages/torch/distributed/launcher/api.py", line 134, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/opt/conda/lib/python3.10/site-packages/torch/distributed/launcher/api.py", line 250, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:
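The line that matters in the log above is `argument --max_data_loader_n_workers: expected one argument`. That is the message Python's argparse prints when a flag that needs a value is passed with nothing after it, and argparse then exits with code 2, which matches the `exitcode : 2` entries. Here is a minimal sketch that reproduces it. The parser is a stand-in written for illustration, not the real train_network.py parser, and the default value is made up.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Stand-in parser (assumption): only the one flag named in the log,
    # with a hypothetical default value.
    parser = argparse.ArgumentParser(prog="train_network.py")
    parser.add_argument("--max_data_loader_n_workers", type=int, default=0)
    return parser


def try_parse(argv: list[str]) -> int:
    """Return 0 if the arguments parse, otherwise argparse's exit code."""
    try:
        build_parser().parse_args(argv)
        return 0
    except SystemExit as exc:
        return exc.code


# Flag followed by a value: parses fine.
ok = try_parse(["--max_data_loader_n_workers", "2"])

# Flag with nothing after it: argparse reports "expected one argument"
# on stderr and exits with code 2.
bad = try_parse(["--max_data_loader_n_workers"])

print(ok, bad)
```

So the thing to check is the launch command or config: the value after `--max_data_loader_n_workers` is probably empty or missing.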

I'm trying to put my face on this image, but it's not going well. I tried a regular LoRA with a positive prompt, roop and auto detailer, but it never gets the face right

I assume it has to do with distance and darkness

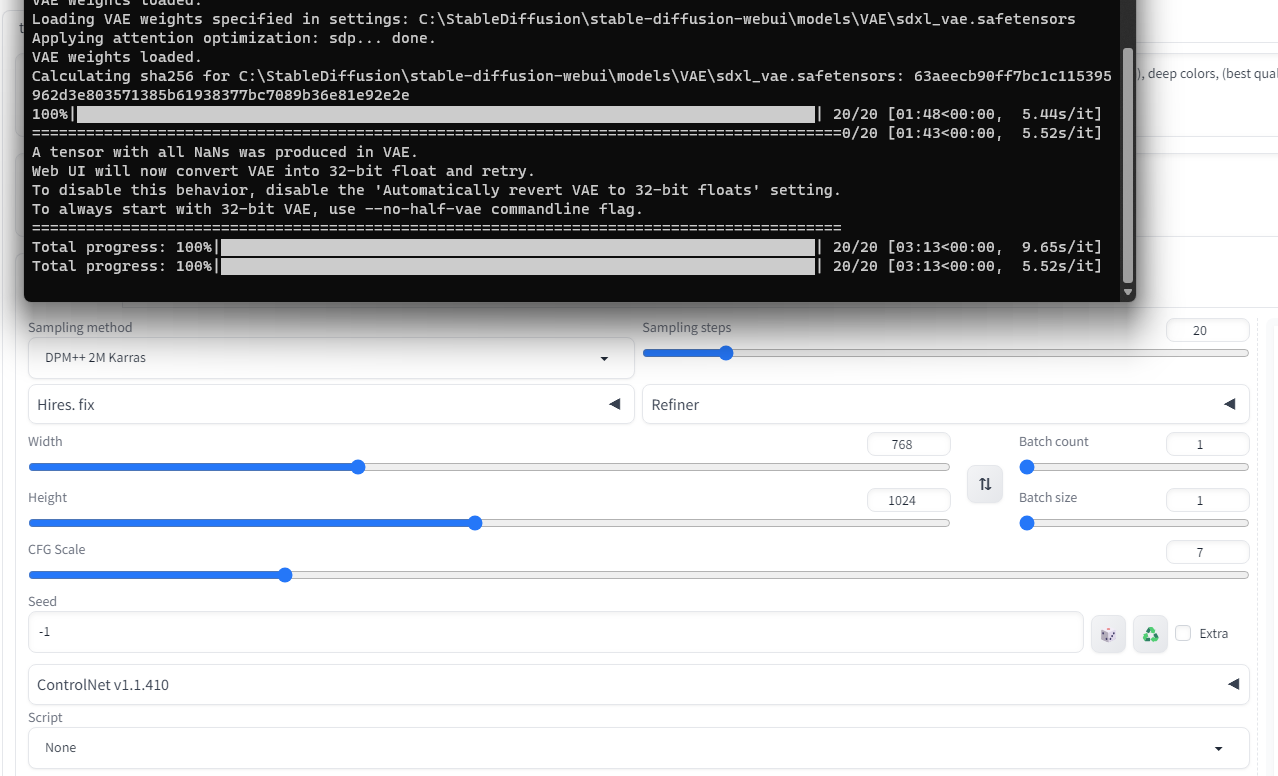

Hi friends, I have this problem: very slow image generation when using SDXL 1.0 on an RTX 3060 Ti GPU with 16 GB RAM. If I generate 1024x1024 with 20 steps, the generation time is 10 minutes; for 768x1024, for example, it is 20 minutes or more. I have seen many people report generation times of only a couple of minutes on the same GPU. I am using AUTOMATIC1111 v1.6.0 with the start parameter --opt-sdp-attention. I don't get any errors about lack of VRAM, just very slow generation. Maybe I should do a clean SD installation?

I enabled sdxl.vae and the image generation time went down, but I got an error. Anyway, it's not a 10-minute wait anymore :)

When generating at 40 steps, the generation time doubles.

none with A1111 so far and haven't tried kohya yet. Will in the next few days.

What are other good rare tokens to use for training besides ohwx? I would like to train other subjects

I also just noticed the change in the displayed speed units. I never paid attention before, but I was getting 1.6 sec/iteration on the 3060 and now I'm getting 0.31 sec/iteration (or 3.21 iterations/sec). That's about 5x faster, with better quality
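Since the progress display can show either sec/iteration or iterations/sec, here is a small sketch for comparing the two and estimating sampling time from the step count. The function names are made up for illustration, and the estimate assumes time grows linearly with steps and ignores fixed overhead such as model loading and VAE decoding.

```python
def to_sec_per_it(it_per_sec: float) -> float:
    """Convert iterations/sec to sec/iteration (they are reciprocals)."""
    return 1.0 / it_per_sec


def speedup(old_sec_per_it: float, new_sec_per_it: float) -> float:
    """How many times faster the new rate is than the old one."""
    return old_sec_per_it / new_sec_per_it


def estimate_seconds(steps: int, sec_per_it: float) -> float:
    """Rough sampling time, assuming every step costs the same."""
    return steps * sec_per_it


old_rate = 1.6                  # sec/iteration, from the message above
new_rate = to_sec_per_it(3.21)  # 3.21 iterations/sec, from the message above

print(round(new_rate, 2))                     # 0.31
print(round(speedup(old_rate, new_rate), 1))  # 5.1
print(estimate_seconds(20, old_rate))         # 32.0
print(estimate_seconds(40, old_rate))         # 64.0
```

Under the same linear assumption, going from 20 to 40 steps doubles the estimate, which matches the doubling reported earlier.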

I think I was able to fix the slow generation speed on the 3060 Ti. I now generate a 768x1024 image in 30-40 seconds. The problem is with Nvidia drivers from version 531 and up. I also used the --xformers parameter instead of --opt-sdp-attention.

https://github.com/vladmandic/automatic/discussions/1285

it seems that nVidia changed memory management in the latest versions of drivers, specifically 532 and 535. new behavior is that once gpu vram is exhausted, it will actually use shared memory thus c...
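To check which driver you are on, here is a rough sketch that reads the version with `nvidia-smi` and compares the major number against a threshold. The helper names are made up, and the default threshold of 532 is an assumption taken from the preview text above (the earlier message says 531 and up), so adjust it to whatever the linked discussion confirms.

```python
import shutil
import subprocess
from typing import Optional


def parse_driver_major(version: str) -> int:
    """Extract the major number from a version string such as '535.98'."""
    return int(version.strip().split(".")[0])


def may_spill_to_shared_memory(version: str, first_affected_major: int = 532) -> bool:
    # Threshold is an assumption, not an official NVIDIA statement.
    return parse_driver_major(version) >= first_affected_major


def query_driver_version() -> Optional[str]:
    """Ask nvidia-smi for the driver version; None if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    lines = result.stdout.strip().splitlines()
    if result.returncode != 0 or not lines:
        return None
    return lines[0].strip()


version = query_driver_version()
if version is None:
    print("nvidia-smi not available, cannot read the driver version")
else:
    print(version, may_spill_to_shared_memory(version))
```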

Hey guys

I'm a bit lost, can I use kohya to train model for SD 1.5?

If yes, which one gives better result between Dreambooth and Kohya?

Training SDXL with Kohya on an A6000 using the 48 GB config file: around 8000 steps takes 3 hours. Does that make sense?

good idea

I see there are Dreambooth and LoRA tabs

You need the special notebook we shared on Patreon

You really are not following what I am telling you

add --medvram

assuming it is 12 gb vram

Yes, you can use it, but the Dreambooth extension is better for SD 1.5

I will search for the best Kohya parameters for SD 1.5 too

after sdxl dreambooth hopefully

Do you have the best settings for a Dreambooth model on SD 1.5? I have rented an RTX 3090 on RunPod since I only have 12 GB VRAM on my PC

I don't have them yet for Kohya, sadly

it is on my research list

but i have for extension

thanks was looking for dreambooth

1 auto installer

2 settings

Is it compulsory to use class images?

No, but it improves the results

i have class images too

Why are there 2 links?

1 settings

1 auto installer

read all links

ah ok thank you

will go through it

Yes, I added the --medvram-sdxl flag and it really helped on my 3060 Ti. The only downside is the long wait for the first image to be generated. Also, xformers helped me, because --opt-sdp-attention gives extremely low generation speed for SDXL.
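For anyone wanting to try the same combination, here is a small sketch that builds the `COMMANDLINE_ARGS` line for `webui-user.bat` from the flags mentioned in this thread. The helper name and its parameters are made up for illustration, and which flags actually help depends on your GPU and driver.

```python
def build_commandline_args(use_xformers: bool = True, medvram_sdxl: bool = True) -> str:
    """Build the COMMANDLINE_ARGS line for webui-user.bat (hypothetical helper)."""
    flags = []
    # Pick one attention optimization: xformers, or the SDP attention flag
    # that was reported as slow for SDXL in this thread.
    flags.append("--xformers" if use_xformers else "--opt-sdp-attention")
    if medvram_sdxl:
        # Applies the medvram optimization only when an SDXL model is loaded.
        flags.append("--medvram-sdxl")
    return "set COMMANDLINE_ARGS=" + " ".join(flags)


line = build_commandline_args()
print(line)  # set COMMANDLINE_ARGS=--xformers --medvram-sdxl
```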

Ah, you have the Ti