Or, if you already have them, hit the down arrow on Models and choose an XL-compatible one.

It was kinda warm in here since it's winter, but yeah. If you're not overclocking and you have a well-ventilated case, I would say don't worry about it.

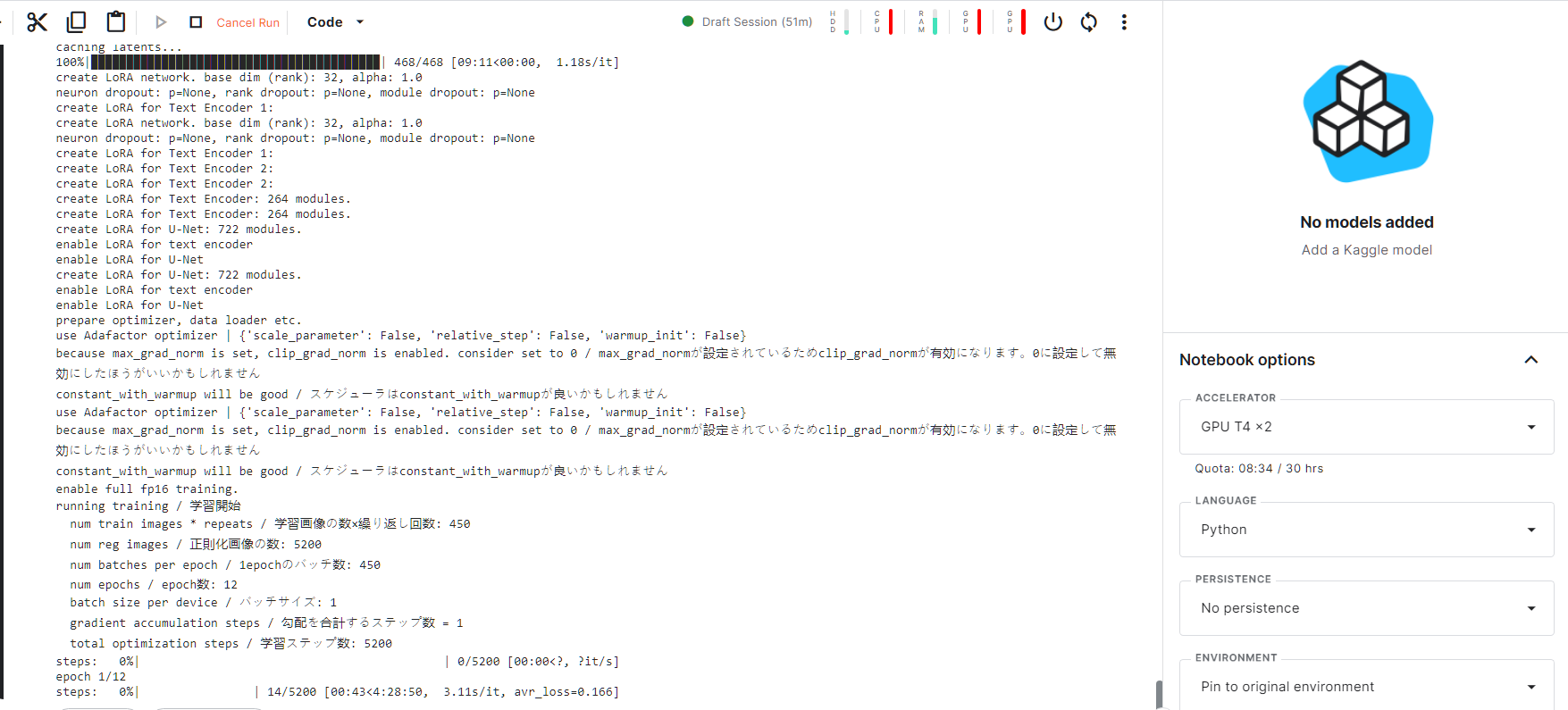

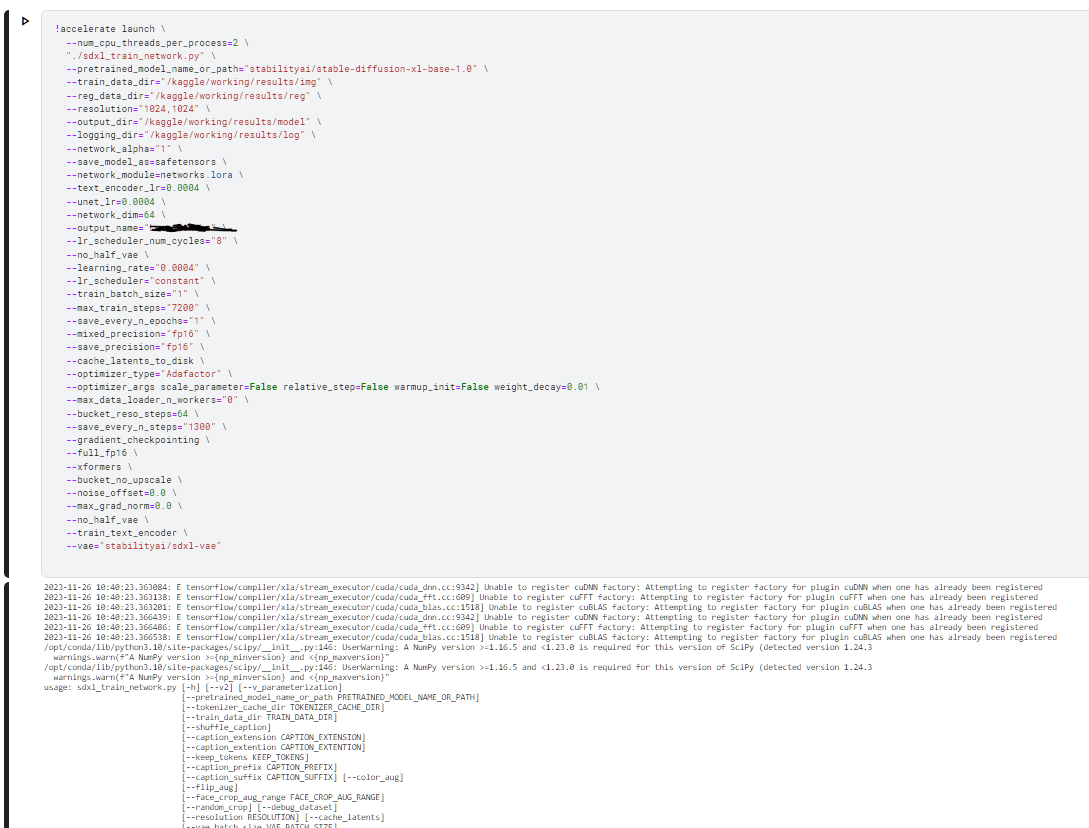

Is there a way to retain all the files/setups? By the time I get to hit the Train button, an hour of GPU time is already lost.
