downloaded from civitai?

what is your resolution 1024x1024?

yes 1024,1024 and the training images are 1280x1536

now it works with the stabilityai/stable-diffusion-xl-base-1.0

1280x1536 will use more vram

resize them to 1024x1024 yourself before start training

because it will downscale only 1 side to 1024
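
A rough sketch of the arithmetic behind this advice, assuming "downscale only 1 side" means the shorter side is scaled to 1024 with the aspect ratio kept (the trainer's actual bucketing logic may differ). The helper names `downscale_shorter_side` and `center_crop_box` are made up for illustration and are not part of any training tool:

```python
def downscale_shorter_side(width, height, target=1024):
    """Scale so the shorter side equals `target`, keeping the aspect ratio."""
    scale = target / min(width, height)
    return round(width * scale), round(height * scale)


def center_crop_box(width, height, crop_w=1024, crop_h=1024):
    """Return (left, top, right, bottom) of a centered crop."""
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


# 1280x1536 training image from the conversation above
w, h = downscale_shorter_side(1280, 1536)
print(w, h)                                   # 1024 1229
print(round(w * h / (1024 * 1024), 2))        # 1.2 -> about 20% more pixels than 1024x1024
print(center_crop_box(w, h))                  # (0, 102, 1024, 1126)
```

Under that assumption the image still ends up taller than 1024 after the automatic downscale, which is why cropping and resizing to 1024x1024 beforehand keeps memory use lower.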

Furkan, do you offer new checkpoint settings? and do you mean if i train checkpoint, but can finally extract lora from it, too?

new checkpoint settings are shared on patreon

and you can extract lora from them

working nice

Do regularisation images work better when training on dreambooth when they have caption files with them?

i never tried them with caption

someone i know tried your dreambooth notebook and got this error. do you know what could be wrong?

you forgot to enable gpu

Furkan where you going for vacation?

Can I come with you Haha

i don't have any vacation haha, not even weekends

check out the newest article: https://medium.com/@furkangozukara/comparison-between-sdxl-full-dreambooth-training-vs-lora-training-vs-lora-extraction-44ada854f1b9

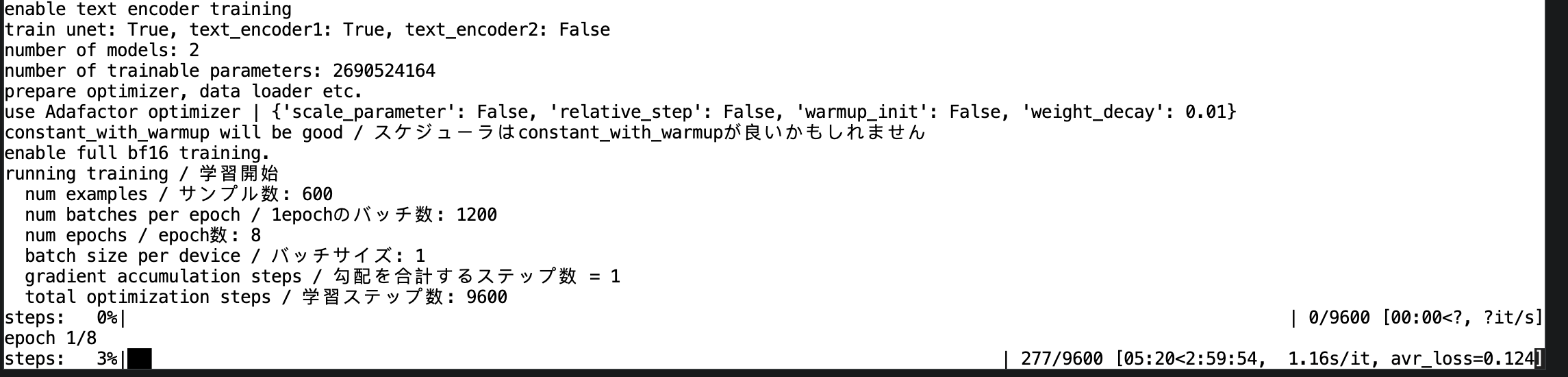

Hello everyone. I have trained myself recently by using Kohya GUI latest version. Used official SDXL 1.0 base version.

he's always working working working

Anyone know why I always get bad results from Adetailer? Does it only work well on small, distant faces? On my images where my face is pretty large (think portrait), Adetailer always makes my face look worse, like it's set to a higher CFG Scale (it's not). I followed the same settings @Dr. Furkan Gözükara used in the SDXL Dreambooth training video. For some reason this has always been my result with Adetailer and I'm not sure what I could be doing wrong

can you show before and after, and your settings?

i am using adetailer all the time

You are in that same position?

AI is hard work, thank you for all these tutorials, I would need 48 hours a day to get everything done.

BTW, do you think teaching SDXL style on a realvisXL model would be better? It seems the custom SDXL models are still flexible with prompts more than the 1.5 were.

yes :D

sadly didnt have chance to test yet

I'll test it now. Same everything only difference is base vs realvis SDXL

The settings already helped me increase the quality by a lot. I just need more steps the next time.

Awesome, keep the work going and don't forget not to burn out. I know it myself

Can someone share or show us what a high-quality or ultra-high-quality dataset for training looks like for SDXL Dreambooth? For example, how many images are enough, and what ratio between close-up face, upper body, and full body shots is needed? If a photographer takes shots of the subjects, what should they take care of, etc.

Hello, new to the patreon, looking at the config files and i notice i can't find the network dim or alpha. Is 128/1 still the default we are using here, or is it 128/128?

Hi @Dr. Furkan Gözükara and everyone. My 10 year old nephew wants a cool illustration of himself for xmas so I want to do a lora training of him. Can I use the images from the download_man_reg_imgs.sh file? Or is there a child reg images dataset somewhere? Lastly, will the class prompt "man" work in this case or does it have to be "child"? Thanks!

guys whats the best XL-based model for landscape?

The checkpoint that I have taught a style with now produces that style even without a trigger word. Is that normal? Or is it overtrained?

I used your crop & resizer tool and made them 896x1152. It's still using 14.9 GB VRAM so I switched to the P100 GPU, it's slow but works just fine.

Is it just me or does that 6s/it for a p100 seem slow?

Idk how fast it should be. For me 4.5h training time for an sdxl dreambooth for free is good enough.

Ah, for free. Then it's fine

I get 1.2s/it on my 4090, that is why I was wondering why it's slower on a p100

Oh wait, p100 isn't that new. Nevermind me.

I was thinking about the A100

With kaggle's double gpu it's around 1.3s/it but it has only 14GB Vram
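
For a quick sanity check of the numbers in this exchange, here is a small sketch that converts between seconds per iteration and total training time. The step count is not stated anywhere above; it is inferred by assuming the whole 4.5 hours ran at 6 s/it, and the comparison assumes the same number of steps on every GPU (which may not hold for a dual-GPU setup):

```python
def steps_from_time(hours, sec_per_it):
    """Infer how many iterations fit in `hours` at a given speed."""
    return round(hours * 3600 / sec_per_it)


def hours_for_steps(steps, sec_per_it):
    """Total training time in hours for `steps` iterations."""
    return steps * sec_per_it / 3600


# Inferred from "6s/it" and "4.5h training time" (an assumption, not a reported value)
steps = steps_from_time(4.5, 6.0)
print(steps)  # 2700

# Speeds quoted in the chat
for name, spi in [("P100", 6.0), ("Kaggle dual GPU", 1.3), ("RTX 4090", 1.2)]:
    print(f"{name}: {hours_for_steps(steps, spi):.2f} h")
```

With those assumptions the same run would take a little under an hour at 1.2 to 1.3 s/it.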

@Dr. Furkan Gözükara have you tried multi gpu lora training with kohya?

ddp_bucket_view and the other parameter dont seem to exist even on dev branch

Whats the best tool for scanning a large dataset folder for duplicate images? (Some duplicates might be different size / res)

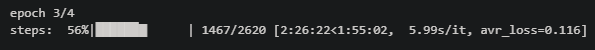

So I'm using all the latest instructions and settings, and I'm running training on a 4090 in Runpod. When I watch the video, Dr Furkan gets a total training time of 50m-2h using a 3090 on Runpod. For some reason I'm getting a training time of ~3hours, despite being on a 4090.

Did something change about the training between the video publication and now, making it more intensive? Or am I doing something wrong?

This is with 15 example images, scaled to 1024x1024, and using the reg set of men at 1024x1024 as well.

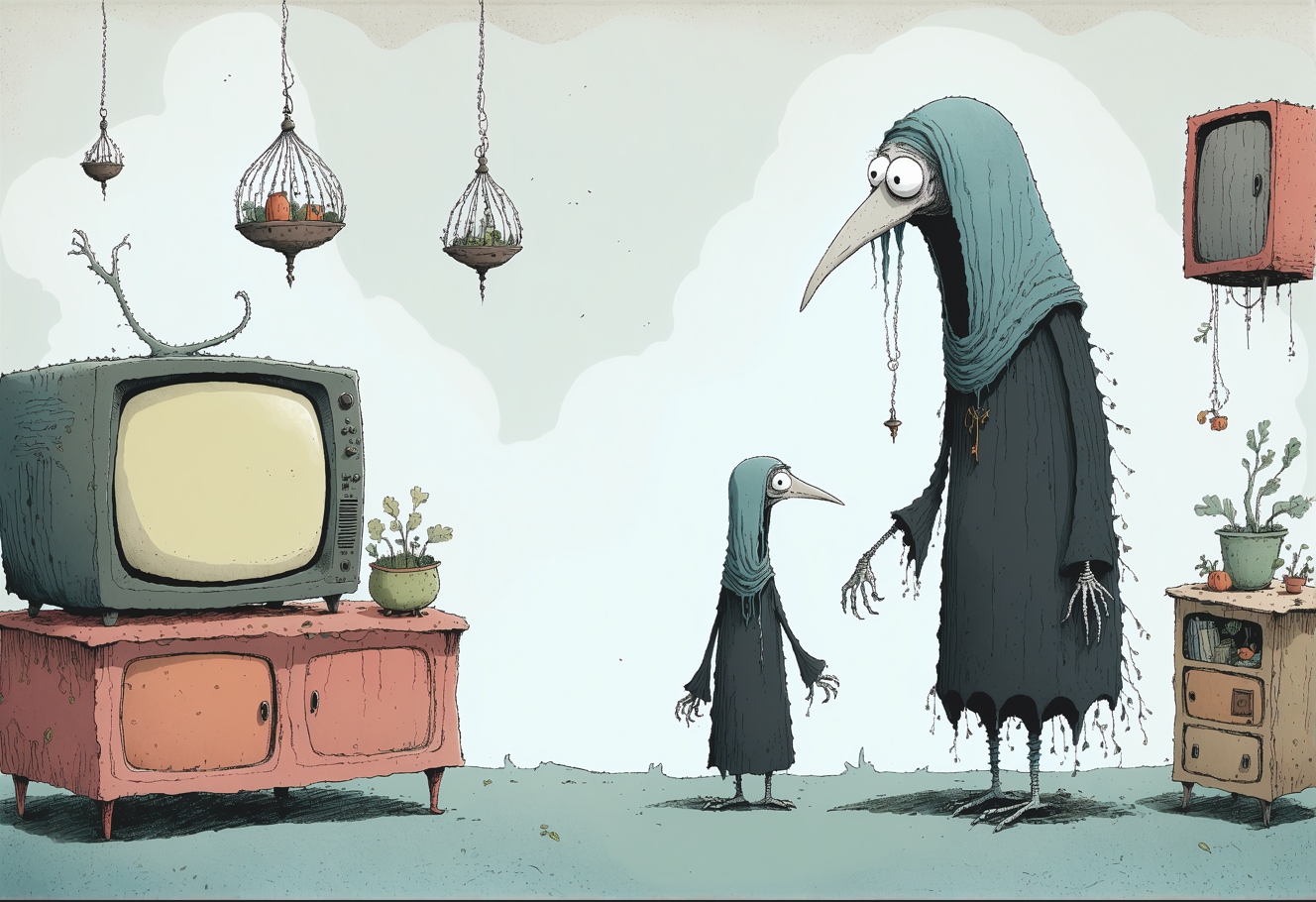

In the vast expanse of the whimsical world of Widdendream, where every creature was as unique as a snowflake in a winter flurry, there stood two friends: little Lyla Longbeak and the towering Tara Tattercloak. Lyla, with her inquisitive eyes and a beak sharp enough to pick the seeds of wisdom from the fruits of knowledge, gazed up in awe at her friend. Tara, draped in a cloak of midnight blue, tattered at the edges from embracing every thorn and rose life offered, towered above like a gentle giant, her eyes kind pools of understanding.

Together, they were a testament to the beauty of difference, a duo that danced in harmony despite their contrasting tunes. The room they shared was filled with oddities and ends, with hanging cages that held not birds but blooming ideas, and a television that was old and wise, flickering with the stories of yore.

"See, Lyla," Tara's voice rustled like the leaves of an ancient tree, "we are like these cages, different in size and shape, yet home to ideas just as bright and beautiful." Lyla nodded, her heart swelling with the warmth of acceptance.

Their friendship was a mosaic, a splendid tapestry woven from threads of myriad textures and hues, teaching all who visited Widdendream that diversity was not just to be tolerated, but celebrated, for it was the very essence of life's enchanting tapestry.

this is typical of my 4090. He may have used xformers which I do not since I want higher quality