I can give you a private consultation if you need one.

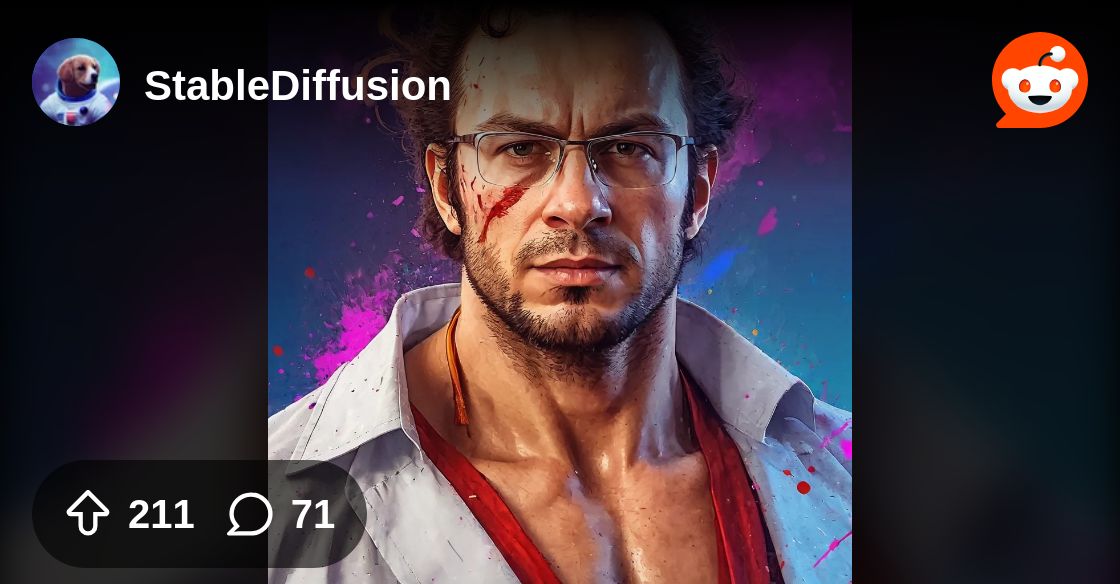

Master Stable Diffusion XL Training on Kaggle for Free!

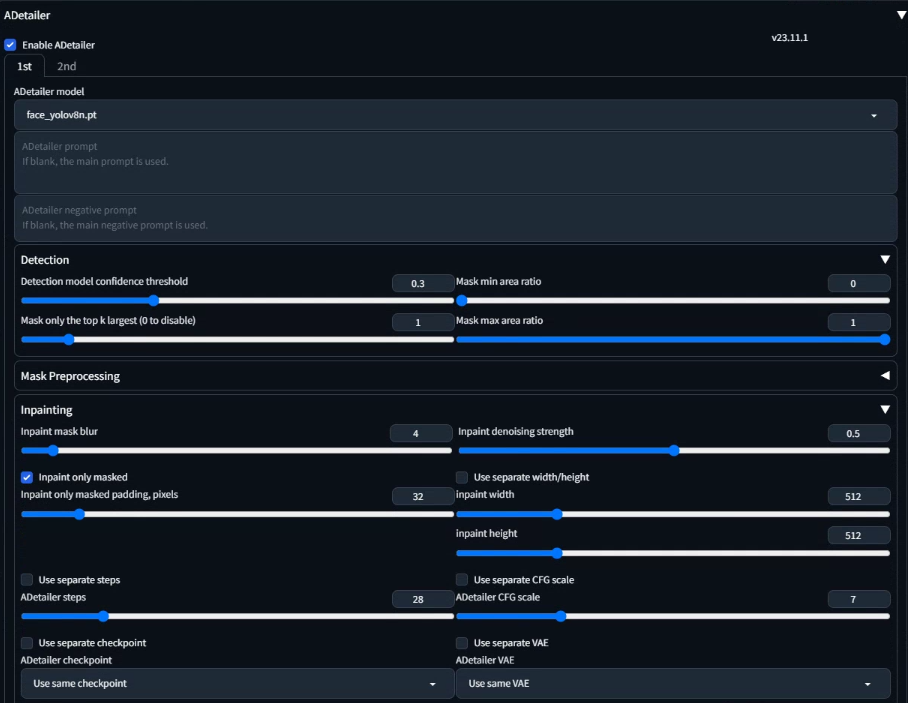

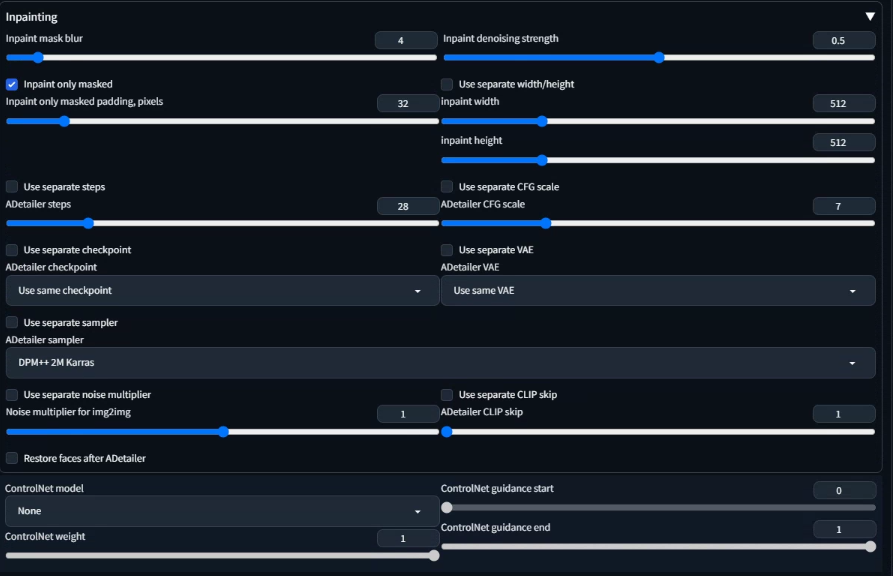

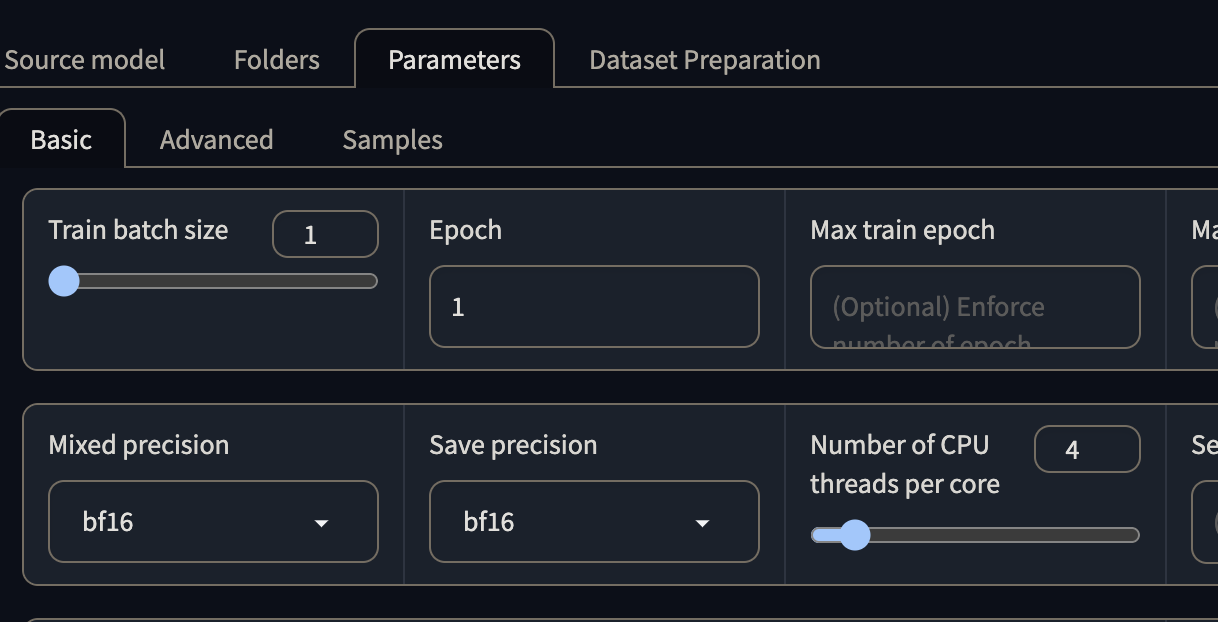

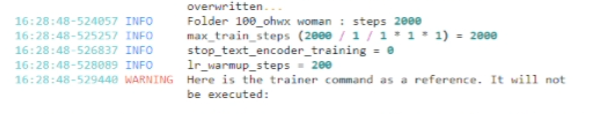

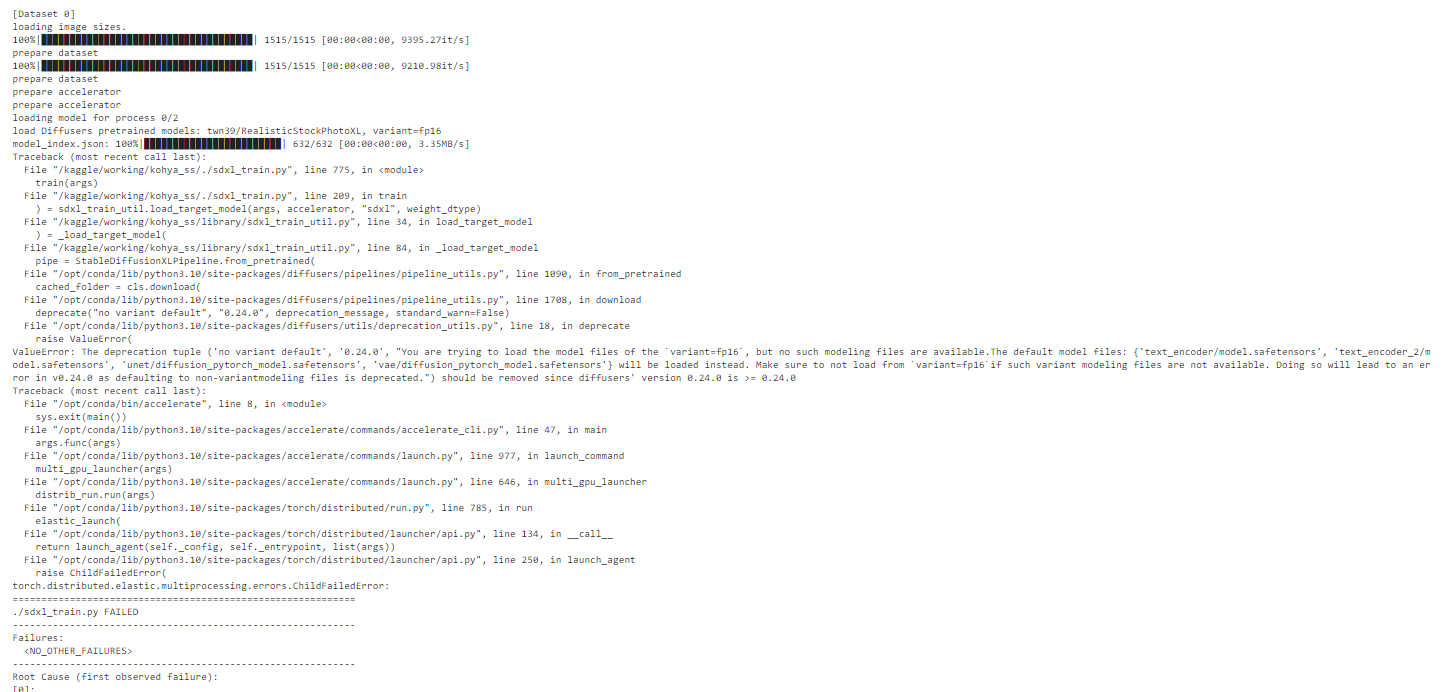

Welcome to this comprehensive tutorial where I'll be guiding you through the exciting world of setting up and training Stable Diffusion XL (SDXL) with Kohya on a free Kaggle account. This video is your one-stop resource for learning everything from initiating a Kaggle session with dual ...

`save_model`. More information at https://huggingface.co/docs/safetensors/torch_shared_tensors
