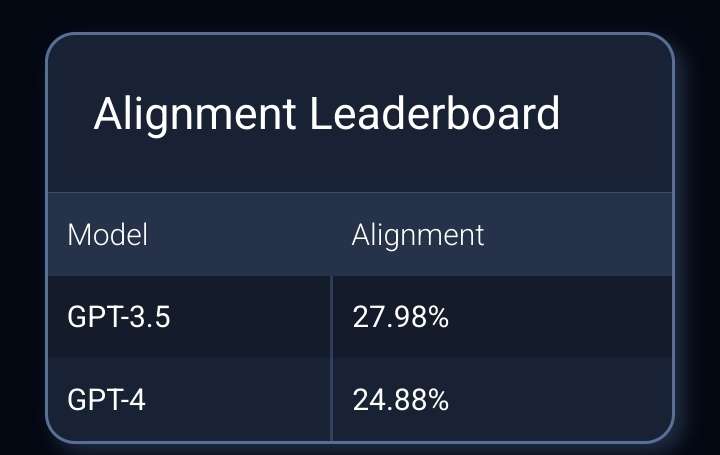

AI Safety Scorecard

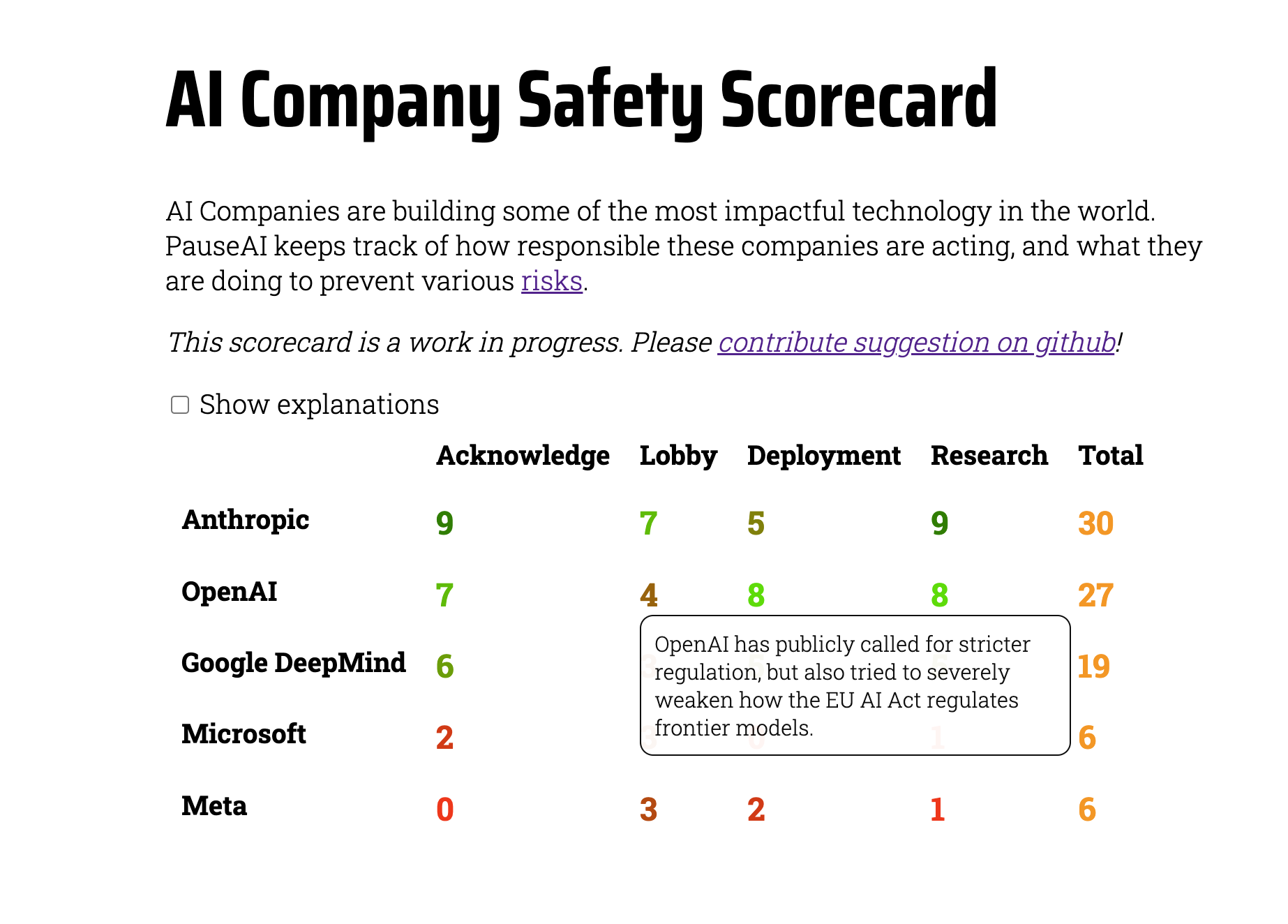

We want AI companies to be rewarded for taking risks seriously and implementing safety measures, and we want to criticize those that do not. Publishing a report card or scorecard could be a good way to do both.


Strategy

- Publish a report. Make it newsworthy. Make sure @Comms Team is involved.

- Consider repeating it regularly (e.g. before each summit, or every 6 months).

- If other orgs are doing similar things, make sure our tone stands out. We can probably be more critical than an OpenPhil-funded project!

Other initiatives

- @Tyler worked on a spreadsheet comparing RSPs: https://docs.google.com/spreadsheets/d/16QRpgMS5qG1pZmXC1MennS6WLwkAtkXjunVBs16QoRQ/edit#gid=0

- FLI is working on something similar

How to help

- Research RSPs (responsible scaling policies) and help compare them. All major AI labs have written them down for the AI Safety Summit. I know @Tyler has already done a lot of work on this!

- Research which labs acknowledge existential risk (x-risk). OpenAI has, but some others have not.

- Design a scorecard / find example designs (see below)

- Design a rating system

Progress

- See here: https://github.com/joepio/pauseai/pull/32

Examples:

https://www.ciwf.org.uk/media/7452331/eggtrack-2022-report.pdf

https://thehumaneleague.org/2023-cage-free-eggspose

https://mercyforanimals.org/count-your-chickens-report/