Unable to turn on pg_stat_statements

Hi

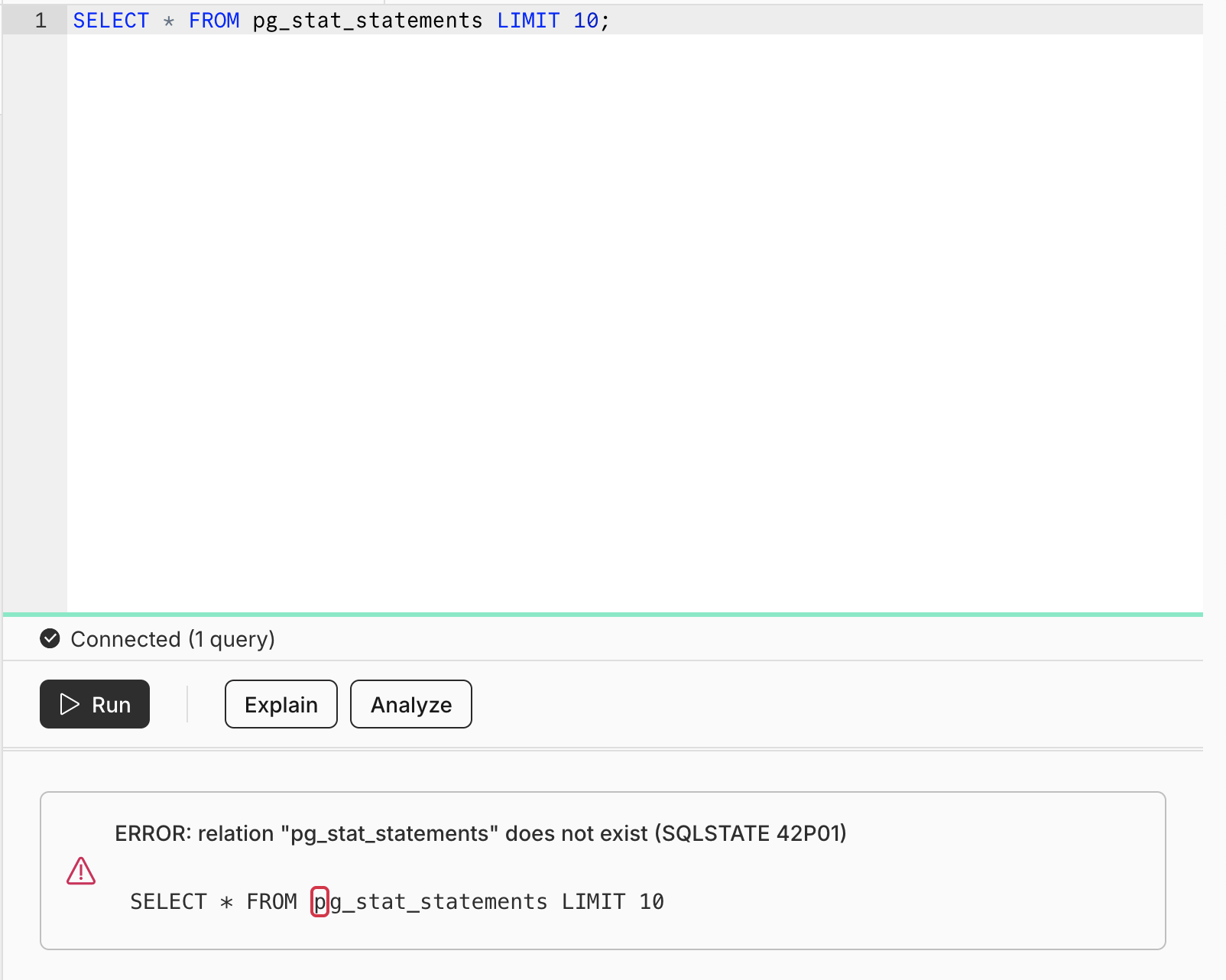

I am following the instructions in the Neon guide here: https://neon.tech/docs/extensions/pg_stat_statements to enable pg_stat_statements. Enabling the extension works without a problem, but if I try any of the queries listed in the instructions I get a "relation does not exist" error.
