Crawl not working on self-hosted with external redis (Upstash)

The Bull dashboard does not show the web scraper queue when it is connected to Upstash Redis. I have not set up anything like IP whitelisting that would block the connection.

I also see no concerning log messages.

The Redis connection also appears to succeed. It may be worth noting that everything works with a local Redis started through Docker Compose.

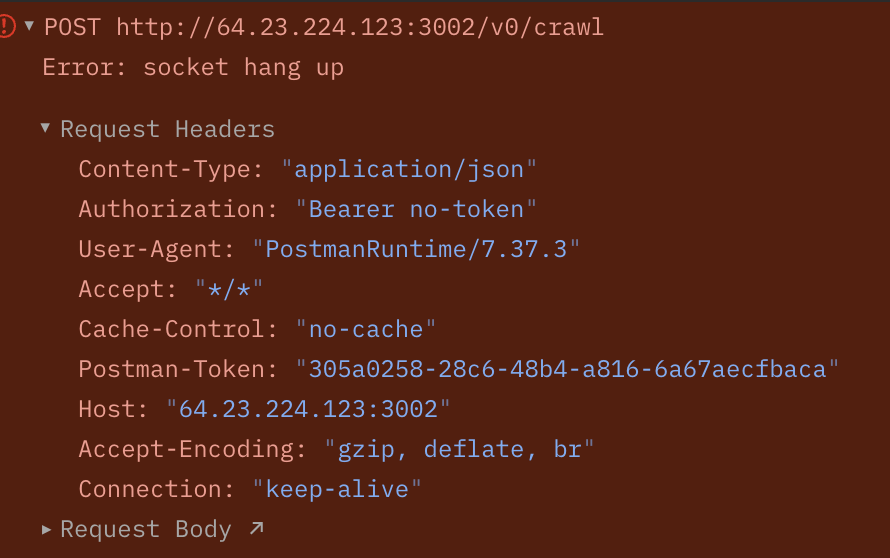

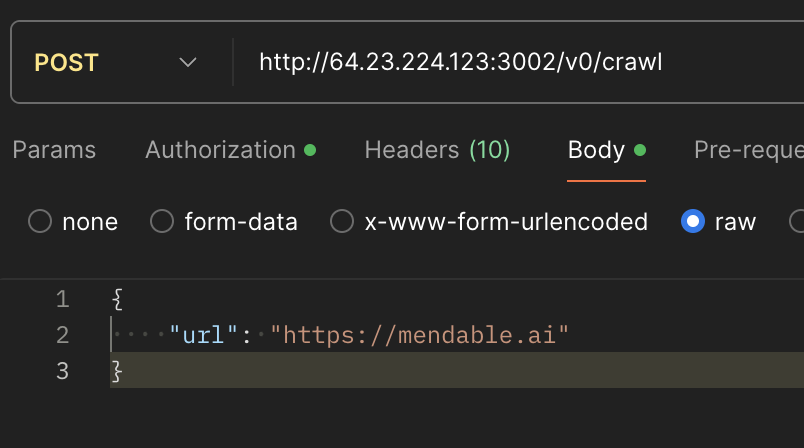

However, whenever I try to start a crawl job, I get a socket hang up error instead of a jobId. Am I missing something here? I attached the IP address of the service in the image, along with the Postman request for the crawl.

Are there any missing steps for connecting to external Redis providers like Upstash?
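
For anyone trying to reproduce this, one thing I can check without opening a socket is what the connection URL actually asks for. Below is a minimal sketch, assuming the URL is passed as a single `redis://` or `rediss://` string (the `rediss://` scheme conventionally means TLS). The helper name `summarizeRedisUrl` and the example host are hypothetical, and I am not certain a scheme mismatch is the cause here.

```typescript
// Hypothetical helper: summarize a Redis connection URL without connecting.
// It only parses the string; it does not verify that the server is reachable.
interface RedisUrlSummary {
  host: string;
  port: number;
  tls: boolean;         // true when the scheme is rediss://
  hasPassword: boolean; // true when the URL embeds a password
}

function summarizeRedisUrl(raw: string): RedisUrlSummary {
  const url = new URL(raw);
  if (url.protocol !== "redis:" && url.protocol !== "rediss:") {
    throw new Error(`Unexpected scheme: ${url.protocol}`);
  }
  return {
    host: url.hostname,
    // Fall back to the conventional Redis port when none is given.
    port: url.port ? Number(url.port) : 6379,
    tls: url.protocol === "rediss:",
    hasPassword: url.password.length > 0,
  };
}

// Example with a placeholder host and password (not real credentials).
console.log(summarizeRedisUrl("rediss://default:secret@example.upstash.io:6379"));
```

If `tls` comes back `false` for a provider endpoint that only accepts TLS connections, that mismatch would be one candidate explanation for a hang, though that is an assumption on my part.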
