https://github.com/ublue-os/ucore-kmods/actions/runs/9814573437/job/27102383611

so, it seems newer CoreOS is either configured or uses a newer rpm-ostree setting which prevents use of dnf

It’s making me wonder if we don’t add the CoreOS testing kernel to akmods in addition to the stable kernel, and just build all kmods there, thus ucore would use those

That's definitely new. I'm completely down to consolidating into akmods since all that should matter is making sure kernel versions match

I think I’ll first prep the PR for ucore to use akmods. It will only work with stable for now obviously, but I want to validate.

Then I’ll add the extra kernel to akmods.

I do like the idea of all the kmods building in one place.

Also we have a massive library of scripts in akmods right now.

Like most of them aren't needed for ucore, but it would simplify things for people who want to make a custom Ucore image

yeah

i mostly want the benefit of not doing the same thing in different places

shared benefit from fixes

And things like the zfs module that needs a little babying for versions

i didn't make much progress on this today, mostly a ucore:testing concern at the moment, but i'll need to fix soon.

i was short on time so focused on helping finish up the luks tpm lock/unlock stuff

working on this... one thing i forgot... while the kmods themselves are fine for ucore to source from akmods... there's a couple other "addons" rpms i'm building in the ucore-kmods

so, i'll need to sort that out, but for testing, i'll copy kmods from akmods, but still copy the addons rpms from ucore-kmods

stepping away for a bit, hope the workflow actually works for the bits which i changed

i do know the containerfile is working

refactor: use akmods repo as source for kmods by bsherman · Pull Re...

This is an effort to move away from distinct builds of kmods for CoreOS based uCore, instead using the shared akmods repo's kmods.

also note... i only made changes for the CoreOS "nvidia-zfs" builds, not the ucore ones

w00t

I was thinking of pulling zfs out of common and having another Containerfile for it.

extra?

or just zfs

Just zfs. Similar to nvidia

i think it would be good to do just zfs and only build for coreos

Basically my thought. I would possibly throw in fsync for shits/giggles but basically coreOS common does a few extra things compared to everyone else

hmm

yeah, whatever, i just don't think we need to build for all kernel permutations

Oh yes. Hence why I said shits/giggles and point to people on why zfs on fedora full speed kernel is dumb

But. Main reason is simply to get away from the extra logic inside of common. Putting in Extra doesn't seem great since extra has so much random stuff in it. A separate containerfile also kinda makes sense since we have to do different package prep for it

do you know this error message?

https://github.com/ublue-os/ucore/actions/runs/9831803039/job/27139706272#step:5:763

Error: invalid reference format

Passed a bad tag

I usually see that when the image:tag you are trying to pull doesn't exist

yeah, seems to be the case

refactor: use akmods repo as source for kmods · ublue-os/ucore@f934...

An OCI base image of Fedora CoreOS with batteries included - refactor: use akmods repo as source for kmods · ublue-os/ucore@f934e0c

Doesn't have "coreos-40"

Same for the next line. It's missing the akmods flavor

ah, thanks!

yeah

i was pretty sure it was something like that, but i'd overlooked that part of log

Yeah I wonder for that retry action if we can have it set -x so we can see the line that it fails on

Instead of just getting stdout/stderr

it's not respecting my include?

https://github.com/ublue-os/ucore/blob/use-akmods/.github/workflows/reusable-build.yml#L105-L109

Includes are really finicky. I find it easier to do excludes. Will take a look at what is the whole matrix

agree, they ARE finicky

i can make it work a different way

https://github.com/ublue-os/ucore/actions/runs/9831803039/job/27139706272#step:1:39

Yeah it's not picking up that coreos

yep

Since it's a one-to-one pair, use an environment variable.

hmm... i want to do this differently

yeah

been a while since i was deep in the weeds with github workflows

Yeah I find the documentation for include to be on the bad side. It doesn't do what I feel like it should.

Meanwhile exclude does exactly what I expect, except work with an array
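For reference, GitHub Actions expands a matrix as the cartesian product of the listed axis values and then drops any combination that matches an `exclude` entry on every key that entry names (a partial match is enough). The minimal Python sketch below models only that `exclude` step, not the more involved `include` merge rules, and supports scalar values only. The `fedora_version`/`kernel_flavor` axes and values are hypothetical examples.

```python
from itertools import product


def expand_matrix(axes, exclude=()):
    """Expand matrix axes into jobs, dropping combinations that match an exclude."""
    keys = list(axes)
    combos = [dict(zip(keys, values)) for values in product(*(axes[k] for k in keys))]

    def is_excluded(combo):
        # A rule only needs to match on the keys it names (a partial match),
        # mirroring GitHub's documented `exclude` behaviour.
        return any(all(combo.get(k) == v for k, v in rule.items()) for rule in exclude)

    return [combo for combo in combos if not is_excluded(combo)]


# Hypothetical axes: drop the Fedora 39 + coreos pairing.
jobs = expand_matrix(
    {"fedora_version": [39, 40], "kernel_flavor": ["main", "coreos"]},
    exclude=[{"fedora_version": 39, "kernel_flavor": "coreos"}],
)
for job in jobs:
    print(job)
```

Because an excluded combination never enters the job list, the result is predictable. `include` entries, by contrast, may either extend existing combinations or add new ones depending on whether they overwrite original values, which is the surprising behaviour discussed above.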

yep

I do like inspecting from the store and confirming everything matches up.

I also like that you split out the skopeo stuff to a block beforehand

yeah, I had been thinking about that improvement for a while... 1) make sure we can pull images 2) make sure the kernels align by checking what we've pulled

i want to fail, not push bad images
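The "check what we've pulled" step can be sketched as a comparison of kernel-version labels taken from `skopeo inspect` JSON output, which carries image labels under its `Labels` field. This is a minimal sketch, not the workflow's actual step. It assumes the base image exposes the kernel in the `ostree.linux` label (mentioned in this thread). Which label the akmods image carries is an assumption, so it is left as a parameter.

```python
import json


def kernel_label(inspect_json: str, label: str = "ostree.linux") -> str:
    """Read a kernel-version label from `skopeo inspect` JSON output."""
    labels = json.loads(inspect_json).get("Labels") or {}
    if label not in labels:
        raise ValueError(f"label {label!r} not found")
    return labels[label]


def verify_kernels_match(base_json: str, akmods_json: str, akmods_label: str = "ostree.linux") -> str:
    """Stop the build if the base image and akmods image disagree on the kernel."""
    base_kernel = kernel_label(base_json)
    # Assumption: which label holds the akmods kernel version is configurable here.
    akmods_kernel = kernel_label(akmods_json, akmods_label)
    if base_kernel != akmods_kernel:
        # Fail here rather than push an image whose kmods target another kernel.
        raise SystemExit(f"kernel mismatch: base={base_kernel} akmods={akmods_kernel}")
    return base_kernel


# Example input shaped like `skopeo inspect` output (only Labels is used).
base = json.dumps({"Labels": {"ostree.linux": "6.8.11-200.fc39.x86_64"}})
print(verify_kernels_match(base, base))
```

Raising `SystemExit` with a message makes the step exit non-zero, so the job fails before any push happens.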

hah! i know why the include failed now, but it's ok, i'd rather do env

I have been doing the skopeo stuff inside the build container step. But I like the idea of breaking up the massive build job to smaller blocks

i was checking for a matrix value which didn't exist 😄

back to this...

the RPMs I build which aren't building yet in akmods are:

- ublue-os-ucore-nvidia

- ublue-os-ucore-addons

they are similar to the ublue versions, but a bit simpler

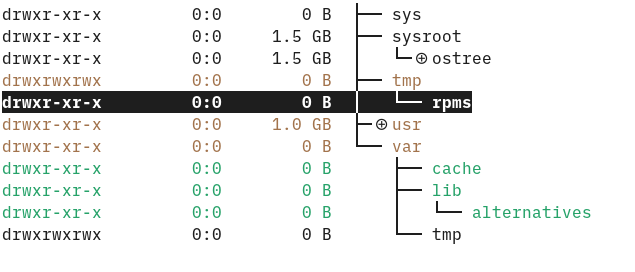

i suppose i could just build them in akmods, only for coreos kernel_flavor, and store in a sub-dir of the /rpms dir so as to not break existing consumers of those images

Do you always copy over zfs?

sadly

Then put in that Containerfile

that's one of the areas where i think a yum repo would help

True

i don't follow you here

The idea of making a containerfile for explicitly zfs

Since realistically only coreOS is going to use that one

ah

well

i'll cross that bridge later 🙂 when i'm looking at the code more closely

But doesn't exist yet

yes! versions verified!

https://github.com/ublue-os/ucore/actions/runs/9832706989/job/27141896949#step:6:33

refactor: use akmods repo as source for kmods · ublue-os/ucore@776c...

An OCI base image of Fedora CoreOS with batteries included - refactor: use akmods repo as source for kmods · ublue-os/ucore@776cffc

sweet!

ok, i'll finish that set of changes for ucore... then work on the akmods side

🤞 https://github.com/ublue-os/ucore/actions/runs/9833009866

looks like a lot of green

yep, just didn't get to the akmods side yet

@M2 what do you think about including the "stable" / "testing" in the coreos tag for akmods?

I think I'm going to need it for testing... even if we don't add it for stable

or do you have another idea?

So we currently do akmods:coreos-fedora_version

Are you thinking about changing to akmods:coreos-stable/testing?

i was thinking

akmods:coreos-stable-40 as an example

Okay, I think that should work.

i THINK the only consumer of coreos is currently bluefin, so maybe we can just change it

Bluefin would then move to akmods:coreos-stable

right,

akmods:coreos-stable-40 and akmods:coreos-stable-39

Yeah we are the only consumer unless someone has made a custom image with it

and ucore would use both the stable and testing -40

if you don't mind, I can include that change in my PR

or we can keep it distinct

Yeah please let us get a draft PR in place

small PRs aren't bad

k

RJ is working on getting fsync in place on bluefin:latest

yep

lets keep it simple and do one thing at a time

https://github.com/ublue-os/akmods/pull/212

feat: build both stable and testing CoreOS streams by bsherman · Pu...

This provides both kernel streams for uCore or other CoreOS images to consume.

A breaking change occurs in the tagging for existing coreos akmods images.

Instead of: akmods:coreos-40 and akmods:cor...

I just noticed @EyeCantCU 's kernel version tagging here https://github.com/ublue-os/fsync/pkgs/container/fsync and i'm now wondering if our tagging for akmods should change 🙂

That caching layer was all M2 🙂

oh 😄 well, you help on stuff a lot too!

We can parse the ostree.linux label for akmods, but having the kernel version would be very nice

*as a tag

yeah, maybe even as an extra tag?

That's what I was thinking the other day

akmods is used enough by many people, i'd be hesitant to completely change it

Yeah, wouldn't be bad to add it as a tag at all either. Can look into it more tomorrow. Going to finalize a PR adding fsync to latest in Bluefin real fast and call it

joy, looks like package skew is breaking akmods builds

no fun when i'm trying to work on it

Package skew is always fun

actually not sure it's package skew

it looks like dnf and dnf5 really have a conflict

i'm working on it

i think we may have a problem

https://github.com/ublue-os/akmods/pull/213

at least, something to fix...

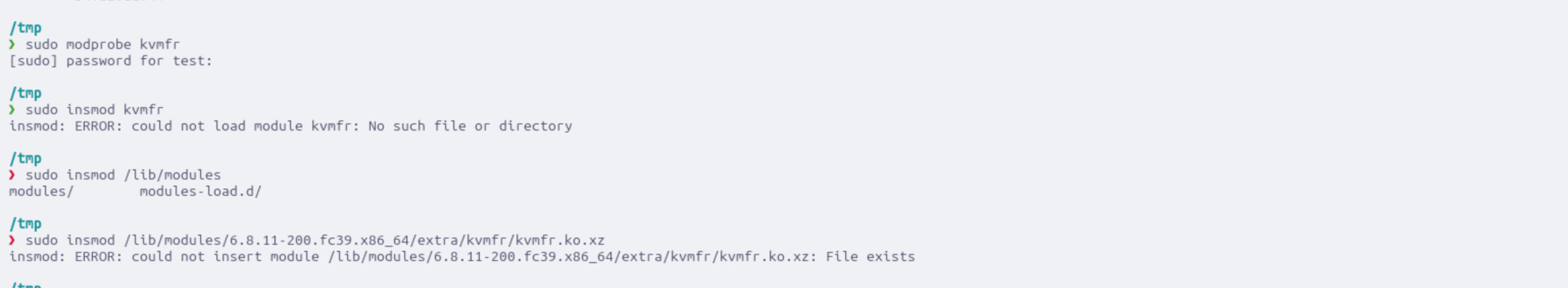

this issue is what led me to trying to use akmods instead of building my own for ucore in the first place:

That warning doesn't sound good

i think something changed in rpm-ostree maybe?

I'll take a look. This is going to make akmods complicated

Yes. Very.

F40 got the latest rpm-ostree but F39 does not (yet?)

Oh, it's just a warning saying those changes will be lost when an update is performed (which is correct): https://github.com/rpm-software-management/dnf/commit/5c050ba2324c5fb95bf0e0501c7925f38f6a09dc

We're safe

But it’s causing akmods to fail. No?

Oh wait. Shit. Yeah, it raises an error

We need to rethink akmods 🤦♂️

Or convince upstream that this should just be a warning

Since it's Python we could try patching out the error message.

Another option is removing bootc which is how it's doing the detection

Nope. They are also doing a check for the ostree dir and failing out if that exists.

What would happen if we built in a fedora container instead of an ostree container? We also could do a downgrade for a little bit on dnf

This would be fine if the kernel in atomic always aligned with it, but unfortunately it doesn't. We'd have to ensure the kernels match

But, I think this is a strong case for having a caching layer for the kernel shipped in Fedora. We'd be able to kill kernel skew entirely

Well we have a template with fsync. We can do the same with the main kernel.

I also want to have the fsync layer not be dependent on akmods.

We still occasionally have Nvidia driver skew and some problematic packages.

Maybe if we do the || true that Bazzite does, it will mitigate that as well

What if we enabled install-to-root and symlinked rpm-ostree to /usr/bin/dnf? All these should be doing is an install

We do install to root.

I think the only reason we were using dnf is for the easy copr stuff.

Let's drop it then and just curl the repos

Actually I think we also need dnf for akmods install

right, i don't have the example in the draft PR, but i tried using cliwrap, and akmods failed in other ways with that...

this is a problem with rpm-ostree/dnf changes which are now blocking akmods builds, i think

i haven't tested yet, but i'm thinking of going to the coreos examples github and using one of those examples to demo to Colin/Timothee about this issue, though, maybe seeing our PR here is enough of a demo?

feels like this is an unforeseen consequence

Yeah, the PR said warn but it's failing out

I don't know if them putting Error there was actually intended

i'm guessing the warning is returning a non-zero exit code?

yeah, in raise CliError(_("Operation aborted."))

Yeah, why are they aborting an operation if it's a warning?

and if it was attempting to do this on an ostree system right now.... it would fail trying to write the rpm lock file

this has to break akmods from rpm-fusion, too

yepp

i think they need to revert that PR. or at least not error out

the commit is linked above, but the PR is https://github.com/rpm-software-management/dnf/pull/2053 (for my own context)

@m2 do you mind filing the issue?

thank you!

ya know, thinking about this... there IS a workaround...

the RPM is successfully built, just not installed

so, we could handle the error, and install the rpm with rpm-ostree to verify

or, ideally, there's an option which tells akmods "don't actually install"

but the latter seems to not be available
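The "handle the error" workaround can be sketched as: run the build, and accept a non-zero exit only if RPMs were still produced, so they can be installed with rpm-ostree afterwards. This is a hypothetical helper, not an existing akmods option. `cmd` stands in for the real akmods invocation and `rpm_dir` for wherever it writes its RPMs. Neither is specified in this thread.

```python
import subprocess
from pathlib import Path


def build_tolerating_install_abort(cmd, rpm_dir):
    """Run a kmod build; accept a non-zero exit only if RPMs were still produced.

    Hypothetical helper: `cmd` and `rpm_dir` are placeholders for the real
    akmods invocation and its output directory.
    """
    result = subprocess.run(cmd, capture_output=True, text=True)
    rpms = sorted(Path(rpm_dir).glob("*.rpm"))
    if result.returncode != 0 and not rpms:
        # No RPMs at all: a genuine build failure, so surface it.
        raise RuntimeError(f"build failed (exit {result.returncode}): {result.stderr.strip()}")
    # Either the build succeeded, or only the dnf install step aborted afterwards;
    # the caller can install these with rpm-ostree to verify them.
    return rpms
```

Pointing `rpm_dir` at a fresh, empty directory matters here. Otherwise, stale RPMs from an earlier run could be mistaken for a successful build.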

other option is to cache main line kernel like fsync and then use a normal fedora container

i don't understand what that means...

I get the idea of querying what kernel is installed (or will be installed) in an image and making sure we install that version in a normal fedora container for build...

but not sure what the "cache like fsync" really does/means

GitHub - ublue-os/fsync: A caching layer for the fsync kernel from ...

A caching layer for the fsync kernel from sentry/kernel-fsync - ublue-os/fsync

We cache the current version of fedora ostree image kernel and use that with akmods

main reason we are using quay.io/fedora-ostree-desktops/base is that it has the same kernel and is the smallest image

so this just caches a known version of a set of kernel RPMs?

yepp

ok, so the idea is we could run a "kernel-cache" repo or image, and each could be tagged with "flavor-version" eg, "fsync-6.8.11-xyz"

i know this is a distinct repo and image for fsync, but that doesn't scale

we could have all our kernel flavors cached

and then, for akmods, use generic fedora container, and install the different kernel flavors from cache?

yeah

it wouldn't address other library/rpm differences beyond kernel, but hopefully would be "close enough"

this is a major PITA

what's the value of the cache layer? vs.... knowing the version and installing from dnf?

repos losing old kernels?

because the cost is copying RPM files into a layer which can't be removed

This would be for akmods where we publish only the scratch image

ah

so... downstreams like bluefin/bazzite still install kernels from regular repos or their upstream silverblue/bazzite main?

Only swap kernels if we need to

we probably could do everything with skopeo and not need to cache main kernel

yeah, come to think of it, even where we need RPMS on downstreams (eg, installing kmods/custom kernels from a cache image layer) we could spin up a nested container, mount the akmods/kernel images and copy RPMs out at runtime rather than use Containerfile COPY ... but... then the Containerfiles wouldn't be standalone capable

or maybe they could be

eh no

i think we just need a yum repo 😂

would be simpler.

But with a scratch container.

yep

well, all said, for the short term, i do think caching kernels in a scratch image and using for akmods builds with standard fedora container is a winner

we could also just downgrade dnf in akmods

I wonder if @EyeCantCU can tell us (or you @M2 sorry, i'm still not paying attention to who is doing what)...

can we rename fsync repo to kernel-cache or something? and make it more generic for all our kernel-caching image needs?

maybe, but i fear dependency hell

GitHub - ublue-os/kernel-cache: A caching layer for the fsync kerne...

A caching layer for the fsync kernel from sentry/kernel-fsync - ublue-os/kernel-cache

i'll rework the workflow to get it to not need akmods as a bootstrap and then think how we can refactor to be more generic

thanks very much

who knew that coreos testing kmod failures were the harbinger of problems we'd soon face with all akmods

kinda miffed since it does say warning

warnings warn.... not abort

i agree

also we do need to split out zfs from common

but that is a later thing

yeah, so order of operations seems to be:

1) fix current state (kernel-cache+generic-fedora?)

2) my PR for coreos-stable/coreos-testing

3) move zfs to distinct akmods-zfs image

4) finish my move of ucore stuff to akmods

sounds about right

or we could just merge the coreos-stable thing if you want, it doesn't break anything worse than it already is and we'd have something merged

it means that bluefin will be broken until I update the containerfile

I think getting akmods first

bluefin is already broken because akmods is already failing to build

lol true

yeah we can do the coreos-stable/testing

ok, so this is ready to merge: https://github.com/ublue-os/akmods/pull/212

@M2 @EyeCantCU @Robert (p5)

per me and M2 discussion... i'll comment also

Hey all. Sorry - can catch up in a bit

feat: build both stable and testing CoreOS streams · ublue-os/akmod...

A caching layer for pre-built Fedora akmod RPMs. Contribute to ublue-os/akmods development by creating an account on GitHub.

oh wow that's a lot to read

yep

but so is this: https://github.com/ublue-os/akmods/actions/runs/9849935505

ublue akmods 40 · ublue-os/akmods@a172797

all of those fails were due to dnf5

so fine with me

i need to work ... day job... but want to help more get akmods happy

probably tonight, or random comments today

feat: Cache all the kernels. No Akmods Bootstrap by m2Giles · Pull ...

i'm confused by the matrix args flipping order?

(flavor, 40) and (39, flavor) ?

you know why?

https://github.com/ublue-os/kernel-cache/pull/5#issuecomment-2218478588

probably because of the order of the include

why not excluding? 🙂

i have no idea

it's literally the same either way

you told me you prefer excludes 😉

i hate include so much

LOL

Surface has a few additional packages required by a kernel install

yah

only 2. One is an F39 package randomly

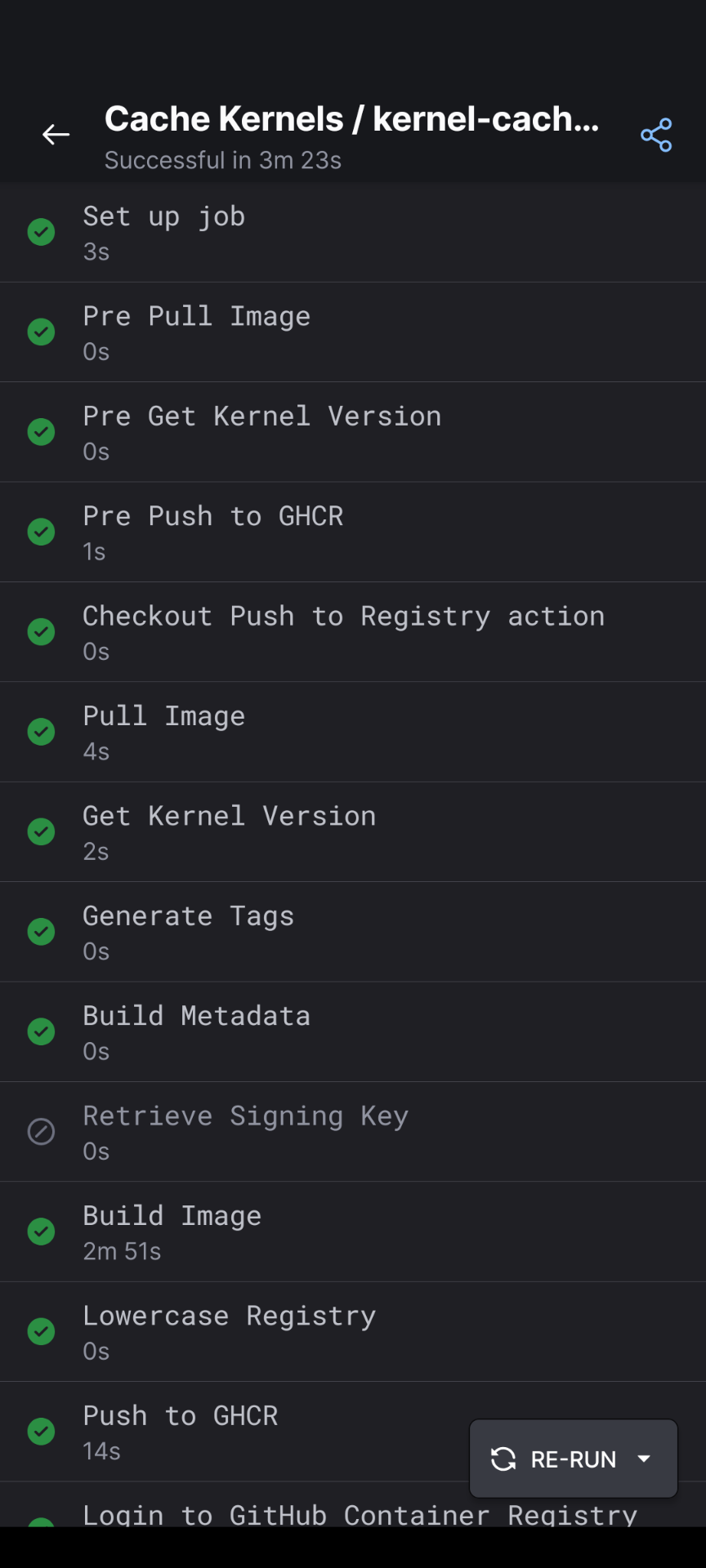

looking much better 🙂 https://github.com/ublue-os/kernel-cache/actions/runs/9863277960

i think tags might be off

agree

the PR number is not present

is it not ${{ github.event_number }}?

mmm

looking closer

that is what we use elsewhere

i wonder if it breaks on reruns?

no... weird, it's not reporting https://github.com/ublue-os/kernel-cache/actions/runs/9862941104/job/27234602246#step:8:5

i wonder if that changed in github expressions and we missed the memo, because i'd swear it used to work

fixed with ${{ github.event.number }}

👁️ 👁️

yeah

i was looking at another one which works and it has event.number but I didn't see the difference between . and _

oh the schedule should now be pushed forward before akmods

also F39 seems to just take forever for repo sync

alright I'm personally happy with the kernel-cache. @EyeCantCU fsync is now called fsync-kernel since the repo is caching everything

thanks for this! looks great! I'm excited to see this in action for all of akmods, and beyond

Long term.

https://linux.die.net/man/1/rpmrebuild

This could possibly be used to sign the kernel in the kernel cache.

https://github.com/ublue-os/hwe/blob/f050686e82c0d0ab4730ccc483a795dd1c85e290/install.sh#L58

Also should probably add these from surface repo

hwe/install.sh at f050686e82c0d0ab4730ccc483a795dd1c85e290 · ublue-...

Fedora variants with support for ASUS devices, Nvidia devices, and Surface laptops - ublue-os/hwe

I have work started on moving akmods to kernel cache.

I just didn’t get a draft PR pushed as it wasn’t quite far enough

Let me know if tags need to change

that'll be an interesting thing, do we leave tags as is, but ADD the kernel version?

Right now it's:

flavor-kernel:latest, short, and full kernel version

the PR in progress

https://github.com/ublue-os/akmods/pull/214

but it does NOT yet actually pull kernel-cache and hasn't converted the kernel-install method in build-prep

feat: use kernel-cache images and fedora for builds by bsherman · P...

This PR uses the new kernel-cache images to install the desired kernel flavor to a generic Fedora container (not ostree based), allowing:

consistent kmod builds per kernel version (avoid repo skew...

sorry, i misunderstood and was thinking something else...

about if we needed to change the tags on akmods images

but I think the tags on kernel are solid.

my only question is if we want to make the image name more specific.

instead of coreos-stable:TAG or fsync:TAG, should they be:

kernel-coreos-stable:TAG and kernel-fsync:TAG?

Right now:

fsync-kernel:TAG

or

kc-etc... or kernel-cache-etc..

i'll go look before i sound even more like an idiot

coreos-stable-kernel:TAG

ok, yeah, they are appropriately unique 🙂 just a question of kernel as a prefix or suffix... but i don't care enough to argue for changes.

the only reason for prefix is sorting

The thing I realized is that only main, coreos-stable, and surface have the fedora version as part of the latest tag

Just thinking of better mechanism for passing tags in a build since you may not have kernel version

hmm

ya know... since the kernel version part of the tag includes the fedora version (eg, fsync-kernel's 6.9.8-201.fsync.fc40.x86_64 includes fc40 and coreos-stable's 6.8.11-200.fc39.x86_64 includes fc39 even though stable is really fc40 based...)

i think those kernel versions have all the meta that's required when paired with the proper (as currently done) image names

probably don't need the 39- 40- prefix
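To show how much metadata the full kernel string already carries, here is a small parser for strings shaped like the two examples above (version-release, optional flavor, fcNN, arch). It is a sketch under that assumption: release strings containing dots, or flavors containing dots, are not handled. As the coreos-stable case shows, `fedora` is the fcNN the kernel was built for, which is not necessarily the image's Fedora release.

```python
import re

# Shape assumed from this thread: version-release[.flavor].fcNN.arch, e.g.
# "6.9.8-201.fsync.fc40.x86_64" or "6.8.11-200.fc39.x86_64".
KERNEL_RE = re.compile(
    r"^(?P<version>\d+\.\d+\.\d+)-(?P<release>\d+)"
    r"\.(?:(?P<flavor>[A-Za-z][\w-]*)\.)?fc(?P<fedora>\d+)\.(?P<arch>\w+)$"
)


def parse_kernel(full: str) -> dict:
    """Split a full kernel version string into its tag-relevant parts."""
    m = KERNEL_RE.match(full)
    if m is None:
        raise ValueError(f"unrecognised kernel version: {full!r}")
    return {
        "major_minor_patch": m["version"],
        "release": m["release"],
        "flavor": m["flavor"],  # None for kernels without a flavor infix
        "fedora": int(m["fedora"]),  # the fcNN the kernel was built for
        "arch": m["arch"],
    }


print(parse_kernel("6.9.8-201.fsync.fc40.x86_64"))
```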

and latest ... let's burn it... seems very inappropriate for this use case

these cache images are most valuable when the consumer DOES know the kernel version, and they find it by inspecting their base image's labels, no?

i think the problem with my statement is something needs to be the source of truth for what is "current" kernel (regardless of flavor)

Yes

how will bluefin (or akmods, for that matter) know which fysync to use, unless asking for "latest" or "39"?

Fsync is only building 40 right now

So fsync only provides an F40 kernel.

Also each package is always tagged with entire kernel version as well which includes the fedora version.

It's just there isn't a simple tag that's like fsync-kernel:40; it's fsync-kernel:latest

For main that caches 39 and 40

It's main-kernel:40-latest

Instead of using latest it probably should be the fedora version is all I'm saying

ok, so I suggest this...

all images get a tag which is the full kernel version, which handles the case where a user KNOWS the kernel they want

eg

fsync-kernel:6.9.8-201.fsync.fc40.x86_64 or coreos-stable-kernel:6.8.11-200.fc39.x86_64

but all images ALSO get a tag which is simply the fedora major version

eg fsync-kernel:40 and coreos-stable-kernel:39 and main-kernel:40

those fedora major version numbers are de facto latest for the fedora release of the respective kernel flavor, which are the tags akmods should be consuming.

and bluefin, et al will look at latest akmods-kernel_flavor to find the specific kernel version they should install (or look at kernel-cache, then use that to find akmods, i'm not sure the order matters)

but i think extra tags like :39-6.8.11-200.fc39.x86_64 and :39-latest are extraneous

So right now the tags are:

for main, coreos-stable, and surface:

for fsync, asus, coreos-testing:

I think we should consolidate to:

I think we are on the same page

fsync-kernel:major_minor_patch doesn't work for coreos since we are building a coreos-stable:39-major_minor_patch even though coreos stable is actually FC40

oh geez

you covered that

sorry, i'm context switching

chore: consolidate tags to fedora-version by m2Giles · Pull Request...

PR is in with change

Back to work

i'm not 100% sure the change is what we expect

echo 'alias_tags=pr-7-6.8.11-200.fc39.x86_64 8e3197b-6.8.11-200.fc39.x86_64 pr-7-39 8e3197b-39'

https://github.com/ublue-os/kernel-cache/actions/runs/9878224005/job/27281675392#step:8:73

seems we are missing major_minor_patch?

I didn't put in the major minor one in pr.

But it's in build tags

I should just make the commit tags be the same as build tags.

i think for this repo, it would be much simpler...

we always have the 3 base tags

fedora_version, fedora_version-major_minor_patch, and full_kernel_name .... but on PR's this all gets prefixed with pr-N-

Agreed

I had sha on there

i don't think it's wrong to have a sha tag too, I generally assume there is one

okay, updated to have major minor patch for commit tags. So PRs will have SHA+PR nomenclature. Build Tags will have the 3 agreed upon tags
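The agreed tag scheme can be sketched as a small function. It produces three base tags (fedora_version, fedora_version-major_minor_patch, and the full kernel name), and on PRs it prefixes each with pr-N-. The optional short-SHA prefix models the commit tags seen in the alias_tags log line above. The function name and parameters are hypothetical. `fedora_version` is passed in explicitly rather than read from the fcNN suffix, because coreos-stable's kernel suffix (fc39) does not match the tag it is published under in this thread.

```python
def cache_tags(fedora_version, full_kernel, pr_number=None, short_sha=None):
    """Build kernel-cache tags: fedora_version, fedora_version-major_minor_patch
    and the full kernel name, prefixed with pr-N- (and optionally a short SHA) on PRs.
    """
    # "6.8.11-200.fc39.x86_64" -> "6.8.11"
    major_minor_patch = full_kernel.split("-", 1)[0]
    base = [str(fedora_version), f"{fedora_version}-{major_minor_patch}", full_kernel]
    if pr_number is None:
        return base
    prefixes = [f"pr-{pr_number}"] + ([short_sha] if short_sha else [])
    return [f"{prefix}-{tag}" for prefix in prefixes for tag in base]


print(cache_tags(40, "6.9.8-201.fsync.fc40.x86_64"))
```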

hah! i had a comment open to capture this idea and walked away, now i probably don't need to push "Comment"

I don't think a sha tag is particularly necessary since we will have a digest that we can pin to if needed.

If we ever push PRs having the sha tag there would make the most sense.

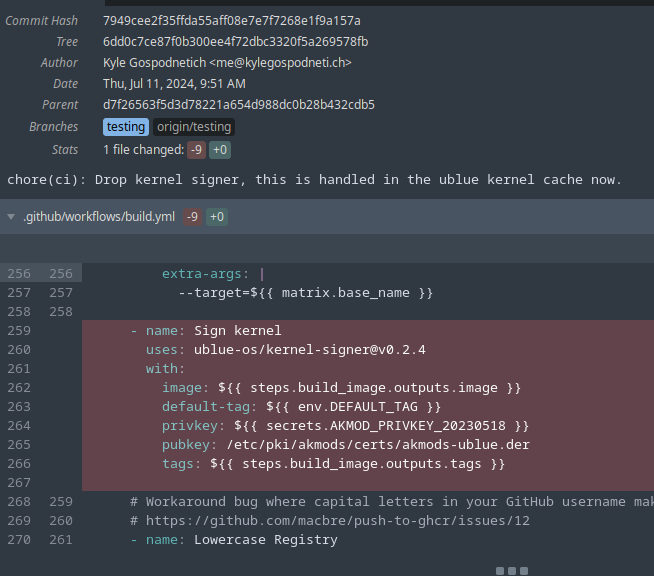

Also I would like to look into signing the kernels inside the RPMs so we don't have to do the kernel signer step in downstream images.

agree on sha stuff

re: signing kernels... that wouldn't mean building initramfs... does initramfs need to be signed?

no

initramfs is not signed

i was thinking not... just confirming

the file inside the rpm at /usr/lib/modules/*/vmlinuz is what needs to be signed

by signing, our key, the same key used for akmods, would be all that's required for secure boot? even if a user nuked their Microsoft keys, but imported ours as MOK it still boots, right? or does something else in the chain need signing?

we would sign with our MOK.

seems like something else is needed

Microsoft keys are used by shim.

well, all said, i think https://github.com/ublue-os/kernel-cache/pull/7 is good, just needs other approval

Basically,

https://github.com/EyeCantCU/kernel-signer/blob/main/sign-kernel.sh

we are using this to sign the kernel but it's doing the signature after vmlinuz is in the correct spot.

oh did you put it up to 2 approvals? Probably makes sense if we are moving our kernel install method to this for akmods and images

i did 🙂

yeah, this is definitely going to be one of those no stars, infrastructure repos

its the layer before akmods

yep

if there are src rpms I think this would be download the src.rpm and do the rpmbuild action?

yeah, i think so... if the build process automatically picks up the signing key like when building the zfs kmod that should be pretty easy.

fsync doesn't do source RPMs so would need to work with the regular rpm

the vmlinuz lives in kernel-core

Will need to look into rpmrebuild maybe

@Kyle Gospo @EyeCantCU any familiarity with rpmrebuild?

Also fishing for a second ack on the PR

I used it like once last year and I have no idea how to use it now 😆

Okay. The idea is to extract the files out of kernel-core and kernel-virt-uki and sign vmlinuz and vmlinuz-uki.efi with our MOK and then rebuild the RPM with rpmrebuild.

Losing the GPG key signature shouldn't matter since the container will be signed by our cosign key

Oh my gosh, that would be amazing and kill an entire layer we add to the container

I'm going to have to relearn

Additionally, since these are now cached in a container, we could use podman inside the build to pull out the RPMs and not use a COPY directive, saving the size of the RPMs in the COPY layer.

Sounds solid to me! The smaller we can make things, the better

So instead of doing

We can do a podman pull inside of a run directive

And do

Yeah kinda sucky maybe make a bash function for it but no wasted space in COPY layer

So we match size of using a repo but get control over our kernel version and akmods.

And if the kernels are signed... No kernel signer action needed downstream and custom images can kernel swap

I was about to ask if we could use podman cp but remembered that requires it to be running

Hmmm I wonder if we could do that.

We would need something like sleep to run.

If we used BusyBox/alpine/Wolfi instead of scratch we could use sleep and get the easier podman cp command

We also could have a tiny sleep like statically compiled binary image.

I can use rpmdev-extract to extract files. Move them into place.

Then use rpmrebuild to rebuild the package using the files in place?

okay need to figure out how to do this with batch mode:

but install kernel-core

sign /lib/modules/*/vmlinuz

rpmrebuild kernel-core

and you will have an rpm in /root/rpmbuild/RPMS that is signed
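The three manual steps (install kernel-core, sign vmlinuz, rpmrebuild kernel-core) can be written down as a command plan. The minimal Python sketch below only builds the command lists and does not run them. It assumes sbsign (from sbsigntools) as the signing tool and uses placeholder key/cert paths for the MOK pair. It covers kernel-core only, not the kernel-virt-uki image mentioned above. `rpmrebuild --batch` skips rpmrebuild's interactive questions.

```python
import shlex


def signing_plan(kernel_version, key, cert, modules_root="/usr/lib/modules"):
    """Return the commands for: install kernel-core, sign vmlinuz, rebuild the RPM.

    Sketch only: sbsign is an assumed signing tool; key and cert are
    placeholder paths for the MOK key pair.
    """
    nevra = f"kernel-core-{kernel_version}"
    vmlinuz = f"{modules_root}/{kernel_version}/vmlinuz"
    return [
        ["dnf", "install", "-y", nevra],
        # Sign in place so the rebuilt package picks up the signed file.
        ["sbsign", "--key", key, "--cert", cert, "--output", vmlinuz, vmlinuz],
        # Batch mode: rebuild the installed package without prompting.
        ["rpmrebuild", "--batch", nevra],
    ]


for cmd in signing_plan("6.9.8-201.fsync.fc40.x86_64", "/tmp/MOK.key", "/tmp/MOK.crt"):
    print(shlex.join(cmd))
```

Pinning the exact kernel version in the package spec avoids ambiguity if more than one kernel-core ends up installed in the build container.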

I'm back at the desk and going to pick up work on my PR for akmods to use kernel-cache

okay

you are a machine

will go the route of how akmods gets the keys. I believe we have a test key in akmods

yes, the test key is in repo and the real key overrides it at runtime in Github Actions

reminder @Kyle Gospo @EyeCantCU

I'm still needing an extra approval on this one:

https://github.com/ublue-os/akmods/pull/212

thanks y'all

this is looking pretty close... think i have a detail to cleanup around ZFS

and... need some more review

https://github.com/ublue-os/akmods/pull/214

@M2 looking at the only 2 failures with this PR... they are coreos common builds with ZFS... both failed during the last kmod build, ZFS...

however, those builds succeed locally if i use the same build-args for Containerfile.common

OH... it's the tar bug on older podman in ubuntu-22.04

I thought for some reason I could move back to ubuntu-latest builders...

will swap that back and test again

Yeah we need 24.04 builders for the podman tar bug

24.04 is still in beta I think

Again I think we should probably move it to its own containerfile.

Since it has its own prep we could do it as a second step instead of as part of the build matrix to specify the 24.04 builder

i do agree, i'm just trying to keep changes scoped

although, at this point I'd be willing in this PR, i just wanted it "working" before adding more changes

sounds good. Putting 24.04 on the builder should make akmods green

uh what? https://github.com/ublue-os/akmods/actions/runs/9893048873/job/27327238596#step:7:29

Error during unshare(...): Operation not permitted

uhm what?

yeah

did they just turn on app armor or something?

apparmor should be disabled by default on Ubuntu · Issue #10015 · a...

Description apparmor.service being enabled by default in the images causes various issues (e.g. https://gitlab.com/apparmor/apparmor/-/issues/402). Given these are ephemeral build VMs where users h...

checking

AAAAAAAAAAAAAHHHHHHHHHHHHHHHHHHHHHH

this is maddening

https://github.com/ublue-os/akmods/actions/runs/9893238618/job/27327861506#step:6:18

are we no longer root?

beta builders gonna beta?

if we are root.... we don't need an interactive login

i added sudo and it disabled apparmor, but still fails on that unshare

that seems to indicate we are an unprivileged user now

i agree

https://docs.github.com/en/actions/using-github-hosted-runners/about-github-hosted-runners/about-github-hosted-runners#administrative-privileges

The Linux and macOS virtual machines both run using passwordless sudo. When you need to execute commands or install tools that require more privileges than the current user, you can use sudo without needing to provide a password.

Is this actually new?

i have no idea

I've assumed we've been root in the build environment

do we just use skopeo against the registry?

but this is really bizarre

oh, you think maybe using skopeo against local storage is the problem on 24.04?

yeah

i have the ucore PR for using akmods sourced kmods... i'll test there since it uses same strategy

i have the kernel-cache running on latest. Will also try 24.04. But the pre PR version was using 24.04

yeah

feels so bizarre

ok, so this "verify version" works with the skopeo inspect on 24.04 https://github.com/ublue-os/ucore/actions/runs/9893501157/job/27328777885#step:6:28

and this fails but i think it should be about the same: https://github.com/ublue-os/akmods/actions/runs/9893048856/job/27327671867#step:7:13

so what's different?

set -eo pipefail

but that should be a shell exit not an unshare not permitted

and shell:bash

i agree

it's definitely the skopeo command

that's so frustrating

the unprivileged user can't access its own container storage?

can I get an approval from @Kyle Gospo @EyeCantCU or @Robert (p5) ? 🙂

https://github.com/ublue-os/kernel-cache/pull/10

GitHub

Correct tag name by m2Giles · Pull Request #11 · ublue-os/kernel-ca...

Not sure why that didn't work.

Going to just remove that

GitHub

Cache Kernels · ublue-os/kernel-cache@7611571

any objections?

remove the pr blocking thing?

yeah that bash check should be redundant

true,

or we can use ${{ github.pull_request }} there instead of trying to match on tags containing "pr"

well, thinking about that

we only have a "pr tag" if github.event_name is pull_request...

and we only retrieve the key if event_name is pull_request

so if we use pull_request in bash, it's doubly redundant, no?

yeah

we're using the github action

if

oh.... it's because the shell is sh and not bash

cool!

and I can get rid of that && in the github if statement

GitHub

chore(ci): change signing check logic by m2Giles · Pull Request #13...

What sucks is that this is code I don't want to run during a PR.... so the checks are happening as we merge

i'm not 100% sure i'm with you...

are you lamenting the more general problem we have where certain parts of our workflow don't get validated during PR runs and only at merge time?

yeah. We have a lot of things where we don't run if they are PR. Like the push actions and this one here. So really the merge check is the actual validation for the workflow.

It's not so much of an issue for things inside the build image. But I don't like the noisy commits when it's literally debugging the workflow

not for today, but that's one of the things i wish to improve when we reduce privileges on our default GIT_TOKEN and are able to push to an alternative registry/image_name even during PRs

probably the correct answer to this lamentation is to do this in a repo under my namespace so I can test there

lets take a look at the akmods changes.

feeling dense...

systemctl status apparmor || exit 0

is that what I want?

yeah

well actually you could use || true

yes! that's what i wanted was || true, thank you
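A minimal sketch of why the status check needs `|| true` in a step that runs with `set -e`. The `unit_status` function is a hypothetical stand-in for `systemctl status apparmor`, which exits non-zero (3 for an inactive/dead unit) and would otherwise abort the step.

```shell
#!/usr/bin/env sh
set -e

# Hypothetical stand-in for `systemctl status apparmor`; systemctl status
# exits with 3 when the unit is inactive/dead.
unit_status() {
    echo "apparmor.service: inactive (dead)"
    return 3
}

# Under `set -e` a bare `unit_status` would stop the script here.
# `|| true` discards the failure and the script continues;
# `|| exit 0` would instead end the script early with success.
unit_status || true
echo "continuing after the status check"
```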

that seemed to be the ticket

i wasn't anticipating a systemctl status check to fail my build 🙂

well the status is dead.....

yeah, i get why, just wasn't thinking ahead on it

one thing i REALLY like about this approach, building akmods on fedora image with the kernel_cache...

fedora container image doesn't have a kernel, so there's no confusing "dnf swap" or "rpm-ostree replace" stuff... simply install the cached kernel RPMs

it's all a bit faster

We may want to consider setting the install only 1 kernel parameter for dnf, but that shouldn't matter since we start from zero

right

I'm going to ask #💾ublue-dev to take a look too

https://github.com/ublue-os/akmods/pull/214

GitHub

feat: use kernel-cache images and fedora for builds by bsherman · P...

This PR uses the new kernel-cache images to install the desired kernel flavor to a generic Fedora container (not ostree based), allowing:

consistent kmod builds per kernel version (avoid repo skew...

okay, using kernel-cache for akmods looks great.

Another thought is that we are going back to dnf with this. So this should look less crazy and could be copied over to a centos style image in the future if needed

yep

cache-kernels is green!

and the resulting image labels look good!

oh! i found an issue with my akmods PR

ARG KERNEL_IMAGE="${KERNEL_IMAGE:-${KERNEL_FLAVOR}-kernel}" in Containerfile.common

that's required for builds to work if the user passes KERNEL_FLAVOR but not KERNEL_IMAGE

and i didn't do it in the nvidia or extra Containerfiles

it actually makes me think this is bad

easy to mess up

Yeah, that looks like a fix to add. KERNEL_FLAVOR should be what's being passed in. And I believe you can have it defined on the layer above or do another nested one

ARG KERNEL_IMAGE="${KERNEL_IMAGE:-${KERNEL_FLAVOR:-main}-kernel}"

yeah, that works, i was trying to avoid assuming ${KERNEL_FLAVOR}-kernel, but if it's a default which can be overridden that's fine
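The nested default can be checked in plain shell, which uses the same `${var:-default}` syntax (assumption: the container build tool expands the nested form the same way, as reported working above). `resolve_kernel_image` is a hypothetical helper for illustration only.

```shell
#!/usr/bin/env sh
# Hypothetical helper mirroring:
#   ARG KERNEL_IMAGE="${KERNEL_IMAGE:-${KERNEL_FLAVOR:-main}-kernel}"
# $1 = KERNEL_FLAVOR, $2 = KERNEL_IMAGE (either may be empty)
resolve_kernel_image() {
    KERNEL_FLAVOR=$1
    KERNEL_IMAGE=$2
    # An explicit KERNEL_IMAGE wins; otherwise derive it from the flavor,
    # falling back to "main" when no flavor is given either.
    echo "${KERNEL_IMAGE:-${KERNEL_FLAVOR:-main}-kernel}"
}

resolve_kernel_image "" ""                 # main-kernel
resolve_kernel_image "fsync" ""            # fsync-kernel
resolve_kernel_image "fsync" "my-kernel"   # my-kernel
```

So the workflow only needs to pass KERNEL_FLAVOR, while a local build can still override KERNEL_IMAGE.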

i'll clean that up quick,

Should kernel image even be a passed in build-arg?

if kernel_flavor is being passed in

i feel like it has to be possible to override, otherwise every image ever used, even for local dev, would be forced into the name $KERNEL_FLAVOR-kernel

it's an edge case

oh you're right

this will be fine, and i'll use it correctly by only passing KERNEL_FLAVOR from workflow

hmm... or...

yeah. And then local can override

we can make the assumption here:

ARG KERNEL_BASE="ghcr.io/${KERNEL_ORG}/${KERNEL_FLAVOR}-kernel:${FEDORA_MAJOR_VERSION}"

and if someone really wants to not use defaults they can set both KERNEL_FLAVOR and a full name for KERNEL_BASE

blah

feels like overthinking... what we already said will work fine

weee

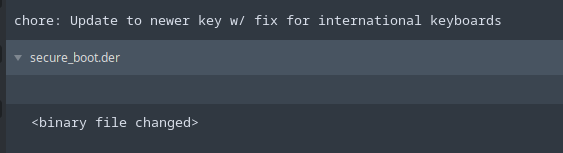

oh, can I get the new pub key for this so I can add it to the repos?

it's in kernel-cache

tx

i assume this one: https://github.com/ublue-os/kernel-cache/blob/main/certs/public_key_2.der

GitHub

kernel-cache/certs/public_key_2.der at main · ublue-os/kernel-cache

otherway around

_2 is old and "" is new?

boom

@Kyle Gospo

Hey guys

How would somebody resolve the "dnf" vs "dnf5" conflicts normally, without implementing this whole kernel-cache thing?

Is there an "easy fix"?

I didn't find one.

I had this branch going: https://github.com/ublue-os/akmods/pull/213

i found that the coreos-devel pool was adding some complications

@M2 where is KERNEL_VERSION: 6.9.7-201.fsync.fc40.x86_64 defined?

I need 6.9.8 real bad

It's now called:

fsync-kernel:40 https://github.com/ublue-os/kernel-cache/pkgs/container/fsync-kernel/242493473?tag=40

yeah, i think you are still looking at

fsync:40 not fsync-kernel:40

FROM ghcr.io/ublue-os/fsync:latest AS fsync

FROM ghcr.io/ublue-os/fsync-kernel:40 AS fsync

AH

thank you

Sorry. We added the -kernel because of all of the other kernels

needs to match the fedora release version,

latest isn't being published

nah all good

secure_boot_key_url: 'https://github.com/ublue-os/akmods/raw/main/certs/public_key.der'

was this key updated?

actually it's goofy that this points to akmods anyway

gonna redirect this to my own repo

No

Only place new key is hung right now is in kernel-cache

I also want to clean up the old fsync and things tagged with latest in kernel-cache.

Okay to unpublish?

give it a couple days

need to get this on bazzite main

and I'm testing other things

Okay

GitHub

chore: Update kernel source · ublue-os/bazzite@dcf9b05

Know what I'm missing?

I assume the problem is here

Akmods is a day behind

ahh

ok sorry

I'll rebuild that

trying this change as well

definitely cleaner if it works

If you look at bsherman's PR that will base akmods on kernel-cache

This is the issue we're trying to eliminate

Also if you don't mind shipping devel packages you can just install everything in that directory

Yay! 🙂 my PR got merged

Hopefully no more kernel skew and just now package skew!

Now to go implement installing from cache for main / hwe / bluefin

yeah, i think that's the priority before double sign, right?

Yeah. I think so

will prevent build fails

No one should have only the new key yet.

@M2 minor issue, i think we added extra double quotes to the kernel_cache ostree.linux labels

we need to fix it

i can PR, but that's not correct

Yeah. Seeing that now.

Makes sense on what RJ was saying about using tr

You can use `| tr -d '"'` to strip it for now in ucore

i'm not working around with tr 🙂 we should fix

Agreed
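For reference, the stopgap being declined here: `tr -d` deletes every listed character, so it strips the stray double quotes from a label value. The label value below is a hypothetical example of the quoted form; the thread prefers fixing the label at its source in the kernel-cache workflow instead.

```shell
#!/usr/bin/env sh
# Hypothetical label value with the literal quotes baked in.
label='"6.9.8-201.fsync.fc40.x86_64"'

# tr -d '"' removes every double-quote character from the stream.
kernel_version=$(printf '%s' "$label" | tr -d '"')
echo "$kernel_version"   # 6.9.8-201.fsync.fc40.x86_64
```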

Wow.... Stupid yaml strings are being strings getting me

this should be all it needs

working with PR: https://github.com/ublue-os/kernel-cache/actions/runs/9897080735/job/27340751854?pr=14#step:9:64

previous not correct: https://github.com/ublue-os/kernel-cache/actions/runs/9895211309/job/27334585507#step:9:64

yes, yaml strings are PITA

approval if you can please @Kyle Gospo ... we'll have to kick off another akmods build after this merges and builds, because akmods inherits the ostree.linux field from kernel-cache

merged and rebuilding kernel-cache

kernel-cache rebuilt

now rebuilding akmods 39 and 40

Awesome

That just leaves dual signing, yes?

For akmods.

Main/hwe/bluefin still need to switch over to kernel-cache

akmods all rebuilt

Right so our pending tasks in rough priority order:

1. convert main to use kernel-cache (always reinstall kernel)

2. convert hwe to use kernel-cache (always reinstall kernel for asus/surface, etc)

3. convert bluefin to use kernel-cache (always reinstall kernel)

4. add dual-signing to akmods

5. split zfs into distinct akmods build (Containerfile.zfs) and also move over residual zfs bits from ucore-kmods

6. add ucore specific addons to akmods to be built for coreos images

@M2 dual signing not working

breaks existing users

What's broken?

Secureboot or the kernel?

secure boot

it fails to load the kernel w/ the old key

Can you do a secureboot off and dump the certificates?

working on it, secure boot off broke my luks tpm

in all seriousness, i'm happy to help with the task list above and secureboot testing, but i need to unplug on ublue for several hours for "day job"

Luks tpm needs secureboot on. We bind to secureboot state

send me the command you want run

Just need to know what failed for the dual signing.

booting now

Once in....

mokutil --list-enrolled

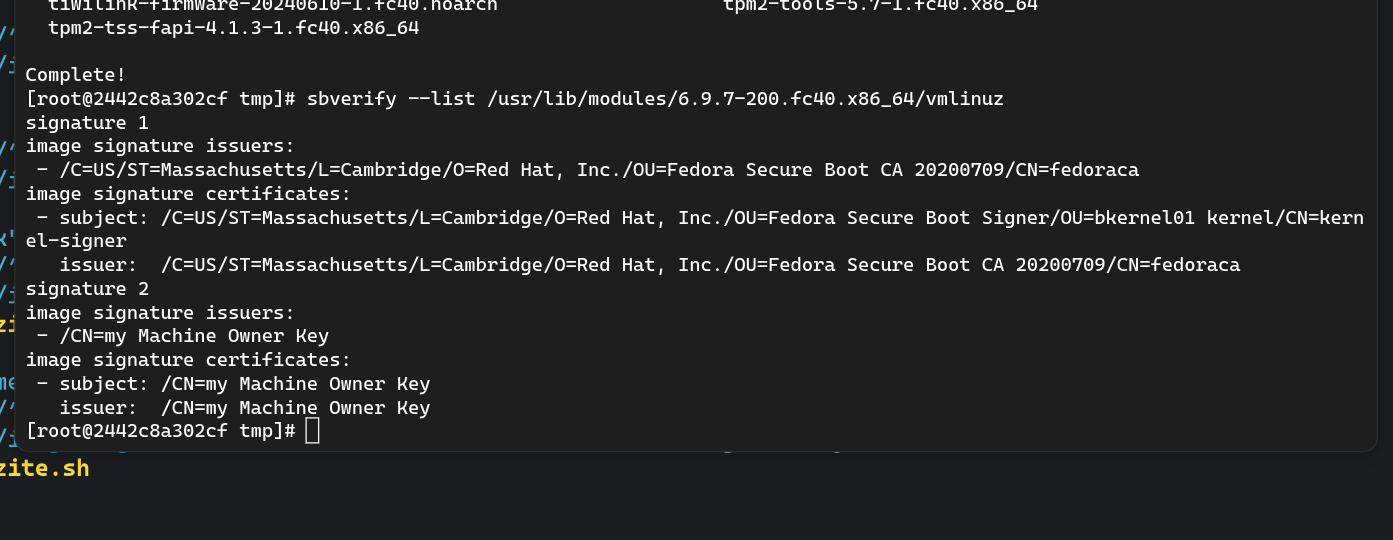

sbverify /usr/lib/modules/*/vmlinuz

That looks like mokutil. I see old cert

https://paste.centos.org/view/22536e06

first was mok

2nd is kernel

Okay. That's weird

did the new cert simply not get enrolled?

this is an existing install, so that's correct

the problem is the 2nd paste, kernel sigs are invalid

ahh... and since it's not enrolled it's failing the sig check

got it

https://github.com/ublue-os/kernel-cache/actions/runs/9897204070/job/27341138629#step:11:781

But it's not enrolled here either

GitHub

Cache Kernels · ublue-os/kernel-cache@78555fe

Just one of the signatures needs to be valid

https://paste.centos.org/view/2599b589

previous kernel w/ secure boot turned back on

so uhhh

not the problem(?)

kylegospo@daedalus:~$ sbverify /usr/lib/modules//vmlinuz

Signature verification failed

this is my desktop on the old kernel

that command doesn't work lol

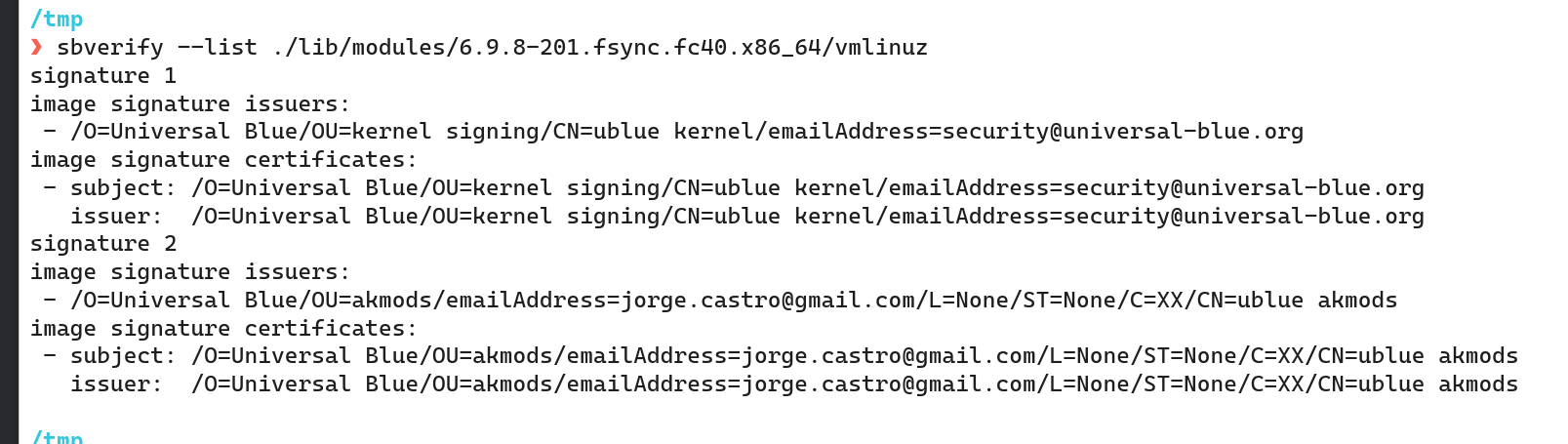

```
sbverify --list /usr/lib/modules//vmlinuz
signature 1
image signature issuers:
 - /O=Universal Blue/OU=akmods/emailAddress=jorge.castro@gmail.com/L=None/ST=None/C=XX/CN=ublue akmods
image signature certificates:
 - subject: /O=Universal Blue/OU=akmods/emailAddress=jorge.castro@gmail.com/L=None/ST=None/C=XX/CN=ublue akmods
   issuer: /O=Universal Blue/OU=akmods/emailAddress=jorge.castro@gmail.com/L=None/ST=None/C=XX/CN=ublue akmods
```

this works

I will do the same on my handheld w/ new kernel

Sorry tired. Missed the --list.

Sbverify is done with the certificate.

https://paste.centos.org/view/09612a19

there we go -- not signed

Uhmmm what the

Which kernel are you using

Yeah that's not our certs at all

6.9.8-201

fsync

just verified that the fsync copr is not still present

so it's fsync from kernel-fsync

Okay. The RPM I have downloaded has vmlinuz signed with our keys

and on the most recent run its there as well.

https://github.com/ublue-os/kernel-cache/actions/runs/9897204070/job/27341138629#step:11:783

where did this signature come from?

how'd you download it?

FROM ghcr.io/ublue-os/fsync-kernel:latest AS fsync

this is what bazzite is doing

and getting the unsigned/original fsync

we aren't publishing latest

yeah....

we need to use the API to purge all latest from those

FROM ghcr.io/ublue-os/fsync-kernel:40 AS fsync

then there will be a proper error if someone tries to use it

@Kyle Gospo https://discord.com/channels/1072614816579063828/1259209376011517995/1261018256265707621

yeah latest would have been from yesterday prior to signing

I'm sorry.... should have killed the latest tags when we started the new packages

i'm fixing now

i did it manually via UI, but i think i deleted all:

- latest

- 39-latest

- 40-latest

- major.minor.patch

as our agreed upon convention is:

- RELEASE (eg, 39, 40, which is a release specific latest)

- RELEASE-major.minor.patch (eg, 40-6.9.8)

- kernel-version (eg, 6.9.8-268.rog.fc40.x86_64)
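The tag convention above can be derived mechanically from a full kernel version string. This is a minimal sketch; `kernel_tags` is a hypothetical helper, and it assumes the Fedora release is always the number in the `.fcNN` dist tag.

```shell
#!/usr/bin/env sh
# Hypothetical helper: print the three agreed tags for a kernel version.
kernel_tags() {
    full=$1                      # e.g. 6.9.8-268.rog.fc40.x86_64
    base=${full%%-*}             # major.minor.patch, e.g. 6.9.8
    # Release taken from the ".fcNN." dist tag (assumption), e.g. 40
    release=$(printf '%s\n' "$full" | sed -n 's/.*\.fc\([0-9][0-9]*\)\..*/\1/p')
    printf '%s\n' "$release" "$release-$base" "$full"
}

kernel_tags 6.9.8-268.rog.fc40.x86_64
# 40
# 40-6.9.8
# 6.9.8-268.rog.fc40.x86_64
```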

we could probably add those tag conventions to README 🙂

so much for me working LOL

Yeah ...

I also will add signature verification to the workflow

ah, ok

I'll update on my end

to which workflow?

FROM ghcr.io/ublue-os/fsync-kernel:${FEDORA_MAJOR_VERSION} AS fsync

ezpz

thank you both

Well to the fetch script or in the downstream image

or both! 😄

Actually wouldn't be a bad idea

That would be a good action step

working now!

thanks guys

oh, what did we go with for the new enrollment password?

universalblue?

yes. Should be good. minimum length is 8 characters

well the key is already made

just wondering what we went with lol

gotta update the docs

since I'm pointing our ISOs to the new one now

this password you are talking about is not in the key/cert we use for signing, it's hard coded in the installer ISO though...

and it's in our just config for enrolling secure-boot key/MOK

its something a user would type manually if self-enrolling a MOK, but not part of the MOK itself

and i don't think config/just or ISOs have been updated with a changed password, unless i've just missed it

No config hasn't been updated yet. Bazzite should be changing. Working on main right now

Oh I guess I misread. And you are trying to update the ISO to the agreed upon password. 🤦♂️

I feel dumb.

I’m going to feed the family

ok, done

GitHub

config/build/ublue-os-just/00-default.just at main · ublue-os/config

this is the last thing to update from bazzite's perspective

hwe needs update as well since you have some asus images

main is about done

GitHub

feat: use cached kernel by m2Giles · Pull Request #605 · ublue-os/m...

looks like very old podman doesn't like creating a container from a scratch image

they don't use hwe

or at least, hwe's kernel

gets replaced w/ fsync

interesting. Thought you kept Asus's

naw, would just be yet another difference

we get HWE's software, but the fsync kernel has all asus patches

and a few removed where the ones in asus's kernel are absolutely awful

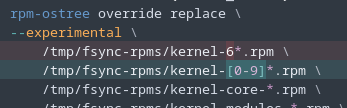

struggling with the fact that the rpms have the same name for main

rpm-ostree override replace doesn't seem to work

i'm about to just delete vmlinuz and extract vmlinuz into place

So if there is kernel skew, we can use rpm-ostree override replace.

If there is no kernel skew the only thing that needs to change is vmlinuz.

Dnf handles this fine with reinstall but rpm-ostree doesn't seem to have the same

I don't want to change version numbers of main. But I think main is the only one where we run into this issue.

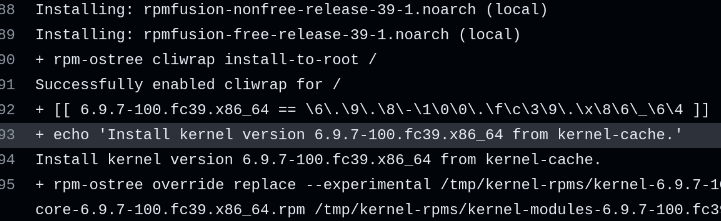

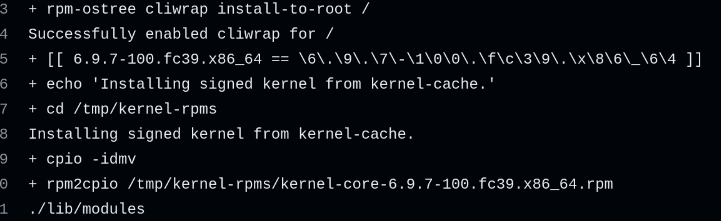

I want to try manually swapping the vmlinuz file if kernel versions match. We can get the vmlinuz package using rpm2cpio.

When kernel versions don't match we can use rpm-ostree override replace.

just --force 😂

i wish it were so easy

swapping the file seems to keep rpm happy. no idea if ostree liked that....

can always test it

kernel-install says no

😦

wait... it picked up host

if you can push an image to a registry i'll test in a VM?

the latest code in the PR branch doesn't have this change so i can't build my own 🙂

GitHub

feat: use cached kernel · ublue-os/main@4e84235

code was pushed

that's an interesting approach... and... i'll probably need the same solution for ucore

that is, however we solve this for main needs to be done the same way for ucore if we are going to ship ublue signed kernels

so with this solution... now if there IS kernel skew we don't get the desired kernel.

seems like we want to handle both cases, but for now just trying to test if this works?

Correct that's why I have the todo

Please draft this so no one attempts to merge seeing green

drafted

Case 1:

Kernel skew: use rpm-ostree override replace on kernel, core, modules, modules-core, modules-extra

Case 2 (default case):

No skew: swap vmlinuz

Case 1 is our normal kernel swap, but case 2 was giving me fits
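The two cases reduce to a version comparison. Below is a minimal sketch of that decision only; `plan_kernel_swap` is a hypothetical helper, and the real steps (the `rpm-ostree override replace` call, or extracting the signed vmlinuz from the cached kernel-core RPM, e.g. with `rpm2cpio`) are only named in comments.

```shell
#!/usr/bin/env sh
# Hypothetical helper: decide which kernel-cache install path applies.
# $1 = kernel version already in the base image
#      (e.g. from: rpm -q --qf '%{VERSION}-%{RELEASE}.%{ARCH}\n' kernel-core)
# $2 = kernel version shipped by the kernel-cache image
plan_kernel_swap() {
    installed=$1
    cached=$2
    if [ "$installed" != "$cached" ]; then
        # Case 1 (skew): override replace kernel, core, modules,
        # modules-core and modules-extra with the cached RPMs.
        echo "replace $installed -> $cached"
    else
        # Case 2 (default, no skew): same version, so only the signed
        # vmlinuz needs to be copied into place.
        echo "swap-vmlinuz /usr/lib/modules/$cached/vmlinuz"
    fi
}
```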

built the image locally, pushing to local registry... will test

Note, since it didn't do kernel install.... It didn't run through dracut (which doesn't work when used with kernel install)

But sbverify shows the signature was on the kernel

right

And rpm still saw the file as part of kernel-core package

so, there are a couple questions with this approach:

1) will it boot even non secureboot?

2) if i import this new key will it boot with secureboot?

3) if i rebase, reboot, rollback, reboot, will it still boot?

4) does the initramfs regen have anything to do with it?

ok,

0) fails to boot in secureboot WITHOUT the key, that's good

1) boots without secureboot

4) we can manually do initramfs gen to pick up the stuff from config.

that's correct, since it was test signing key

Oh you built that as well?

did you push the image to ghcr in that action run? i didn't even realize

hmm... we can't enroll the test key so easily though, since it's not on the image

Yeah that image has the wrong keys

it would have wrong keys in a PR image too

We're not building here. That image is coming from ublue-os/main-kernel:40

So somehow that didn't signed correctly

oh, yeah, crap

the last run was even scheduled

and yep, it skipped https://github.com/ublue-os/kernel-cache/actions/runs/9900362761/job/27350999988

Looks like the condition for grab keys for scheduled didn't work

i'm reading the workflow conditions

Watch as it's scheduled instead of schedule

yeah, i think it should be

github.event_name == 'schedule'

https://docs.github.com/en/actions/using-workflows/events-that-trigger-workflows#schedule

Yeah.

I'm off to bed. You can do a dispatch run to clear the tags

already started the dispatch run

akmods didn't run, so that's good

Quick thought on adding a sbsign check in the runner.

Use

`podman run --entrypoint /bin/bash image -c "cat path to vmlinuz" > tmp/vmlinuz`

And then do a sbverify --cert
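A minimal sketch of that check as a shell function, meant to run after the image build and before the push. It swaps the `/bin/bash -c "cat ..."` form for `--entrypoint /bin/cat` (same idea, no shell needed in the image); the image name, vmlinuz path and certificate are caller-supplied, and `verify_image_kernel` is a hypothetical name.

```shell
#!/usr/bin/env sh
# Hypothetical pre-push check: fail if the kernel inside the image is not
# signed by the given certificate.
#   $1 = image reference, $2 = vmlinuz path inside the image,
#   $3 = certificate to verify against (PEM)
verify_image_kernel() {
    image=$1
    vmlinuz=$2
    cert=$3
    tmp=$(mktemp) || return 1
    # Stream the kernel out of the image into a temp file on the runner.
    if ! podman run --rm --entrypoint /bin/cat "$image" "$vmlinuz" > "$tmp"; then
        rm -f "$tmp"
        echo "could not read $vmlinuz from $image" >&2
        return 1
    fi
    # sbverify exits non-zero when no signature matches the certificate.
    if sbverify --cert "$cert" "$tmp"; then
        rm -f "$tmp"
        return 0
    fi
    rm -f "$tmp"
    echo "kernel in $image is not signed by $cert" >&2
    return 1
}
```

sbverify expects a PEM certificate, so a DER key such as the repo's public_key.der would first need `openssl x509 -inform der -in public_key.der -out public_key.pem`.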

you want that in kernel-cache?

Yeah after image build and before push

to block test key pushing?

Yepp

k

distinct PR 🙂

Agreed

actually, created an issue for this so i can get back to testing

actually, they started before the kernel-cache rebuild finished, so i cancelled akmods and restarted manually

I did some testing on a silverblue-main image built with the current branch for testing kernel-cache signed kernel replacement and reported here: https://github.com/ublue-os/main/pull/605#issuecomment-2224466568

GitHub

feat: use cached kernel by m2Giles · Pull Request #605 · ublue-os/m...

Okay. I can get the second half implemented.

While it's goofy, it works. We can talk about improvements in future for minimizing size costs.

After kernel swap is done we have akmods dual signing

For akmods dual sign we should be able to use rpmrebuild for adding the additional signature like we are doing with the kernel

main is about done now. Working on secureboot check right now

that block should be able to be copied into all of our workflows

this is working in the pr.

I haven't gotten the secureboot check working

but kinoite-main has kernel skew, but silverblue-main does not

So silverblue-main only copies the signed kernel, while kinoite-main downgrades the kernel

Removing the secureboot check for now. Will add in another PR later

GitHub

feat: use cached kernel by m2Giles · Pull Request #605 · ublue-os/m...

Not doing the SB check in this PR now. But you can see that kinoite 39 does a downgrade, while silverblue 39 just does a kernel swap

But this is ready for review.

@bsherman @Kyle Gospo @Robert (p5) @EyeCantCU

Working on hwe right now.

Nvidia is good. Asus running through now.

Surface is annoying with kernel package being named differently

Asus and Nvidia are now good.

Working on surface

alright, surface is being very, very fickle

hwe should also be ready now

main and hwe are ready

hwe main-nvidia inherits signed kernel from main

@bsherman @Kyle Gospo @Robert (p5) @EyeCantCU

Next up is bluefin.

Thoughts for akmods is that we will do the same rpmrebuild technique

i approved hwe, and main already merged...

by "same rpmrebuild technique" you are referring to the signing process used in kernel-cache, right?

Yes.

Let akmods build the RPMs then repack it

Need to sign the .ko's and .ko.xz's

needed due to our multi-key sign, right? since the akmods script would only use one key

Yepp

We can have it be an optional turn on like in kernel-cache

So when we are only using one key it's not doing the repack

Most up to date key goes to akmods' expected spot. Old key that's getting cycled out goes into slot 2

seems great

Noticing that image sizes are a little larger due to the copy over.

After completing akmods and bluefin. I will look into slimming down the image by reducing copies.

Yep. I think slight increase is worth it for reliability, but solving that is of course a nice thing

Finally got around to resolving the build errors in a slightly simpler way than a kernel-cache repo in a custom image (without supporting third-party kernels). Mostly following how Ben and M2 did it.

For anybody else crazy enough to be maintaining their own Nvidia builds, here is what it needed:

1. Switch the builder to Fedora Workstation

2. Query the kernel version from the upstream images

3. Install the upstream kernel version on the workstation container build

Obviously this doesn't allow for any custom fsync kernels since it's not touching the user-facing kernels, but it does allow for the nvidia and nvidia-open kmods to build in a way that's compatible with installing to the ci-test images.

https://github.com/rsturla/akmods/commit/b2110b5d7abe1247d2f7d465655e42443fe7efec

I got sbverify working as part of the actions so we can make sure that the kernel is signed in downstream images

it will look something like this:

I guess the cat method doesn't work with the 22.04 builder

but this does

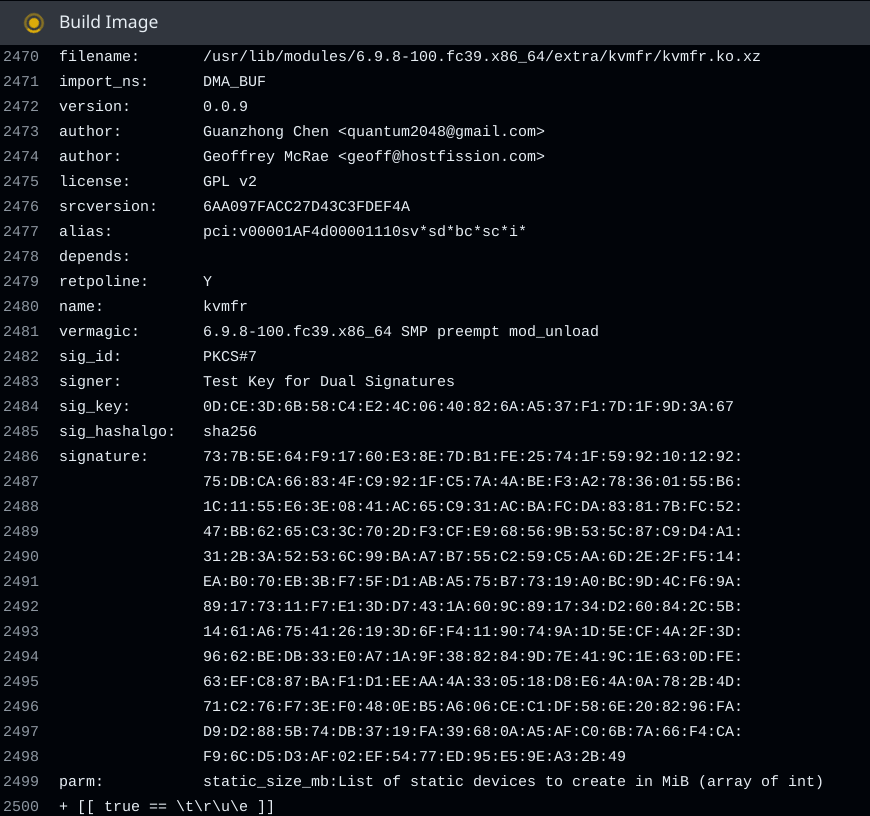

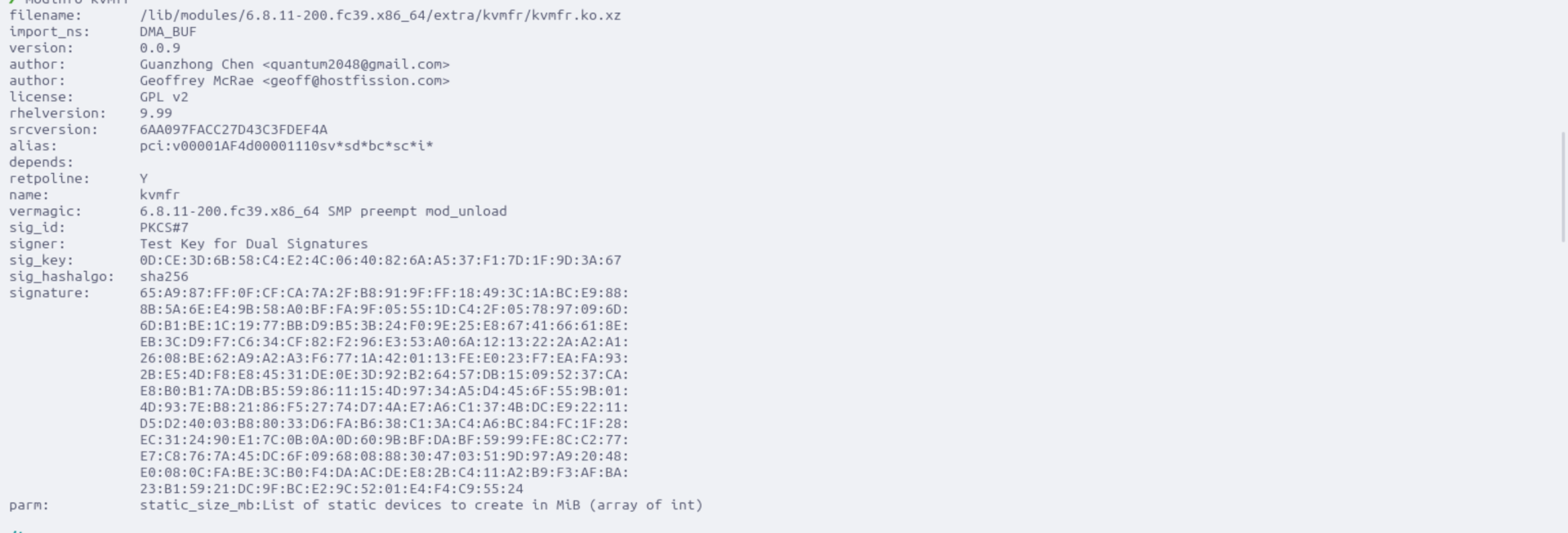

Think I have the pathway for dual signing down for kmods. This should result in kvmfr having two signatures

https://github.com/ublue-os/akmods/actions/runs/9946624178/job/27477574324

GitHub

feat: Enable Dual Signing for akmods · ublue-os/akmods@0854ab0

i only see the second signature

modinfo only seems to show the last signature

so the signatures are getting appended. Will the kernel actually load them is the question

next up for me is this bit:

split zfs into distinct akmods build (Containerfile.zfs) and also move over residual zfs bits from ucore-kmods

WIP PR for this

https://github.com/ublue-os/akmods/pull/217

GitHub

refactor: split zfs into distinct image by bsherman · Pull Request ...

Initially ZFS support was added as part of the akmods common image and corresponding workflow matrix element.

This splits ZFS support into a distinct:

workflow matrix element (distinct build)

Cont...

ready to go for +1

I put in some comments

really only the final copy is a blocker

final copy?

oh, i see the 3rd comment now

Alright that all looks good!

Did you see my issue about tagging with kernel version?

been AFK, then working, just getting back to this.

i replied on kernel tagging issue https://github.com/ublue-os/akmods/issues/216#issuecomment-2231580804

so.... https://github.com/ublue-os/bluefin/pull/1513

i'm working on it intermittently... but welcome feedback

I wonder if this is due to container in container.

And that we are on an old version

We could just hardcode to akmods-zfs:coreos-stable-${FEDORA_MAJOR_VERSION}

For now.

Could try mounting it in to avoid a wasteful copy layer

oh, i see you just made a change

i like the bind mount idea

GitHub

refactor: use akmods-zfs for ZFS install · ublue-os/bluefin@6bbeb7d

It builds

it needs the newer podman from 24.04

it also hardcodes using coreos-stable-${FEDORA_MAJOR_VERSION}.... can I use the bind mount from inside a script?

so I can do some sort of logic with it?

looks like if it doesn't use a rw layer for those bind mounts... or it reuses it

okay and we are green across the board.

24.04 works. Can look to change to using the obs build of podman instead until 24.04 is ready @bsherman

i haven't messed with the obs build of podman... it's just a build we install over the pre-installed version on 22.04?

yepp on the runner

it was one of the things we were trying for the tar bug

looks like this

We also could do something like this instead from the pr on main

GitHub

main/.github/workflows/reusable-build.yml at ef474caf61592c27d211c2...

I really like a lot of those changes

see latest updates on bluefin PR

looking weird, why is asus, etc not doing "build image" ?

https://github.com/ublue-os/bluefin/actions/runs/9968090782/job/27542788534?pr=1513

we don't build image for asus/surface on PRs

GitHub

bluefin/.github/workflows/reusable-build.yml at 08106c155a3b20e46e0...

This speeds up builds during PRs and the only difference we have for surface/asus is the evdi and not installing kernel from the kernel-cache

makes sense! i just hadn't noticed

pushed another update to use OBS podman

@M2 😭 https://github.com/ublue-os/bluefin/actions/runs/9968374176/job/27543541390#step:20:135

24.04 it is for now

yeah

pushed

Okay will approve

Akmods should be triggered immediately following when cache-kernel occurs.

@M2 re: testing the dual-signed akmods... do you have a published image I can test with?

You can use either the bluefin or Aurora iso.

Normal version.

Then do a local akmods build for common. That will build the kvmfr module

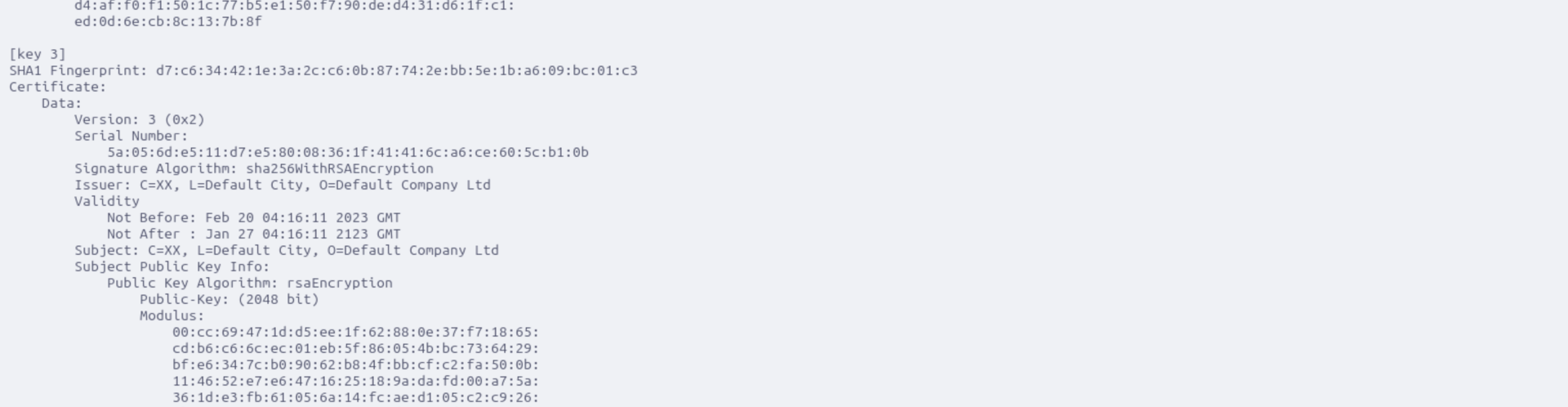

Extract and install. The two test public certs are in the repo. Default Company is cert 1 and Dual Signed is cert 2.

I've been loading default company with MOK.

I'm going to try seeing if we can directly load the cert in a running system to the system keyring

oh I see, you've been testing with the test certs, not "real" certs

good enough for testing though

yepp. VM so doesn't matter. kvmfr since it's in common but not installed by default on bluefin/aurora

so for module signing the documentation reads that the signature is appended. So I'm thinking that it is limited to that last signature

https://github.com/torvalds/linux/blob/master/scripts/sign-file.c

this is the tool i've been using for kmod signing

something i'm noticing is that wl, and xpad don't have the signature with Module Appended line
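A quick way to spot modules like these: a module signed by `sign-file` ends with the 28-byte marker `~Module signature appended~` followed by a newline, so checking the tail of the (decompressed) file is enough. A minimal sketch; `has_appended_signature` is a hypothetical helper, and it only detects the marker, it does not validate the signature.

```shell
#!/usr/bin/env sh
# Print the last 28 bytes of a module, decompressing .ko.xz first.
module_tail() {
    case $1 in
        *.xz) xz -dc "$1" | tail -c 28 ;;
        *)    tail -c 28 "$1" ;;
    esac
}

# Succeeds when the module ends with "~Module signature appended~\n".
has_appended_signature() {
    module_tail "$1" | grep -qxF '~Module signature appended~'
}
```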

but we don't have any write permissions to the platform or machine keyring. We do have write access to the secondary_trust_keyring, but I got a missing key error when attempting to add a certificate to that keyring

SlideShare

Multi-signed Kernel Module

Resigning doesn't work.

However you can use openssl directly and then use sign-file

👀

Okay still confused since only one public_cert is used with sign-file

Well this still doesn't seem to work

And it's working!!!!!

Holy shit this was way harder than I expected

what was the final fix?

2 things.

1. The signer file is both the signing key and certificate in one file.

2. The attached public cert at the end is all public certs in one file

You don't use sign file to sign. Use openssl directly. Then use sign-file to attach the signature to the module
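Putting those two points together, a sketch of the sequence as described, not a verified implementation. Assumptions (all names hypothetical): `signer1.pem`/`signer2.pem` each contain a private key plus its certificate, `all-certs.pem` is every public cert concatenated, and `SIGN_FILE` points at the kernel's `scripts/sign-file`. `openssl cms` accepts repeated `-signer` options and, with no `-inkey`, reads each key from its signer file; `sign-file -s` attaches an existing signature instead of signing. Set `DRY_RUN=1` to print the commands instead of running them.

```shell
#!/usr/bin/env sh
# Run a command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# $1 = module (.ko), $2/$3 = combined key+cert files, $4 = concatenated certs
dual_sign_module() {
    module=$1
    signer1=$2
    signer2=$3
    certs=$4
    sig="$module.p7s"
    # 1) One detached DER signature with a SignerInfo per key; no certs or
    #    signed attributes embedded, similar to sign-file's own settings.
    run openssl cms -sign -binary -noattr -nocerts -md sha256 \
        -in "$module" -signer "$signer1" -signer "$signer2" \
        -outform DER -out "$sig" || return 1
    # 2) sign-file -s only appends that signature (plus trailer) to the module.
    run "${SIGN_FILE:-./sign-file}" -s "$sig" sha256 "$certs" "$module" || return 1
    run rm -f "$sig"
}
```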

Need to confirm that either key works. But the key that isn't shown in modinfo worked with a modprobe

mokutil enrolled cert

modinfo showing different cert

inserting it and showing that exists

what a pain

why can't it just be consistent lol

like i seriously thought it would be something like sbsign....

but no....

i'm now totally thinking about modifying sign-file.c and adding that to kernel

like seriously.... they all need to be keypairs so if there is an even amount of inputs..... go to the races

https://github.com/ublue-os/akmods/pull/215

Attached my updates here for people to test.

Easiest test method is use bluefin:gts.

1. You need a secureboot enabled vm.

2. Clone the repo and switch to the pr branch.

3. Import the public key using mokutil.

mokutil --import akmods/certs/public_key.der.test

4. Build the akmods common container: podman build -t akmods -f Containerfile.common --build-arg KERNEL_FLAVOR=coreos-stable --build-arg FEDORA_MAJOR_VERSION=39 .

5. Create, export, and install the rpm

6. Finish importing your key.

GitHub

feat: Enable Dual Signing for akmods by m2Giles · Pull Request #215...

Alright. Assuming everything goes into extra for out of tree modules. Looping through the kmods to resign everything and rebuild in one script at the end
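A minimal sketch of such a loop, handling both the plain `.ko` and the `.ko.xz` modules mentioned earlier. `sign_one` is a hypothetical hook that signs one uncompressed module in place (for example the dual-signing step); the recompression flags are an assumption, chosen to mirror how the kernel build compresses modules.

```shell
#!/usr/bin/env sh
# Re-sign every module under directory $1. sign_one is a hypothetical hook,
# supplied by the caller, that signs one uncompressed .ko file in place.
resign_modules() {
    # sort reads the whole list before emitting it, so the .ko files that
    # appear temporarily during decompression are never picked up again.
    find "$1" -type f \( -name '*.ko' -o -name '*.ko.xz' \) | sort |
    while IFS= read -r mod; do
        # exit here only leaves the loop's subshell; its status becomes
        # the function's return status.
        case $mod in
            *.ko.xz)
                plain=${mod%.xz}
                xz -d -f "$mod" || exit 1          # replaces it with $plain
                sign_one "$plain" || exit 1
                # Recompression flags assumed to mirror the kernel build's
                # own module compression settings.
                xz -f --check=crc32 --lzma2=dict=1MiB "$plain" || exit 1
                ;;
            *.ko)
                sign_one "$mod" || exit 1
                ;;
        esac
    done
}
```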

looks like I'll need a little different script for zfs but common, extra, and nvidia all seem to build

All but zfs appears to be working

So zfs does not get installed as part of the build process. That was causing an issue

If you want debug packages.... Well you can build and sign your own

ZFS should be dual signed now

it doesn't appear so....

grrr

sorry, i've been stuck on annoying work problems today

grrr

grrr

So-called free-thinkers when grrr

thoughts are not free, they are quite expensive, in fact

I don't know, I've seen some pretty worthless thoughts in my time

depends where you get those thoughts 😉

gotta pay for the good stuff

Okay, so strings definitely seems to indicate no double signing
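One alternative to reading `strings` output is to cut the appended signature off the module and inspect it directly. This is a minimal sketch. It assumes an uncompressed `.ko` (decompress a `.ko.xz` first, e.g. with `xz -dk`) and the trailer layout sign-file writes. That layout is the signature, then a 12-byte `struct module_signature` whose last four bytes hold the big-endian signature length, then the `~Module signature appended~` marker and a newline. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: extract the appended signature blob from an uncompressed kernel module.
# Assumed layout: module | signature | 12-byte module_signature | "~Module signature appended~\n"
# Hypothetical: the function name.

extract_module_sig() {
  local module="$1" out="$2"
  local marker='~Module signature appended~'
  local marker_len=28 trailer_len=12 size b0 b1 b2 b3 sig_len

  size=$(( $(wc -c < "$module") )) || return 1
  if [ "$size" -le $((marker_len + trailer_len)) ]; then
    echo "too small to carry a signature" >&2
    return 1
  fi

  # The file must end with the marker followed by a newline (28 bytes total).
  if ! tail -c "$marker_len" "$module" | cmp -s - <(printf '%s\n' "$marker"); then
    echo "no appended signature marker" >&2
    return 1
  fi

  # sig_len is the big-endian u32 in the 4 bytes right before the marker.
  read -r b0 b1 b2 b3 < <(tail -c $((marker_len + 4)) "$module" | head -c 4 | od -An -tu1)
  sig_len=$(( (b0 << 24) | (b1 << 16) | (b2 << 8) | b3 ))

  if [ "$sig_len" -le 0 ] || [ "$sig_len" -gt $((size - marker_len - trailer_len)) ]; then
    echo "implausible signature length: $sig_len" >&2
    return 1
  fi

  # The signature sits immediately before the 12-byte trailer.
  tail -c $((marker_len + trailer_len + sig_len)) "$module" | head -c "$sig_len" > "$out"
}
```

The extracted blob is DER-encoded CMS. Something like `openssl cms -cmsout -print -inform DER -in module.sig` should dump its SignerInfo entries, which answers "how many signers?" more directly than strings does.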

I want my threadripper for compiling

I'm just an idiot....

never actually copied over the rebuilt rpm....

and dual signing on zfs!

again... that was harder than I expected

https://github.com/ublue-os/akmods/pull/215

Marked it ready for review. Someone other than me, please test this thing.

instructions are above with kvmfr

you can also do an ostree admin unlock to swap other individual modules

Dual signature also works with zfs. Used ostree admin unlock --hotfix to make a persistent change.
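Here is a sketch of swapping a single module that way. It assumes you run as root, pass in the module paths, and target the running kernel unless a version is given. The function names and the `DRY_RUN` switch are hypothetical. Plain `ostree admin unlock` gives a writable overlay on /usr that is discarded on reboot, while `--hotfix` keeps the change across reboots.

```bash
#!/usr/bin/env bash
# Sketch: swap one kernel module on an ostree-based host for testing.
# Hypothetical: function names, DRY_RUN, and the module paths passed in. Run as root.

run() {
  # With DRY_RUN=1, print the command instead of executing it.
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

swap_module() {
  local new_ko="$1" target="$2" kver="${3:-$(uname -r)}"
  # --hotfix: writable /usr that survives reboots (plain unlock is discarded on reboot).
  run ostree admin unlock --hotfix || return 1
  # Keep the original next to the replacement so it can be restored.
  run cp "$target" "${target}.orig" || return 1
  run cp "$new_ko" "$target" || return 1
  # Refresh module dependency data for that kernel.
  run depmod -a "$kver" || return 1
}
```

After the swap, reload the module with modprobe (or reboot) to test it.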

It works with either public certificate.

@bsherman honors on the 2nd ack?

I'm gonna test this in a bazzite:testing build right after it merges

to be 100% sure it's good

bazzite testing sounds like a great place to test this

Only concern I have is nvidia

but it appears to build the same way extra/common do. ZFS is definitely the oddball

@j0rge up for an ack?

gotchu fam

do we need to coordinate changes down the line or is it just here?

as long as there's no bugs, we're good everywhere

just need to start using the new keys

lol

wait until you see the heredoc stuff in main

Bazzite testing gonna be truly testing

your bazzite containerfile life is about to go booooooom.

@Kyle Gospo queued akmods

Sweet

Why aren't they running?

@j0rge anything using the builders?

They all say "Waiting for a runner"

looking

stuck here

appears to be an actions outage?

@Kyle Gospo how have the dual-signed kmods been going in testing? Have you tried with only the new public key in the MOK?

i got bit by an unsigned kernel today 🙂

had to disable secure boot... i'm guessing it'll be fixed with our new changes, once we get a clean build of 40

Yep

We can add the same check to ucore as well

yep, i plan to do so once i'm pulling in the kernel cache stuff

i plan to:

1) add a couple of ucore-only packages to akmods

2) convert ucore to kernel-cache + akmods w/ signature check
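Below is a sketch of the kind of signature gate planned here for the kernel. It assumes `sbverify` (sbsigntools) and `openssl` are available and that the expected certificates are DER files. The function name and the `SBVERIFY`/`OPENSSL` overrides are hypothetical. Each certificate is converted to PEM first, since sbverify reads PEM certificates, and the gate fails if any expected certificate does not verify. If an image carries more than one signature, confirm that your sbverify version actually considers all of them before relying on this.

```bash
#!/usr/bin/env bash
# Sketch: fail unless the kernel image verifies against every expected certificate.
# Hypothetical: function name, SBVERIFY/OPENSSL overrides, and the file paths passed in.

verify_kernel_sigs() {
  local vmlinuz="$1"; shift
  local sbverify_cmd="${SBVERIFY:-sbverify}" openssl_cmd="${OPENSSL:-openssl}"
  local tmp der pem rc=0
  [ "$#" -gt 0 ] || { echo "no certificates given" >&2; return 1; }
  tmp=$(mktemp -d) || return 1
  for der in "$@"; do
    pem="$tmp/$(basename "$der").pem"
    # sbverify reads PEM certificates, so convert each DER file first.
    if ! "$openssl_cmd" x509 -inform DER -in "$der" -out "$pem"; then
      echo "FAIL: could not convert $der" >&2
      rc=1
      continue
    fi
    if "$sbverify_cmd" --cert "$pem" "$vmlinuz"; then
      echo "OK: $vmlinuz verifies against $der"
    else
      echo "FAIL: $vmlinuz does not verify against $der" >&2
      rc=1
    fi
  done
  rm -rf "$tmp"
  return "$rc"
}
```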

Sounds good.

@bsherman @Kyle Gospo https://github.com/ublue-os/kernel-cache/pull/18

chore(ci): Check secureboot signatures by m2Giles · Pull Request #1...

next up is confirming kmods

this is making me feel dumb again

feat: verify kmod signatures for dual-sign by m2Giles · Pull Reques...

and it works

https://github.com/ublue-os/akmods/actions/runs/10017355653/job/27691424624?pr=218#step:12:2130

feat: verify kmod signatures for dual-sign · ublue-os/akmods@325c2c7

So we could create another container that then tests the rpms or we can call this good enough.

added a test container

Almost got the test container finished off

common is working, now working through extra/nvidia/zfs

nvidia and zfs should be good now

and extra has gone green

@bsherman @Kyle Gospo https://github.com/ublue-os/akmods/pull/218

Ready for review

The longest ones take like 20ish minutes to build now which sucks

but most of that was mesa-filesystem taking forever to download

interesting...

so, the test image (Container.test) mounts the previously built scratch akmods image and runs dual-sign check on all the RPMs
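A test step like this might look roughly as follows. The sketch walks an unpacked RPM tree, decompresses `.ko.xz` files, and runs a per-module checker on each one. Assumptions: the RPMs were already unpacked (for example with `rpm2cpio pkg.rpm | cpio -idm`), and, as on Fedora, modules are signed before they are compressed. The checker is a command passed as the second argument that exits non-zero when the expected signatures are missing. The name `check_all_modules` is hypothetical. An empty tree counts as a failure, so an RPM with no modules can't pass silently.

```bash
#!/usr/bin/env bash
# Sketch: run a per-module signature check over every kernel module in an unpacked RPM tree.
# Hypothetical: the function name and the checker command passed as $2.

check_all_modules() {
  local root="$1" check="$2"
  local ko tmp failed=0 total=0
  tmp=$(mktemp -d) || return 1
  while IFS= read -r -d '' ko; do
    total=$((total + 1))
    case "$ko" in
      # Assumption: signed before compression, so check the decompressed bytes.
      *.ko.xz) xz -dc "$ko" > "$tmp/m.ko" || { echo "FAIL: cannot decompress $ko" >&2; failed=$((failed + 1)); continue; } ;;
      *)       cp "$ko" "$tmp/m.ko" || { failed=$((failed + 1)); continue; } ;;
    esac
    if ! "$check" "$tmp/m.ko"; then
      echo "FAIL: $ko" >&2
      failed=$((failed + 1))
    fi
  done < <(find "$root" -type f \( -name '*.ko' -o -name '*.ko.xz' \) -print0)
  rm -rf "$tmp"
  echo "checked $total module(s), $failed failure(s)"
  # An empty tree is a failure too.
  [ "$total" -gt 0 ] && [ "$failed" -eq 0 ]
}
```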

that's sweet man

ready to merge

MERGED

what's the next step? do we need to announce anything?

well, we still can't get clean builds thanks to package skew on mesa freeworld stuff, at least in F40

realistically, we are just tightening up our reliability here...

yes, we're dual signing, but much more important IMHO is the check M2 added to ensure the expected signature(s) exist in kernel/kmod RPMs before publishing an image

however, it doesn't change anything for downstreams

oh so nothing user visible then

Bluefin needs to switch to the new public key for ISOs. Config needs an update to the just script for the new public key as well.

All the additions have been to ensure that kmods/kernels are signed with what we expect right now.

right, but not yet, right? we still need a full set of clean builds with verified signatures before we switch ISOs to use new key, etc

correct

waiting on clean builds

the ucore addons in akmods, which will let me shut down the ucore-kmods repo and have ucore source from akmods

https://github.com/ublue-os/akmods/pull/219

refactor: move ucore addons builds here by bsherman · Pull Request ...

This relocates the ucore-addons and ucore-nvidia RPM builds from ublue-os/ucore-kmods to ublue-os/akmods, allowing us to continue consolidating our build processes into more manageable units.

Note: ...

Do you want to add any tests for a ucore build?

Such as? This PR doesn’t add kmods just those simple addons rpms

fair. Was just thinking if we should have another guard or something

Alright, there are also now staged PRs for main/hwe to go back and remove the COPY layers

40 being busted right now is frustrating

@M2 hmm... kernel-cache failed

https://github.com/ublue-os/kernel-cache/actions/runs/10024187753

but

main f40 built! 🙂

i think the problem is it's a main branch build, so should be using production keys but it's downloading test keys

curl --retry 3 -Lo kernel-sign.der https://github.com/ublue-os/kernel-cache/raw/main/certs/public_key.der.test

https://github.com/ublue-os/kernel-cache/actions/runs/10024187753/job/27705966906#step:12:134

https://github.com/ublue-os/kernel-cache/pull/19

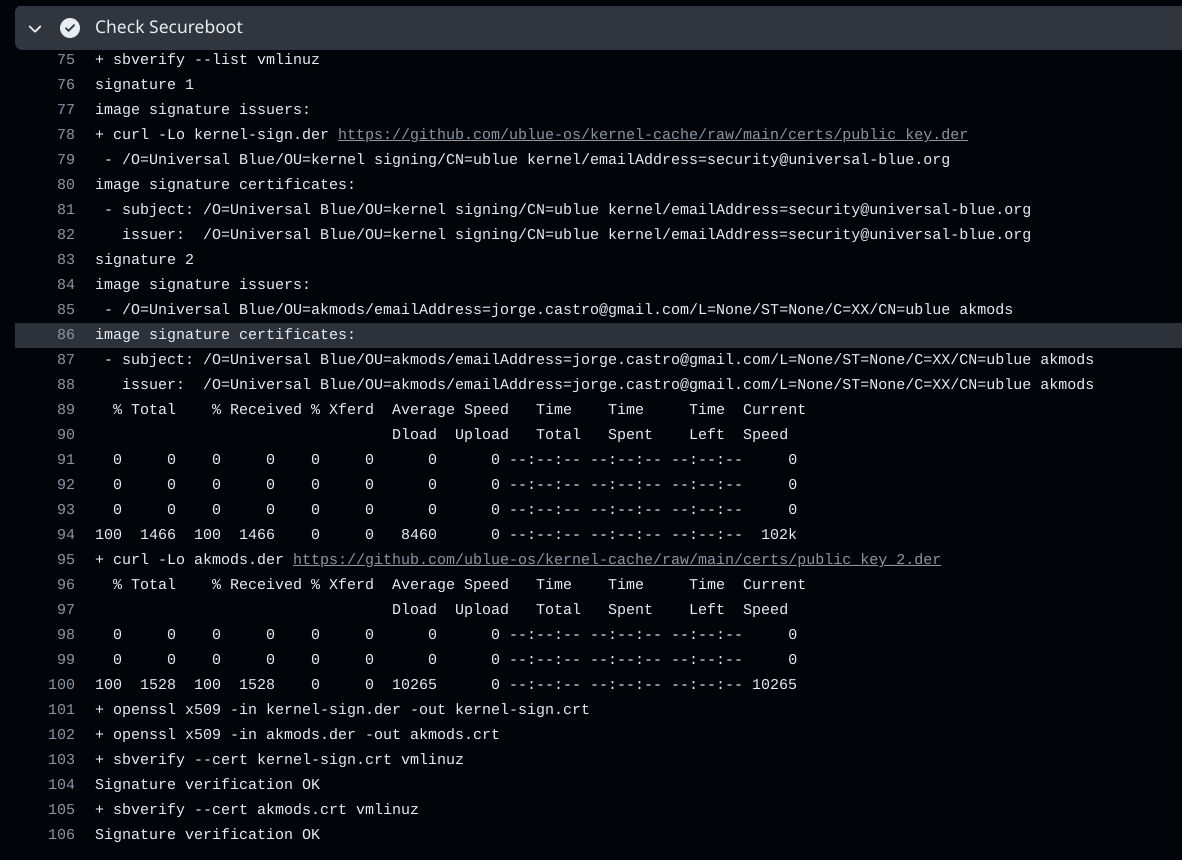

I checked main's "Check Secureboot" job and it looks fine

ditto hwe's

@Kyle Gospo you able to hit this +1 also?

thank you @Kyle Gospo 💙

Oh darn. Need an if statement to make sure the right public keys are downloaded for that test. I'm confused, since kernel-cache worked yesterday....
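The missing if statement could look something like this sketch. It assumes builds on `main` are signed with the production key and everything else with the test key. The production file name `certs/public_key.der` is inferred from the `.test` name in the workflow and is not confirmed. `GITHUB_REF_NAME` is set by GitHub Actions; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: choose which public key the signature check downloads.
# Assumption: main builds use the production key; public_key.der is a guessed file name.
# Hypothetical: the function name.

cert_url_for_ref() {
  local ref="${1:-${GITHUB_REF_NAME:-}}"
  local base="https://github.com/ublue-os/kernel-cache/raw/main/certs"
  if [ "$ref" = "main" ]; then
    echo "${base}/public_key.der"
  else
    echo "${base}/public_key.der.test"
  fi
}

# Usage in a workflow step:
#   curl --retry 3 -Lo kernel-sign.der "$(cert_url_for_ref)"
```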

Thanks for the quick fix

Oh I see. We should double-check akmods to make sure the check is fine there too. But kernel consumers should be fine, since there's no kernel signing there.

Yep. I’m pretty sure I checked akmods, I know I checked main and hwe

And yes, any consumer of kernel-cache shouldn’t have a problem since they are consuming images with the proper “prod” keys.

I think you have some paths to handle. But super stoked.

lol 🙂 yeah, it failed after i pasted

and what's odd, that built locally

invalid mount type "cache"

24.04

Will fix that

i'm on 24.04

Will look.

AH!

no... i have 3 jobs, so 3 places to change to 24.04

i was NOT on 24.04

About to say... I see 22.04

Stupid old podman/buildah
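A version gate lets an old runner fail fast instead of hitting `invalid mount type "cache"` halfway through a build. This sketch assumes `buildah --version` prints `buildah version X.Y.Z ...` and that GNU `sort -V` is available. The minimum version is a parameter because the thread doesn't pin one; check buildah's release notes for the release that added cache mounts. The function names and the `BUILDAH` override are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: refuse to build when the runner's buildah is older than a given minimum.
# Hypothetical: function names, the BUILDAH override, and whatever minimum you pass in.

version_ge() {
  # True if $1 >= $2, using sort -V (GNU coreutils) version ordering.
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

require_buildah() {
  local min="$1" have
  # Expected output shape: "buildah version X.Y.Z (image-spec ..., runtime-spec ...)".
  have=$("${BUILDAH:-buildah}" --version | awk '{print $3}') || return 1
  if ! version_ge "$have" "$min"; then
    echo "buildah $have is older than $min; use a newer runner image" >&2
    return 1
  fi
}
```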

ok, that was just the "fedora-coreos" images... now for ucore

UGH

chore(ci): reduce copy layers · ublue-os/ucore@926d8ac

i remember we hit this before

ubuntu is so broken

I just wonder if we end up building with docker and then using podman to chunk it before pushing to ghcr.

Like, we are only missing some things on 24.04, but we'll possibly have issues again in a year, or we go to a nested build container

In one year:

Like, we are only missing some things on 25.04, but we'll possibly have issues again in a year, or we go to a nested build container

what are the downsides of using the OBS podman builds?

They are currently old

And it's not just podman. We also need an up-to-date buildah. The action builds with buildah, not podman

ah ok

I was gonna say, when podman-cache?

So it's with the Ubuntu runner. Not sure what the easiest way to modify it is without just standing up a PPA

they just sunset the podman ppa last release or something

This is a barrier for adoption for them.

yeah not much we can do

the red hat <-> canonical competitive landscape wins this one.

seems like redhat not supporting a PPA is killing cross-platform usefulness of their container tooling solution

It's like the manager who decided "we should kill docker!" left half way through

ok, my last PR related to this thread is ready and then i'll let the thread die 🙂

https://github.com/ublue-os/ucore/pull/179

a little help please?

@Kyle Gospo @M2 @p5 ?

@j0rge i'll take a +1 from you too 🙂 i'm ready to GOOOOOOOO

💙 @M2 and @Kyle Gospo 🙂 i'll merge now

Let's gooooooooooooooooooooooooooooo

my man was getting desperate

😄

"jorge we will allow you to click this button, but ONLY THIS ONE. AND ONLY ONCE PLEASE."

well, i waited overnight 😉 because I wanted to monitor the merge, but yeah, i just want it done now

DONE

i've carefully pulled prior versions of the images, i'm eager to measure the space savings
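To put a number on the savings, here is a small sketch that compares two byte counts. The function name is hypothetical, and the tags in the usage comment are placeholders. It assumes you run `podman image inspect --format '{{.Size}}'` on each locally pulled image, which reports the image size in bytes.

```bash
#!/usr/bin/env bash
# Sketch: report how much smaller (or larger) a new image is than an old one.
# Hypothetical: the function name; the tags in the usage comment are placeholders.

size_delta() {
  local old="$1" new="$2" diff
  [ "$old" -gt 0 ] || { echo "old size must be > 0" >&2; return 1; }
  diff=$(( old - new ))
  # Integer percentage of the old size saved; negative means the image grew.
  echo "${diff} bytes ($(( diff * 100 / old ))%)"
}

# Usage:
#   old=$(podman image inspect --format '{{.Size}}' ghcr.io/ublue-os/ucore:OLD_TAG)
#   new=$(podman image inspect --format '{{.Size}}' ghcr.io/ublue-os/ucore:NEW_TAG)
#   size_delta "$old" "$new"
```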

I didn't have any savings in bluefin according to dive

but I'd be curious to see

hah, maybe none for me either

i was promised savings 🙂 where's my savings? 😄

was buildah/podman already doing something smart for us?