Anyway, enough small talk. I've got a lot of homework going through your August tuts. I'll check out Florence.

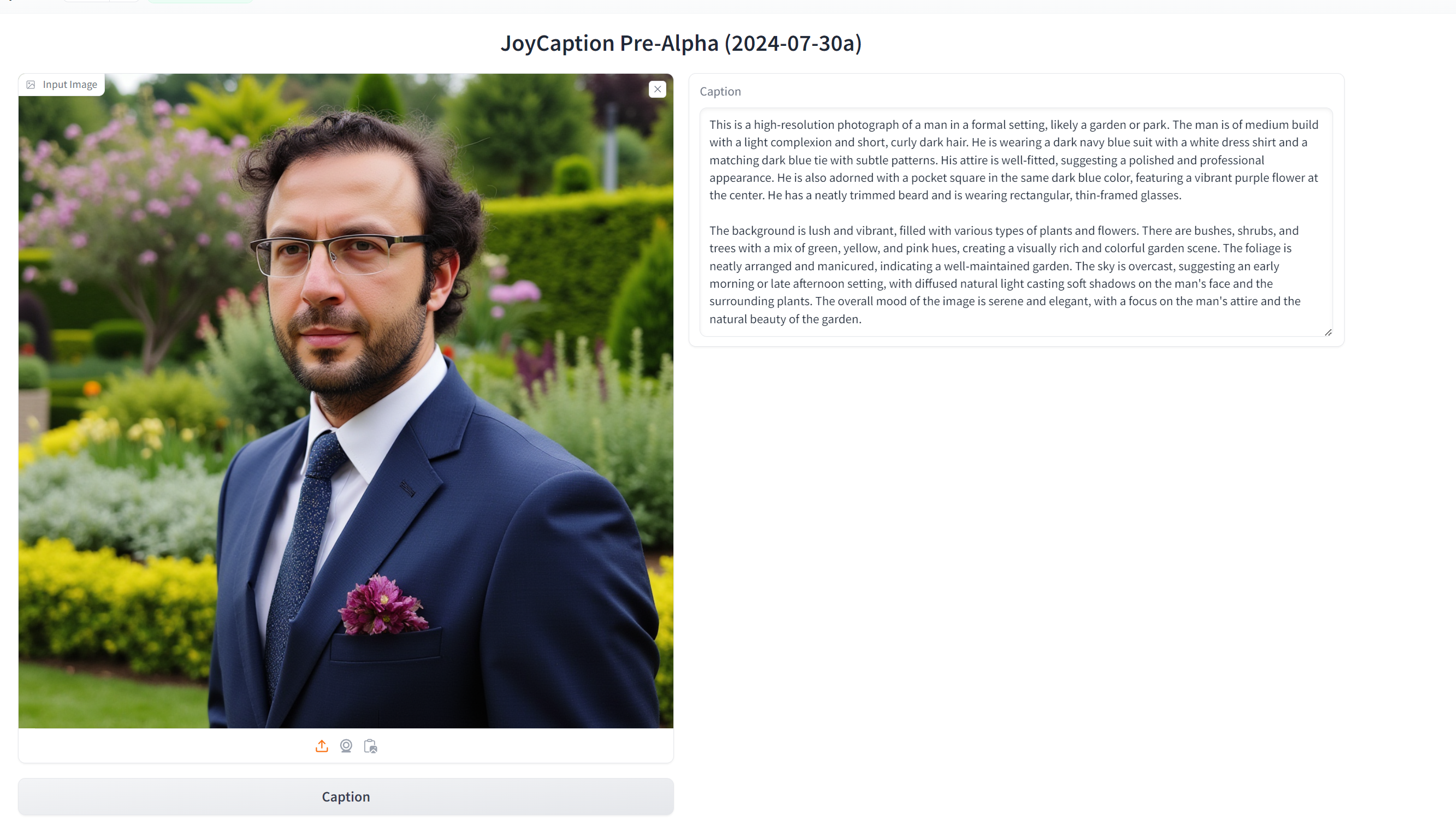

1. `git clone` the linked repo; it already includes the trained CLIP-to-Llama thingamajig

2. set up the venv, or if you're lazy like me, reuse an existing venv from one of your other billion projects whose packages are a superset of this one's `requirements.txt`

3. edit `app.py` to remove all the Gradio and Spaces junk

4. replace `"meta-llama/Meta-Llama-3.1-8B"` with `"unsloth/Meta-Llama-3.1-8B-bnb-4bit"` to save space (it's pre-quantized to 4-bit) and skip authenticating with HF (the meta-llama repo is gated)

5. `print(stream_chat(Image.open("/path/to/boobas.png")))`

6. ???

7. profit