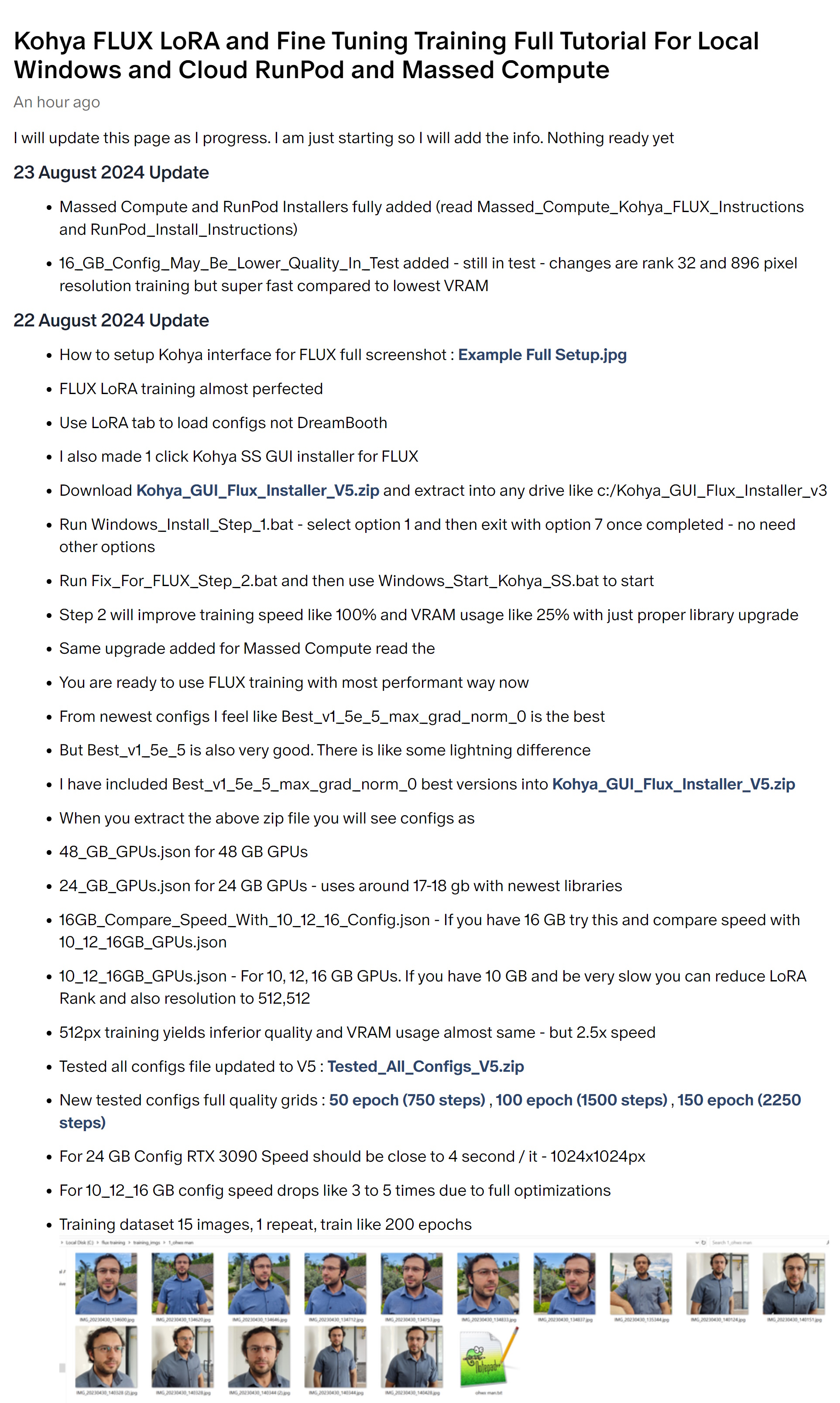

I see everyone is fluxing these days, but I managed to find the sweet spot for training LoRAs on ponyRealism 2.1.

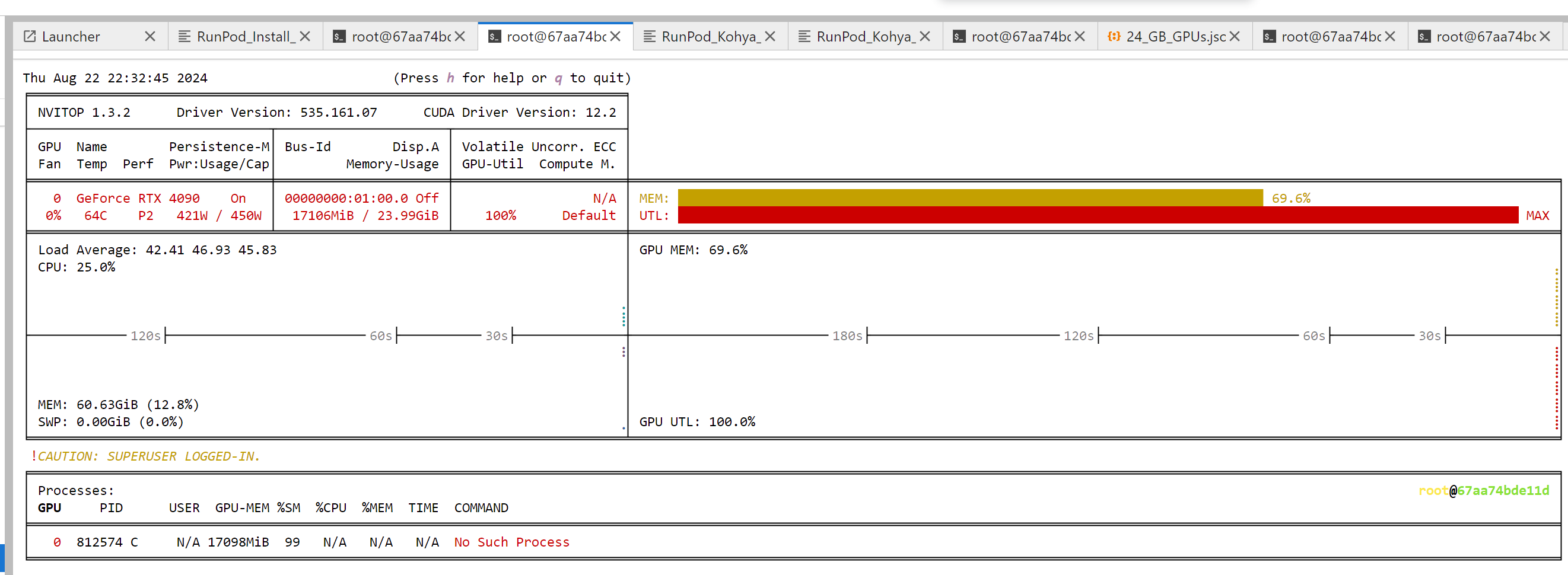

What's important is that I don't use bucketing. I just work with the best 100 images at 1024x1024 resolution and the classic 'xxxxx woman' folder name (where xxxxx is the name of the LoRA; in Furkan's case, ohwx). Another important thing is that this setup needs 24 GB of VRAM, and training uses almost all of it. Training takes about 45-50 minutes for 100 images and about 1 hour 20 minutes on average for 150 images.
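Since there is no bucketing, every image has to already be exactly 1024x1024. Here is a minimal sketch of how that could be checked before training. It is not part of my Kohya setup: the function names are made up for illustration, it uses only the Python standard library, and it assumes the images are PNG files (JPEGs would need something like Pillow instead).

```python
import struct
from pathlib import Path

# Every PNG file starts with this fixed 8-byte signature.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(path):
    """Return (width, height) from a PNG's IHDR chunk, or None if it is not a PNG."""
    with open(path, "rb") as f:
        header = f.read(24)
    # Layout: 8-byte signature, 4-byte chunk length, 4-byte "IHDR",
    # then width and height as big-endian unsigned 32-bit integers.
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        return None
    width, height = struct.unpack(">II", header[16:24])
    return width, height


def find_off_size_images(folder, expected=(1024, 1024)):
    """List (file name, size) for every .png in `folder` that is not `expected`."""
    bad = []
    for path in sorted(Path(folder).glob("*.png")):
        size = png_size(path)
        if size != expected:
            bad.append((path.name, size))
    return bad
```

Calling `find_off_size_images` on the image folder should return an empty list if every PNG is 1024x1024; anything it lists needs to be cropped or resized first.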

I just trained five LoRAs, and for all of them the max_norm/keys_scaled value in TensorBoard rarely went above 2, so they turned out to be perfect person LoRAs. I tried 16/16 dim/alpha before, but 32/32 turned out to be the right value.
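A note on what changes between 16/16 and 32/32: in the usual LoRA formulation the update is multiplied by alpha / dim, so both settings have the same scale of 1.0 and only the rank (capacity) differs. A small sketch of that arithmetic, where the helper names and the 640-wide layer are hypothetical and chosen only for illustration:

```python
def lora_scale(alpha, dim):
    """Multiplier applied to the low-rank update: alpha / dim."""
    return alpha / dim


def lora_params(in_features, out_features, dim):
    """Trainable weights for one linear layer: a (dim x in) and an (out x dim) matrix."""
    return dim * (in_features + out_features)


# Hypothetical 640 -> 640 layer, just to compare the two settings.
for dim, alpha in [(16, 16), (32, 32)]:
    print(dim, alpha, lora_scale(alpha, dim), lora_params(640, 640, dim))
```

So moving from 16/16 to 32/32 leaves the scaling untouched and doubles the number of trainable weights per layer, which is one plausible reason the larger setting captured a person better in my runs.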

What's interesting is that image generation got better with the euler_a sampler and the bemypony_Photo3 model. That said, the LoRAs also performed quite well on the other Pony models, so I'm satisfied. Here's my Kohya JSON file, feel free to use it!