hahahaha excellent, how do you do that?

very likely trained my face

nah just prompted in ideogram 2

its the best for text i find

Midjourney is in shambles!

seems the runpod .sh doesn't work on regular linux ubuntu

i tried so many times and it fails because it doesn't recognize what i want, but its okay, i may just rent a runpod server

you need to have python, c++ tools and cuda installed

runpod comes with pytorch docker template

if you can load it it would work

i have cuda but not sure about c++
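A quick way to see which of those tools are actually on the machine is to look them up on PATH. This is only a minimal sketch: the tool names `python3`, `g++` and `nvcc` are assumptions about what "python c++ tools and cuda" means here, and the installer script may need more than these.

```python
import shutil

# Assumed tool names, not taken from the installer script itself.
REQUIRED_TOOLS = ["python3", "g++", "nvcc"]


def find_tools(names):
    """Map each tool name to its full path on PATH, or None if it is missing."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    for name, path in find_tools(REQUIRED_TOOLS).items():
        print(f"{name}: {path or 'NOT FOUND'}")
```

Anything reported as NOT FOUND is either not installed or not on PATH for the current shell.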

but it is hard to install those

also runpod doesnt have sudo command

you need to append them

i'll have to look into it tomorrow

On the new flux lora test updates, i see speeds in s/it for each config json. Are those the speeds we should be getting? I'm running config 5, which is the one recommended for 12gb vram cards like my 3060. It says 12.2 s/it but i'm getting 25.5 s/it. Not sure what i'm doing wrong if those are the expected rates

no those are for A6000 GPU

relative speed

also on linux

25 seconds looks normal

you have to reduce resolution to speed up, but that reduces quality

you can do 768x768
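For a rough feel of what dropping the resolution buys, here is a small arithmetic sketch. The 1024x1024 baseline and the 1000-step run are assumptions for illustration, not numbers from the configs, and real step time will not scale exactly with pixel count.

```python
def pixel_ratio(width, height, base_width=1024, base_height=1024):
    """Fraction of pixels compared to an assumed 1024x1024 baseline."""
    return (width * height) / (base_width * base_height)


def total_minutes(steps, seconds_per_it):
    """Wall-clock minutes for a run at a given s/it rate."""
    return steps * seconds_per_it / 60


if __name__ == "__main__":
    print(pixel_ratio(768, 768))      # share of pixels left at 768x768
    print(total_minutes(1000, 25.5))  # hypothetical 1000-step run at 25.5 s/it
```

768x768 has about 56% of the pixels of 1024x1024, so each step has noticeably less work to do, at the cost of detail.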

Thanks. Lol i thought i was way off. 25 seems fine

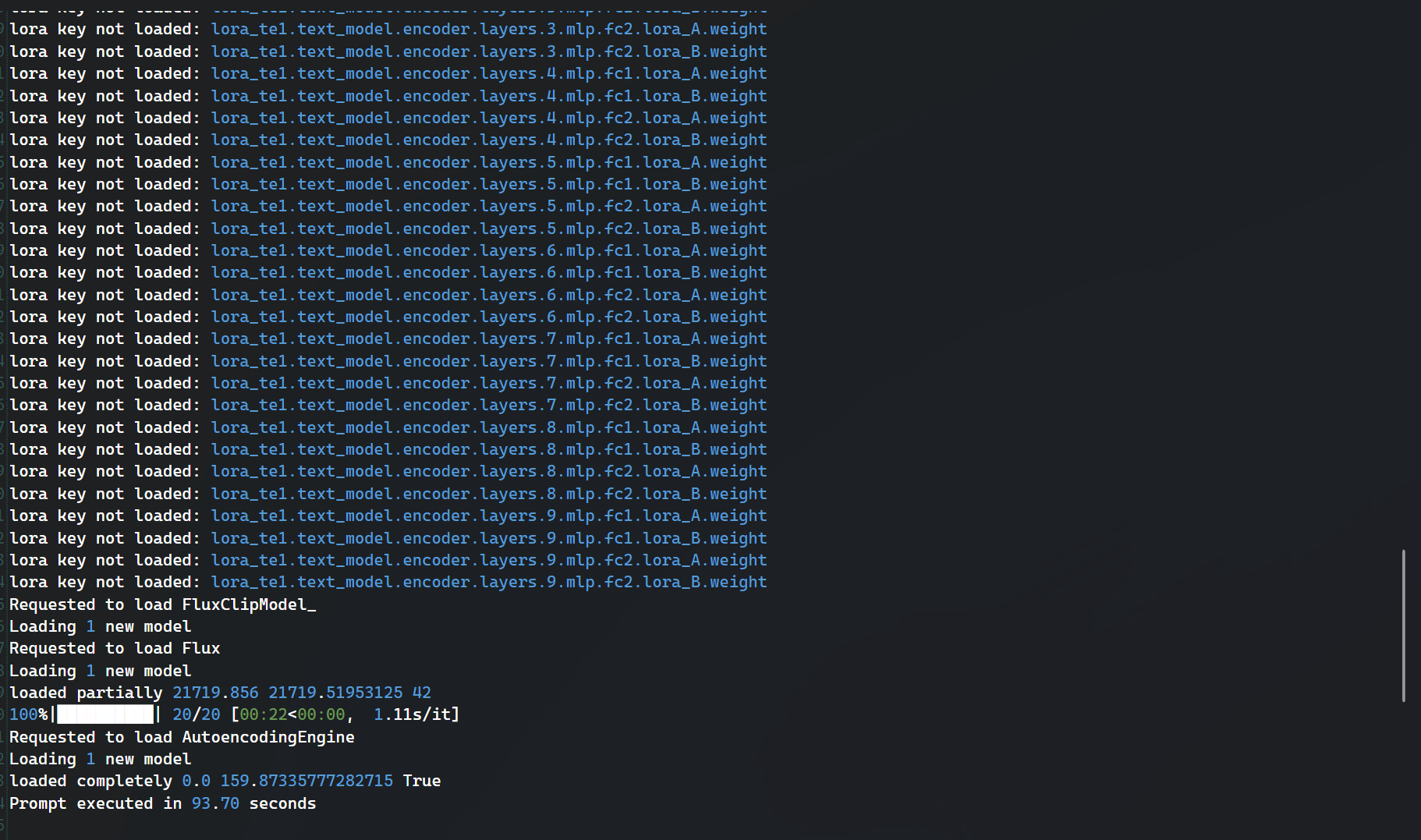

@Dr. Furkan Gözükara doesn't seem like comfy or forge accept loras trained with text encoder yet

text encoder training hasn't been added to kohya yet

so i dont know :d

i got it to work, in sample images its fine, but they don't work on comfy or forge yet

well i am waiting kohya

he said he found it today, gonna add it :d

For multi gpu training do the gpus need to be equal in vram? Like i have an 8 gb card im not using and a 12 gb card that is installed. Would the 8 gb card work for multi gpu training with the 12 gb one?

very likely

it loads onto each gpu cloning basically

Looks like one trainer might be getting closer to flux release. Their dev branch is now called flux. So fingers crossed

Thanks

anyone know how to add a lora on comfyUI for flux dev?

our configs ready :d

anyone know how to add a lora on comfyUI for flux dev? This is the workflow

You need to add a lora loader after the clip loader. Model from the flux loader goes to the model port on the left of the lora loader. Clip out goes to the lora loader too, and then the lora loader's model out goes to the scheduler, i believe
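The wiring described above can be sketched as a ComfyUI API-style graph, written here as a Python dict. Treat it as an illustration only: the node class names are ComfyUI's stock nodes as best recalled and should be checked against your install, all file names are hypothetical placeholders, and the sampler, guider and VAE nodes are left out.

```python
# node id -> class_type + inputs; a link is written as [source_node_id, output_index]
graph = {
    "1": {"class_type": "UNETLoader",  # the flux model loader
          "inputs": {"unet_name": "flux1-dev.safetensors", "weight_dtype": "default"}},
    "2": {"class_type": "DualCLIPLoader",
          "inputs": {"clip_name1": "t5xxl_fp16.safetensors",
                     "clip_name2": "clip_l.safetensors", "type": "flux"}},
    "3": {"class_type": "LoraLoader",  # takes both model and clip
          "inputs": {"model": ["1", 0], "clip": ["2", 0],
                     "lora_name": "my_flux_lora.safetensors",  # hypothetical file
                     "strength_model": 1.0, "strength_clip": 1.0}},
    "4": {"class_type": "CLIPTextEncode",  # prompt uses the lora-patched clip
          "inputs": {"clip": ["3", 1], "text": "example prompt"}},
    "5": {"class_type": "BasicScheduler",  # scheduler uses the lora-patched model
          "inputs": {"model": ["3", 0], "scheduler": "simple",
                     "steps": 20, "denoise": 1.0}},
}


def upstream(graph, node_id):
    """Return the class types of the nodes feeding directly into node_id."""
    sources = []
    for value in graph[node_id]["inputs"].values():
        if isinstance(value, list):  # links are [node_id, output_index]
            sources.append(graph[value[0]]["class_type"])
    return sources


if __name__ == "__main__":
    print(upstream(graph, "3"))
```

The point is that everything downstream (prompt encoding and scheduler) reads from the lora loader's outputs rather than from the original loaders.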

I have a basic workflow that i can post that shows it

Or post the image

This workflow allows you to harness the power of FLUX.1 [dev] within ComfyUI. Follow these steps to set up and run the model. The true "simplest" v...

![[Simple+Advanced] FLUX.1 ComfyUI Workflows - LoRA Support v2 (No CL...](https://images-ext-1.discordapp.net/external/na4wfXQQJrY5tc6YtV_EUDzN--VXAFHX87bvfz2-9Q0/https/image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/28572c0b-e9ca-4e7e-8e7a-4557e4a8ac57/width%3D1200/28572c0b-e9ca-4e7e-8e7a-4557e4a8ac57.jpeg)

Hopefully discord doesnt strip the data from the png

If it does thats the civ link to the json and workflow

It works for me and im noodle programming impaired so anyone should be able to use it

discord doesn't strip the metadata, it works
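To check a downloaded png yourself, you can list its text chunks and look for the workflow. This is a minimal stdlib-only sketch, and it assumes ComfyUI stores the graph in a PNG text chunk with the keyword `workflow`; it does not validate CRCs or decode the JSON.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_text_keys(data: bytes):
    """Return the keywords of all tEXt/iTXt/zTXt chunks found in PNG bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    keys = []
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if ctype in (b"tEXt", b"iTXt", b"zTXt"):
            # The keyword is everything before the first null byte.
            keys.append(body.split(b"\x00", 1)[0].decode("latin-1"))
        if ctype == b"IEND":
            break
        pos += 12 + length
    return keys


def has_workflow(data: bytes) -> bool:
    """True if a text chunk with the assumed 'workflow' keyword is present."""
    return "workflow" in png_text_keys(data)
```

Usage would be something like `has_workflow(open("downloaded.png", "rb").read())`, where the file name is a placeholder for whatever you saved from the chat.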