Hey @Dr. Furkan Gözükara, can I follow your RunPod instructions but use AWS instead?

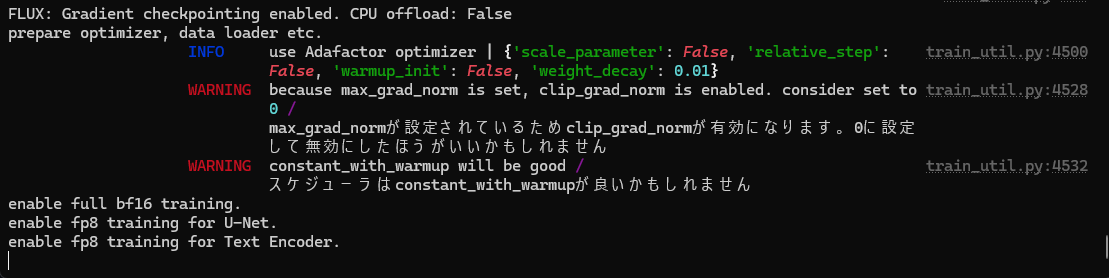

When --fp8_base is specified, a FLUX.1 model file stored in fp8 (float8_e4m3fn type) can be loaded directly. In flux_minimal_inference.py, the same file can also be loaded by specifying fp8 (float8_e4m3fn) for --flux_dtype.
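
For anyone wondering what float8_e4m3fn actually stores, here is a rough pure-Python sketch of how one byte in that format maps to a number: 1 sign bit, 4 exponent bits (bias 7), 3 mantissa bits, no infinities, and a single NaN pattern. The function name `decode_e4m3fn` is made up for illustration and is not part of the training scripts.

```python
import math


def decode_e4m3fn(byte: int) -> float:
    """Decode one float8_e4m3fn byte (0..255) into a Python float.

    Illustrative helper only (hypothetical name, not from any library).
    """
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a single byte in the range 0..255")

    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0x0F  # 4 exponent bits
    mantissa = byte & 0x07         # 3 mantissa bits

    # The "fn" variant has no infinities; only all-ones exponent plus
    # all-ones mantissa encodes NaN.
    if exponent == 0x0F and mantissa == 0x07:
        return math.nan

    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent of 1 - bias = -6.
        return sign * (mantissa / 8.0) * 2.0 ** -6

    # Normal: implicit leading 1, exponent bias of 7.
    return sign * (1.0 + mantissa / 8.0) * 2.0 ** (exponent - 7)


if __name__ == "__main__":
    for b in (0x38, 0x7E, 0x01, 0xC0, 0x7F):
        print(f"0x{b:02X} -> {decode_e4m3fn(b)}")
```

The takeaway is that the format only has 3 bits of mantissa and tops out at 448, which is why it halves the file size compared to fp16/bf16 at the cost of precision.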
