
Do you happen to know why there's little to no improvement when using Torch 2.5? I mentioned it a couple of messages ago.

Thanks for sharing! How many pics are in your training dataset, and how many training steps did you use?


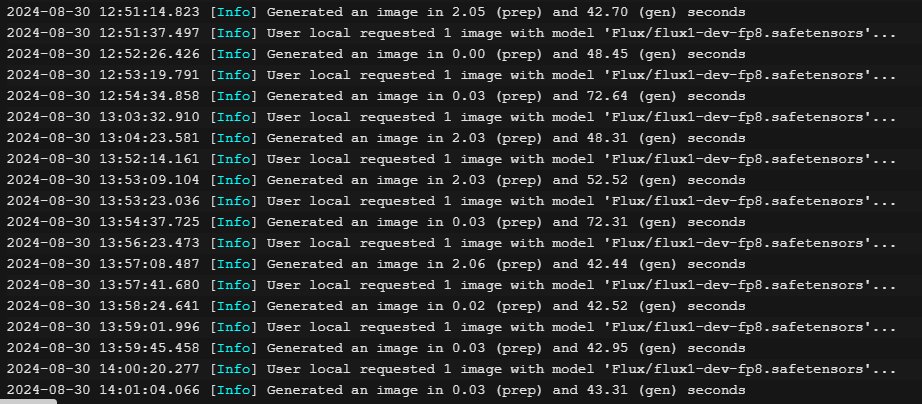

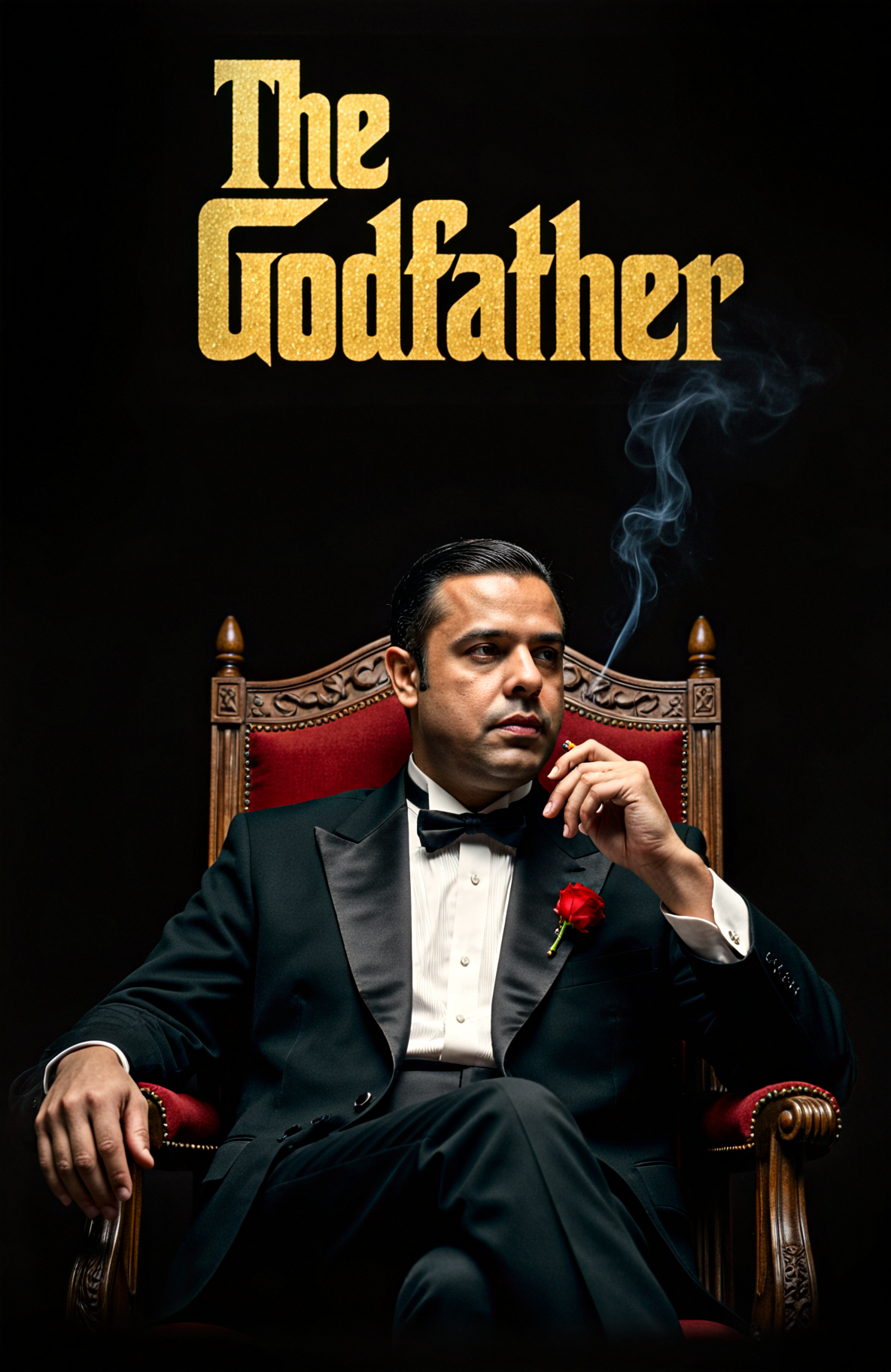

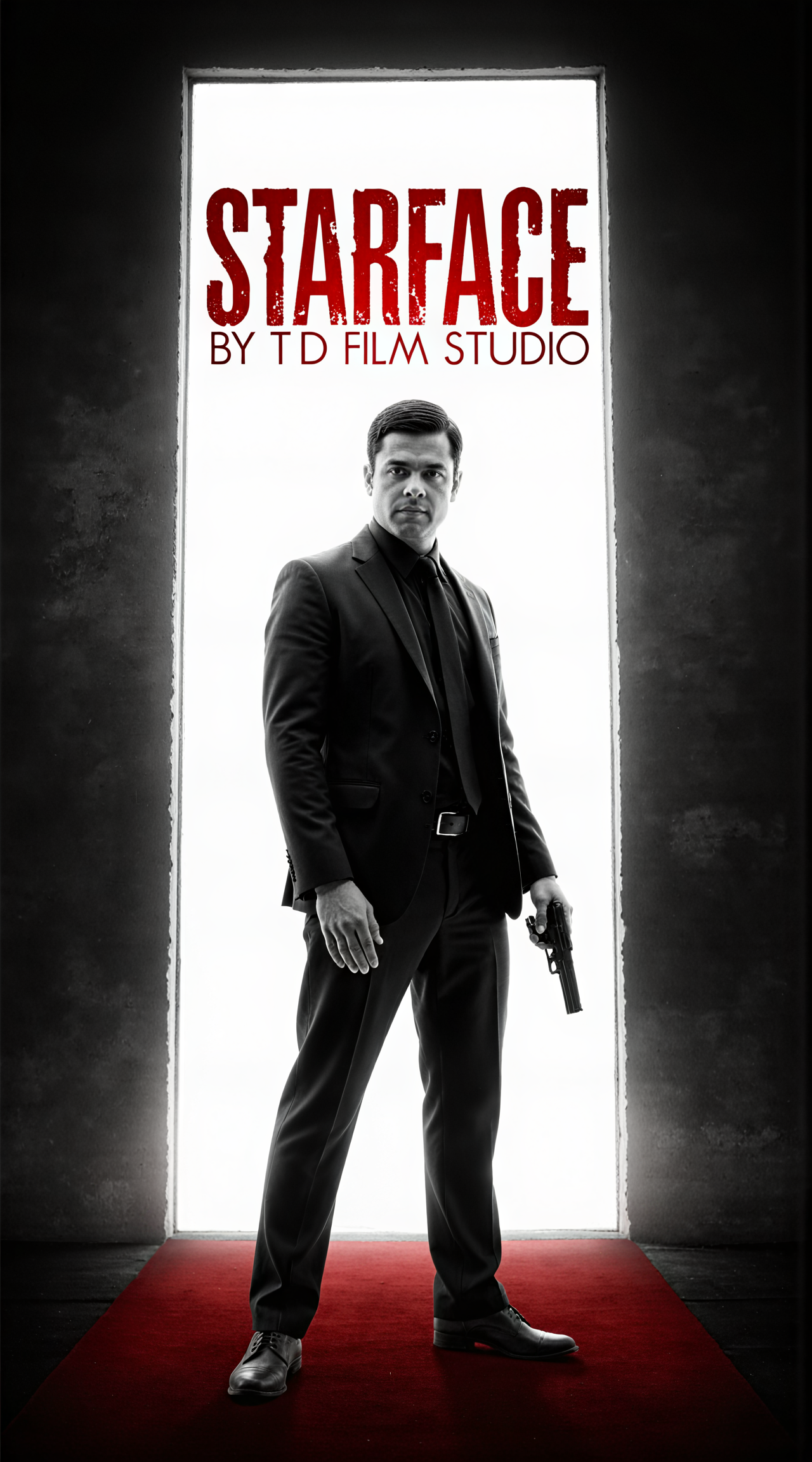

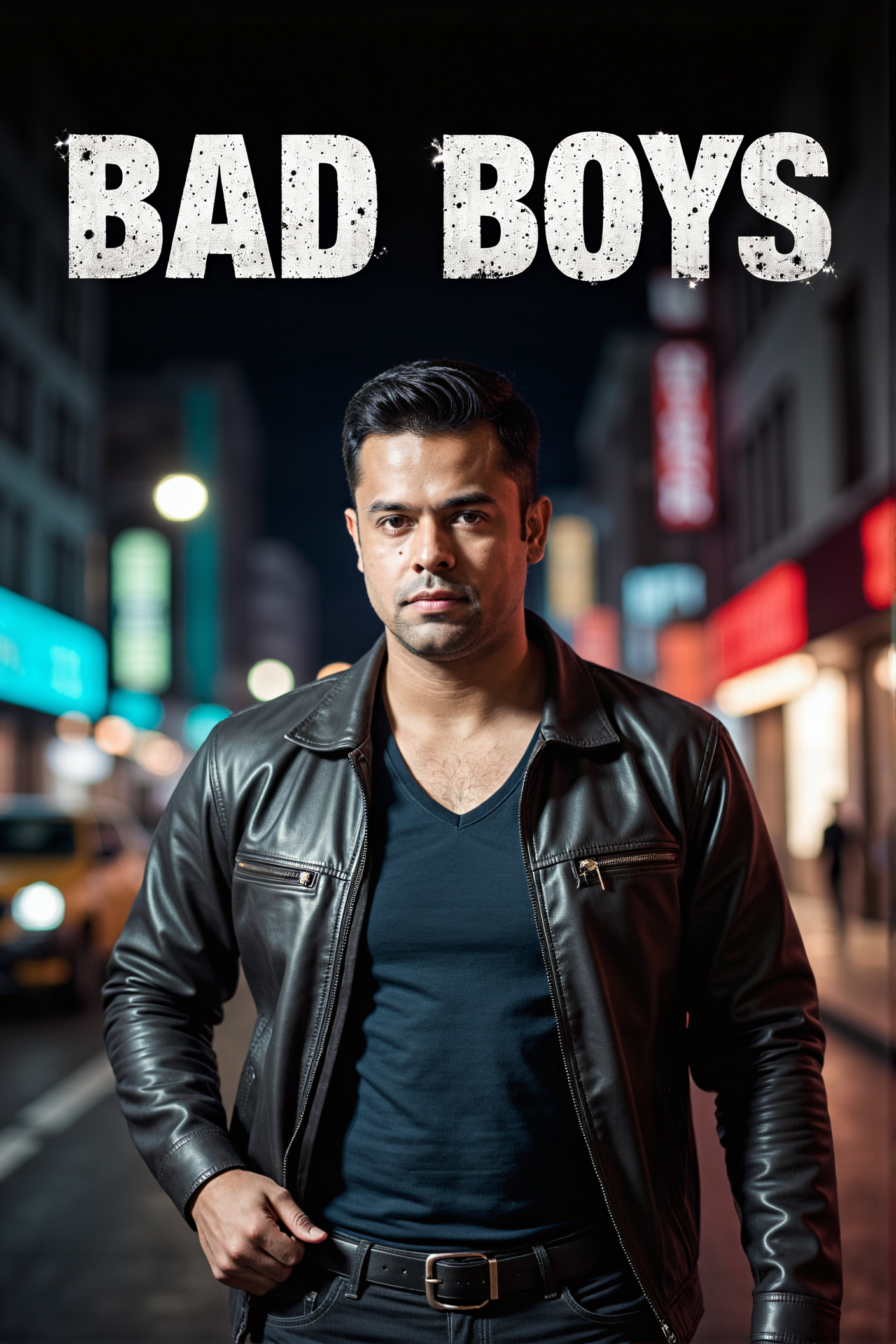

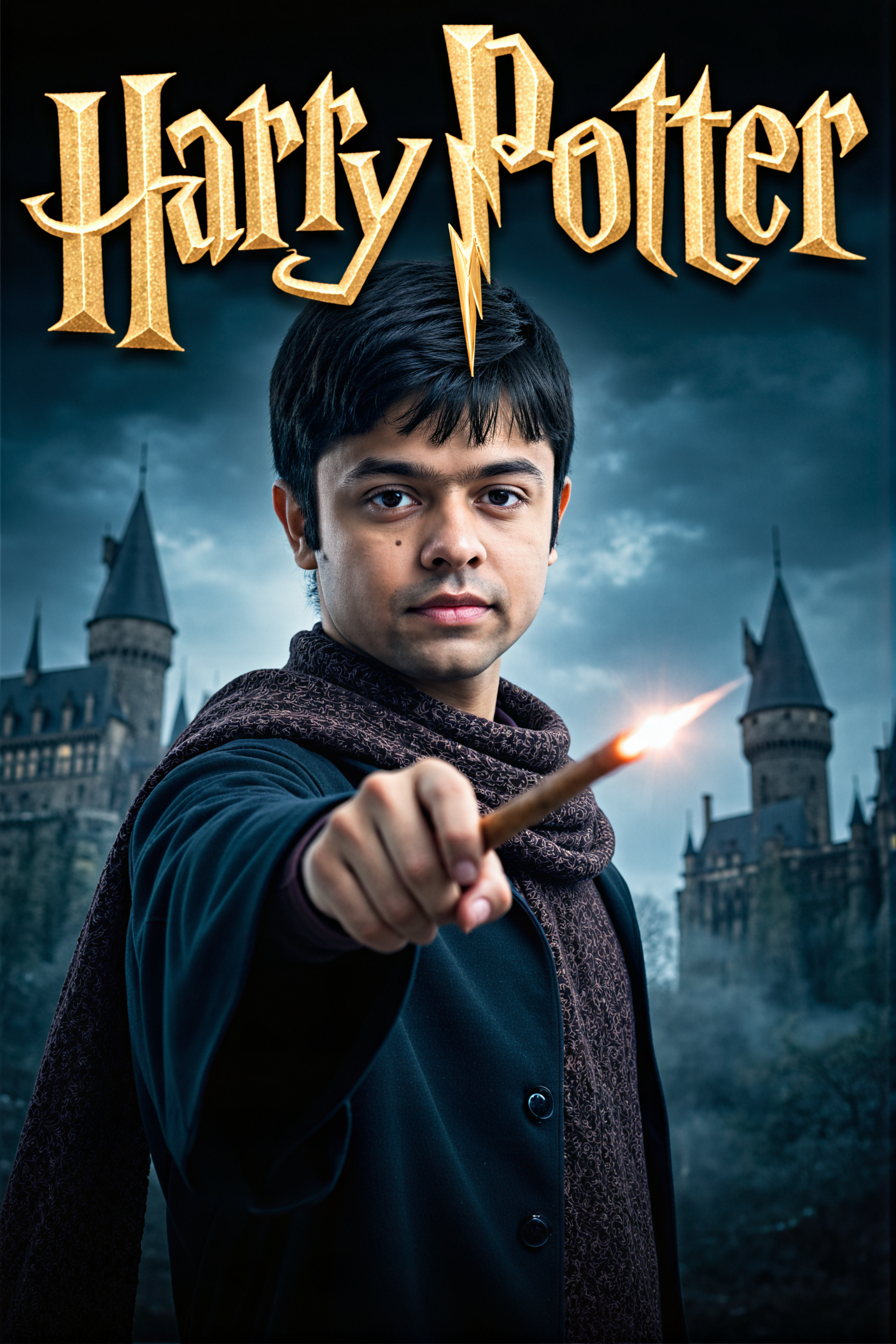

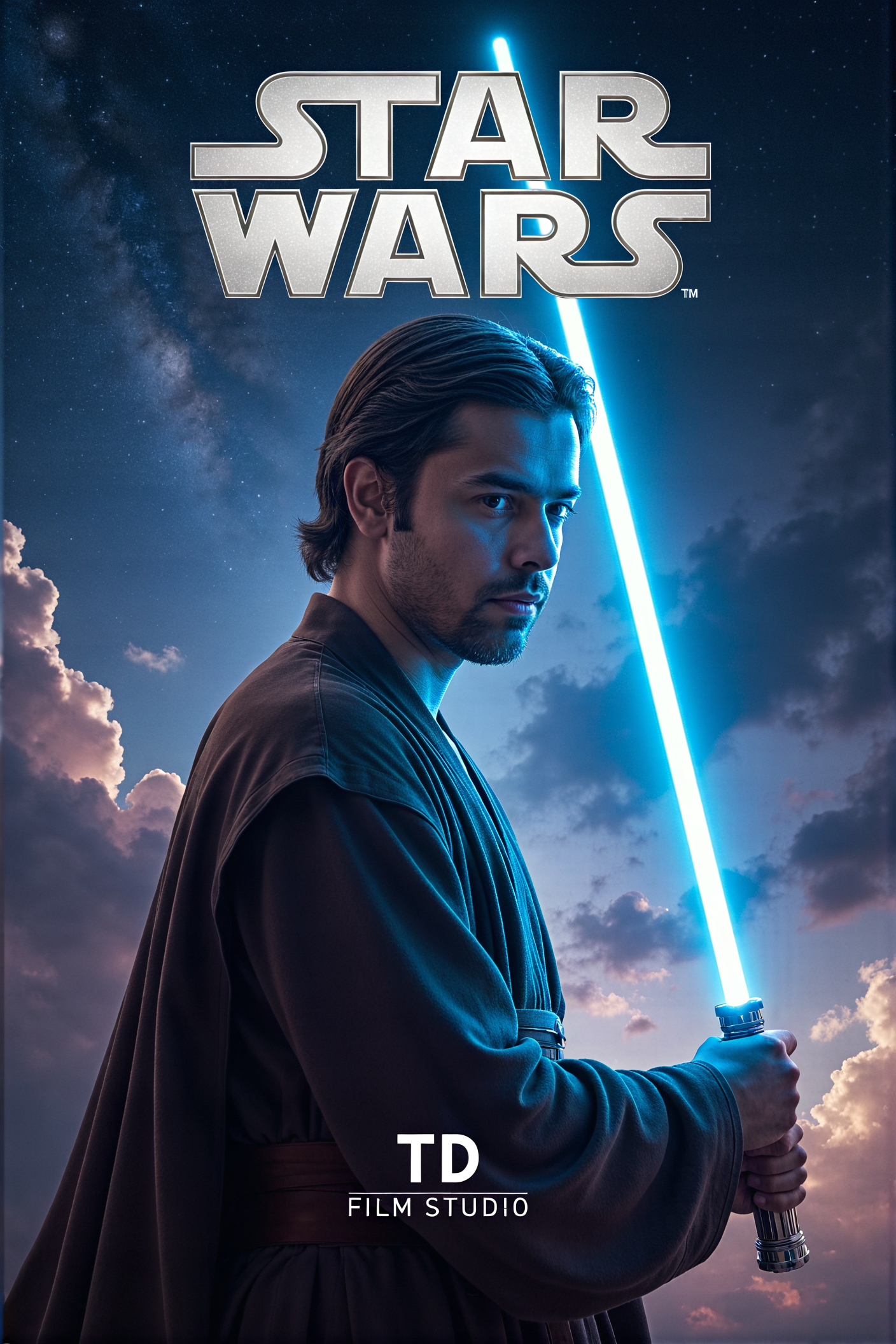

This LoRA was trained on only two layers: `single_transformer_blocks.7.proj_out` and `single_transformer_blocks.20.proj_out`.

If you don't want the dataset's style to transfer, you can train on the `single_transformer_blocks.7.proj_out` layer only. Depending on the dim, the file can then be below 4.5 MB (bf16). The model is powerful enough that even single-layer training works well, so let's take advantage of that.

A Flux LoRA with 99% face likeness and great flexibility can be as small as 580 KB (single layer, dim 16).
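The file sizes mentioned above follow from simple arithmetic on the LoRA matrices. The sketch below assumes that a Flux single transformer block's `proj_out` is a `Linear(15360 -> 3072)` (hidden size 3072 plus an MLP hidden size of 4 × 3072 concatenated), and that each adapted layer stores a down matrix (dim × in) and an up matrix (out × dim) in bf16 (2 bytes per value). The helper names are hypothetical, and safetensors headers and per-layer alpha values are ignored, so real files will be slightly larger.

```python
# Hypothetical size estimator for LoRAs on Flux single-block proj_out layers.
# Assumption: hidden size 3072, MLP hidden size 4 * 3072, so proj_out maps 15360 -> 3072.
HIDDEN = 3072
MLP_HIDDEN = 4 * HIDDEN
PROJ_OUT_IN = HIDDEN + MLP_HIDDEN  # 15360 input features
PROJ_OUT_OUT = HIDDEN              # 3072 output features


def lora_params(in_features: int, out_features: int, rank: int) -> int:
    """Parameters in one LoRA pair: down (rank x in) plus up (out x rank)."""
    return rank * in_features + out_features * rank


def lora_size_bytes(rank: int, num_layers: int = 1, bytes_per_param: int = 2) -> int:
    """Raw tensor bytes for `num_layers` adapted proj_out layers (bf16 by default)."""
    per_layer = lora_params(PROJ_OUT_IN, PROJ_OUT_OUT, rank)
    return per_layer * num_layers * bytes_per_param


if __name__ == "__main__":
    for rank, layers in [(16, 1), (16, 2), (128, 1)]:
        size = lora_size_bytes(rank, layers)
        print(f"dim {rank}, {layers} layer(s): {size:,} bytes ({size / 1024:.0f} KiB)")
```

Under these assumptions, a single layer at dim 16 comes to 589,824 bytes (576 KiB), in the same ballpark as the ~580 KB figure, while a single layer at dim 128 comes to exactly 4.5 MiB. Halving the dim roughly halves the file, since size scales linearly with both dim and layer count.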
