Has anyone tested how multiple LoRAs interact, or is there documentation somewhere that explains how it works in detail (assuming it doesn't get so technical that it goes over my head)?

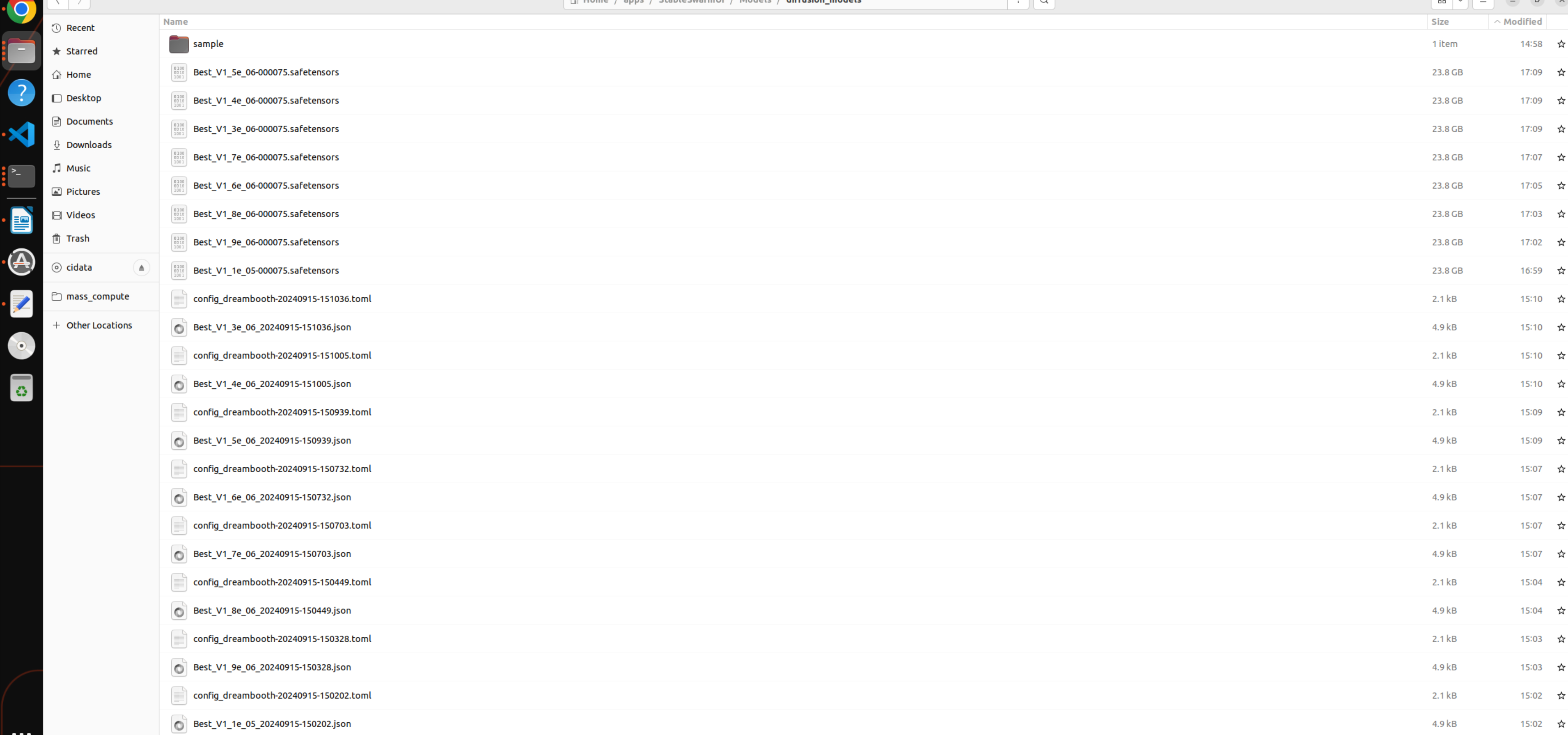

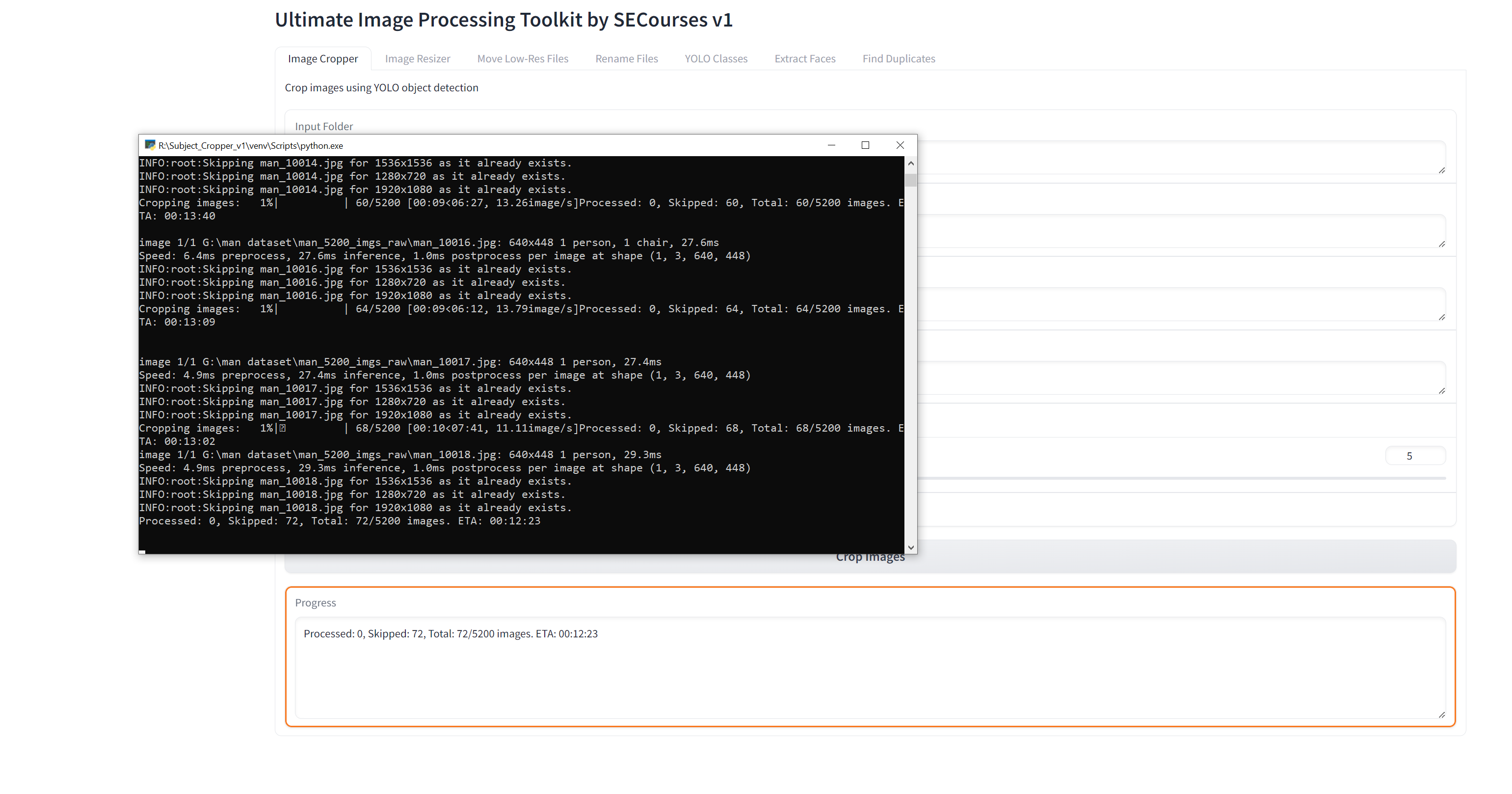

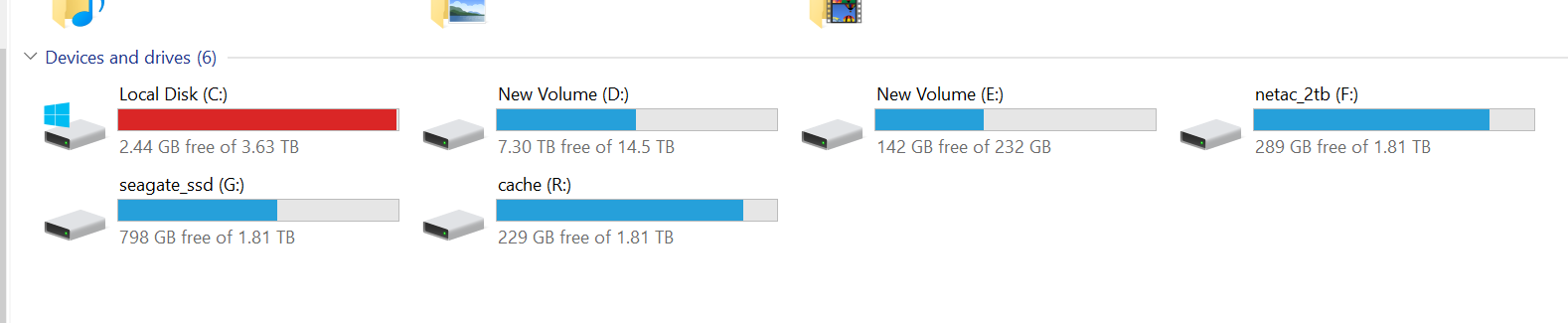

For instance, does LoRA load order matter? Is it better to train one model on two characters (like the one I did above with a character + mascot) than to train two separate LoRAs and use them both at the same time?