it is training a full checkpoint

23.8 GB unet file
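For reference, the 23.8 GB size follows from simple arithmetic: a checkpoint stored in a 16-bit dtype such as bf16 takes 2 bytes per parameter. This is a rough sketch that assumes dense weights stored in a single dtype. The 12e9 figure is the publicly quoted "12 billion parameters" for FLUX.1 [dev], so the result is only approximate:

```python
# Rough checkpoint-size arithmetic: size ~= parameter count x bytes per parameter.
# Assumption: weights stored densely in one dtype (no quantization, no optimizer state).
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}


def checkpoint_size_gb(num_params: float, dtype: str = "bf16") -> float:
    """Approximate file size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# "12 billion" is the advertised size of FLUX.1 [dev]; the exact count differs slightly.
for dtype in ("fp32", "bf16", "fp8"):
    print(dtype, round(checkpoint_size_gb(12e9, dtype), 1), "GB")
```

Under the same assumption, a fine-tuned checkpoint saved in the same dtype keeps exactly the same size. Training changes the values of the weights, not their number.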

ok time to do a big test

Mmm ok so training on a lora checkpoint ex. "best_version22-000150"

koha responded about the bleeding

i will also upload so far best checkpoint training config

link please

The translation to English of the Japanese text is:

"Unfortunately, I don't have any ideas either. The lack of publicly available technical details about Flux is making the problem more difficult. I think we have no choice but to wait for the community's research."


Viggle?

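@dsienra77 Unfortunately, I don't have any ideas either. The fact that Flux's technical details haven't been made public is making the problem harder.

I think we have no choice but to wait for the community's research.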

9/16/24, 12:39 AM

thicker beard lol

i wish flux pro was leaked

lol

i thought sd3 large would have been

i am pretty sure it is exactly the same 23.8 GB

we would directly use it with existing tools

hehe, maybe that fixes the bleeding problem if it is related to flux.dev being a distilled model

would be nice if Black Forest Labs open-sourced version 1 after releasing Flux 2 Pro

trying to find the best fine-tuning configs:

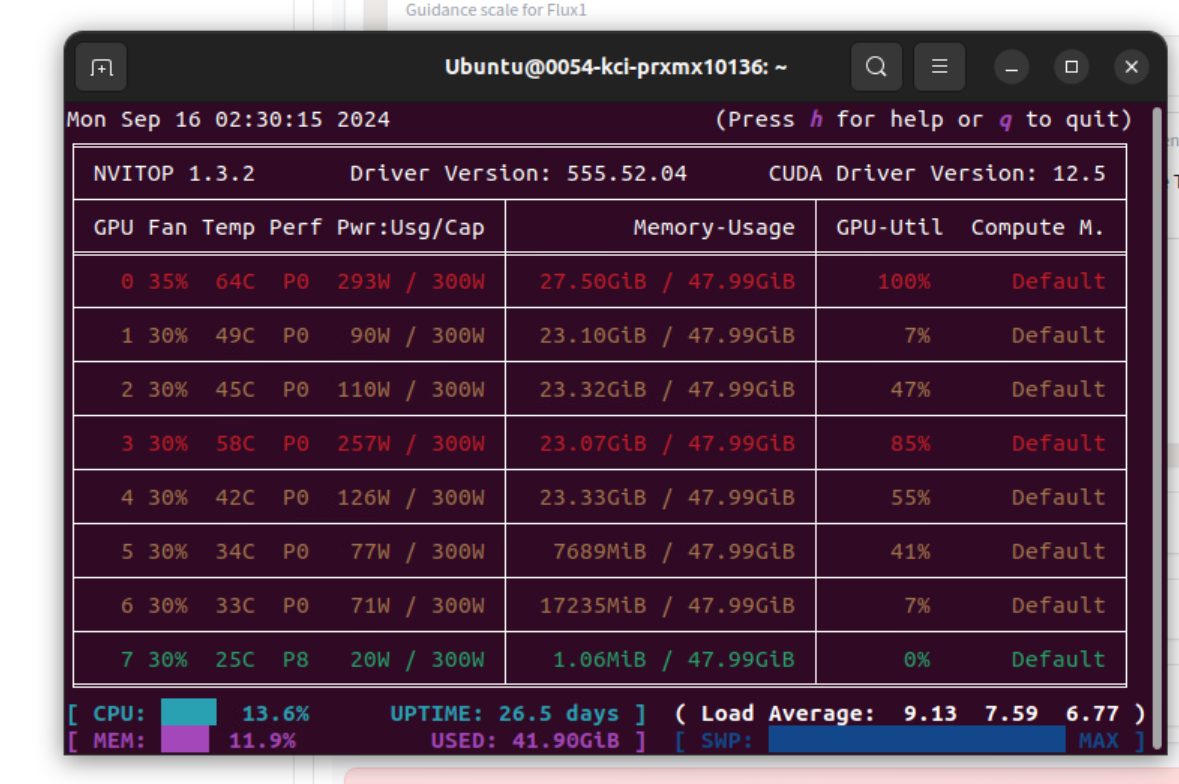

damn, fine tuning requires more than 16 GB :/

only 24 GB GPUs can train atm

haha this is weird

1 config uses 15.3 :d

nice, 24 GB GPUs can train at a pretty decent speed

configs added


have you tried the extracted lora?

you need to raise their weight for them to be usable
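Raising the weight of a LoRA simply scales its low-rank update before that update is added to the base weight. This is a minimal NumPy sketch that assumes the common formulation W' = W + multiplier × (alpha / rank) × (B @ A). `apply_lora` is a hypothetical helper, not the API of any specific tool:

```python
import numpy as np


def apply_lora(W, A, B, alpha, multiplier=1.0):
    """Return W + multiplier * (alpha / rank) * (B @ A).

    W: (out, in) base weight, A: (rank, in) down-projection, B: (out, rank) up-projection.
    """
    rank = A.shape[0]
    return W + multiplier * (alpha / rank) * (B @ A)


rng = np.random.default_rng(0)
W = rng.standard_normal((8, 8))
A = rng.standard_normal((4, 8))
B = rng.standard_normal((8, 4))

# Doubling the multiplier doubles how far the weight moves away from the base.
d1 = np.linalg.norm(apply_lora(W, A, B, alpha=4, multiplier=1.0) - W)
d2 = np.linalg.norm(apply_lora(W, A, B, alpha=4, multiplier=2.0) - W)
print(d2 / d1)  # 2, up to floating-point error
```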

i didn't

but fine tuning definitely better

started 8 more tests, need to sleep now, it's 6:44 am :/

the lowest VRAM usage is 15450 MB
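Whether a config like this fits on a 16 GB card is mostly arithmetic, but the margin is thin. This sketch assumes the 15450 figure is in MiB, as reported by nvidia-smi, and that a "16 GB" card exposes about 16 × 1024 = 16384 MiB. `reserved_mib` is a hypothetical allowance for the CUDA context, the display and other processes, so measure your own:

```python
def fits_on_gpu(peak_mib: float, card_gib: float, reserved_mib: float = 600.0) -> bool:
    """True if peak training usage plus a reserve fits in the card's memory.

    Assumptions: peak_mib is in MiB (as nvidia-smi reports it) and reserved_mib is a
    hypothetical allowance for the CUDA context, display and other processes.
    """
    total_mib = card_gib * 1024
    return peak_mib + reserved_mib <= total_mib


print(fits_on_gpu(15450, 16))                     # True: 16384 - 15450 = 934 MiB headroom
print(fits_on_gpu(15450, 16, reserved_mib=1000))  # False: the reserve no longer fits
```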

supposed to be no quality loss

finally! fine-tune time! can't wait )) LoRA is definitely not good enough...

speeds are very resolution dependent. I am getting 1.1 s/it at 512 but around 5 s/it at 1024, training a Flux LoRA. Would you expect parameters like LR to be the same for both, or have you seen that you need different parameters at higher resolutions?

Asking because if it's the same, you can make all your adjustments and tests using 512, and only go to 1024 for the final run
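One reason speed is so resolution dependent is that the transformer works on image tokens, not pixels. This sketch assumes Flux's 8x VAE downsampling followed by 2x2 patchification, which gives one token per 16×16 pixel block. It ignores text tokens:

```python
def flux_image_tokens(width: int, height: int, vae_factor: int = 8, patch: int = 2) -> int:
    """Number of image tokens the transformer sees at a given resolution.

    Assumes an 8x VAE downsample followed by 2x2 patchification (one token per 16x16 pixels).
    """
    step = vae_factor * patch
    return (width // step) * (height // step)


base = flux_image_tokens(512, 512)
for side in (512, 768, 1024):
    n = flux_image_tokens(side, side)
    # Linear layers scale with n; self-attention scales with n**2.
    print(side, n, "tokens,", (n / base) ** 2, "x attention cost vs 512")
```

Doubling the side length quadruples the token count, and self-attention cost grows with its square. A 1024 step being several times slower than a 512 step is therefore expected. This does not by itself answer whether LR should change between resolutions.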

Hey guys, I have a question. I trained a LoRA with my dataset and it went great. I saw there are some LoRAs out there, like photorealistic LoRAs that people made. Can I train my dataset images again on top of those LoRAs, or can't you do that? If I understand correctly, a LoRA is for training specific things into the model, right?

you could, by merging the lora into the Flux checkpoint first, and then train on that. Whether this improves the result for the Flux realistic Lora is open to debate, but your theory is correct.
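Merging a LoRA into a checkpoint bakes its scaled low-rank update into the corresponding base weights, and training then starts from the merged weights. This is a minimal NumPy sketch over a dict of weight matrices. The `(A, B, alpha)` layout and the `merge_lora_into_base` name are hypothetical. Real merge tools also have to map key names between the LoRA file and the checkpoint, which this skips:

```python
import numpy as np


def merge_lora_into_base(base, lora, multiplier=1.0):
    """Return a copy of `base` with each LoRA update added in.

    Hypothetical layout: base[name] is an (out, in) array and lora[name] = (A, B, alpha)
    with A of shape (rank, in) and B of shape (out, rank).
    """
    merged = {name: w.copy() for name, w in base.items()}
    for name, (A, B, alpha) in lora.items():
        rank = A.shape[0]
        merged[name] += multiplier * (alpha / rank) * (B @ A)
    return merged


rng = np.random.default_rng(0)
base = {"attn.q": rng.standard_normal((8, 8)), "attn.k": rng.standard_normal((8, 8))}
# A style LoRA that only touches one layer.
lora = {"attn.q": (rng.standard_normal((2, 8)), rng.standard_normal((8, 2)), 2.0)}

merged = merge_lora_into_base(base, lora)
print(np.allclose(merged["attn.k"], base["attn.k"]))  # untouched layer: True
print(np.allclose(merged["attn.q"], base["attn.q"]))  # merged layer: False
```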

I have trained in the past on an SDXL checkpoint merged like that, because I knew I wanted to use it with another Lora that negatively affected my lora, unless trained like that


I don't know if there are already good merging tools for Flux (let me know if you find one), but there are for SDXL

oh ok i understand

thank you

np. that's actually something I plan to try, with the Flux realistic Lora

what speeds do you get on 16 GB vram? does it require the very slow model splitting?

I have tried the new configurations for lora training and the results are getting better, thanks!

i still see no reason to do a full fine-tune and get a 23 GB model compared to a few MB for a LoRA.

I already have around 20 LoRAs and the results are very good. But will it be possible to extract a LoRA from the fine-tuned model? Because that could be interesting.
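Extracting a LoRA from a full fine-tune is commonly done with a truncated SVD of each layer's weight difference, keeping only the top `rank` directions. This is a minimal NumPy sketch on a single synthetic matrix. `extract_lora` is a hypothetical helper, not a specific tool's implementation:

```python
import numpy as np


def extract_lora(W_base, W_tuned, rank):
    """Approximate W_tuned - W_base with a rank-`rank` product B @ A via truncated SVD."""
    delta = W_tuned - W_base
    U, S, Vt = np.linalg.svd(delta, full_matrices=False)
    B = U[:, :rank] * S[:rank]  # (out, rank): singular values folded into B
    A = Vt[:rank, :]            # (rank, in)
    return A, B


rng = np.random.default_rng(0)
W_base = rng.standard_normal((64, 64))
# Synthetic dense, full-rank "fine-tune" delta.
W_tuned = W_base + 0.05 * rng.standard_normal((64, 64))

for rank in (4, 16, 64):
    A, B = extract_lora(W_base, W_tuned, rank)
    delta = W_tuned - W_base
    rel_err = np.linalg.norm(delta - B @ A) / np.linalg.norm(delta)
    print(rank, round(float(rel_err), 3))  # relative error shrinks as rank grows
```

A low rank only captures part of a dense fine-tuning delta. That may be one reason extracted LoRAs need a higher weight to be usable, as mentioned earlier in the chat.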
