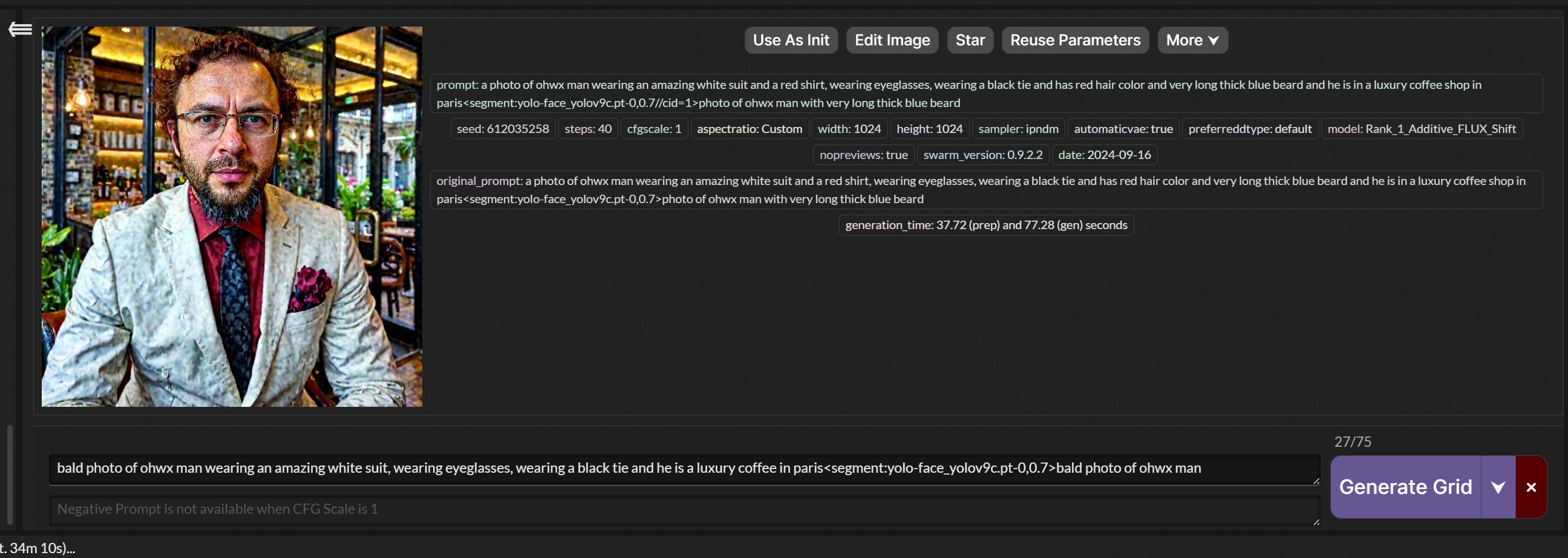

Okay, but that is not for LoRA. Check that out another time, as it was a 48-hour run with 100 images in the dataset. As for this LoRA:

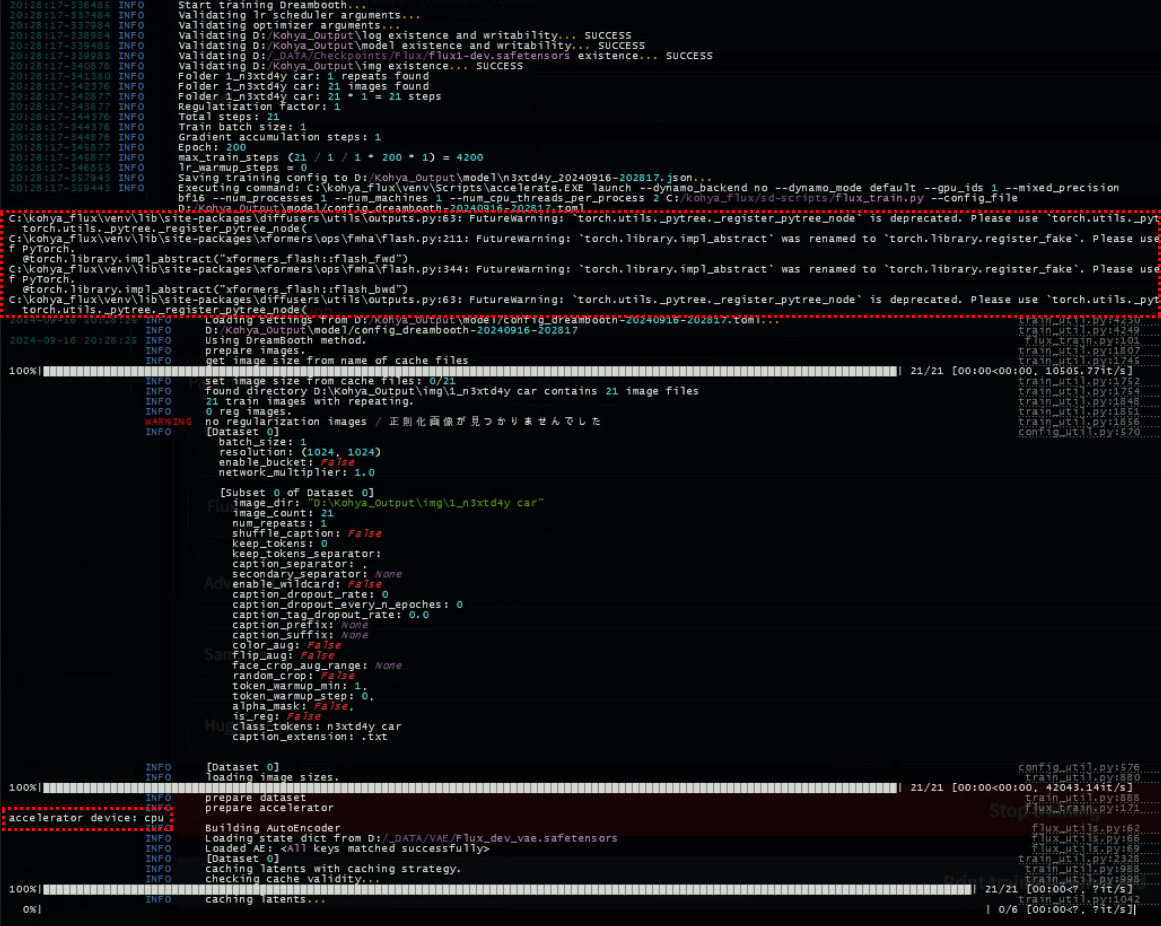

is not supported because:

max(query.shape[-1] != value.shape[-1]) > 128

xFormers wasn't build with CUDA support

attn_bias type is <class 'NoneType'>

operator wasn't built - see python -m xformers.info for more info

is not supported because:

max(query.shape[-1] != value.shape[-1]) > 256

xFormers wasn't build with CUDA support

operator wasn't built - see python -m xformers.info for more info

is not supported because:

xFormers wasn't build with CUDA support

operator wasn't built - see python -m xformers.info for more info

is not supported because:

max(query.shape[-1] != value.shape[-1]) > 32

xFormers wasn't build with CUDA support

dtype=torch.bfloat16 (supported: {torch.float32})

operator wasn't built - see python -m xformers.info for more info
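Every operator in the log above is rejected with "xFormers wasn't build with CUDA support", so a quick look at the local install may help before retrying the training run. The snippet below is only a rough sketch, not an official xFormers tool: the `diagnose` helper and its dictionary keys are made-up names for illustration. It reports whether PyTorch is installed, which CUDA version it was compiled against (if any), whether a GPU is visible, and which xFormers version is installed.

```python
import importlib.metadata
import importlib.util


def diagnose():
    """Collect basic facts about the local PyTorch / xFormers install.

    Hypothetical helper for illustration; the keys below are not part of any library.
    """
    report = {
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "torch_cuda_build": None,   # CUDA version torch was compiled against; None on CPU-only builds
        "cuda_available": False,    # whether torch can actually see a CUDA device
        "xformers_version": None,   # installed xformers package version, if any
    }

    if report["torch_installed"]:
        try:
            import torch

            report["torch_cuda_build"] = torch.version.cuda
            report["cuda_available"] = torch.cuda.is_available()
        except Exception as exc:  # a broken install can fail at import time
            report["torch_error"] = repr(exc)

    try:
        # Read package metadata only, so xformers itself is never imported here.
        report["xformers_version"] = importlib.metadata.version("xformers")
    except importlib.metadata.PackageNotFoundError:
        pass

    return report


if __name__ == "__main__":
    for key, value in diagnose().items():
        print(f"{key}: {value}")
```

If `torch_cuda_build` comes back as `None`, PyTorch itself is a CPU-only build, which is one plausible reason the xFormers operators report no CUDA support. If it shows a CUDA version but the errors persist, the installed xFormers package may not match that PyTorch build; `python -m xformers.info`, as the log suggests, lists which operators were actually built.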
