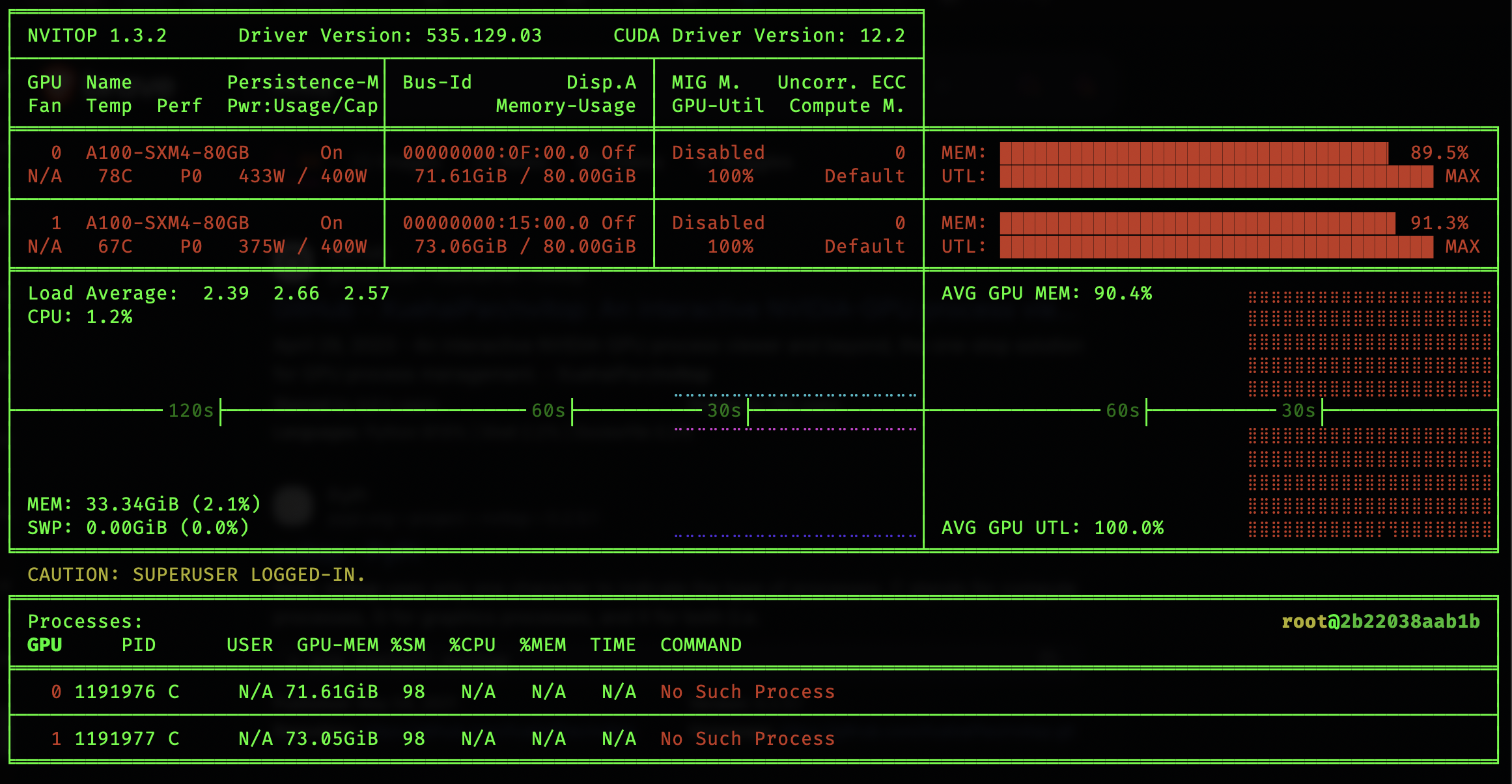

Hi there! @Furkan Gözükara SECourses, I read your latest and greatest post about multi-GPU Dreambooth finetuning for Flux. Please tell me if I got this right: if you want to use multiple GPUs, each one must have ≥80 GB of VRAM (so, top-of-the-line A100s or H100s). Even then, you don't get a linear decrease in processing time, at least with A100s (2x A100s don't give you 2x the speed). Thus, for that money (at least on RunPod), you're better off with one L40S.

However, what about 4x A6000s? Those don't have 80 GB of VRAM. Are they still cost-effective? EDIT: I'm referring to Massed Compute's 4x A6000s at half price with your special code, as well as those at full price.
