Crawler stopped after encountering a 404

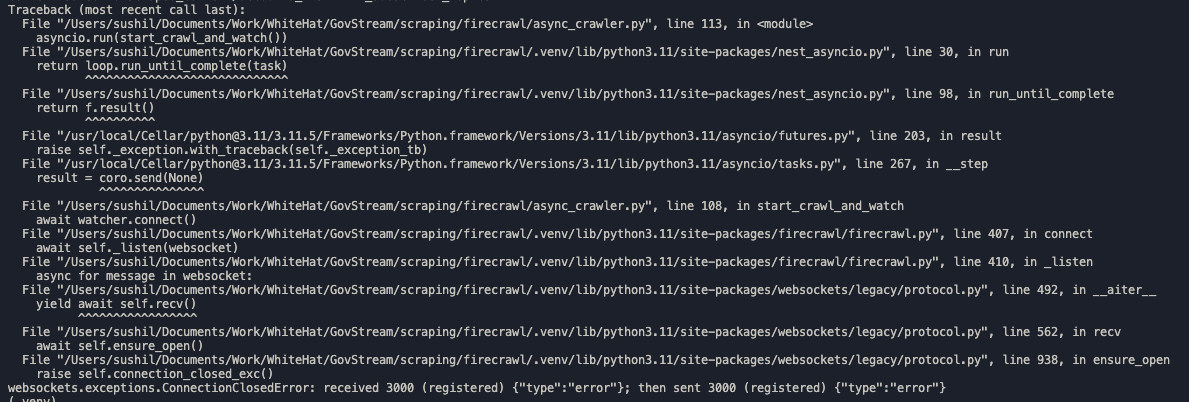

I am using start_crawl_and_watch. When the crawler encounters a broken page (404 error), it stops with this error:

websockets.exceptions.ConnectionClosedError: received 3000 (registered) {"type":"error"}; then sent 3000 (registered) {"type":"error"}

It doesn't call back to the on_error hook.
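Until the SDK reliably fires its own error event, one defensive option is to wrap the watcher so that any exception escaping it (such as the ConnectionClosedError above) is routed to your on_error handler. This is a minimal sketch under stated assumptions: `watch_with_error_hook` is a hypothetical helper, `connect` stands for whatever zero-argument coroutine starts your watcher (the exact watcher API isn't shown in this thread), and the `{"error": ...}` payload shape is an assumption, not the SDK's confirmed event format.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict


# Hypothetical helper (not part of the Firecrawl SDK): await the watcher and,
# if it raises instead of firing an error event, call on_error ourselves.
async def watch_with_error_hook(
    connect: Callable[[], Awaitable[None]],
    on_error: Callable[[Dict[str, Any]], None],
) -> bool:
    """Await connect(); return True on a clean finish, False if on_error was called."""
    try:
        await connect()
        return True
    except Exception as exc:  # e.g. websockets.exceptions.ConnectionClosedError
        # On Python 3.8+ asyncio.CancelledError is not an Exception subclass,
        # so task cancellation still propagates past this handler.
        # Assumed payload shape: {"error": "<exception type>: <message>"}.
        on_error({"error": f"{type(exc).__name__}: {exc}"})
        return False
```

Usage would look like `asyncio.run(watch_with_error_hook(start_watcher, on_error))`, where `start_watcher` is your own coroutine function. The wrapper only guarantees you are notified; it does not resume the crawl.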

ccing @mogery here to take a look

@sushilsainju can you send me the ID of the crawl please?

0eb7a6c7-0c05-4557-ad5b-59da52b3174e

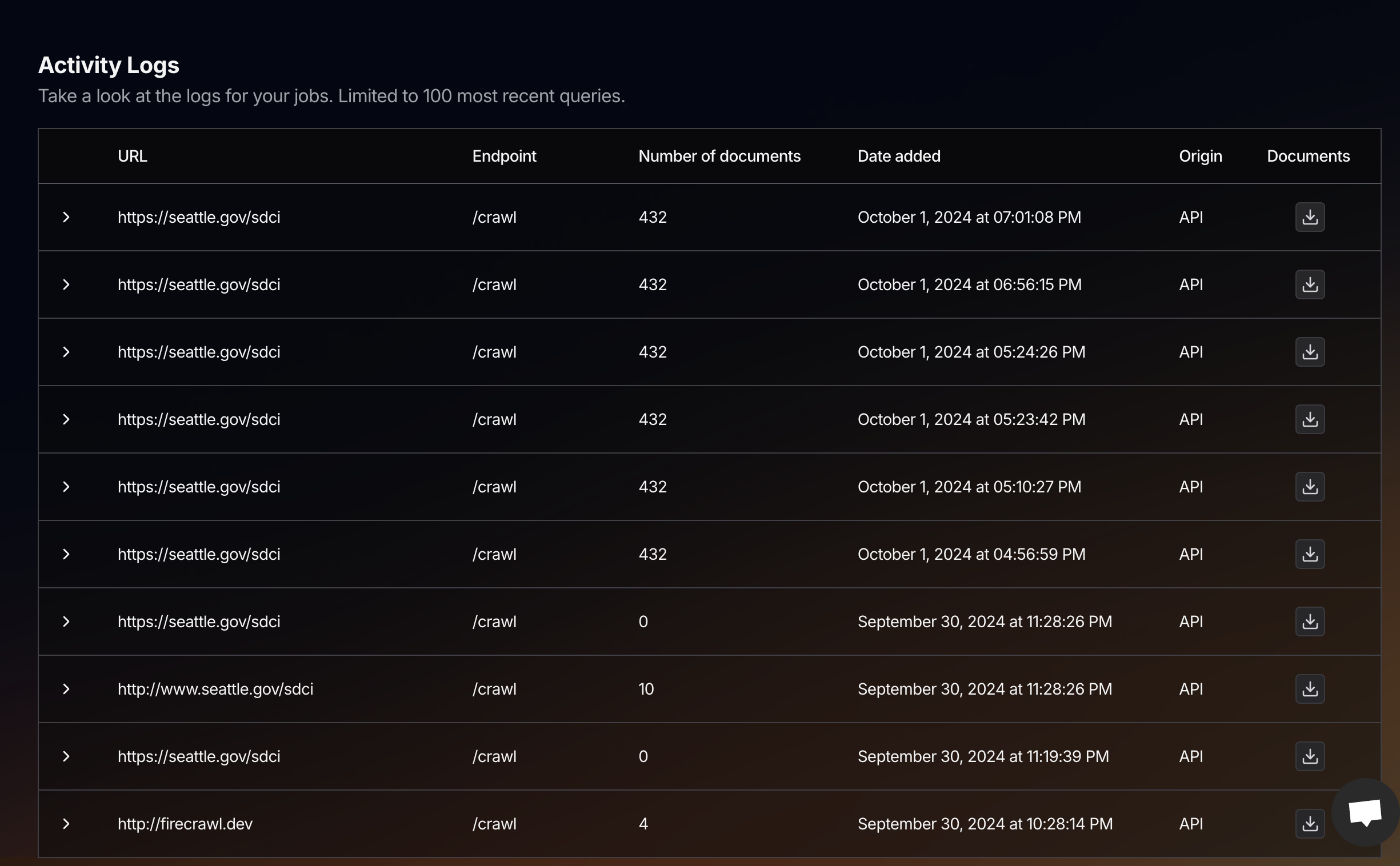

The failed session doesn't appear in the activity log either.

It also seems to have used up a significant number of my credits, almost 10K credits per request, which means I've used nearly 30K credits on this start_crawl_and_watch call without any useful result. Attached are screenshots of my latest usage and activity logs.

Hey @sushilsainju, while mogery investigates, can you DM me your email? I will put the 30k credits back into your account.

It's sushil@whitehatengineering.com

Hope you guys can fix the issue soon.

Thanks! Just added 30k there 🙂

Yes we will! @mogery is on it!

Thanks!

Is there any settings/config that would save the content of each scraped page to an individual text file?

I am trying to achieve this in the on_document hook.
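Whether or not there is a built-in setting for this, the on_document hook can write each page itself. The sketch below assumes each document is a dict shaped like `{"markdown": ..., "metadata": {"sourceURL": ...}}`; that shape, and the helper names `url_to_filename` and `save_document`, are hypothetical and should be checked against the payload your SDK version actually delivers.

```python
import re
from pathlib import Path
from typing import Any, Dict


def url_to_filename(url: str) -> str:
    """Turn a page URL into a filesystem-safe .txt filename (hypothetical helper)."""
    stripped = re.sub(r"^https?://", "", url).rstrip("/")
    # Replace every run of characters outside [A-Za-z0-9._-] with one underscore.
    safe = re.sub(r"[^A-Za-z0-9._-]+", "_", stripped)
    return (safe or "index") + ".txt"


def save_document(doc: Dict[str, Any], out_dir: Path) -> Path:
    """Write one scraped page's markdown to its own text file and return the path.

    Assumed document shape: {"markdown": "...", "metadata": {"sourceURL": "..."}}.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    url = doc.get("metadata", {}).get("sourceURL", "unknown")
    path = out_dir / url_to_filename(url)
    path.write_text(doc.get("markdown", ""), encoding="utf-8")
    return path
```

Inside the hook you would call `save_document(...)` with the page dict from the event. Note that two URLs that differ only in stripped characters map to the same filename and the later page overwrites the earlier one; appending a short hash of the URL would avoid that.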

I think this should be 40K credits. As you can see in my activity log, I've barely used around 5K credits; I think I lost the rest on the failed start_crawl_and_watch call.

We are still trying to test out Firecrawl for our product.

Any update on this, @mogery @Adobe.Flash?

Should be fixed. Crawls don't fail anymore.

I'm still facing issues with crawl_url_and_watch. It crashes unexpectedly, I cannot find the failed crawl requests on the dashboard, and it does not call back to the on_error hook either.

Hey sushil, sorry for the late turnaround on this. ccing @mogery to take another look.

We are planning to cancel our subscription until this issue is fixed; we are not getting what we expected.

Hey, sorry again. We're working on it asap.

I hope our monthly subscription will be extended, since we haven't been able to use the service properly.

Hi, any updates on this?

I was using the playground to crawl the website. While it was in progress, scraping around 9,000+ pages, I refreshed the page and navigated to the activity logs, but I could not find my latest crawl in the activity log table.

I can't see it in my usage charts either.

Crawls are added to the activity log once the entire run is finished.

How about this?