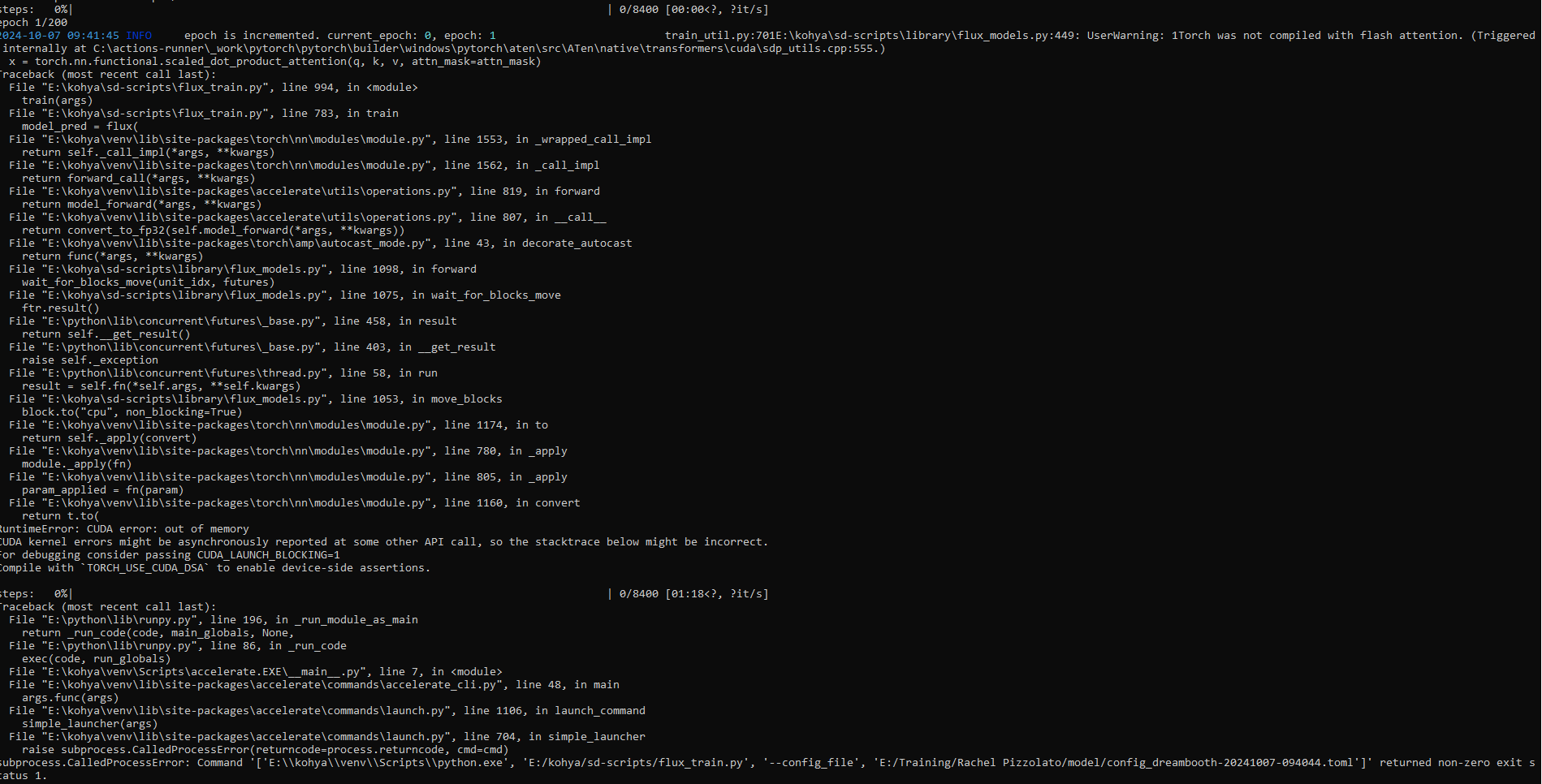

    Traceback (most recent call last):
      File "Q:\kohya_ss\sd-scripts\train_db.py", line 529, in <module>
        train(args)
      File "Q:\kohya_ss\sd-scripts\train_db.py", line 123, in train
        text_encoder, vae, unet, load_stable_diffusion_format = train_util.load_target_model(args, weight_dtype, accelerator)
      File "Q:\kohya_ss\sd-scripts\library\train_util.py", line 4825, in load_target_model
        text_encoder, vae, unet, load_stable_diffusion_format = _load_target_model(
      File "Q:\kohya_ss\sd-scripts\library\train_util.py", line 4780, in _load_target_model
        text_encoder, vae, unet = model_util.load_models_from_stable_diffusion_checkpoint(
      File "Q:\kohya_ss\sd-scripts\library\model_util.py", line 1005, in load_models_from_stable_diffusion_checkpoint
        converted_unet_checkpoint = convert_ldm_unet_checkpoint(v2, state_dict, unet_config)
      File "Q:\kohya_ss\sd-scripts\library\model_util.py", line 267, in convert_ldm_unet_checkpoint
        new_checkpoint["time_embedding.linear_1.weight"] = unet_state_dict["time_embed.0.weight"]
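
The traceback is cut off before the exception line, so the actual error type is not shown here. If it turns out to be a `KeyError` on `time_embed.0.weight`, one thing worth checking is whether the checkpoint file contains the UNet keys that line 267 reads. Below is a minimal sketch of such a check. The `model.diffusion_model.` prefix is an assumption based on the usual LDM checkpoint layout, and the helper names (`unet_keys`, `describe_checkpoint`, `read_safetensors_keys`) are made up for illustration; they are not part of sd-scripts.

```python
# Assumptions (not confirmed by the post): UNet weights are stored under the
# "model.diffusion_model." prefix, and the converter looks up the key below
# after stripping that prefix.
UNET_PREFIX = "model.diffusion_model."
EXPECTED_KEY = "time_embed.0.weight"


def unet_keys(all_keys):
    """Return the UNet key names with the assumed LDM prefix stripped."""
    return {k[len(UNET_PREFIX):] for k in all_keys if k.startswith(UNET_PREFIX)}


def describe_checkpoint(all_keys):
    """Summarize whether the key read on line 267 is present."""
    all_keys = list(all_keys)
    stripped = unet_keys(all_keys)
    return {
        "total_keys": len(all_keys),
        "unet_keys": len(stripped),
        "has_time_embed": EXPECTED_KEY in stripped,
    }


def read_safetensors_keys(path):
    """List tensor names in a .safetensors file (reads names only)."""
    # Imported here so the helpers above work without the package installed.
    from safetensors import safe_open

    with safe_open(path, framework="pt") as f:
        return list(f.keys())
```

To try it, call `describe_checkpoint(read_safetensors_keys(path))` with the path to the model passed to the trainer. If `has_time_embed` comes back `False`, the file probably does not have the layout this loader expects (for example, it may not be a full checkpoint of the model family `train_db.py` handles), but that is only a guess until the final line of the traceback is known.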