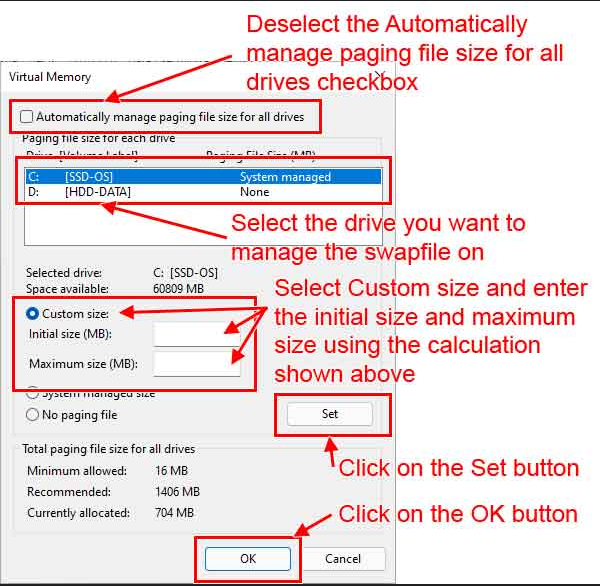

There you set your virtual memory size.

64 GB in your case.

Thanks, I already set the maximum to 64 GB. For the initial size I used the old one, around 5 GB, but this did not resolve the issue.

I did my first test, and the results are very promising, though it's a little undertrained at the moment. At the end I resumed the training with regularization images and there was still no bleeding. Now I'm going to start a new training from scratch with 11 people at the same time, family and friends. I'm going to use regularization images from the class "person" because I'm training people of different ages and genders. The regularization images are captioned: I downloaded the dataset, including captions, a while ago, and it contains very diverse people of different races and ages, from babies to elderly people, male and female. The captions of my subjects are simply "name class". Let's see how it behaves.
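For anyone wanting to reproduce the simple "name class" captioning for a multi-person dataset, here is a minimal sketch. It assumes your trainer is configured to read a sidecar `.txt` caption with the same basename as each image (a common convention in kohya-style setups), and it assumes a hypothetical file naming scheme where each image starts with the subject's name followed by an underscore (e.g. `alice_001.jpg`). The function name `write_captions` and the naming scheme are illustrative only.

```python
from pathlib import Path

# Hypothetical: image extensions treated as training images.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".webp"}


def write_captions(dataset_dir: Path, class_word: str = "person") -> list:
    """Write a "name class" caption next to every image in dataset_dir.

    Assumes (hypothetically) that each filename starts with the subject's
    name followed by an underscore, e.g. "alice_001.jpg" -> "alice person".
    Returns the list of caption files written.
    """
    written = []
    # sorted() snapshots the directory, so new .txt files are not revisited
    for img in sorted(dataset_dir.iterdir()):
        if img.suffix.lower() not in IMAGE_EXTS:
            continue  # skip captions, notes, and other non-image files
        name = img.stem.split("_")[0].lower()
        caption_path = img.with_suffix(".txt")
        caption_path.write_text(f"{name} {class_word}\n", encoding="utf-8")
        written.append(caption_path)
    return written
```

Keeping the class word identical to the one used for the regularization set ("person" here) is the point of the scheme: the unique name token carries the identity, while the class token is shared with the regularization images.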

Do you have enough free space on the selected disk? Are you using the correct preset for your system?

Yes, I have half a TB of free space on the M.2 SSD. I downloaded Best_Configs_v5.zip and used the 12GB one (12GB_GPU_10800MB_17.2_second_it_Tier_1.json).

Idk, it should work. Let's wait for Dr. Furkan, maybe he can help.

In Task Manager my GPU settings say 16 GB total shared GPU memory. Is that correct?

Yes, alright, thank you.

You're welcome.

I want to try training myself, but before that I need more info. Normally, when I train a LoRA of one person and the background has some people in it, the likeness of the person I trained bleeds into the people in the background. So, in your theory, by training with the open Flux dev checkpoint we can eliminate this problem, right?

@Dr. Furkan Gözükara Is it possible to train a Flux LoRA or Flux finetune from a Flux Q8 GGUF model?

The most important thing, and the main difference with this model, is that I can train multiple people of the same class without bleeding. With regular Flux dev, for example, I trained 3 men and the model mixed all the faces together; at inference, prompting for one person returned a mix of all of them. Now the problem is gone with flux-dev-de-distill. It behaves like SDXL but with much better quality. I'm still experimenting, but it is very promising so far.

So that means 1 LoRA with 3 active keywords is possible, but can they all appear together in 1 image? You need to check it.

I will check that, give me a minute. I trained 1 LoRA with 11 people.

thank you so much

One at a time works perfectly. Two or more people in the same frame lose likeness, which can be fixed with inpainting; a man and a woman together works much better. It's the same problem SDXL has. But remember my LoRA is still undertrained, so let's see with my new LoRA... I will start training later, and it will take 2 days or more to finish.

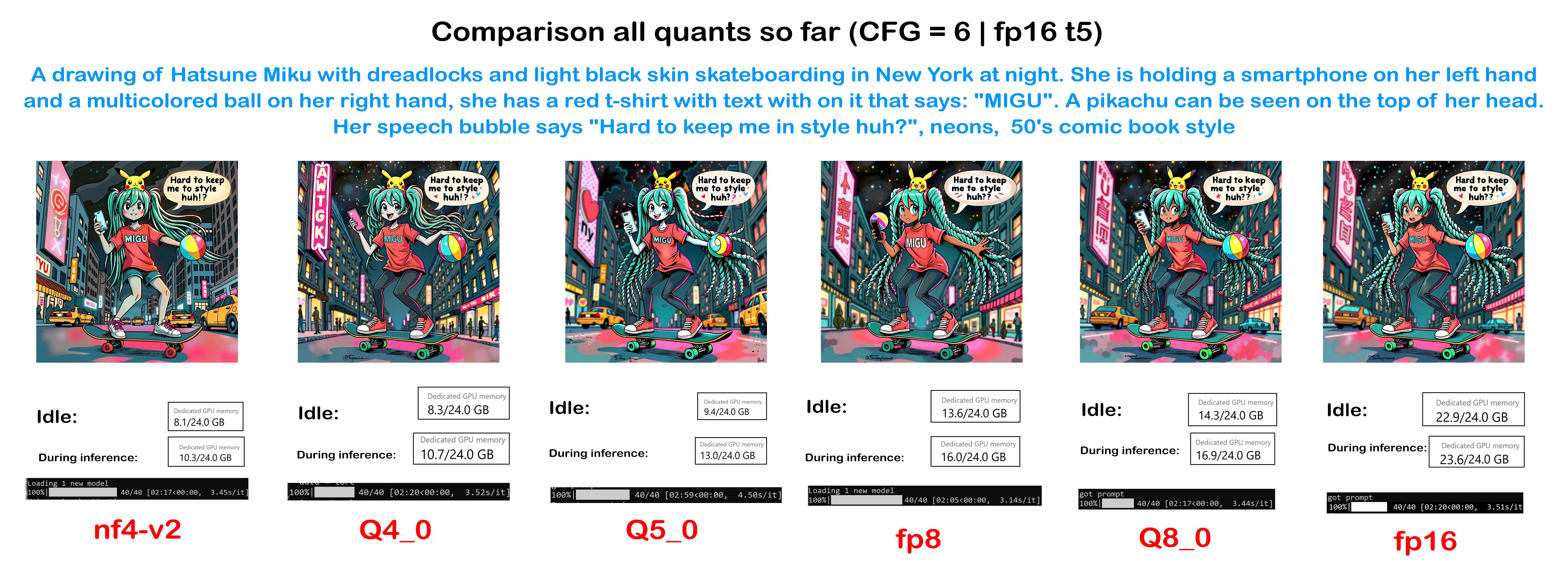

Comparison of all quants. Q8 is clearly the winner when compared to fp16.

"clearly". sample size of 1

I have seen other posts with comparisons. Do you have a different experience?

I've made no comparisons. I just use fp8 to generate

From the comparison posts on Reddit, Q8 seems to keep consistency similar to fp16. So if we could use it for training that would be super, but that doesn't seem possible at the moment.
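One reason Q8 tends to stay close to fp16 is how little precision it throws away. Below is a minimal sketch of a Q8_0-style round trip, assuming the GGUF Q8_0 layout as I understand it: weights split into blocks of 32, each block stored as int8 values plus one fp16 scale equal to the block's max absolute value divided by 127. It only measures reconstruction error on random data; it says nothing about whether any trainer can load GGUF files. The function name `q8_0_roundtrip` is hypothetical.

```python
import numpy as np

BLOCK = 32  # assumed Q8_0 block size


def q8_0_roundtrip(w: np.ndarray) -> np.ndarray:
    """Quantize a 1-D float array Q8_0-style and dequantize it back."""
    assert w.size % BLOCK == 0, "sketch assumes length is a multiple of 32"
    blocks = w.reshape(-1, BLOCK).astype(np.float32)
    amax = np.abs(blocks).max(axis=1, keepdims=True)
    scale = amax / 127.0
    # avoid division by zero for all-zero blocks (they quantize to 0 anyway)
    safe = np.where(scale == 0, 1.0, scale)
    q = np.clip(np.round(blocks / safe), -127, 127).astype(np.int8)
    # the per-block scale is stored in fp16, so dequantize with the fp16 value
    scale16 = scale.astype(np.float16).astype(np.float32)
    return (q.astype(np.float32) * scale16).reshape(w.shape)


rng = np.random.default_rng(0)
w = rng.standard_normal(4096).astype(np.float32)
w_hat = q8_0_roundtrip(w)
rel_err = np.linalg.norm(w - w_hat) / np.linalg.norm(w)
print(f"relative RMS error: {rel_err:.4f}")
```

On Gaussian-like weights the error lands well under 1%, which fits the observation that Q8 images look very close to fp16; lower quants use fewer bits per weight and drift further.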

@daroth Have you finetuned using fp8?

No, kohya didn't load that model; only the full-sized one worked.

fp8 is faster when you have an RTX 40-series card.

If anyone has a second... could I get more information on what "de-distilled" means?

Q8 is faster in other cases.

If your work is a success, I will train a checkpoint with maybe 12 people inside it; the quality may be much better than using 12 LoRAs.

I'm looking to do a finetune with fp8, but the Dr.'s setting for it uses shared memory and is slow, at around 10 s/it. But from speaking to Kohya, it seems using shared memory is intended behavior.

Just learn to use the Massed Compute service or RunPod. Local GPUs aren't meant for fine-tuning the model anymore; the quality is worse.

I might have to, but I can do super fast LoRAs, so I was surprised how slow it was.

I use a 3090 with 24 GB VRAM, but I can only train fp8 LoRAs. I use Massed Compute to train full fp16 LoRAs at a cost of about 4 USD.

very cheap

That is per hour?

no, total cost

Massed Compute, from my experience, has been great, far better than RunPod. The SECourses pricing on the A6000s is great.

Per hour it's only 1.25 USD.

When training on my local PC, I need 11 hours of the 3090 running at full throttle. My poor PC's fans roar like hell in my room and the temperature goes up a lot.

So 4 USD saves me the trouble and time.
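To put the numbers side by side, here is a back-of-the-envelope sketch. It assumes the 4 USD total was billed at the quoted 1.25 USD/hour rate; the thread doesn't state the exact GPU or rate used for that run, so treat the result as an estimate. The helper name `cloud_hours` is hypothetical.

```python
def cloud_hours(total_usd: float, usd_per_hour: float) -> float:
    """Billed hours implied by a total cost at a flat hourly rate."""
    return total_usd / usd_per_hour


LOCAL_HOURS = 11.0  # quoted: local 3090, fp8 LoRA

hours = cloud_hours(4.0, 1.25)  # quoted: ~4 USD total at 1.25 USD/hour
print(f"cloud fp16 run: ~{hours:.1f} h, local fp8 run: {LOCAL_HOURS:.0f} h")
print(f"roughly {LOCAL_HOURS / hours:.1f}x less wall-clock time")
```

Under that assumption the rented run takes about 3.2 hours versus 11 locally, and it trains the full fp16 model rather than fp8.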

11 hours of 3090 for fp8 lora

no thank you

I'm training this model now. It works much better for training: you can train multiple people in one LoRA without concept mixing. It behaves very much like SDXL and is much more flexible.

Yeah, the PC lights stayed on and it looked like a disco ballroom.

I've never seen any SDXL LoRAs with multiple concepts; Flux may be the first model that can do it.

I trained LoRAs on SDXL with more than 20 people, no problem.

Since SDXL has instance ID, I use that for convenience if I need a people LoRA anyway.

The best GPU prices are from here: https://www.autodl.com/home, but I don't know how to run them. I learned about them via an article discussing 48 GB 4090s.

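AutoDL provides professional GPU rental services with per-second billing, stable and easy to use, high-spec data centers, and 24/7 service. There is also an algorithm-reproduction community for one-click reproduction of algorithms.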

This is really new to me.

Is it possible to create a finetune, then extract the LORA? I've had good luck with that for single subjects, and sometimes it's also nice to have a checkpoint as well.
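Extracting a LoRA from a finetune is usually done per layer with a truncated SVD of the weight difference between the finetuned and base models. Below is a minimal numpy sketch of that idea for a single weight matrix. It is not any specific tool's implementation: real extractors also walk every layer, match layer names, handle dtypes, and may clamp outliers. The function name `extract_lora` and the even split of singular values between the two factors are illustrative choices.

```python
import numpy as np


def extract_lora(w_base: np.ndarray, w_tuned: np.ndarray, rank: int):
    """Approximate (w_tuned - w_base) with a rank-`rank` product up @ down."""
    delta = w_tuned - w_base
    u, s, vt = np.linalg.svd(delta, full_matrices=False)
    # split each kept singular value evenly between the two factors
    sqrt_s = np.sqrt(s[:rank])
    lora_up = u[:, :rank] * sqrt_s              # shape (out_features, rank)
    lora_down = sqrt_s[:, None] * vt[:rank]     # shape (rank, in_features)
    return lora_up, lora_down


rng = np.random.default_rng(0)
base = rng.standard_normal((64, 48))
# simulate a finetune whose change to this layer is exactly rank 4
true_delta = rng.standard_normal((64, 4)) @ rng.standard_normal((4, 48))
tuned = base + true_delta

up, down = extract_lora(base, tuned, rank=4)
print(np.abs(base + up @ down - tuned).max())
```

In a real finetune the per-layer delta is not exactly low-rank, so the chosen rank trades file size against how much of the finetune's change survives; that is why single subjects, whose change is relatively compact, tend to extract well.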