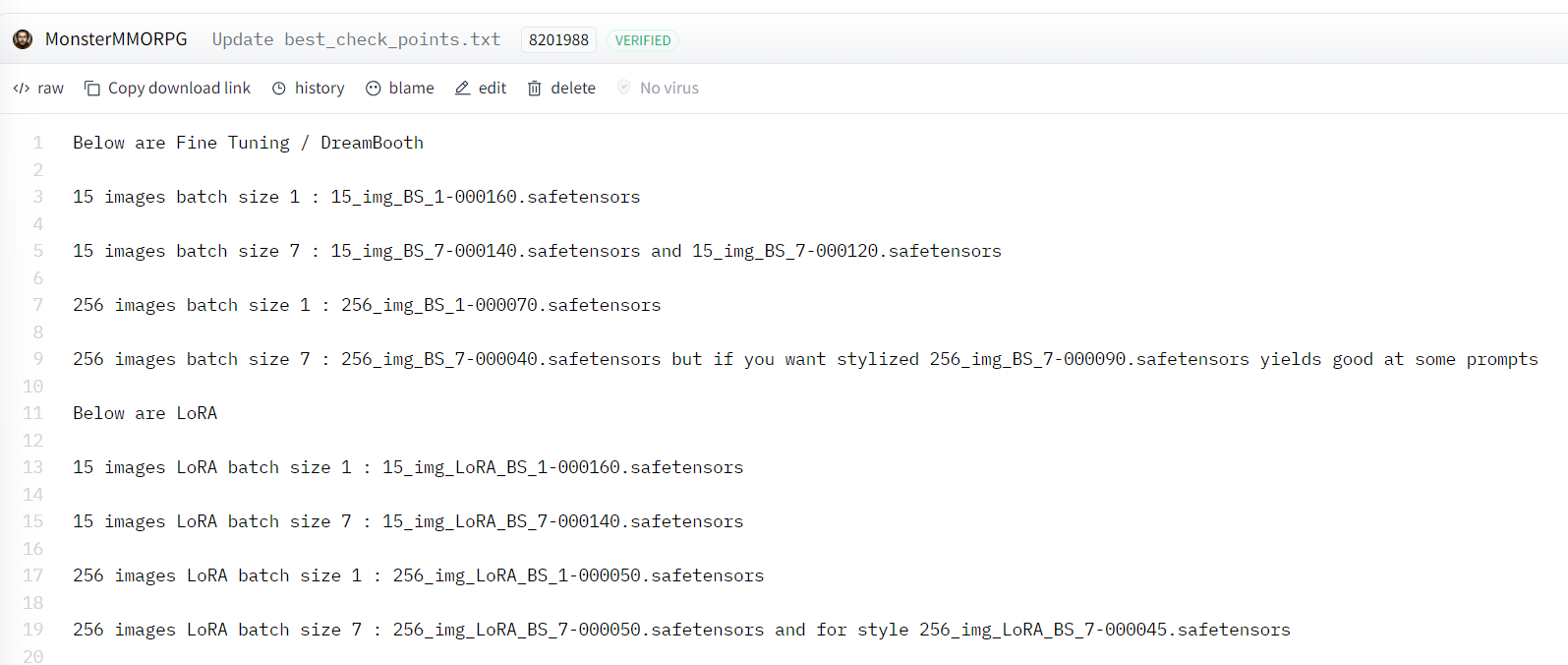

Nice. If you have to train more than one subject, it is your only choice, and in my tests it is superior in every respect. The only problem is that if you do a full training, inference with the resulting model will be slower, because you have to set the CFG scale to 3.5. To work around this, you can do the fine-tune, extract the LoRA, and then use the resulting LoRA on regular flux-dev, where you can use CFG scale 1 together with distilled CFG.
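For context on why the CFG scale affects speed, here is a minimal sketch assuming standard classifier-free guidance. With a scale above 1, every sampling step needs a conditional and an unconditional model call, combined as `uncond + scale * (cond - uncond)`. At scale 1 that expression reduces to `cond`, so the unconditional call can be skipped. On flux-dev, the distilled guidance value is given to the model as an input instead of coming from a second pass. `toy_model`, `guided_prediction` and `total_model_calls` are hypothetical stand-ins, not part of any real Flux tooling.

```python
from typing import Callable, List, Tuple

# Hypothetical denoiser signature: (latent, use_prompt) -> prediction
Model = Callable[[List[float], bool], List[float]]


def toy_model(latent: List[float], use_prompt: bool) -> List[float]:
    # Hypothetical stand-in: the prompt simply shifts the prediction by 1.0
    return [x + (1.0 if use_prompt else 0.0) for x in latent]


def guided_prediction(model: Model, latent: List[float],
                      cfg_scale: float) -> Tuple[List[float], int]:
    """Return the classifier-free-guided prediction and the number of model calls used."""
    cond = model(latent, True)
    if cfg_scale == 1.0:
        # uncond + 1 * (cond - uncond) == cond, so the unconditional pass is unnecessary
        return cond, 1
    uncond = model(latent, False)
    guided = [u + cfg_scale * (c - u) for c, u in zip(cond, uncond)]
    return guided, 2


def total_model_calls(cfg_scale: float, steps: int) -> int:
    """Count model evaluations over a sampling loop (latent updates omitted for brevity)."""
    latent = [0.0, 0.0]
    calls = 0
    for _ in range(steps):
        _, n = guided_prediction(toy_model, latent, cfg_scale)
        calls += n
    return calls


if __name__ == "__main__":
    print(total_model_calls(3.5, 20))  # two calls per step
    print(total_model_calls(1.0, 20))  # one call per step
```

Under these assumptions, a 20-step run at CFG 3.5 costs twice as many model evaluations as the same run at CFG 1, which is the gap the LoRA-extraction route avoids.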