@Dr. Furkan Gözükara Update on my https://huggingface.co/nyanko7/flux-dev-de-distill training tests.

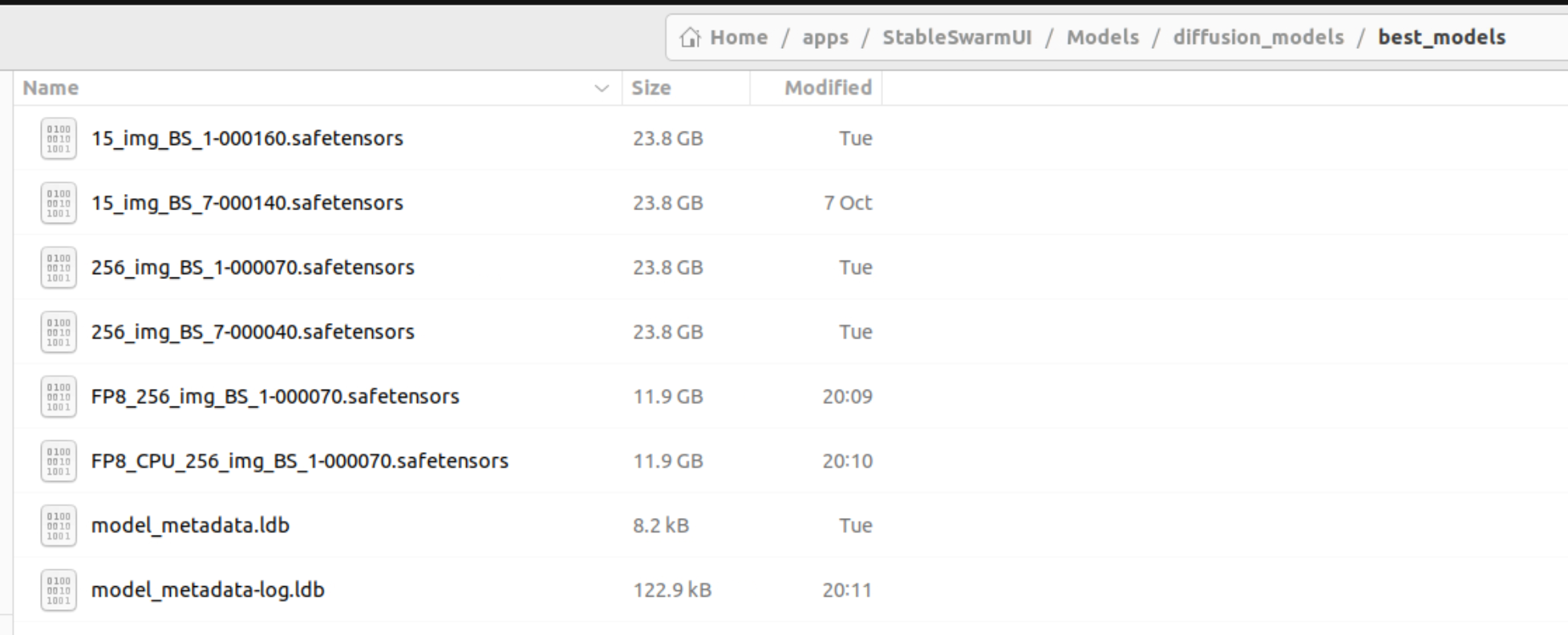

Some bad news and some good news.

Good news: I can train many subjects at the same time without them bleeding into each other: 11 people in one LoRA, with simple captions in the form "Name class". Note: each token must be distinct. I saw a little bleeding between similar names like "Diego man" and "Dani man". It works best with "NameLastname class", so the tokens end up being very different.

Bad news: there is still some class bleeding. This may be my fault, because I was using a higher learning rate than recommended to get faster results. I was also using the faster presets rather than the quality presets ("Apply T5 Attention Mask" disabled, "Train T5-XXL" disabled); now I'm testing with those enabled. More bad news: regularization images still reduce resemblance. I will try them again with "Apply T5 Attention Mask" and "Train T5-XXL" enabled and with the recommended learning rate.

The other option I haven't tried yet is "Guidance scale for Flux1". flux-dev-de-distill has a default CFG of 3.5, but I'm leaving that option at 1 for now because I'm using regular Flux-Dev for inference; that is another thing I have to test. I will report my updates.
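The "NameLastname class" captioning scheme could be prepared with a small script like the one below. This is a minimal sketch, not the workflow used in these tests. It assumes one `.txt` caption file per image with the same base name (check your trainer's caption-extension setting). The `similar_tokens` check is a hypothetical heuristic based on character-level string similarity (`difflib.SequenceMatcher`). It does not reproduce how the text encoders' tokenizers actually split names, so treat it only as a rough early warning for look-alike tokens.

```python
from difflib import SequenceMatcher
from itertools import combinations
from pathlib import Path

# Image extensions to caption (assumption: adjust to your dataset).
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".webp"}


def make_caption(first: str, last: str, cls: str) -> str:
    """Join first and last name into one token, then append the class, e.g. 'DiegoLopez man'."""
    return f"{first}{last} {cls}"


def write_captions(image_dir: Path, caption: str, ext: str = ".txt") -> int:
    """Write `caption` to a sidecar file (same base name, `ext` suffix) for every image.

    Assumes the trainer reads captions from files next to the images.
    Returns the number of caption files written.
    """
    count = 0
    # sorted() materializes the listing first, so newly written caption files are not revisited.
    for img in sorted(image_dir.iterdir()):
        if img.suffix.lower() in IMAGE_EXTS:
            img.with_suffix(ext).write_text(caption, encoding="utf-8")
            count += 1
    return count


def similar_tokens(tokens: list[str], threshold: float = 0.4) -> list[tuple[str, str, float]]:
    """Return token pairs whose case-insensitive similarity ratio is >= threshold.

    Hypothetical heuristic: plain string similarity, not tokenizer-level similarity.
    """
    flagged = []
    for a, b in combinations(tokens, 2):
        ratio = SequenceMatcher(None, a.lower(), b.lower()).ratio()
        if ratio >= threshold:
            flagged.append((a, b, round(ratio, 2)))
    return flagged
```

For example, `similar_tokens(["Diego", "Dani", "Zoe"])` flags only the Diego/Dani pair (ratio about 0.44). The joined "NameLastname" tokens can be checked the same way before training. The names here are only illustrative.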