@everyone Qwen Image 2511 is truly a massive upgrade over 2509. A full step-by-step tutorial on how to use it is published, and I have compared the two versions as well: https://youtu.be/YfuQuOk2sB0 If you can upvote and leave a comment, I would appreciate it: https://www.reddit.com/r/comfyui/comments/1pv9snm/qwen_image_edit_2511_is_a_massive_upgrade/

Furkan Gözükara SECourses · 2d ago

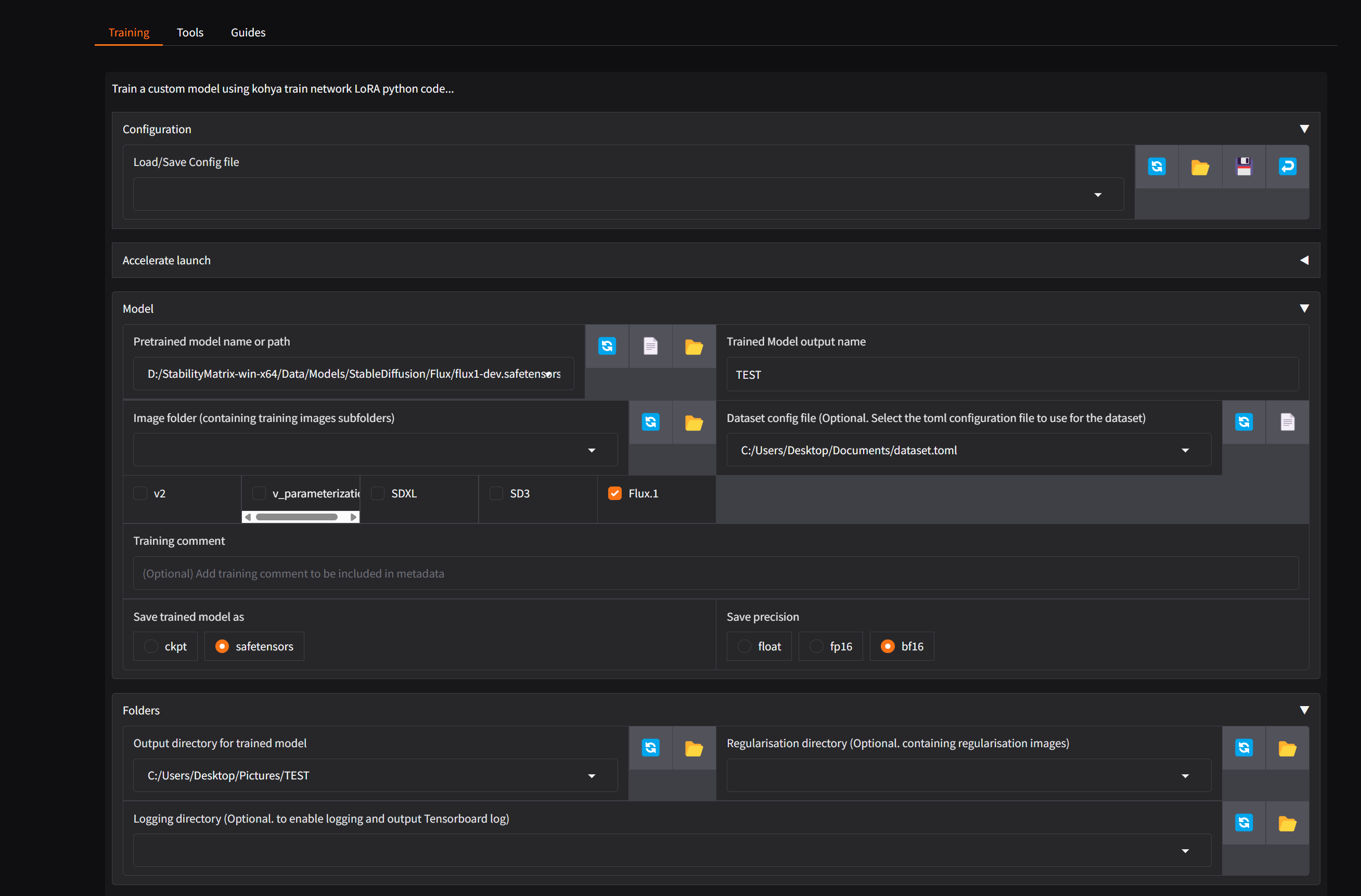

@everyone After extensive research, our Wan 2.2 LoRA training tutorial is thankfully ready and published: https://youtu.be/ocEkhAsPOs4 If you can upvote and leave a comment, I would appreciate it: https://www.reddit.com/r/comfyui/comments/1pscwvr/wan_22_complete_training_tutorial_text_to_image/

Furkan Gözükara SECourses · 6d ago

@everyone After extensive research, our Z Image Turbo LoRA training tutorial is thankfully ready and published: https://youtu.be/ezD6QO14kRc If you can upvote and leave a comment, I would appreciate it: https://www.reddit.com/r/comfyui/comments/1pedr0v/zimage_turbo_lora_training_with_ostris_ai_toolkit/

Furkan Gözükara SECourses · 4w ago