amazing

i don't have it yet. our kohya configs are transferable to onetrainer

where can i find those kohya configs?

Full Instructions, Configs, Installers, Information and Links Shared Post (the one used in the tutorial)

https://www.patreon.com/posts/112099700

Get more from SECourses: FLUX, Tutorials, Guides, Resources, Training, Scripts on Patreon

YouTube: SECourses

If you want to train FLUX with maximum possible quality, this is the tutorial you are looking for. In this comprehensive tutorial, you will learn how to install Kohya GUI and use it to fully Fine-Tune / DreamBooth FLUX model. After that, you will learn how to use SwarmUI to compare generated checkpoints / models and find the very best one to generate the most amazing image...

it has both lora and fine tuning configs

thank you Dr.

@Saxon The training parameters are all here. Just match them in kohya. The lora network dim was trained at rank 4 btw.

accelerate launch ^
--mixed_precision bf16 ^
--num_cpu_threads_per_process 1 ^
sd-scripts/flux_train_network.py ^
--pretrained_model_name_or_path "C:\pinokio\api\fluxgym.git\models\unet\flux1-dev.sft" ^
--clip_l "C:\pinokio\api\fluxgym.git\models\clip\clip_l.safetensors" ^
--t5xxl "C:\pinokio\api\fluxgym.git\models\clip\t5xxl_fp16.safetensors" ^
--ae "C:\pinokio\api\fluxgym.git\models\vae\ae.sft" ^
--cache_latents_to_disk ^
--save_model_as safetensors ^
--sdpa --persistent_data_loader_workers ^
--max_data_loader_n_workers 2 ^
--seed 42 ^
--gradient_checkpointing ^
--mixed_precision bf16 ^
--save_precision bf16 ^
--network_module networks.lora_flux ^
--network_dim 4 ^
--optimizer_type adafactor ^
--optimizer_args "relative_step=False" "scale_parameter=False" "warmup_init=False" ^
--split_mode ^
--network_args "train_blocks=single" ^
--lr_scheduler constant_with_warmup ^
--max_grad_norm 0.0 ^
--sample_prompts="C:\pinokio\api\fluxgym.git\outputs\k44\sample_prompts.txt" --sample_every_n_steps="500" ^
--learning_rate 8e-4 ^
--cache_text_encoder_outputs ^
--cache_text_encoder_outputs_to_disk ^
--fp8_base ^
--highvram ^
--max_train_epochs 16 ^
--save_every_n_epochs 4 ^
--dataset_config "C:\pinokio\api\fluxgym.git\outputs\k44\dataset.toml" ^
--output_dir "C:\pinokio\api\fluxgym.git\outputs\k44" ^
--output_name k44 ^
--timestep_sampling shift ^
--discrete_flow_shift 3.1582 ^
--model_prediction_type raw ^
--guidance_scale 1 ^
--loss_type l2
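
To match these flags against the Kohya GUI fields one by one, it can help to list them as name/value pairs first. Below is a minimal Python sketch, assuming the command is pasted as text with `^` line continuations. `parse_flags` is a hypothetical helper written for this illustration, not part of sd-scripts or fluxgym, and the sample input uses only a handful of the flags from the command above. Positional arguments (such as the script path) are not handled specially.

```python
import shlex


def parse_flags(command: str) -> dict:
    """Collect --flag values from a cmd-style command with ^ continuations."""
    # Drop the trailing ^ from each line and join everything into one line.
    joined = " ".join(
        line.strip().rstrip("^").strip() for line in command.splitlines()
    )
    # posix=False leaves backslashes in Windows paths untouched;
    # surrounding quotes stay on the tokens and are stripped below.
    tokens = shlex.split(joined, posix=False)

    collected = {}
    current = None
    for token in tokens:
        if token.startswith("--"):
            # Supports both "--flag value" and "--flag=value".
            name, has_value, value = token[2:].partition("=")
            collected[name] = [value.strip('"')] if has_value else []
            current = name
        elif current is not None:
            collected[current].append(token.strip('"'))

    # Switches become True, single values are unwrapped, multi-values stay lists.
    result = {}
    for name, values in collected.items():
        if not values:
            result[name] = True
        elif len(values) == 1:
            result[name] = values[0]
        else:
            result[name] = values
    return result


example = r'''
--network_module networks.lora_flux ^
--network_dim 4 ^
--optimizer_type adafactor ^
--optimizer_args "relative_step=False" "scale_parameter=False" "warmup_init=False" ^
--split_mode ^
--learning_rate 8e-4 ^
--max_train_epochs 16 ^
--output_dir "C:\pinokio\api\fluxgym.git\outputs\k44"
'''

settings = parse_flags(example)
for name, value in settings.items():
    print(f"{name}: {value}")
```

The printed list can then be checked off against the GUI, e.g. `network_dim` against the rank field in the LoRA tab.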

he is actively working on it but i haven't tested it yet

I went through and changed all the settings I could find, but I wasn't sure how to change the network_dim or network_args. I'm also not clear on the epochs, so I just set it to 16 because I'm not sure what the distinction is between epochs and max_train_epochs.

I believe network dim is the LoRA rank (alpha is a separate setting), which should be changed from 128 to what you see there. An epoch is one full pass over the training images, and max epochs is the number of passes you want. The kohya_ss GitHub wiki explains more about the settings.
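
As a rough illustration of how epochs translate into training steps, here is a small Python sketch. It assumes one repeat per image and no gradient accumulation. `total_steps` is a hypothetical helper, the 16 epochs come from `--max_train_epochs 16` in the command above, and the image counts are only example values. Actual step counts in Kohya can differ, for example when bucketing splits batches.

```python
import math


def total_steps(num_images: int, repeats: int, epochs: int, batch_size: int = 1) -> int:
    """Approximate optimizer steps: passes over the data times steps per pass."""
    # One epoch shows every image `repeats` times, grouped into batches.
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


print(total_steps(28, 1, 16))                 # 448
print(total_steps(84, 1, 16))                 # 1344
print(total_steps(10, 1, 16, batch_size=4))   # 48 (3 steps per epoch)
```

So the epoch count sets how many times the whole dataset is seen, while the step count also depends on dataset size and batch size.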

loras yes

i dont know base models

i see what it was: I was matching the settings to the dreambooth tab, but network dim is in the lora tab. Is there a reason, one I'm too inexperienced to see, why these settings would not work well in dreambooth?

hey everyone, I am fine-tuning Stable Diffusion XL 1.0 base on pictures of a woman. Why does the base model always produce that Indian clothing style? The problem is that the tuned model only performs well when I generate the trained character in Indian clothes. Need help here

what token are you using for the woman? could be the token alone is related somehow to indian clothing styles

you should use some good fine tuned model of sdxl

as a base

RealVisXL_v4, this is the model i am using as the base model

i will try to change that

there is v5

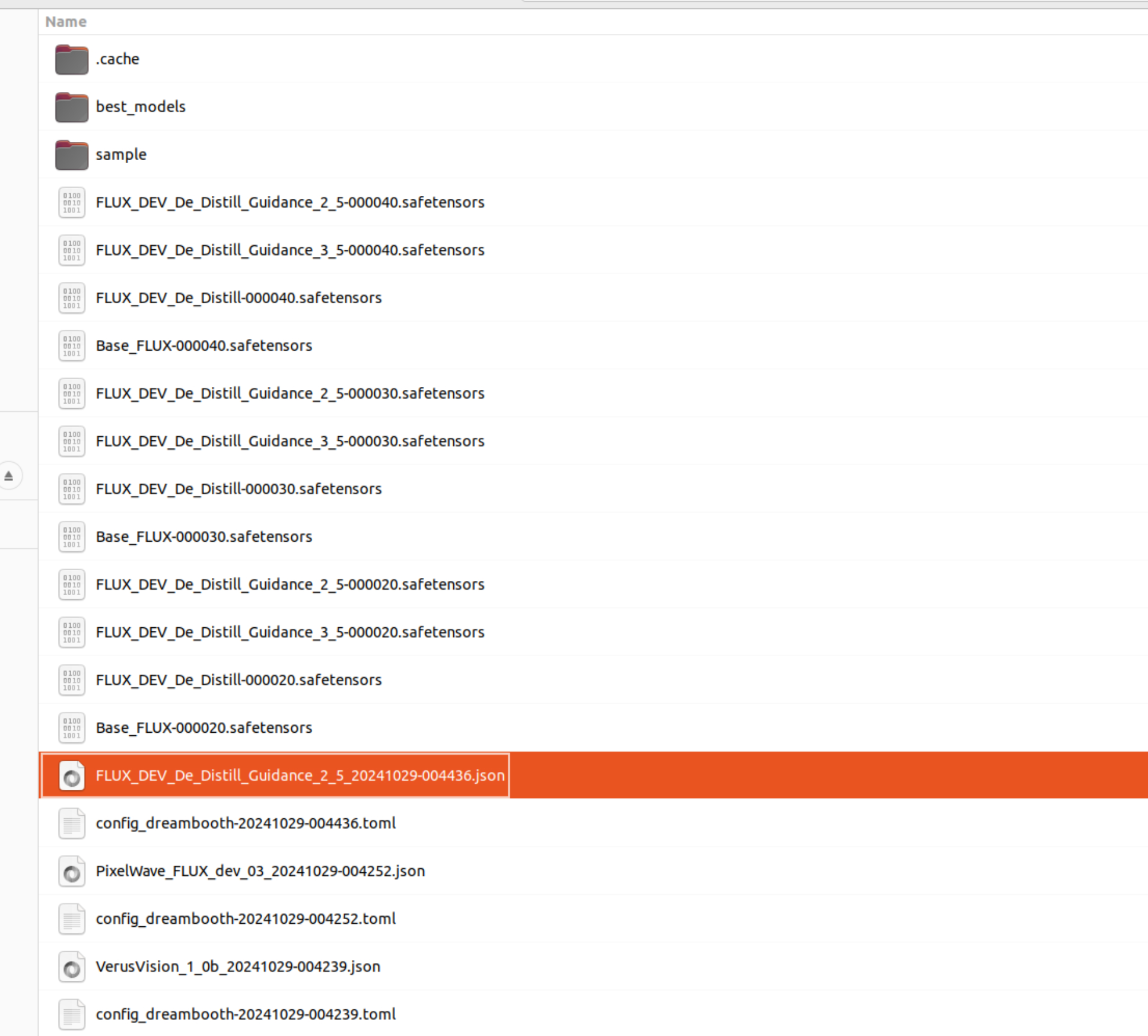

i am trying to go for flux dev de-distill but i can't find a good training params preset

i am testing it right now

perfect, i will be waiting for it

@Furkan Gözükara SECourses Just saw your latest post with the sample Dwayne Johnson training set; it is very useful!

I have a question: is there a particular number/proportion of head shots, close shots, mid shots and full body shots you would use? All equal (e.g. 7 each)? I am not sure whether some of your images are a mid shot, a close shot or a full body shot.

Also, what number of training images would you recommend? (balancing between quality and speed - obviously, 256 images is not practical for many of us on Batch size 1!). Would you say 28 is a good amount? Or would you go with 84 like you did recently for your client?

i would go with 84 for flux

it learns better

well i didn't use any fixed number of images for each shot type

but when you use a higher number of images you usually have enough of every type

still, 28 should work well i believe, currently testing

@Furkan Gözükara SECourses I am considering training a LoRA Flux model for product photography (still life photography). I want to train a LoRA that specializes in representing still lifes, including props, lighting, and other details. I have a dataset of 200 images. What Kohya parameters would you recommend? Thanks in advance!

Sign up for his Patreon, then download his configs. He has already uploaded them there

@Furkan Gözükara SECourses Master, I'd like to do a quick training on 10 photos. My sister wants to see how it looks LOL. It doesn't need to be high quality, so what's the best way to do it? LORA training is probably faster than Dreambooth, right? If so, what configuration should I choose for my RTX 4090? And how many epochs for 10 photos?

LOL. I chose Rank_3_18950MB_9_05_Second_IT, 200 epochs, so 2000 steps. I expected it to be much faster on a 4090 LOL. It is showing over 220h, which is not what I expected. Is there any way to train on a local PC in a reasonable time?
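
A quick sanity check of those numbers, as a Python sketch. It assumes the config name `Rank_3_18950MB_9_05_Second_IT` means roughly 9.05 seconds per iteration (that reading of the name is an assumption), and it uses the 2000 steps and 220 hours quoted above. `estimate_hours` is a hypothetical helper.

```python
def estimate_hours(steps: int, seconds_per_step: float) -> float:
    """Total training time in hours for a given step count and step speed."""
    return steps * seconds_per_step / 3600


steps = 2000

# Time expected if the run reached the speed suggested by the config name.
expected_hours = estimate_hours(steps, 9.05)

# Step speed implied by the 220 h estimate the trainer is showing.
implied_seconds_per_step = 220 * 3600 / steps
slowdown = implied_seconds_per_step / 9.05

print(f"expected: {expected_hours:.1f} h")                 # expected: 5.0 h
print(f"implied speed: {implied_seconds_per_step:.0f} s/it")  # implied speed: 396 s/it
print(f"slowdown: about {slowdown:.0f}x")                  # slowdown: about 44x
```

A gap this large suggests the run is not reaching the speed the config was measured at. One possible cause, not confirmed anywhere in this chat, is VRAM spilling over into shared system memory.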

I am registered and I have the JSON files included in the LoRA_Tab_LoRA_Training_Best_FLUX_Configs folder, but I think they are more oriented toward training human figures or characters. Which one could I use for my purpose of creating a LoRA for still life?

Read more carefully, he has already shown how to train a person and how to train a style. There is no shortcut to it. There are no perfect settings. It depends on your dataset and on experiments. He has already shown how to run experiments and how to check quality. The perfect settings are judged by you, not him; he only shows the way to do it. Get to work you lazy people

hey everyone, i want to do Dreambooth training of flux-dev-de-distill. I am a beginner and would really appreciate suggestions. Thanks

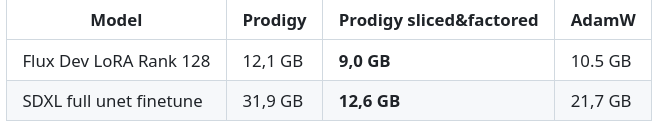

@Furkan Gözükara SECourses not sure if this is interesting for you, or you use Adafactor anyway:

Over at OneTrainer we have optimized the vram of Prodigy a little bit. It's now basically at Adafactor levels. Might be interesting for kohya too (I don't have contact).

and can anyone suggest the best model for generating realistic pictures of humans with flux dev?

Hello! Has anyone worked on "LoRA extracted from Full Fine Tune, then LoRA at different network dims"? I'm looking for someone who can help me with this for a small fee! Please DM me. I already have a prepared dataset for the character Conan (Arnold Schwarzenegger), around 100 images, and my own GPU, an RTX 3090, ready for testing.

Yes, i have already done it. When you extract at the highest extractable rank, which is 640, the size of the LoRA is similar to an fp8 checkpoint or a GGUF Q8 full model
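
A back-of-the-envelope check of why a rank 640 LoRA can approach the size of an fp8 checkpoint, as a Python sketch. It looks at a single square linear layer only. The 3072 layer width, the 16-bit LoRA storage and the 8-bit full weights are assumptions for illustration, not values taken from this chat, and `lora_param_count` is a hypothetical helper.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """A LoRA pair is a (rank x d_in) down matrix plus a (d_out x rank) up matrix."""
    return rank * (d_in + d_out)


d = 3072      # assumed layer width, for illustration only
rank = 640    # highest extractable rank mentioned above

full_params = d * d
lora_params = lora_param_count(d, d, rank)

lora_bytes = lora_params * 2       # LoRA stored in 16-bit (2 bytes per weight)
full_fp8_bytes = full_params * 1   # full layer stored in fp8 (1 byte per weight)

print(f"parameter ratio: {lora_params / full_params:.3f}")   # parameter ratio: 0.417
print(f"size ratio: {lora_bytes / full_fp8_bytes:.3f}")      # size ratio: 0.833
```

Under these assumptions the LoRA holds about 42% as many parameters as the layer it adapts, but at twice the bytes per parameter it ends up at roughly 83% of the fp8 size, which is in line with the observation above.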

can anyone share best practices for training and generation to achieve maximal likeness between a real person and the generated images?

this video is the best one you can find https://youtu.be/FvpWy1x5etM

video is really high res

Thank you, Dr. Furkan!