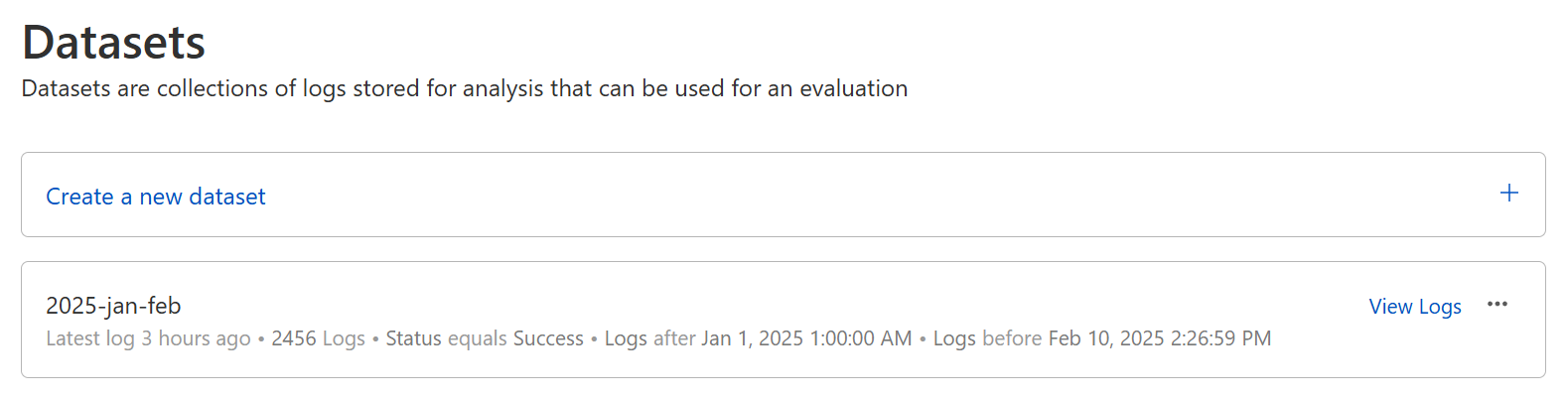

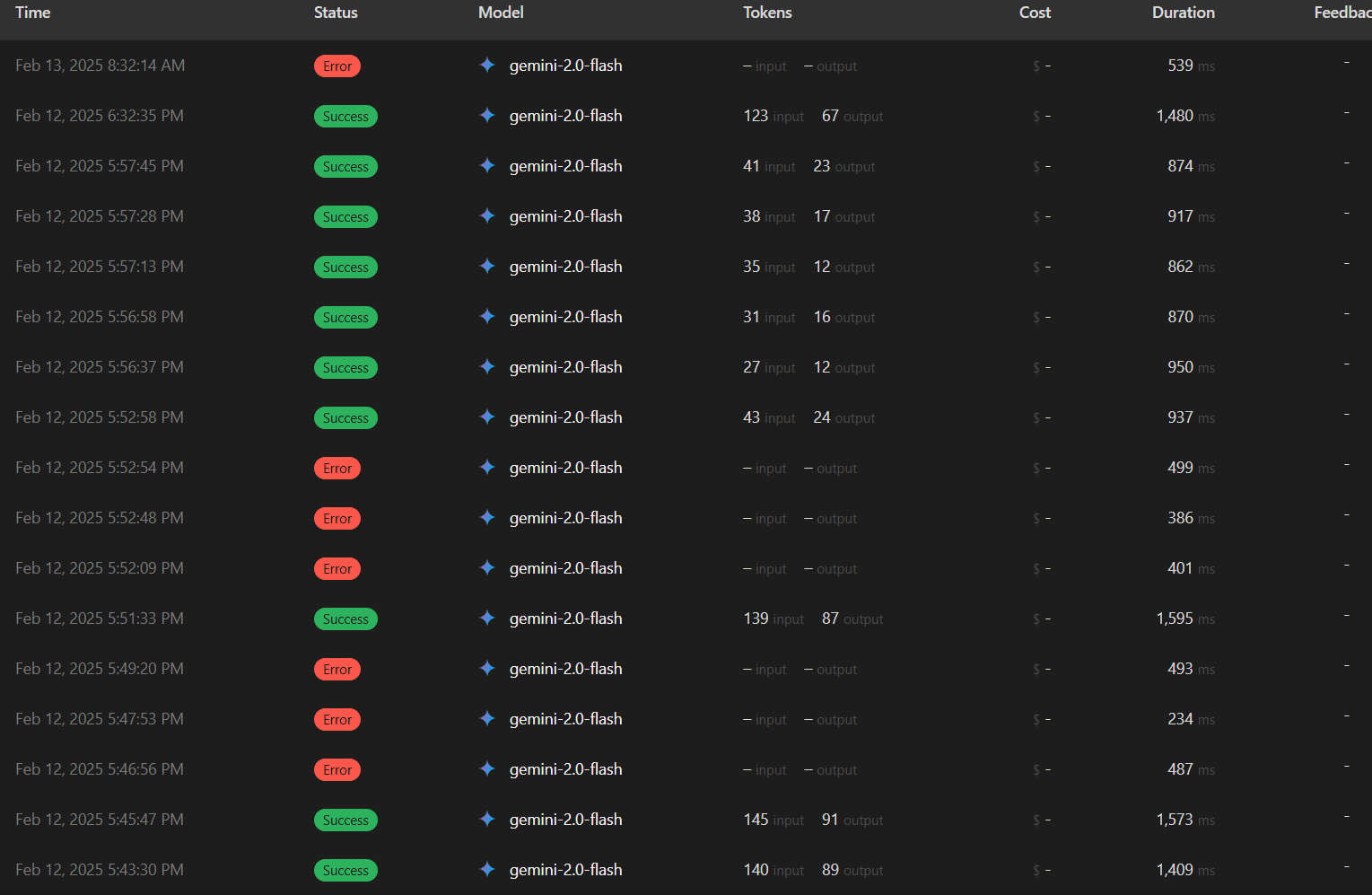

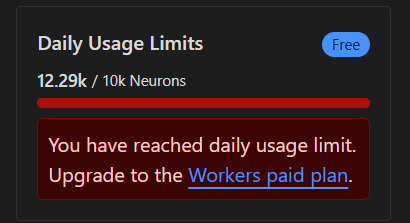

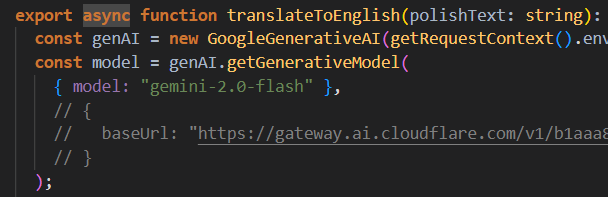

Are you using Google Vertex or Google AI Studio? I just tested on Google AI Studio, and caching seems to be working properly. Have you set a different cache configuration for Gemini? https://developers.cloudflare.com/ai-gateway/configuration/caching/

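If it helps with checking, here is a rough sketch of what a per-request cache override could look like when calling Google AI Studio through the gateway. It is not taken from your setup: the account ID, gateway ID, model name, and API key are placeholders, and the header names (`cf-aig-cache-ttl`, `cf-aig-skip-cache`, and the `cf-aig-cache-status` response header) and the URL shape are what I understand the caching docs linked above to describe, so please verify them there before relying on this.

```python
import json
import urllib.request


def build_gateway_request(account_id, gateway_id, model, api_key, prompt,
                          cache_ttl=None, skip_cache=False):
    """Build the URL, headers, and body for one request through the gateway.

    All identifiers passed in are placeholders; the header names are assumed
    from the Cloudflare AI Gateway caching docs.
    """
    url = (
        "https://gateway.ai.cloudflare.com/v1/"
        f"{account_id}/{gateway_id}/google-ai-studio/"
        f"v1beta/models/{model}:generateContent"
    )
    headers = {
        "Content-Type": "application/json",
        "x-goog-api-key": api_key,
    }
    # Per-request overrides: only send them when explicitly asked for,
    # so the gateway-level cache settings apply otherwise.
    if cache_ttl is not None:
        headers["cf-aig-cache-ttl"] = str(cache_ttl)  # seconds
    if skip_cache:
        headers["cf-aig-skip-cache"] = "true"
    body = json.dumps(
        {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    ).encode("utf-8")
    return {"url": url, "headers": headers, "body": body}


def send(request):
    """Send the request and return the cache status header, if present."""
    req = urllib.request.Request(
        request["url"],
        data=request["body"],
        headers=request["headers"],
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        # Assumed to read HIT or MISS; None if the header is absent.
        return resp.headers.get("cf-aig-cache-status")
```

Sending the same prompt twice with `send(build_gateway_request(...))` and comparing the returned status on the first and second call is a quick way to see whether the cache is being hit. If a request carries `skip_cache=True` or a very short TTL, that alone could explain responses not being cached.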