interesting, not sure why

Targeting a few layers?

What's the size of the LoRA file?

nope, all layers

rank 128

saved in fp16: 1.27 GB

That's a small file.
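For context on where a number like 1.27 GB comes from: a LoRA adds two low-rank matrices, A (rank × d_in) and B (d_out × rank), to each adapted linear layer, so the file size grows linearly with rank and with the number of layers targeted. The sketch below is a rough estimator, assuming plain fp16 storage (2 bytes per parameter) and ignoring the tiny alpha scalars and file header; the layer shapes in the example are hypothetical and are not Flux's actual layer list.

```python
from typing import Iterable, Tuple


def lora_size_bytes(
    layer_shapes: Iterable[Tuple[int, int]],
    rank: int,
    bytes_per_param: int = 2,  # fp16/bf16 = 2 bytes, fp32 = 4 bytes
) -> int:
    """Estimate LoRA weight storage for a list of (d_in, d_out) linear layers.

    Each adapted layer W (d_out x d_in) gets A (rank x d_in) and B (d_out x rank),
    i.e. rank * (d_in + d_out) extra parameters. Alpha scalars and the file
    header are ignored because they are negligible by comparison.
    """
    params = sum(rank * (d_in + d_out) for d_in, d_out in layer_shapes)
    return params * bytes_per_param


if __name__ == "__main__":
    # Hypothetical example: 100 square 3072x3072 projections, rank 128, fp16.
    shapes = [(3072, 3072)] * 100
    size = lora_size_bytes(shapes, rank=128)
    print(f"{size / 1e9:.3f} GB")  # → 0.157 GB
```

Under these assumptions, halving the rank halves the file, and saving in fp32 instead of fp16 doubles it.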

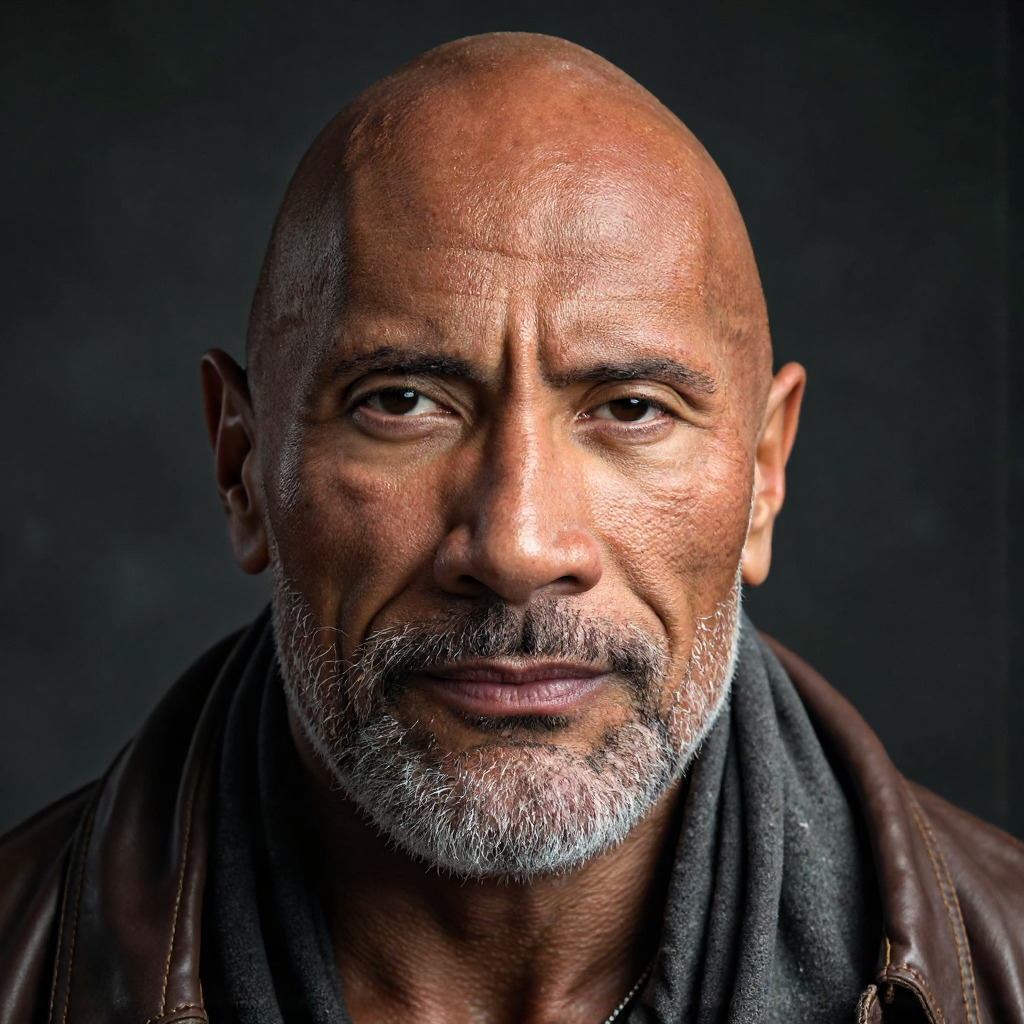

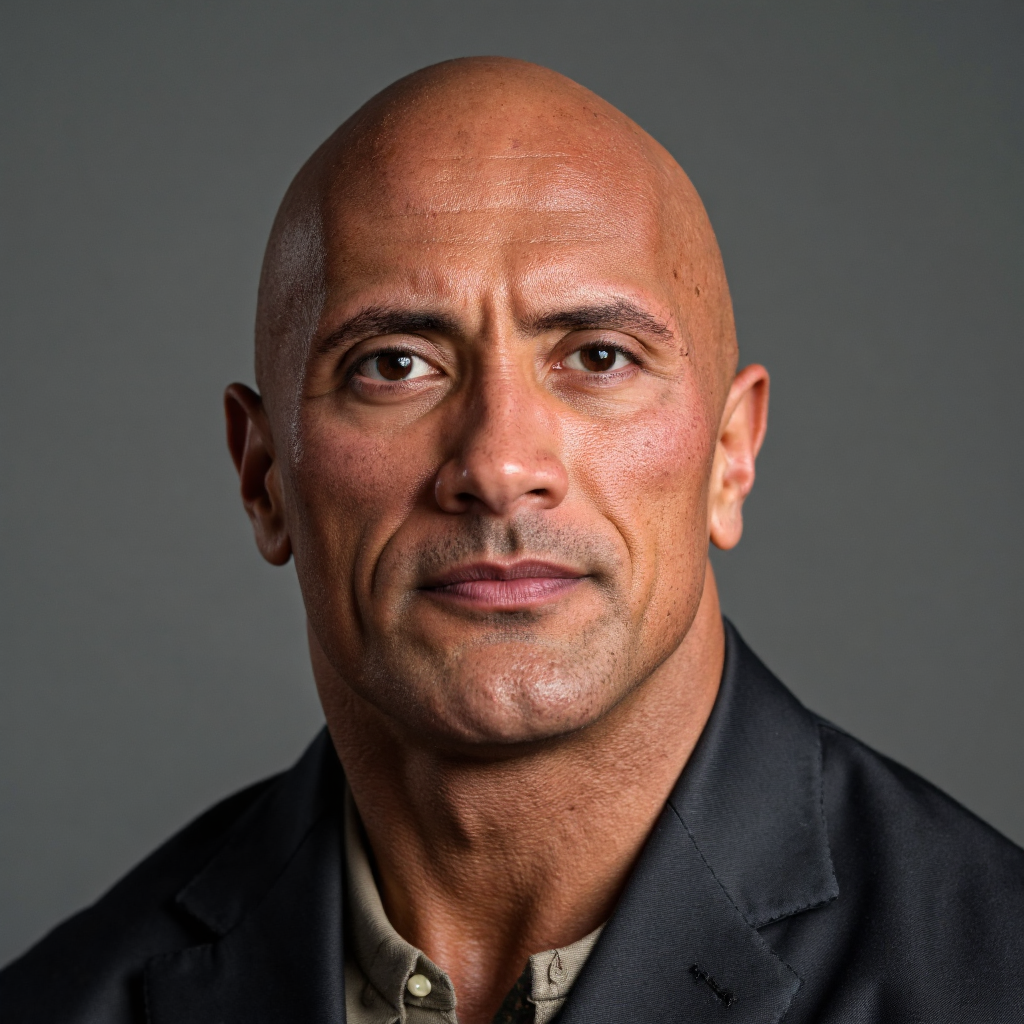

Is the resemblance good?

Oh, and how fast is the H200?

really fast

not too bad, but lower quality here

left: ours, right: faster

you can see the faster one is overfit to get decent resemblance

I will try 2x GPU next with 1024px

Thanks, but I want to know exactly how you are doing this.

Yeah. The original one is excellent. How many images did you use?

Patreon


28 images

it is the 170th epoch

hehe

I've written Python scripts with ChatGPT that process the high-resolution images for training by cutting them into 1024x1024 pieces with a bit of overlap.
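The tiling idea can be sketched roughly as follows. This is not the poster's script (it wasn't shared); it's a minimal pure-Python sketch, assuming a 128 px overlap (the message only says "a bit of overlap") and that every image side is at least 1024 px. It only computes crop boxes, spreading tiles evenly along each axis so that neighbouring tiles share at least the requested overlap and the last tile ends exactly at the image edge.

```python
import math
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, upper, right, lower)


def _tile_starts(length: int, tile: int, overlap: int) -> List[int]:
    """Start offsets along one axis so tiles cover [0, length) with >= overlap px shared."""
    if length < tile:
        raise ValueError(f"image side {length}px is smaller than tile size {tile}px")
    if length == tile:
        return [0]
    stride = tile - overlap
    # Fewest tiles such that the spacing between starts never exceeds `stride`.
    n = math.ceil((length - tile) / stride) + 1
    step = (length - tile) / (n - 1)  # even spacing; step <= stride by construction
    return [round(i * step) for i in range(n)]


def tile_boxes(width: int, height: int, tile: int = 1024, overlap: int = 128) -> List[Box]:
    """Return tile x tile crop boxes covering the whole image, row by row."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must satisfy 0 <= overlap < tile")
    xs = _tile_starts(width, tile, overlap)
    ys = _tile_starts(height, tile, overlap)
    return [(x, y, x + tile, y + tile) for y in ys for x in xs]


if __name__ == "__main__":
    # A hypothetical 4000x3000 photo cut into 1024 px tiles with >= 128 px overlap.
    boxes = tile_boxes(4000, 3000)
    print(len(boxes), boxes[0], boxes[-1])  # → 20 (0, 0, 1024, 1024) (2976, 1976, 4000, 3000)
```

Each box is a `(left, upper, right, lower)` tuple, which is the format Pillow's `Image.crop` accepts, so the crops can be saved directly as training images. Spreading tiles evenly (rather than stepping by a fixed stride and squeezing in a final tile) avoids one tile that is almost a duplicate of its neighbour.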

Amazing, can you upload the model to Tensor.Art, please?

Sure!

This fine-tuned checkpoint is based on Flux dev de-distilled, thus requires a special ComfyUI workflow and won't work very well ...

A little side-by-side comparison to show the improvement.

you recreated this? holy moly! do you have a breakdown on how you did this?

not really. The higher the resolution, the longer it takes to render, obviously, and you get better results but also diminishing returns eventually. This was resized to 1080p, I believe, and took 5 min to render.

I sent the workflow above: input the video, play with the prompt and settings, and make sure to match the skip steps with the normal scheduler steps so they equal 20 steps of generation; anything under that breaks the generation.

and prompt basically everything between the two videos. I wish there were an LLM that could do that for me, to be more accurate.

I already tested some top Flux dev de-distilled models and was quite disappointed with the quality. Most of them are only good for realistic images; for style they are the same as or even worse than Flux dev, even though rendering takes twice as long because CFG > 1. Are there any de-distilled models you can recommend that could also be used for LoRA training and fine-tuning?

Awesome, thanks a ton Maki

yep

and what's the best tool for lip sync?

What would you guys recommend for Flux checkpoint training on Kohya for a character model? I have 40 images on a 3090 Ti (24 GB VRAM, 64 GB RAM). Should I use more than batch size 1? Is the current best practice not to caption our training sets and to use "ohwx woman"? Things change so fast in this world!

@Dr. Furkan Gözükara I'm currently using Flux Q8 on Kaggle. Is there something better I can use for multiple purposes (realism, style, etc.)? I saw something in chat about de-distilled versions?

nothing specific that I know of

batch size 1 is best

we have a 24 GB config; do fine-tuning / DreamBooth

not that i know

testing faster training on an 8x A6000 machine

any suggestions?

Is Hunyuan the current state-of-the-art img2vid model?

in both open-source and SaaS worlds?

Does anyone know the easiest way to fine-tune your own VLLM for image captioning? I've already got my own dataset, but there doesn't seem to be a straightforward way to actually carry out the fine-tuning itself. I know there are already some good captioners out there, but I want to fine-tune my own.