200 epochs are probably not needed for that many images.

In the end, it turned out to be 180 images. I'm using 100 epochs, and right now training is 50% done at a speed of 6.6 s per step. We'll see how the final result turns out.
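As a rough sanity check on those numbers, here is a small Python sketch that estimates total steps and training time. It assumes batch size 1 and that every image is seen once per epoch (no extra dataset repeats); `training_eta` is a hypothetical helper, not part of any trainer.

```python
import math


def training_eta(num_images: int, epochs: int, sec_per_step: float,
                 progress: float, batch_size: int = 1) -> tuple[int, float, float]:
    """Return (total_steps, total_hours, remaining_hours).

    Assumes each image is seen once per epoch (no extra dataset repeats).
    """
    if not 0.0 <= progress <= 1.0:
        raise ValueError("progress must be a fraction between 0 and 1")
    # A partial last batch still counts as one optimizer step.
    steps_per_epoch = math.ceil(num_images / batch_size)
    total_steps = steps_per_epoch * epochs
    total_hours = total_steps * sec_per_step / 3600.0
    remaining_hours = total_hours * (1.0 - progress)
    return total_steps, total_hours, remaining_hours


steps, total_h, left_h = training_eta(180, 100, 6.6, 0.5)
print(f"{steps} steps, ~{total_h:.1f} h total, ~{left_h:.1f} h left")
```

With 180 images, 100 epochs and 6.6 s/step this works out to 18,000 steps and about 33 hours in total, so roughly 16.5 hours remain at the 50% mark.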

What would be the best way to train a model for interior design? Let me explain.

I need to apply a specific product to a given room. For example, I want a Renaissance-style room with this product on the wall and this product on the floor.

I understand that in this case, it makes sense to train a highly structured model with all the individual products and, at the same time, the same products applied to different rooms. I assume that captions are important in this scenario.
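One way to make captions structured is to give every product its own trigger token and caption every image with the same template, both for product-only shots and for room shots. The sketch below assumes the trainer reads a caption file with the same base name as each image (a common convention; check your trainer's caption-extension setting). The tokens such as `prodwall01` and the template wording are hypothetical placeholders.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional


@dataclass
class Sample:
    image: str                   # image file name inside the dataset folder
    style: Optional[str] = None  # room style; None for product-only shots
    wall: Optional[str] = None   # hypothetical trigger token for a wall product
    floor: Optional[str] = None  # hypothetical trigger token for a floor product


def build_caption(s: Sample) -> str:
    """Build one consistent, structured caption for a training image."""
    if s.style is None:
        # Product-only image: caption just the product token(s).
        products = [p for p in (s.wall, s.floor) if p]
        return ", ".join(["product photo"] + products)
    parts = [f"a {s.style} style room"]
    if s.wall:
        parts.append(f"{s.wall} on the wall")
    if s.floor:
        parts.append(f"{s.floor} on the floor")
    return ", ".join(parts)


def caption_path(folder: Path, image: str, ext: str = ".txt") -> Path:
    # The caption shares the image's base name, e.g. room_001.jpg -> room_001.txt
    return folder / Path(image).with_suffix(ext).name


def write_captions(samples: list[Sample], folder: Path) -> None:
    folder.mkdir(parents=True, exist_ok=True)
    for s in samples:
        caption_path(folder, s.image).write_text(build_caption(s) + "\n", encoding="utf-8")
```

For example, `Sample("room_001.jpg", "renaissance", "prodwall01", "prodfloor07")` gets the caption `a renaissance style room, prodwall01 on the wall, prodfloor07 on the floor`, while a close-up `Sample("wall01_closeup.jpg", wall="prodwall01")` gets `product photo, prodwall01`. Keeping the wording fixed means only the style and the product tokens vary between captions.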

What would be your approach to training a model for this application?


This is pure research territory, so it's best to keep experimenting.

Hey @Furkan Gözükara SECourses, I'm enjoying the 5090 videos you've done and had a question. Are you planning a video comparing the 3090 vs. the 5090 for fine-tuning or LoRA training? Sorry if this was asked elsewhere; Discord didn't show whether it had been.

Yep, I will show it.

I also made a quick test.

I am waiting for the official PyTorch release.


Hi! I don't know which channel to post this in. I'm training a LoRA and wondering whether I can stop the training and resume from the latest save, like you can when training a checkpoint?

- Save training state (including optimizer states etc.) when saving models
- Save training state (including optimizer states etc.) on train end
- Resume from saved training state (path to "last-state" state folder)
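The options above mention optimizer states because resuming from the model weights alone is not the same as continuing the run. The toy sketch below, in plain Python rather than any real trainer's code, uses SGD with momentum on a one-parameter loss: restoring weights, momentum buffer and step counter reproduces the uninterrupted run exactly, while restoring only the weights does not. The state is serialized to a JSON string here as an assumption for brevity; a real trainer writes it to a state folder on disk.

```python
import json


def grad(w: float) -> float:
    # Gradient of the toy loss (w - 3)^2.
    return 2.0 * (w - 3.0)


def train(state: dict, steps: int, lr: float = 0.05, beta: float = 0.9) -> dict:
    """SGD with momentum; the momentum buffer 'm' is the optimizer state."""
    for _ in range(steps):
        state["m"] = beta * state["m"] + grad(state["w"])
        state["w"] -= lr * state["m"]
        state["step"] += 1
    return state


def save_state(state: dict) -> str:
    # Weights, optimizer state and step counter are saved together.
    return json.dumps(state)


def load_state(blob: str) -> dict:
    return json.loads(blob)


full = train({"w": 0.0, "m": 0.0, "step": 0}, 20)          # uninterrupted run
half = train({"w": 0.0, "m": 0.0, "step": 0}, 10)          # stopped at step 10
resumed = train(load_state(save_state(half)), 10)          # full state restored
weights_only = train({"w": half["w"], "m": 0.0, "step": 10}, 10)  # momentum lost
print(full["w"], resumed["w"], weights_only["w"])
```

Running 10 steps, saving, loading and running 10 more gives exactly the same result as 20 uninterrupted steps; resetting the momentum to zero after step 10 ends at a different weight.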


I have fine-tuned FLUX-dev with 180 images of a character, taken from various angles. Maybe it's not the best dataset, because it didn't have varied backgrounds: almost all the images were just of the face, without the body.

I'm having quite a few issues with prompts where I request stylization. I trained for 100 epochs and have 4 safetensors checkpoints; I've tested them all and I'm seeing the same issues across the board.

When I ask for a stylized result, like a cartoon or anime style, it struggles to adhere to the prompt and often generates random things instead.

What could be happening? Thanks a lot!


This is normal; nothing is wrong. You trained the model on realistic data, so it is inclined to generate realistic images.

18,000 steps is a lot; you may want to try earlier checkpoints.
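The 18,000 figure follows from 180 images × 100 epochs at batch size 1. Assuming the 4 safetensors files were saved at evenly spaced epochs (every 25 epochs), this hypothetical helper lists which epoch and step each one corresponds to, i.e. the earlier checkpoints worth testing:

```python
import math


def checkpoint_schedule(num_images: int, epochs: int, num_checkpoints: int,
                        batch_size: int = 1) -> list[tuple[int, int]]:
    """Return (epoch, global_step) pairs for evenly spaced saves ending at the last epoch."""
    if epochs % num_checkpoints:
        raise ValueError("this sketch assumes the saves are evenly spaced in epochs")
    steps_per_epoch = math.ceil(num_images / batch_size)
    every = epochs // num_checkpoints  # i.e. a "save every N epochs" setting
    return [(e, e * steps_per_epoch) for e in range(every, epochs + 1, every)]


print(checkpoint_schedule(180, 100, 4))
```

Under that assumption, `checkpoint_schedule(180, 100, 4)` returns `[(25, 4500), (50, 9000), (75, 13500), (100, 18000)]`.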

You may also try different prompt engineering: generate a lot of images, cherry-pick the stylized ones into a secondary dataset, and include it when training a second-generation model so that the training data contains more stylized images.

Yes, I did that.

I did imagine that. But I watched the tutorial by @Furkan Gözükara SECourses and saw that he had some incredibly stylized images... I think he also trained only on realistic images. That's where my doubt comes from.

Yes, but I never tried it.

The option for it is here.

Yes, FLUX is very realistic.

Therefore, I recommend two-step training for stylized images.

First train, then generate some good stylized images, and retrain on them.
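The second step mostly comes down to assembling a new dataset from the original photos plus the cherry-picked stylized generations. A minimal sketch, assuming each image has a same-named `.txt` caption (captions for the generated images would describe their style, e.g. "anime style"); the `gen2_` prefix and the helper names are hypothetical:

```python
import shutil
from pathlib import Path


def plan_second_gen_dataset(original_images: list[Path], picked_images: list[Path],
                            out_dir: Path, caption_ext: str = ".txt") -> list[tuple[Path, Path]]:
    """Return (source, destination) copy pairs for images and their same-named captions."""
    plan: list[tuple[Path, Path]] = []
    for img in original_images:
        # First-generation real photos keep their names.
        plan.append((img, out_dir / img.name))
        plan.append((img.with_suffix(caption_ext), out_dir / (img.stem + caption_ext)))
    for img in picked_images:
        # Cherry-picked stylized generations get a prefix so names cannot clash.
        stem = "gen2_" + img.stem
        plan.append((img, out_dir / (stem + img.suffix)))
        plan.append((img.with_suffix(caption_ext), out_dir / (stem + caption_ext)))
    destinations = [dst for _, dst in plan]
    if len(set(destinations)) != len(destinations):
        raise ValueError("two files would be copied to the same destination")
    return plan


def apply_plan(plan: list[tuple[Path, Path]]) -> None:
    for src, dst in plan:
        if src.exists():  # skip captions that were never written
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)
```

Building the plan first and copying second makes it easy to review how many stylized images end up in the mix before retraining.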

I had 256 images and the dataset was varied. Even so, I had a hard time and needed to find the right prompts.

Thank you, sir!

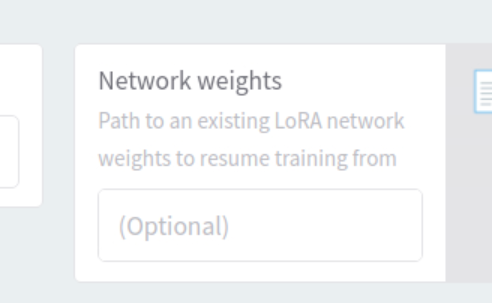

This is the grid test I am analyzing to find a newer FLUX DEV training setup. It doesn't even include the first 10 different trainings I made. 1176 images. I think we are going to get a better workflow than the current one, at the cost of more VRAM and more training time.


In case someone is interested in training LoRAs for Lumina2: https://huggingface.co/sayakpaul/trained-lumina2-lora-yarn, https://github.com/huggingface/diffusers/blob/main/examples/dreambooth/README_lumina2.md (the code).


Is it LoRA training or checkpoint training?

Fine-tuning at the moment.

After that, I plan to do LoRA.

For multi-GPU training, does the setup need 80 GB of VRAM in TOTAL, or does EACH GPU need 80 GB of VRAM?

For fine-tuning,

each GPU needs 80 GB.

For LoRA it's not necessary; 48 GB GPUs work great.

Gotcha, thank you!

Out of interest, what causes the per-GPU VRAM requirement to jump up so much? I'm just curious what's going on in the background that makes it go from 24 GB of VRAM for single-GPU training to 80 GB of VRAM per GPU for multi-GPU training.

Extremely good question.

I asked Kohya about this.

He was also not sure.

How does multi-GPU training work, anyway?

Each GPU has a copy of the model being trained and performs training steps independently, so how do we not end up with two separate models?


They can't possibly synchronize the model state after each step; that would take ages (minutes).

And if the models are just merged as a last step, would that mean the final model could have questionable quality (missed local minima and such)?

With diffusion models,

each model is loaded fully into VRAM,

like FLUX, SD, etc.,

so we have an exact replica.

Yeah, but how does the state of each model loaded onto a GPU sync up?

So the training results in a single model, not multiple models.

They are synced at each step.

So it effectively works as an increased batch size.
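A toy simulation of standard data-parallel training (the scheme used by, for example, PyTorch's DistributedDataParallel) shows why this produces one model rather than several: every replica starts from identical weights, each computes gradients on its own shard of the batch, the gradients are averaged across replicas every step (an all-reduce), and every replica applies the same update. This is a plain-Python sketch on a one-parameter linear model under those assumptions, not how Kohya or any specific trainer implements it.

```python
Sample = tuple[float, float]  # (x, y) pair


def shard_gradient(w: float, shard: list[Sample]) -> float:
    # Mean gradient of the squared error (w*x - y)^2 over this worker's shard.
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_train(data: list[Sample], num_workers: int, steps: int,
                        lr: float = 0.01, w0: float = 0.0) -> list[float]:
    """Return the final weight held by each replica."""
    if len(data) % num_workers:
        raise ValueError("this sketch assumes equal-sized shards")
    per = len(data) // num_workers
    shards = [data[i * per:(i + 1) * per] for i in range(num_workers)]
    replicas = [w0] * num_workers  # every GPU starts from an exact copy
    for _ in range(steps):
        # Each worker computes a gradient on its own shard (in parallel on real GPUs).
        local = [shard_gradient(w, s) for w, s in zip(replicas, shards)]
        # All-reduce: average the gradients so every worker gets the same value.
        avg = sum(local) / num_workers
        # Identical update on identical weights keeps the replicas in lockstep.
        replicas = [w - lr * avg for w in replicas]
    return replicas


data = [(float(x), 3.0 * x) for x in range(1, 9)]
print(data_parallel_train(data, num_workers=2, steps=50))
print(data_parallel_train(data, num_workers=1, steps=50))
```

With 8 samples split across 2 workers, both replicas end with the same weight, matching a single worker that trains on all 8 samples per step. With equal-sized shards the averaged gradient equals the full-batch mean gradient, which is why 2 GPUs at batch size 4 behave like one GPU at batch size 8.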