Yeah, don't compare the older TeaCache with the newer TeaCache :d

The newer one is a different implementation, and it's better than the older one.

Did you read my message?

They optimized VRAM. I will check VRAM usage tomorrow.

That could be why it's using less.

Or maybe

we broke something.

Remind me tomorrow :d

I've got to sleep.

Yes, of course the new TeaCache is better. My intent was not to compare the two TeaCaches. It's just that from v31 to v32 there's the new TeaCache, which seems great and certainly has better VRAM usage, but there's a performance drop on 24/32 GB GPUs.

good night!

The first test was not good at all, but I made a mistake: I used a 9:16 photo and generated a 16:9 video. I'm making another video, but it will take some time.

A frame of the first video...

@Dr. Furkan Gözükara Aspect ratio doesn't work in the new update.

The second video finished, this time with very good quality.

It's strange. The first version of TeaCache started slow and ended very fast. The new update starts fast and ends very slow. I think the new update is a bit slower overall. I'll keep testing tomorrow.

Thanks

GPU presets are broken too.

Fixing now.

The GPU preset is broken, that's probably why. Fixing now.

Thanks, good luck!

Fixed.

Running Windows_Update.bat should be sufficient.

Fixed, thank you.

Alright, gonna try it.

From v32 to v33: aspect ratio works correctly now, the GPU uses 26.9 GB of VRAM instead of 18.5 GB with the 32 GB preset, and for the exact same generation with TeaCache 0.15 on 14B: 310 s (v31), 418 s (v32), 402 s (v33).
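To put those timings in relative terms, here's a quick sketch that only uses the numbers reported in the message above (same 14B generation, TeaCache 0.15, 32 GB preset). It just computes the percent change in wall-clock time against v31 and the relative VRAM increase; nothing here is read from the app itself.

```python
# Generation times (seconds) reported above for the same 14B run, TeaCache 0.15
times = {"v31": 310, "v32": 418, "v33": 402}
baseline = times["v31"]


def pct_change(value, base):
    """Percent change of `value` relative to `base` (positive = larger/slower)."""
    return (value - base) / base * 100


for version, t in times.items():
    print(f"{version}: {t}s ({pct_change(t, baseline):+.1f}% vs v31)")

# v33 relative to v32 (negative = faster)
print(f"v33 vs v32: {pct_change(times['v33'], times['v32']):+.1f}%")

# VRAM with the 32 GB preset, as reported: 18.5 GB before, 26.9 GB after
print(f"VRAM: {pct_change(26.9, 18.5):+.1f}%")
```

With these figures, v32 is roughly 35% slower than v31 and v33 roughly 30% slower, while v33 claws back about 4% versus v32 and uses about 45% more VRAM.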

The presets can be tuned to use more VRAM; it looks like there have been some VRAM improvements since I made them :d

I need to fix the leftover RAM issue though. Currently it doesn't clear properly after generation.

Yes, it's great, gg! On your 5090, roughly how much VRAM is used during the process?

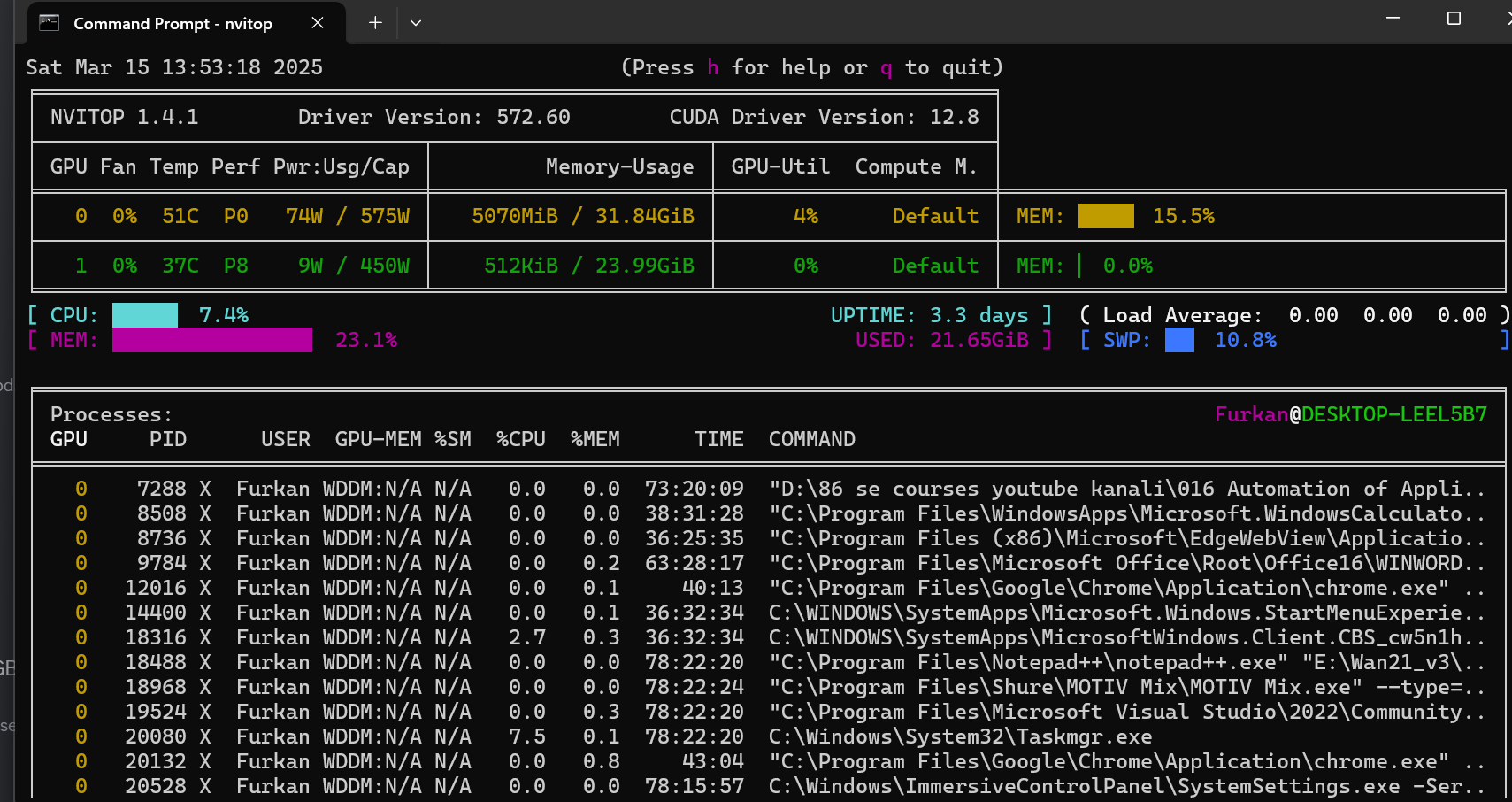

Well, I need to restart my PC and test.

Currently, without the Wan app running, I'm using 5 GB.

Okay, 5 GB without Wan seems pretty high! I'm at 1.4 GB without it.

Yes. I try to set the presets assuming 2 GB is free :d

I need to restart my PC, lots of apps are running :d

That would be perfect.

Yeah haha

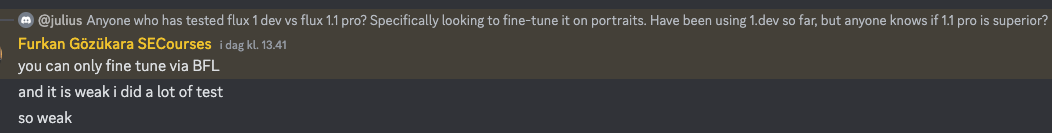

@Dr. Furkan Gözükara Hey, have you tested FLUX 1.1 Pro? Is there any reason we're still fine-tuning on FLUX.1 dev?

Just copying Furkan's answer here from another channel for everybody else.

Has anyone tried Google's Gemini 2.0 Flash?

Saw some posts about it on Reddit; it would've been good a year ago.

@Dr. Furkan Gözükara For fine-tuning FLUX with Kohya, do you recommend using the models from OwlMaster/FLUX_LoRA_Train (https://huggingface.co/OwlMaster/FLUX_LoRA_Train/tree/main), or the models from your unified downloader (ae from black-forest-labs/FLUX.1-schnell, t5xxl from OwlMaster/SD3New, clip_l from OwlMaster/zer0int-CLIP-SAE-ViT-L-14)?

I'm using it, but not for images :d

I recommend zer0int-CLIP-SAE-ViT-L-14 as the CLIP model.

The rest are the same.

Also, I recommend using flux1-dev.safetensors.

For what?