GPU VRAM Usage Table With Respect to the Number of Persistent Params

All tests were run on a secondary GPU, so the figures are not inflated by other processes' VRAM usage.

Therefore, make sure you have at least this much free VRAM before starting the Wan 2.1 SECourses App.
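One way to check free VRAM before launching is to query `nvidia-smi`. The sketch below is only an illustration: it assumes `nvidia-smi` is on the PATH, that the MB figures in this document are in the same unit `nvidia-smi` reports, and the function names (`parse_free_mb`, `query_free_mb`, `has_enough_vram`) are hypothetical helpers, not part of the app.

```python
import subprocess


def parse_free_mb(nvidia_smi_output: str) -> list[int]:
    """Parse nvidia-smi output with one free-memory value per line (one line per GPU)."""
    return [int(line.strip()) for line in nvidia_smi_output.splitlines() if line.strip()]


def query_free_mb() -> list[int]:
    """Ask nvidia-smi for the free memory of every GPU (assumes nvidia-smi is installed)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.free", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_free_mb(result.stdout)


def has_enough_vram(free_mb: int, required_mb: int) -> bool:
    """True when the free VRAM covers the figure listed for the chosen setting."""
    return free_mb >= required_mb


# Example (requires an NVIDIA GPU; index 1 would be a secondary GPU):
#   free = query_free_mb()
#   print(has_enough_vram(free[1], 22000))
```

Comparing the reported free memory against the figure listed for the chosen persistent params value shows whether that setting is likely to fit.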

1280x720 px, 81 frames, 14b text to video model - BF16 precision (torch.bfloat16)

| Persistent params | VRAM usage (MB) | GPU tier |
| --- | --- | --- |
| 0 | 14168 | Below 24 GB GPUs |
| 1,000,000,000 | 15658 | |
| 2,000,000,000 | 17664 | |
| 3,000,000,000 | 20134 | |
| 3,750,000,000 | 21134 | |
| 4,000,000,000 | 21500 | |
| 4,250,000,000 | 22000 | 24 GB GPUs |
| 8,250,000,000 | 29870 | 32 GB GPUs |
| 22,000,000,000 | 41700 | 48 GB GPUs |
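The measurements above can be used to pick a persistent params value for a given amount of free VRAM. The following is a minimal sketch, assuming the figures are copied correctly from the BF16 text-to-video measurements; `T2V_BF16` and `largest_fitting` are hypothetical names introduced for illustration.

```python
# (persistent params, VRAM usage in MB), copied from the BF16 text-to-video measurements
T2V_BF16 = [
    (0, 14168),
    (1_000_000_000, 15658),
    (2_000_000_000, 17664),
    (3_000_000_000, 20134),
    (3_750_000_000, 21134),
    (4_000_000_000, 21500),
    (4_250_000_000, 22000),
    (8_250_000_000, 29870),
    (22_000_000_000, 41700),
]


def largest_fitting(measurements, free_mb):
    """Return the largest measured persistent params value that fits in free_mb, or None."""
    fitting = [params for params, used_mb in measurements if used_mb <= free_mb]
    return max(fitting) if fitting else None


print(largest_fitting(T2V_BF16, 22500))  # 4250000000
print(largest_fitting(T2V_BF16, 14000))  # None: even 0 persistent params does not fit
```

The lookup only returns values that were actually measured; it does not guess at settings between rows.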

1280x720 px, 81 frames, 14b text to video model - FP8 precision (torch.float8_e4m3fn)

| Persistent params | VRAM usage (MB) | GPU tier |
| --- | --- | --- |
| 0 | 14168 | Below 24 GB GPUs |
| 1,000,000,000 | 14918 | |
| 8,000,000,000 | 21260 | |
| 8,750,000,000 | 21980 | 24 GB GPUs |
| 22,000,000,000 | x | |
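A quick piece of arithmetic shows how much extra VRAM the first billion persistent params costs in each precision for the text-to-video model. This is only a sketch over the first two measured points of each list; `mb_per_billion` is a hypothetical helper, and the slope is not constant across the measurements (for example, going from 1B to 2B in BF16 costs 2006 MB).

```python
def mb_per_billion(p0, mb0, p1, mb1):
    """Extra VRAM in MB per additional billion persistent params between two measurements."""
    return (mb1 - mb0) / ((p1 - p0) / 1_000_000_000)


# First two measured points of the text-to-video model in each precision
bf16_cost = mb_per_billion(0, 14168, 1_000_000_000, 15658)
fp8_cost = mb_per_billion(0, 14168, 1_000_000_000, 14918)

print(bf16_cost)  # 1490.0
print(fp8_cost)   # 750.0
```

The FP8 cost is roughly half the BF16 cost, which is consistent with FP8 storing one byte per parameter versus two bytes for BF16.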

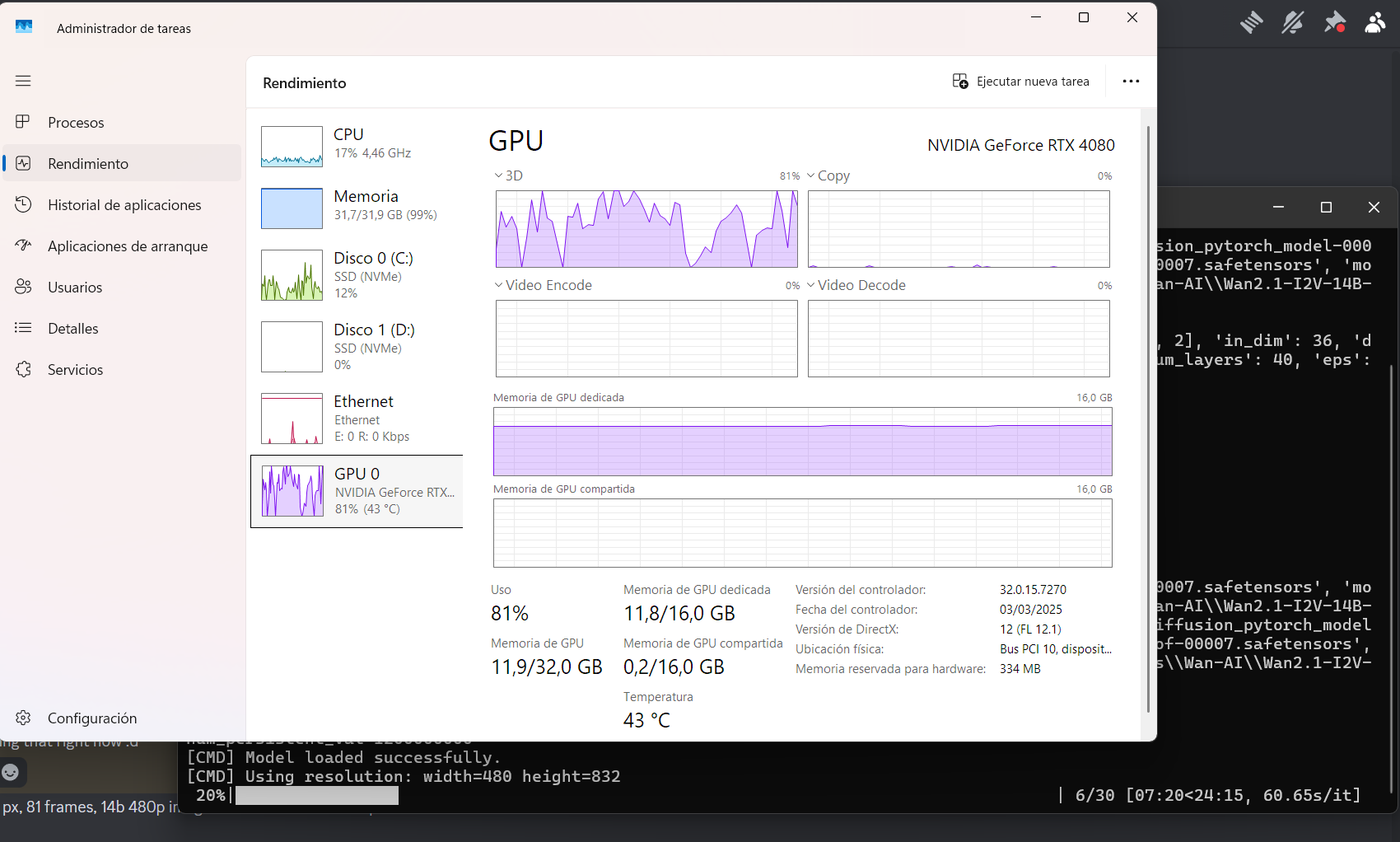

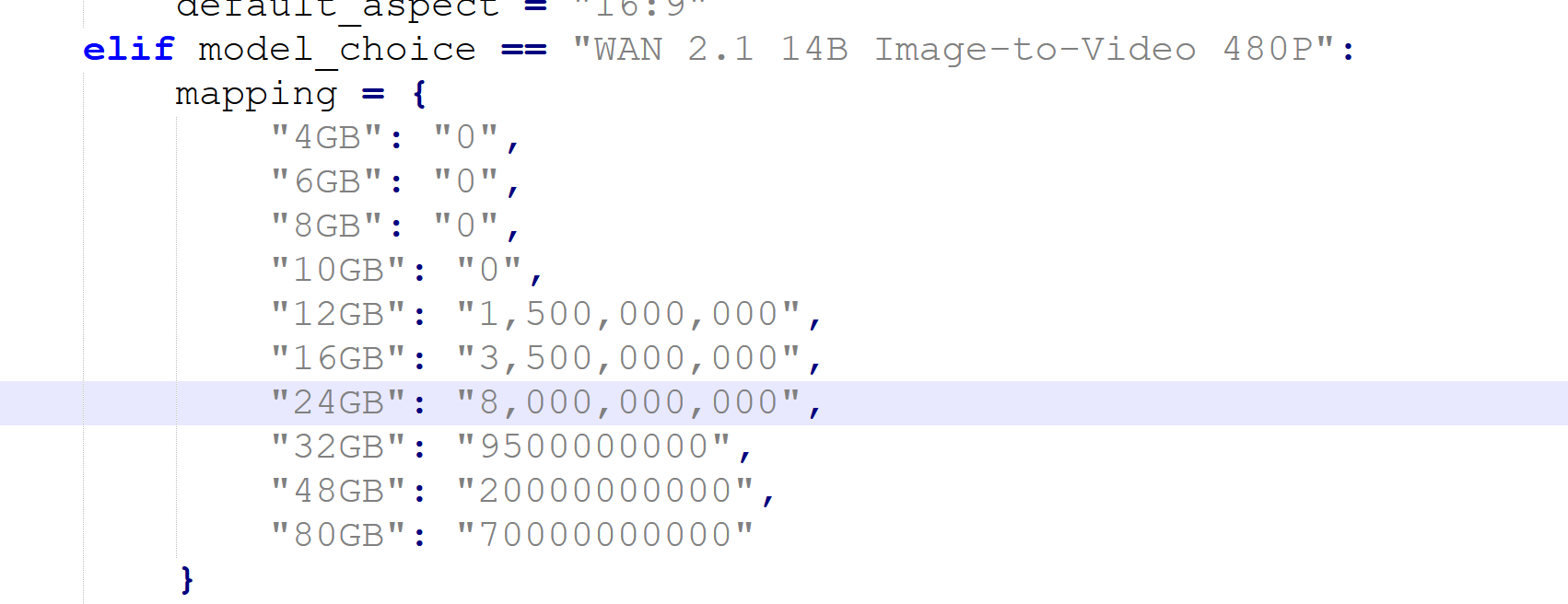

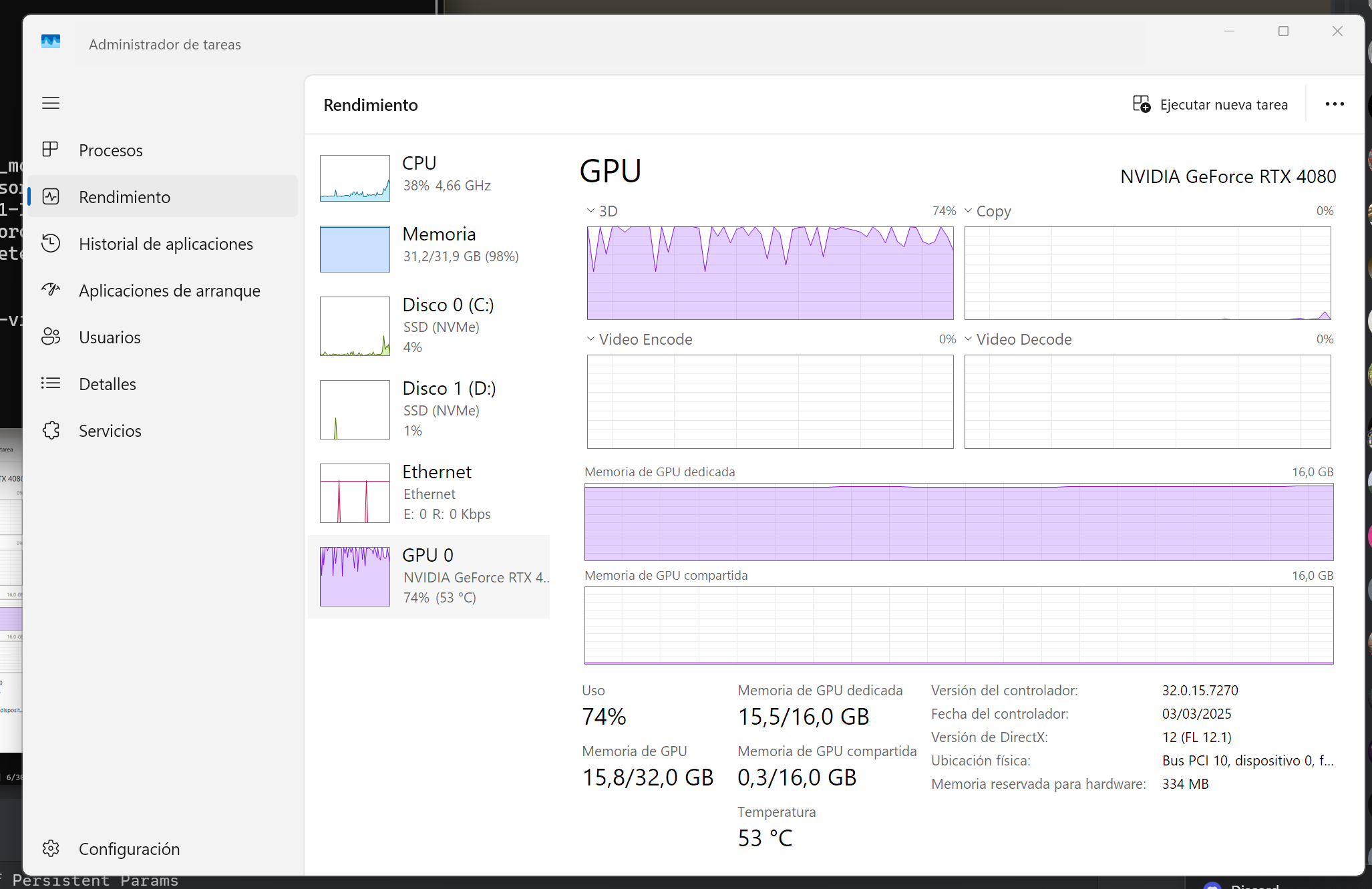

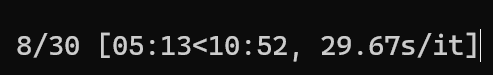

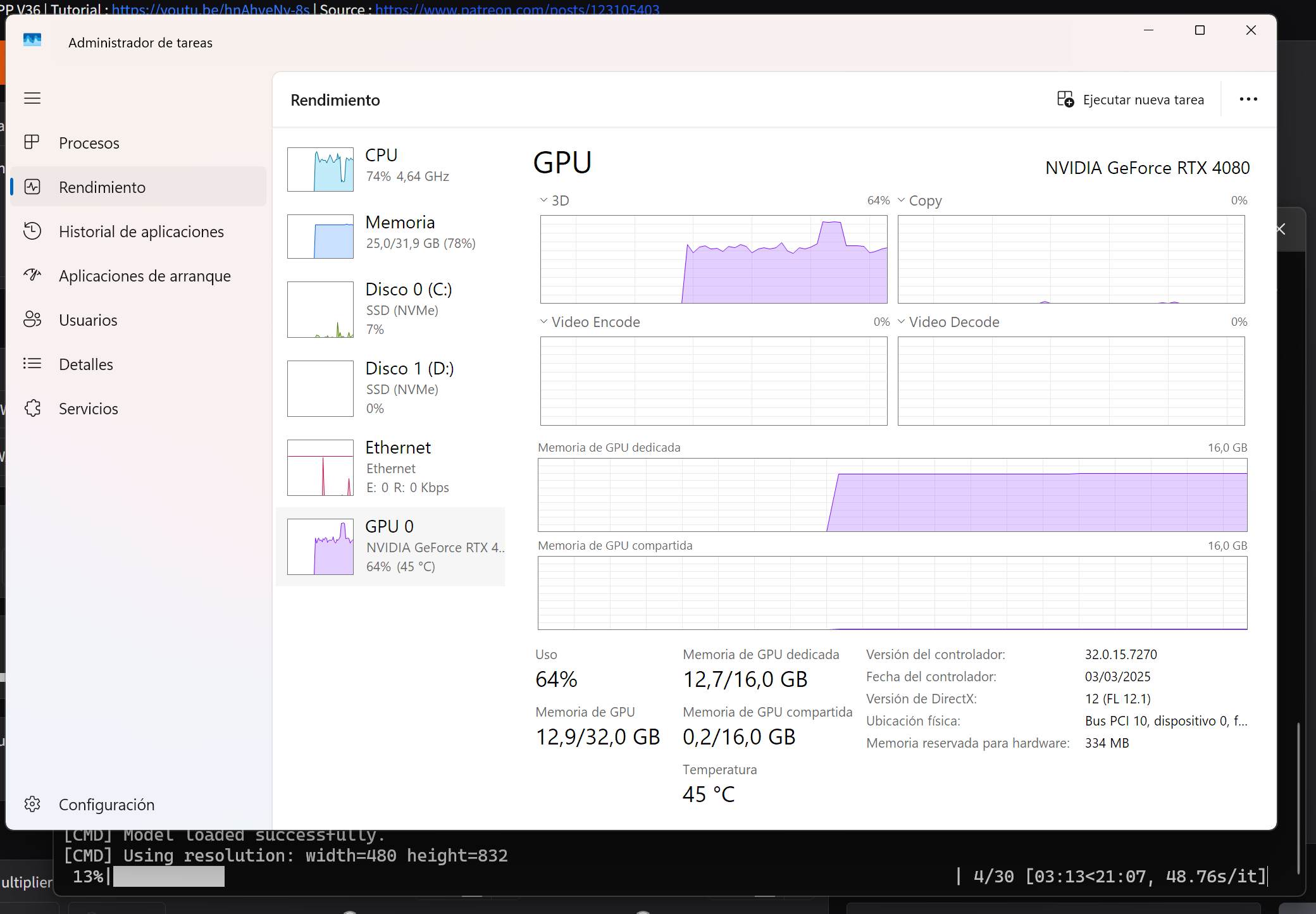

480x832 px, 81 frames, 14b 480p image to video model - BF16 precision (torch.bfloat16)

| Persistent params | VRAM usage (MB) | GPU tier |
| --- | --- | --- |
| 0 | 8618 | Below 12 GB GPUs |
| 1,000,000,000 | 9354 | |
| 1,500,000,000 | 10090 | 12 GB GPUs |
| 2,000,000,000 | 11370 | |
| 3,000,000,000 | 13080 | |
| 3,500,000,000 | 14038 | 16 GB GPUs |
| 4,000,000,000 | 15140 | |
| 8,000,000,000 | 22100 | 24 GB GPUs |
| 12,000,000,000 | 30060 | 32 GB GPUs |
| 22,000,000,000 | 38300 | 48 GB GPUs |
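For a persistent params value that falls between two measured rows, a rough estimate can be obtained by linear interpolation. This is a sketch under a loud assumption: nothing in the measurements guarantees that VRAM usage grows linearly between points, so treat the result as a ballpark figure and leave some headroom. `I2V_480P_BF16` and `estimate_mb` are hypothetical names.

```python
from bisect import bisect_right

# (persistent params, VRAM usage in MB), copied from the 480p BF16 image-to-video measurements
I2V_480P_BF16 = [
    (0, 8618),
    (1_000_000_000, 9354),
    (1_500_000_000, 10090),
    (2_000_000_000, 11370),
    (3_000_000_000, 13080),
    (3_500_000_000, 14038),
    (4_000_000_000, 15140),
    (8_000_000_000, 22100),
    (12_000_000_000, 30060),
    (22_000_000_000, 38300),
]


def estimate_mb(measurements, params):
    """Linearly interpolate VRAM usage between the two nearest measured points (an assumption)."""
    xs = [p for p, _ in measurements]
    if not xs[0] <= params <= xs[-1]:
        raise ValueError("persistent params value is outside the measured range")
    i = bisect_right(xs, params)
    if i == len(xs):
        # params equals the last measured point
        return float(measurements[-1][1])
    (p0, m0), (p1, m1) = measurements[i - 1], measurements[i]
    return m0 + (m1 - m0) * (params - p0) / (p1 - p0)


print(estimate_mb(I2V_480P_BF16, 6_000_000_000))  # 18620.0
```

Values outside the measured range raise an error rather than being extrapolated.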

480x832 px, 81 frames, 14b 480p image to video model - FP8 precision (torch.float8_e4m3fn)

| Persistent params | VRAM usage (MB) | GPU tier |
| --- | --- | --- |
| 0 | 8618 | Below 12 GB GPUs |
| 2,500,000,000 | 9794 | 12 GB GPUs |
| 7,500,000,000 | 14850 | 16 GB GPUs |
| 8,000,000,000 | 15392 | |
| 15,000,000,000 | 21700 | 24 GB GPUs |
| 22,000,000,000 | 23080 | 32 GB GPUs |

720x1280 px, 81 frames, 14b 720p image to video model - BF16 precision (torch.bfloat16)

| Persistent params | VRAM usage (MB) | GPU tier |
| --- | --- | --- |
| 0 | 18326 | Below 24 GB GPUs |
| 2,000,000,000 | 20360 | |
| 3,000,000,000 | 22010 | 24 GB GPUs |
| 6,000,000,000 | 28380 | |
| 6,500,000,000 | 29330 | |
| 6,750,000,000 | 29790 | 32 GB GPUs |
| 16,000,000,000 | 47030 | 48 GB GPUs |
| 22,000,000,000 | 47800 | 80 GB GPUs |

720x1280 px, 81 frames, 14b 720p image to video model - FP8 precision (torch.float8_e4m3fn)

| Persistent params | VRAM usage (MB) | GPU tier |
| --- | --- | --- |
| 0 | 18326 | Below 24 GB GPUs |
| 3,000,000,000 | 19763 | |
| 6,000,000,000 | 22020 | 24 GB GPUs |
| 14,000,000,000 | 29840 | |
| 15,000,000,000 | 30790 | 32 GB GPUs |
| 22,000,000,000 | 32013 | 48 GB GPUs |
