What do you see for RAM and GPU?

I rebooted.

23.4 GB on the GPU and RAM = 3.8

In Task Manager's Performance tab my GPU is at the bottom; it used to be at the top lol

[CMD] Model loaded successfully.

[CMD] Using resolution: width=480 height=832

Only

0%| | 0/50 [00:00<?, ?it/s]

WAN 2.1 14B Image-to-Video 480P

[CMD] Using resolution: width=480 height=832

Only `num_frames % 4 != 1` is acceptable. We round it up to 121.

0%| | 0/50 [00:00<?, ?it/s]

WAN 2.1 14B Image-to-Video 480P
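The rounding message suggests the pipeline only accepts frame counts of the form 4k + 1 (likely because the video VAE compresses time 4x after the first frame). A minimal sketch of that rule, assuming "round up to the next 4k + 1" is all it does; the function name is hypothetical and this is not the tool's own code:

```python
def round_up_to_valid_frames(num_frames: int) -> int:
    """Round num_frames up to the nearest value of the form 4k + 1."""
    if num_frames % 4 == 1:
        return num_frames  # already valid, e.g. 81 = 4 * 20 + 1
    # Step past the next multiple of 4 above (num_frames - 1), then add one.
    return ((num_frames - 1) // 4 + 1) * 4 + 1

for requested in (81, 118, 120, 121):
    print(requested, "->", round_up_to_valid_frames(requested))
```

So a request of 118, 119 or 120 frames would all come out as 121, while 81 passes through unchanged.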

Not working right for me.

The previous version was OK.

How many frames do you have?

What do you see in CMD, and for RAM and GPU?

It gave me a runtime error, something about tensors being on the CPU and the GPU.

And the aspect ratio keeps switching from portrait back to 16:9.

Knocked it down to 81 frames.

Using 0.5 RAM.

But I've used more than 81 frames before and produced videos up to 16 seconds long.
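For the 81-frame vs 16-second comparison, here is the rough arithmetic, assuming a 16 fps output (an assumption: the tool in use may save at another frame rate) and the 4k + 1 frame rule from the log above. Both helper names are hypothetical:

```python
import math

FPS = 16  # assumption: default Wan 2.1 output frame rate

def clip_seconds(num_frames: int, fps: int = FPS) -> float:
    """Length in seconds of a clip with num_frames frames played at fps."""
    return num_frames / fps

def frames_for_seconds(seconds: float, fps: int = FPS) -> int:
    """Smallest 4k + 1 frame count that covers at least `seconds` of video."""
    n = math.ceil(seconds * fps)
    if n % 4 != 1:
        n = ((n - 1) // 4 + 1) * 4 + 1  # round up to the next 4k + 1
    return n

print(f"81 frames  -> {clip_seconds(81):.2f} s")        # 5.06 s
print(f"16 seconds -> {frames_for_seconds(16)} frames")  # 257 frames
```

Under that assumption, a 16-second clip needs roughly three times the frames of an 81-frame run, which is why memory use grows so quickly with length.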

21.97 it/s

MIDI: Multi-Instance Diffusion for Single Image to 3D Scene Generation https://huanngzh.github.io/MIDI-Page/

MIDI is a novel paradigm for compositional 3D scene generation from a single image, extending pre-trained 3D object generation models to multi-instance diffusion models for simultaneous generation of multiple 3D instances.

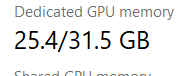

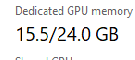

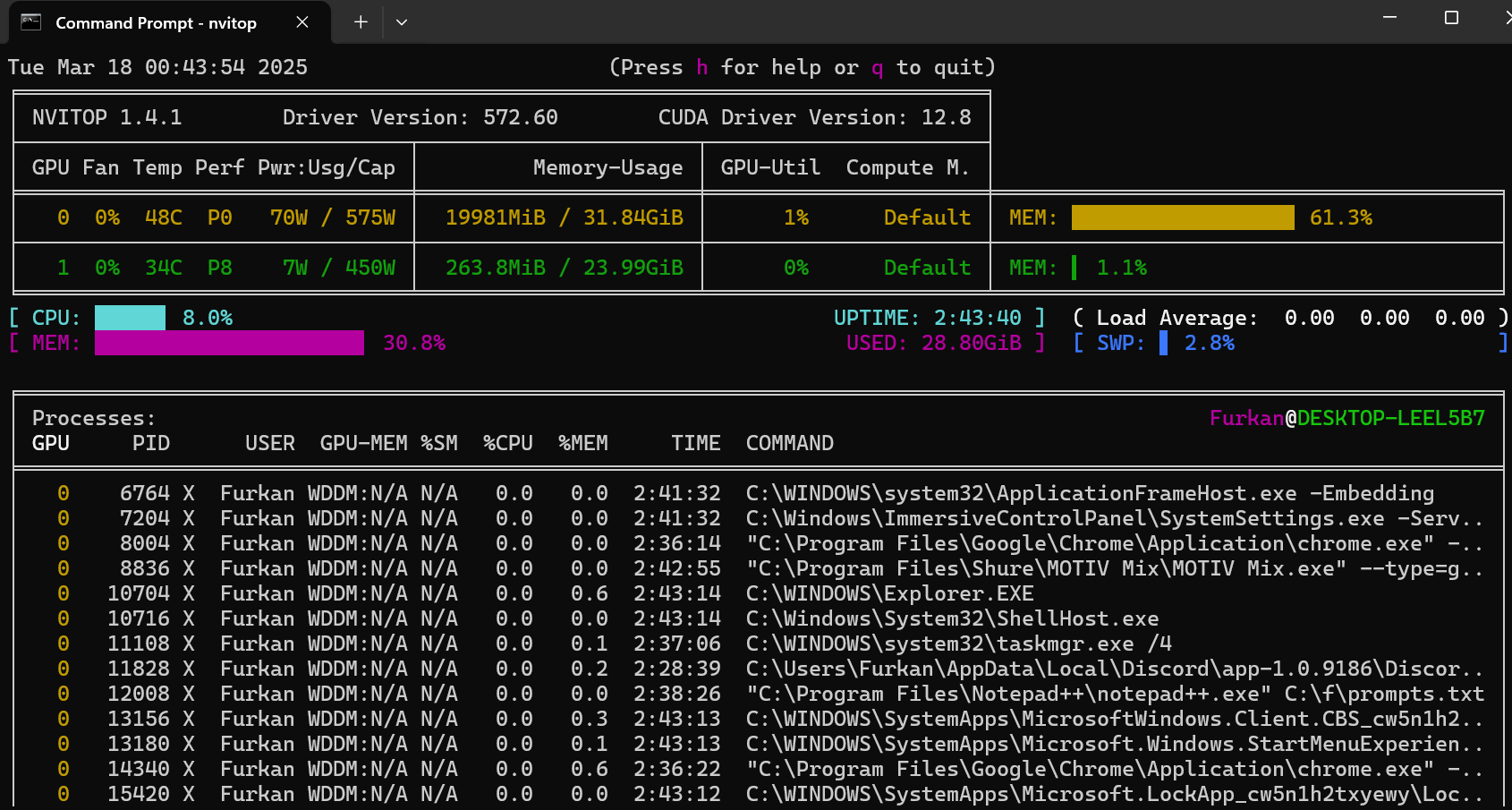

@Furkan Gözükara SECourses Are you seeing the full 32 GB in Windows from your 5090? Mine only reports 31.5 GB, and it's the difference between fitting the full Wan model or not. My 3090 reports 24.0 in the same Task Manager, so I'm thinking it's not the 1024 calculation.

Which is your primary GPU, the one connected to your display?

Onboard Intel. Both cards are headless. The 3090 shows the full 24.0.

So that's why I was curious why the total was different.

Same here, 31.5.

They stole VRAM.

Yeah, something is different between 30xx and 50xx. My 3090 = 24.0 in Task Manager, 5090 = 31.5 in Task Manager, and I can't load the full FP16 WAN 2.1 model in 32 GB because it's 500 MB short! It spills over into shared GPU memory, which slows it down a lot.
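The "not the 1024 calculation" reasoning can be checked with a little arithmetic: mixing up decimal GB and binary GiB shifts a 32 figure by more than 2 in either direction, far more than the missing 0.5, and the same Task Manager shows the 3090 at a clean 24.0. A small sketch; the last line assumes Task Manager's figures are in binary units:

```python
GB = 10**9   # decimal gigabyte
GiB = 2**30  # binary gibibyte ("the 1024 calculation")

def gb_to_gib(x: float) -> float:
    return x * GB / GiB

def gib_to_gb(x: float) -> float:
    return x * GiB / GB

for label, value in [("32 GB in GiB", gb_to_gib(32)),
                     ("32 GiB in GB", gib_to_gb(32)),
                     ("24 GiB in GB", gib_to_gb(24))]:
    print(f"{label}: {value:.2f}")
# 32 GB in GiB: 29.80
# 32 GiB in GB: 34.36
# 24 GiB in GB: 25.77

# Assuming binary units, the gap between 32 and 31.5 is:
print(f"missing: {(32 - 31.5) * 1024:.0f} MiB")  # missing: 512 MiB
```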

Shameless NVIDIA.

But FLUX training fully fits.

@Furkan Gözükara SECourses have you found the perfect TeaCache settings for Wan2.1? With no visible quality loss to me: TeaCache at 0.250 on the T2V 14B model, with Sage and torch acceleration, I got a 49-frame Wan video down to 101 seconds. I can get it faster, but then I start to notice degradation.

0.15 is nice.

But 0.2 caused some degradation.

But if it's working for you, great.
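Why a higher TeaCache threshold is faster but riskier: as we understand the idea, TeaCache accumulates an estimate of how much the model input changes between denoising steps (a relative L1 distance, rescaled by model-specific polynomial coefficients, hence the separate "14B coefficients"), and while that running total stays under the threshold it reuses the cached result instead of running the transformer. The sketch below is a simplified illustration only: rescaling is omitted, the per-step changes are made-up numbers, and `teacache_plan` is a hypothetical name, not the real Wan integration:

```python
from typing import List

def teacache_plan(rel_changes: List[float], threshold: float) -> List[bool]:
    """Per denoising step, True = run the full transformer, False = reuse the
    cached residual. rel_changes[i] stands in for the (rescaled) relative L1
    change of the model input from step i-1 to step i."""
    plan: List[bool] = []
    accumulated = 0.0
    for step, change in enumerate(rel_changes):
        if step == 0:
            plan.append(True)  # nothing cached yet, always compute
            continue
        accumulated += change
        if accumulated < threshold:
            plan.append(False)  # estimated drift still small: skip
        else:
            plan.append(True)   # drift too large: recompute and reset
            accumulated = 0.0
    return plan

# Hypothetical per-step changes for a 10-step run (not measured data).
changes = [0.0] + [0.07] * 9
for t in (0.15, 0.25):
    plan = teacache_plan(changes, t)
    print(f"threshold {t}: {sum(plan)} of {len(plan)} steps computed")
# threshold 0.15: 4 of 10 steps computed
# threshold 0.25: 3 of 10 steps computed
```

Each skipped step reuses stale output, so raising the threshold trades more skipped steps (speed) for more accumulated error (visible degradation).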

Will you be doing Wan/Hunyuan LoRA training tests? I've got Hunyuan down well, but Wan LoRAs are not coming out well for me with musubi-tuner, and I can't tell if it's a 5090 problem. So much instability with CUDA 12.8, Sage, and Triton 3.3.

I will do Wan 2.1 LoRA first.

I think this number changes if you select the 14B coefficients. This is also a bit confusing. I've also noticed that TorchCompile kills LoRAs.

0.15 only changes with the 1.3B model.

So you like 0.15 for I2V and T2V 14B, right? I didn't buy a 5090 to run 1.3B models.

Will you use musubi-tuner? I just can't get good LoRAs for Wan with the same dataset I used for good LoRAs on Hunyuan. I actually think captions might be required this time for more flexibility during prompting, but I'm not sure yet. I didn't caption Hunyuan and those LoRAs came out good, sometimes better than FLUX with Kohya.

Yes, 0.15 for all 14B models.

Yes, I plan to use Musubi.

Lots of people are using it, with lots of bug fixes.

Maybe I need to try again with newer code. Also, this guy just created a GUI for it: https://github.com/Kvento/musubi-tuner-wan-gui and there is also a Gradio GUI from TTPlanet: https://github.com/TTPlanetPig/Gui_for_musubi-tuner

I plan to make my own Gradio GUI.

I just modified TTPlanet's one; it's a good starting point. Let me know how your Wan LoRA success is. I'm going to keep experimenting and will let you know what I discover too. I think there is a reason character LoRAs aren't being released as fast as "motion/animation" LoRAs.

Sure. I am testing TeaCache 0.2 right now.

It takes less than 10 minutes on an H100 at the highest quality, 20 steps.

Also, TorchCompileModelWan (for wan21) breaks LoRAs on 50xx but not on 30xx. Must be Triton 3.3 or the torch nightlies. I just tested: the same workflow works fine on 30xx but not on 50xx, with TorchCompile running on both.

Very likely.

Wow, TeaCache 0.2 is not bad.

And super fast.