Hi Furkan,

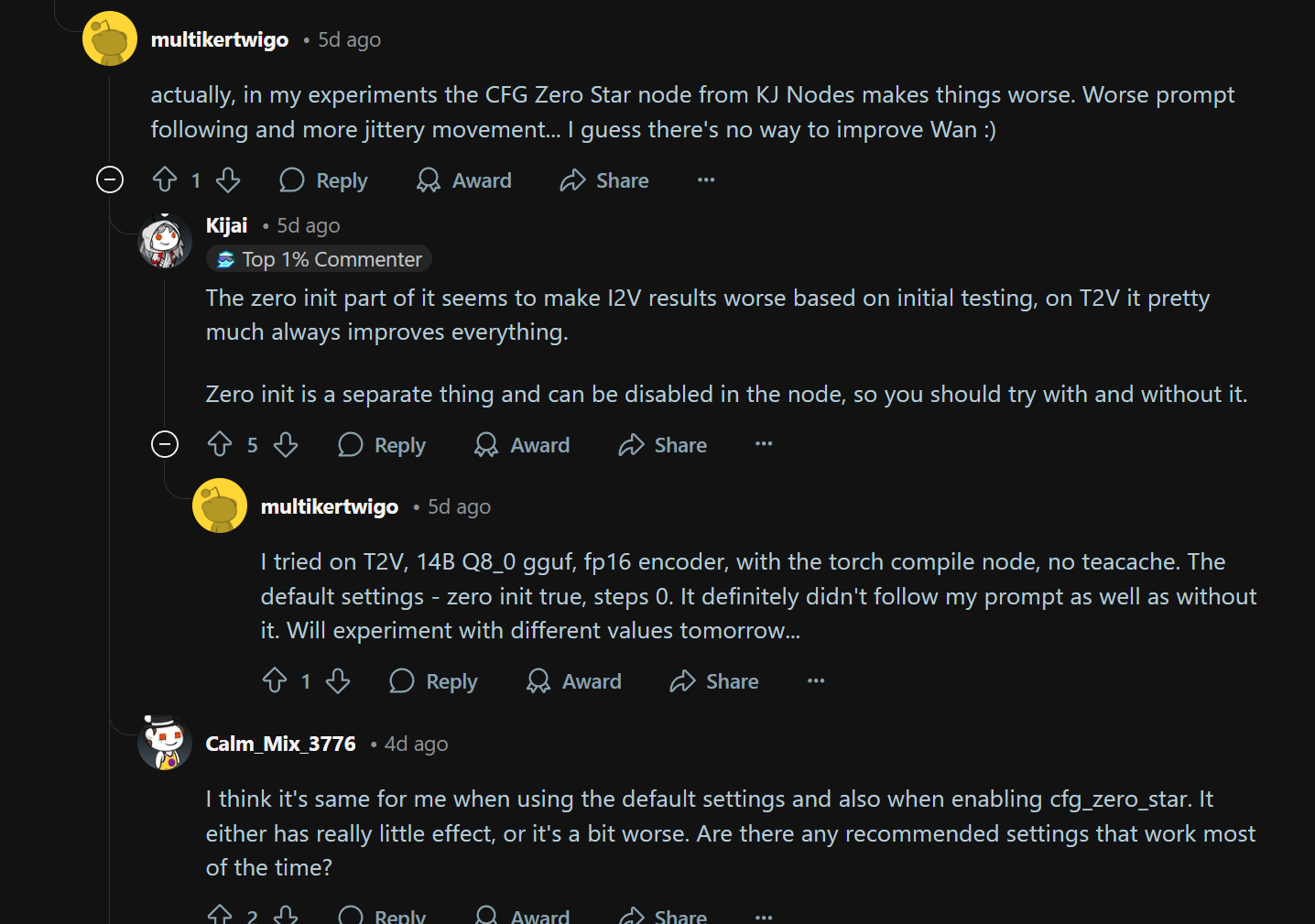

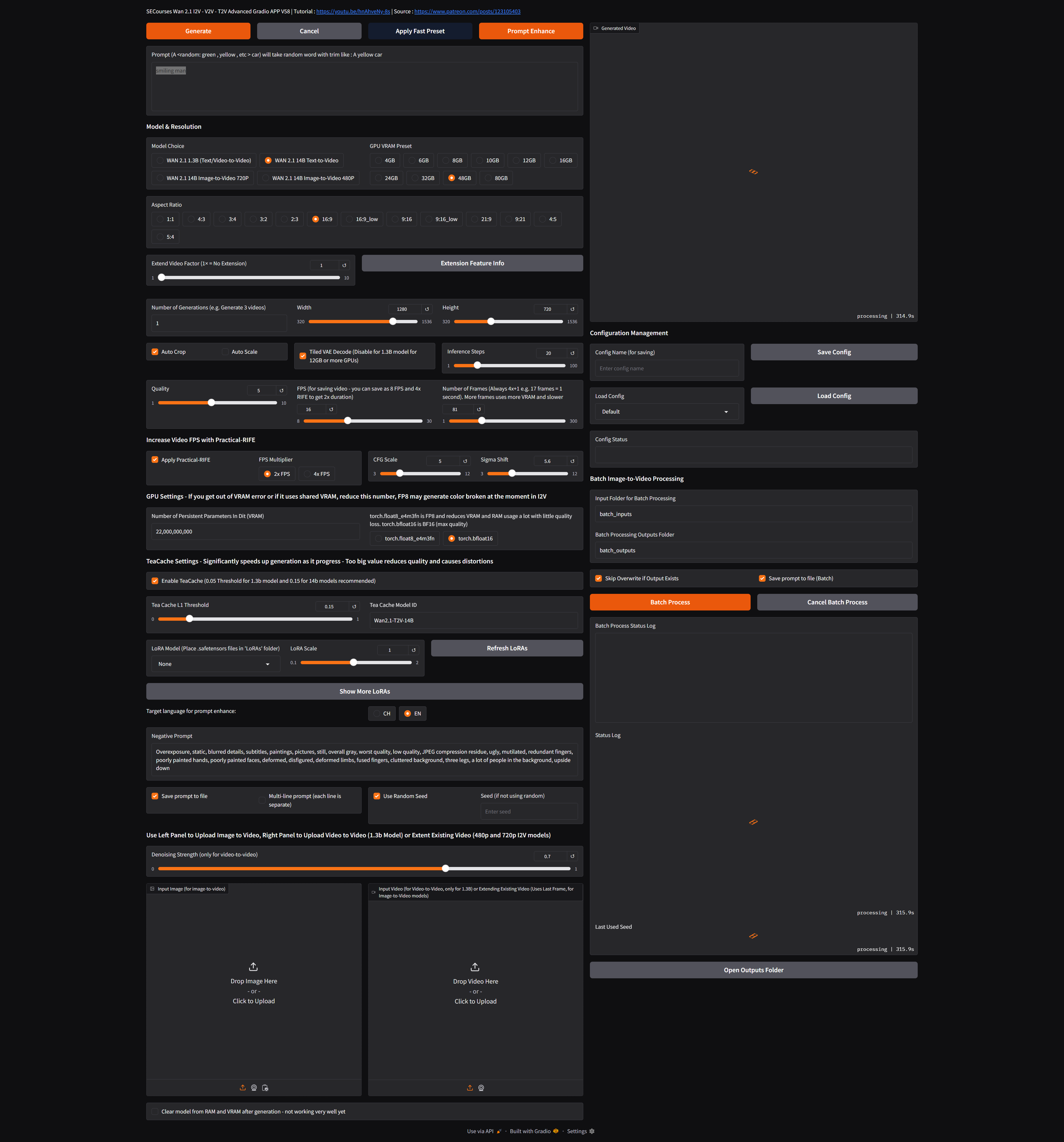

I was using Wan2.1_v38 without any problems. Unfortunately, as soon as I updated to v58 and then v59, Wan2.1 stopped working correctly. I use your .bat installation file as a template, but I install everything manually in a conda virtual environment. Installation completes without issues, but after Gradio starts, the 14B text-to-video model no longer renders correctly when Tea cache is enabled. I am always using the same downloaded models, so the models are not the cause. Other models seem to render correctly with Tea cache enabled, but the quality is no longer as good as it was in versions ≤v38, even with 80 steps and high-quality settings.

After switching back to v38, everything was fine again: high-quality outputs with all models and Tea cache enabled. I think the issue might be caused by Sage Attention or its implementation. In v38 and earlier versions, I couldn’t see any significant quality difference between Tea cache enabled and disabled, apart from a huge performance uplift. With the newest versions, Sage Attention provides a much better performance boost, but in my case, when comparing the same renders against previous versions (≤v38), I can confirm a significant quality difference.