Hopefully I'll make it, but I still haven't had the time yet 😦

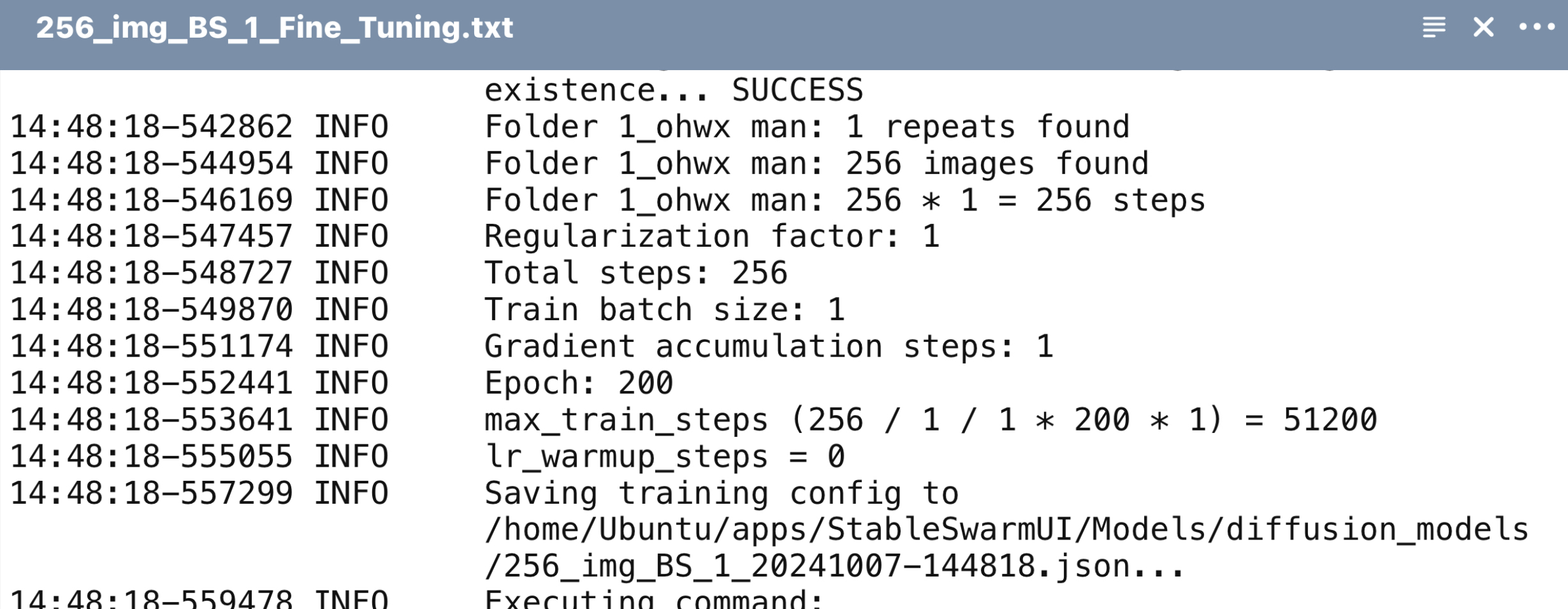

What epoch was your best checkpoint for the batch size 7 config? I have 252 imgs, so I've been training for 111 epochs to reach ~4K steps, but never went higher than that.
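
For anyone checking the math on that, here is a rough sketch of how the step count works out. It assumes one repeat per image and no gradient accumulation (neither is stated above), and `total_steps` is just a hypothetical helper name, not something from any trainer.

```python
import math


def total_steps(num_images: int, batch_size: int, epochs: int, repeats: int = 1) -> int:
    """Estimate optimizer steps, assuming no gradient accumulation.

    `repeats` is an assumed per-image repeat count; it defaults to 1.
    """
    # Steps in one epoch: images (times repeats) divided into batches, rounded up.
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


# 252 images at batch size 7 gives 36 steps per epoch.
print(total_steps(252, 7, 111))  # 3996, i.e. roughly 4K steps
```

If your trainer uses repeats or gradient accumulation, the numbers will shift, so treat this as a sanity check only.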

It's really odd.

Thanks! Well worth the Patreon sub!