Crawl job never stopping

I am trying out the Firecrawl SDK for the first time, since the playground worked very well, but I'm getting confusing results.

1. This code just hangs and never prints the crawl_results:

2.

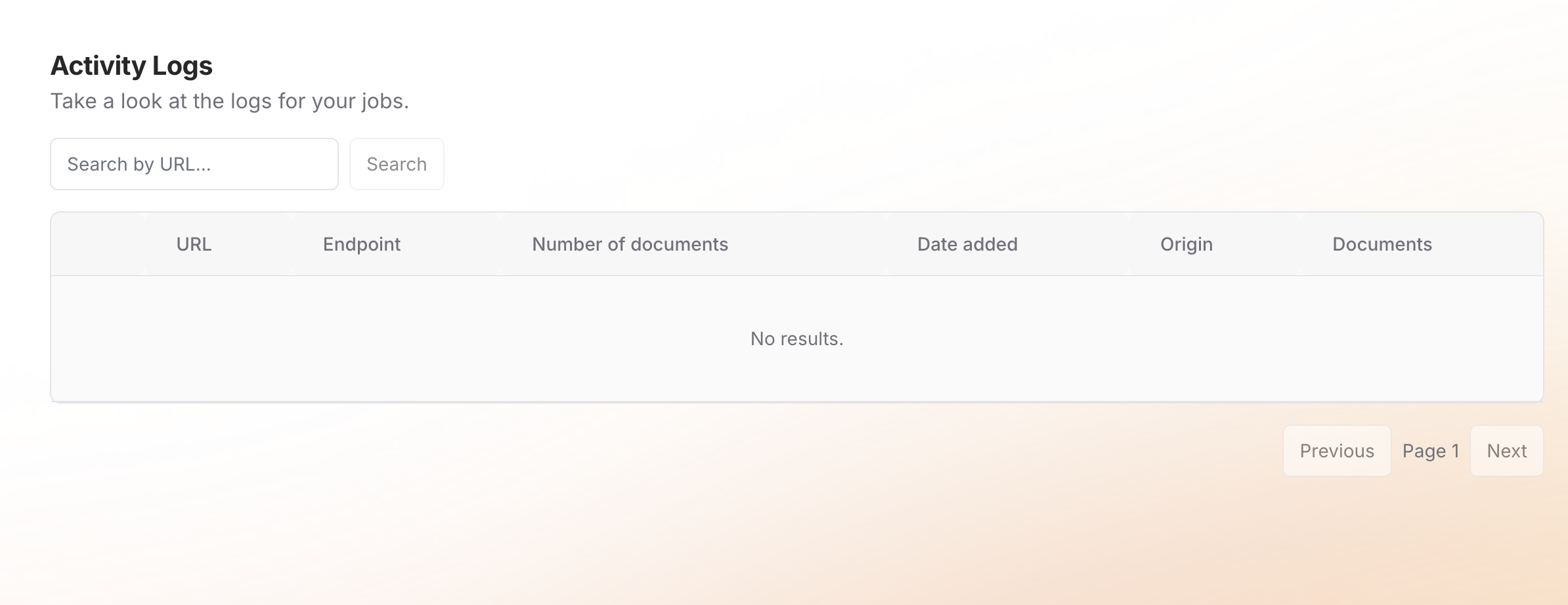

I can see my credits are being drained, so it is doing something. I want to cancel the job, as it was just a test, but I don't have the job ID and I don't see a way to cancel anything in the dashboard. I don't see any results coming through either!
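To make clear what I am after, here is a rough sketch of the behaviour I would like: start the crawl, print the job ID right away, poll for status, and cancel once a time limit passes. The three callables `start`, `status`, and `cancel` are hypothetical placeholders, not Firecrawl SDK functions; I am assuming the SDK or REST API offers some non-blocking start, status check, and cancel call that could be plugged in. The status strings are also an assumption.

```python
import time
from typing import Callable


def crawl_with_timeout(
    start: Callable[[], str],          # hypothetical: starts a crawl, returns a job id
    status: Callable[[str], dict],     # hypothetical: returns a dict with a "status" key
    cancel: Callable[[str], None],     # hypothetical: cancels the job with that id
    timeout_s: float = 60.0,
    poll_s: float = 2.0,
) -> dict:
    """Start a crawl, poll it, and cancel it if it runs past timeout_s."""
    job_id = start()
    # Print the id immediately so the job can still be cancelled by hand
    # if this script is interrupted.
    print(f"crawl job id: {job_id}")

    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        result = status(job_id)
        # Assumed terminal states; adjust to whatever the service reports.
        if result.get("status") in ("completed", "failed", "cancelled"):
            return result
        time.sleep(poll_s)

    # Time limit reached: stop the job so it does not keep using credits.
    cancel(job_id)
    return {"status": "cancelled", "id": job_id}
```

With something like this, a test crawl would stop by itself after `timeout_s` seconds instead of blocking forever, and the printed job ID would still be available for a manual cancel.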
