
I am not sure what has happened with the Workflows product recently, but since yesterday it just doesn't work for me. I've had to stop everything and am now looking at alternative solutions.

I can't trigger / pause / resume / terminate any workflows; I'm met with the error code 10001, as earlier.

Over 3000 workflows failed yesterday with the exact same error: "This instance experienced an internal error which prevented it from running. If this is persistent, please contact support: https://cfl.re/3WgEyrH"

Any new workflow I try to start this morning goes straight into a queued state and never runs.

I have 4K+ messages going into dead-letter-queues because workflows can't be instantiated.

@James / @Diogo Ferreira any help would be appreciated, as this product was working perfectly up until yesterday morning.

Untouchable instances, some are 2 days+ old

Even the cf dashboard cannot fetch information about workflows:

Waited some time and tried to trigger a few hundred instances; again they are either inactive or stuck running. I am a bit shocked that this product has been broken for over 24hrs now.

@Thomas Ankcorn I am not sure if you could help with any of the above?

Thanks Ollie, flagging with the team

Hi Ollie, sorry for the issues you are seeing. Since yesterday, we have started monitoring your account, and we have initiated a cleanup process. This was the cause of the 3000 failed workflow instances, because during the cleanup process the system purges irrecoverable instances.

Regarding the new instances created this morning, they should have run normally, which doesn't seem to be the case.

We are looking into it as we speak.

Ah amazing, and thanks a lot. Sorry to sound like I'm complaining; I do love the Cloudflare products and everything has been working so smoothly. I've run almost 1 million workflow instances, so real credit to the platform for being able to handle all that.

Let me also point out, that the issue is limited to your account.

Good to know

Not that it is of any help to you, I know, but just for the sake of clarification

Deffo good to know, otherwise one can start to wonder if there is a widespread issue, and then just end up constantly spamming the status page

Also, some new instances that I've been triggering seem to be at least running. Not sure if all of them are, but some of them are; there just seems to be a backlog of stuck "queued" ones

Ollie, does this issue still persist for you?

Not always. Say I try to load the page, maybe 2/5 attempts fail to load, but this happens mainly on workflow-heavy pages, e.g. one of the workflows that has run 800,000+ times

So I wonder if it's calculating the stats on each page load or not?

Yes, that can affect it

@Ollie I can see that for the last hour, your Workflows are being completed. Yes, there are some instances still in running state (we are looking into those), but newer instances are running correctly.

Can you please confirm?

Yep this looks to be correct

I can see from my external logging that things are running, but now I can no longer see anything via the cf dashboard:

That's strange, I can query your account with no issues

Yeah really weird, still not able to see anything on it, tried on a different PC as well, nothing

Same on my mobile, just checked

All with the same `internal_server`?

Weirdly no, from the network tab I can see that the request https://dash.cloudflare.com/api/v4/accounts/0601cdee659d3394e0d37db62e73705c/workflows?per_page=25&page=1&name=undefined is successful

Hummm

Yeah really weird, and also sometimes it never even makes that network request when reloading

Yeah all of the other pages work fine except for any pages under workflows

On reloads I seem to get a flash of the page structure loading, then it vanishes

Can you check now, please?

I'm not sure what magic you did, but that works

So I can see it's cleared up a lot now; I only have around 65 instances stuck in a running state

Yep, I can also see that. We are trying to understand what is causing that

thanks a lot for the help so far btw

I believe you are facing a race condition here

im putting the workflows through their paces then 😛

Hey, I'm facing the same issue. I think it's related to the Cloudflare dashboard—maybe it was recently updated to a new version, and older versions cached in users' browsers aren't able to fetch the latest version correctly. It could also be a conflict between the old and new versions of the dashboard. Everything was working fine until yesterday, so it's possible that some changes made by the Cloudflare frontend team in the last 24 hours triggered this issue.

That said, if you open the dashboard in an incognito tab, everything works fine—probably because incognito loads the latest version without using the cached files.

this is not related to only workflows but may be related to the frontend of cloudflare

Yes, we had a regression with a Front-end release, which was already reverted. Clearing your cache will fix it.

Yes, after doing a force reload (Ctrl + Shift + R) it was fixed

@Ollie you have stopped creating/running new instances, correct?

Yeah, I didn't want to interfere with your debugging so I just stopped them all

You can continue

Ah perfect will do

All new instances of workflows seem to be running pretty smoothly, though I'm keeping numbers low. Should I start to ramp up to see if the race condition gets hit again?

Oh dear @Diogo Ferreira we are back to the same issue after having ramped up:

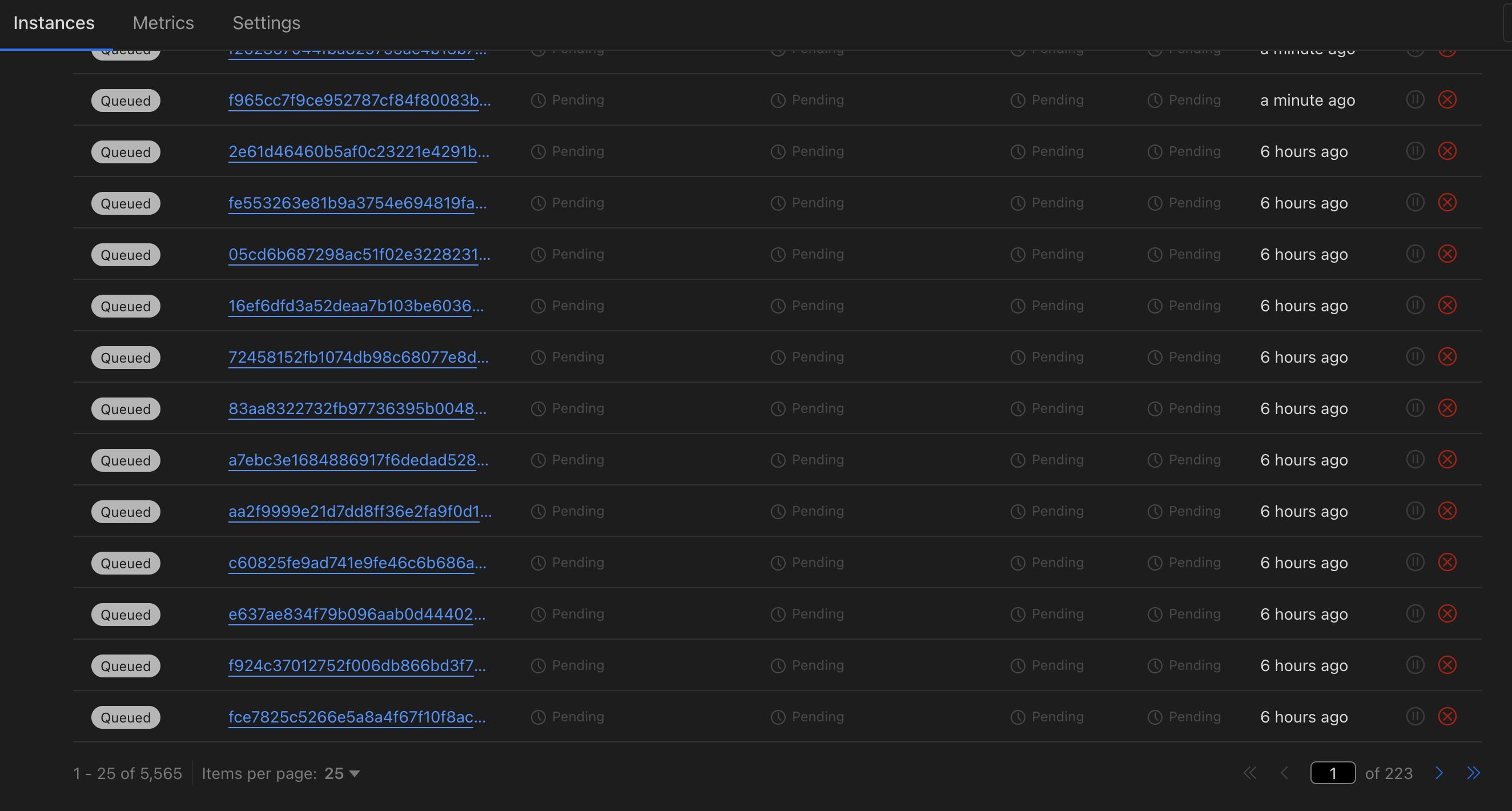

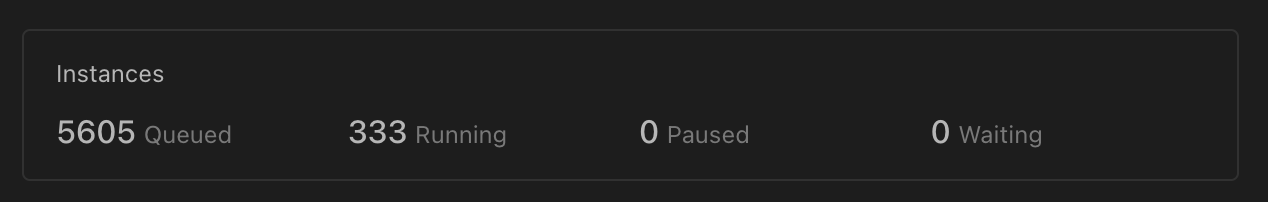

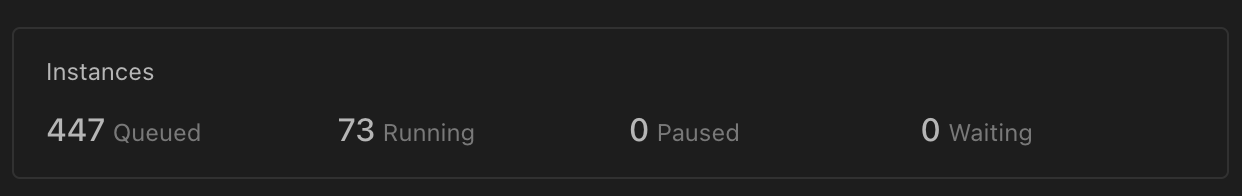

{ code: 10001, message: "workflows.api.error.internal_server" }

Instances are starting to pile up and get stuck:

CF Dashboards not loading anymore for me either:

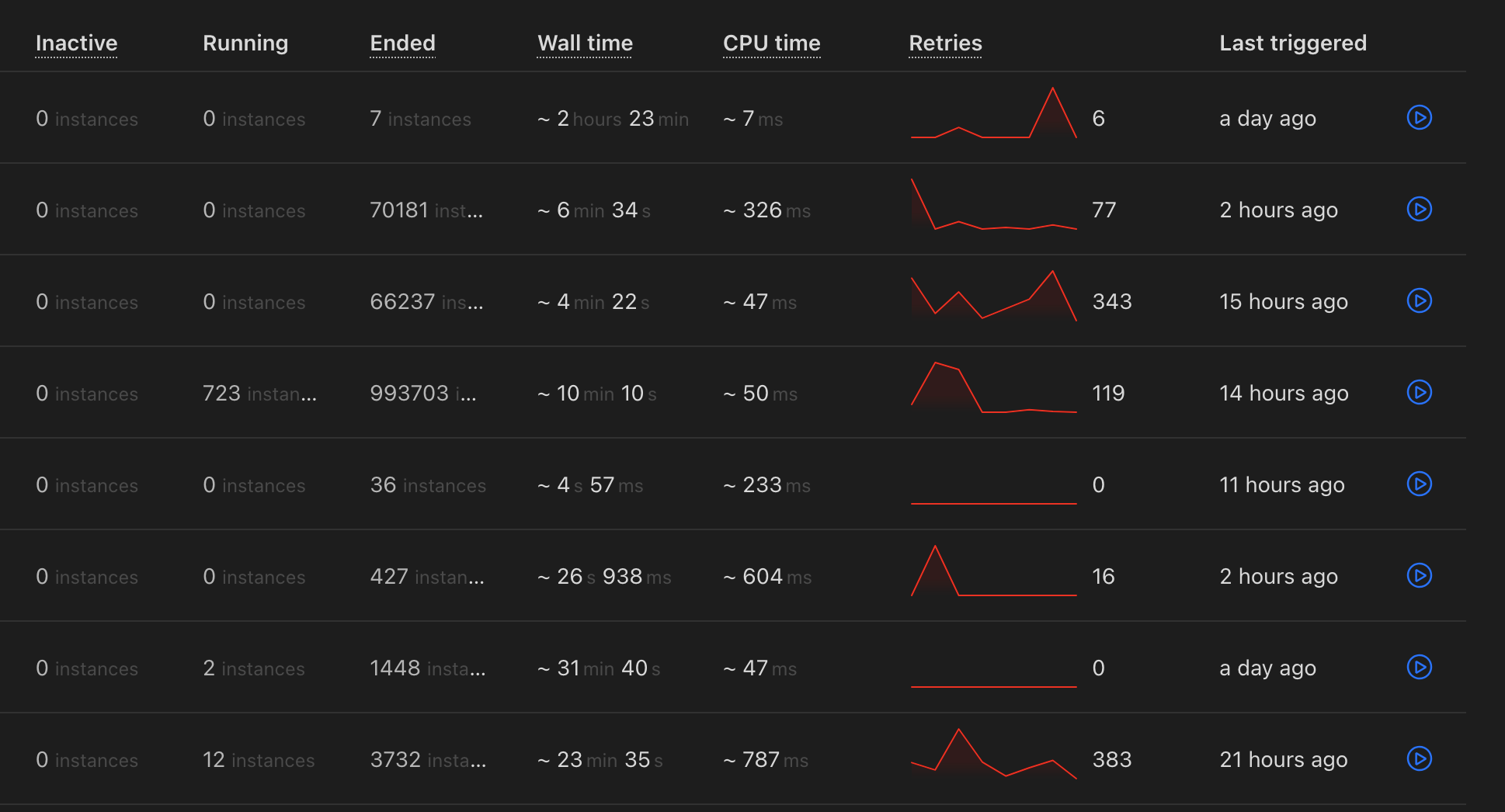

@Austin B, @Thomas Ankcorn and @Diogo Ferreira the latest update from me is that the dashboard now loads, but I've stopped running new workflows, as I now have a lot that are "stuck". It feels like some recent release this week has introduced some issues with workflows:

I'm still getting this issue as well

I am not sure if @Thomas Ankcorn will be able to rally some more help to look at this, as it now seems to be affecting quite a few peeps

I've signed up to the pro plan to submit a case for this, caseID: 01505384

I just deployed with no errors for the first time

(In a few days)

lol, all of my workflows are still in a "stuck" state. I don't know if there are any CF employees who can help on this; the whole workflow platform is having issues, and I don't really want to have to migrate away, but it's getting close

@Matt Silverlock or anyone who is active?

@Vero // @Hello, I’m Allie! as well


There are around 3 other users in this thread that it’s also affecting

All, or some? @Diogo Ferreira (who is out today) said it was a subset — something we're fixing and taking seriously — but trying to understand if there's something bigger here / larger in impact

So this is my current state. I would say it's affecting 90% of all new workflows triggered; from monitoring, say 1 in 10 actually run now:

I stopped triggering new ones a few hrs ago, but have kicked off some jobs that should start to ramp up triggering workflows

What rate are you creating them at?

Around 2 mins between each one, but a workflow can sometimes take say 3 mins to finish, so sometimes there would be a slight backlog.

In short, I'm using queues to send messages with delay seconds, which the workflows then pick up

I've been running like this perfectly for the past few weeks; from the screenshot you can see I've run almost 1 million instances

Starting to see these now:

{ code: 10001, message: "workflows.api.error.internal_server" }

With a low amount running it's starting to climb:

?pings please

Please do not ping community members for non-moderation reasons. Doing so will not solve your issue faster and will make people less likely to want to help you.

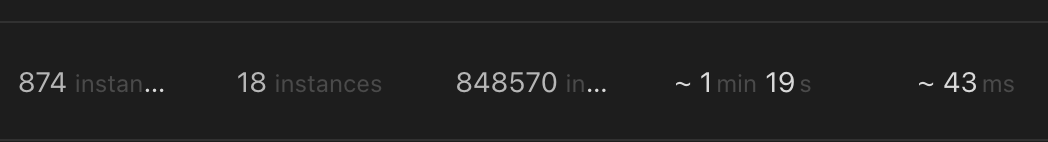

I know there was a status for workflows on the cf status page, but I don't think it's fully resolved; I've got over 5K workflows since around 10hrs ago.

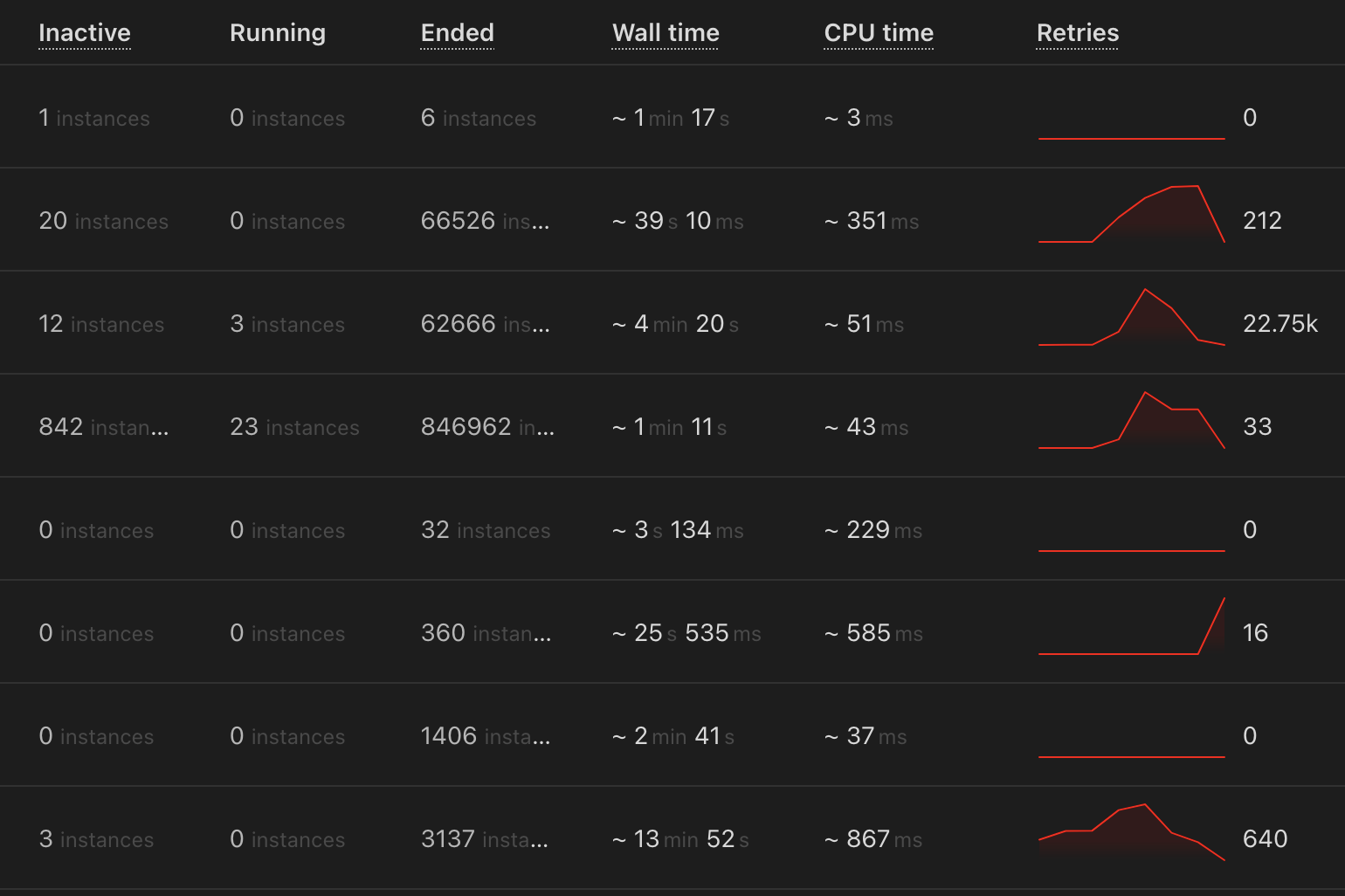

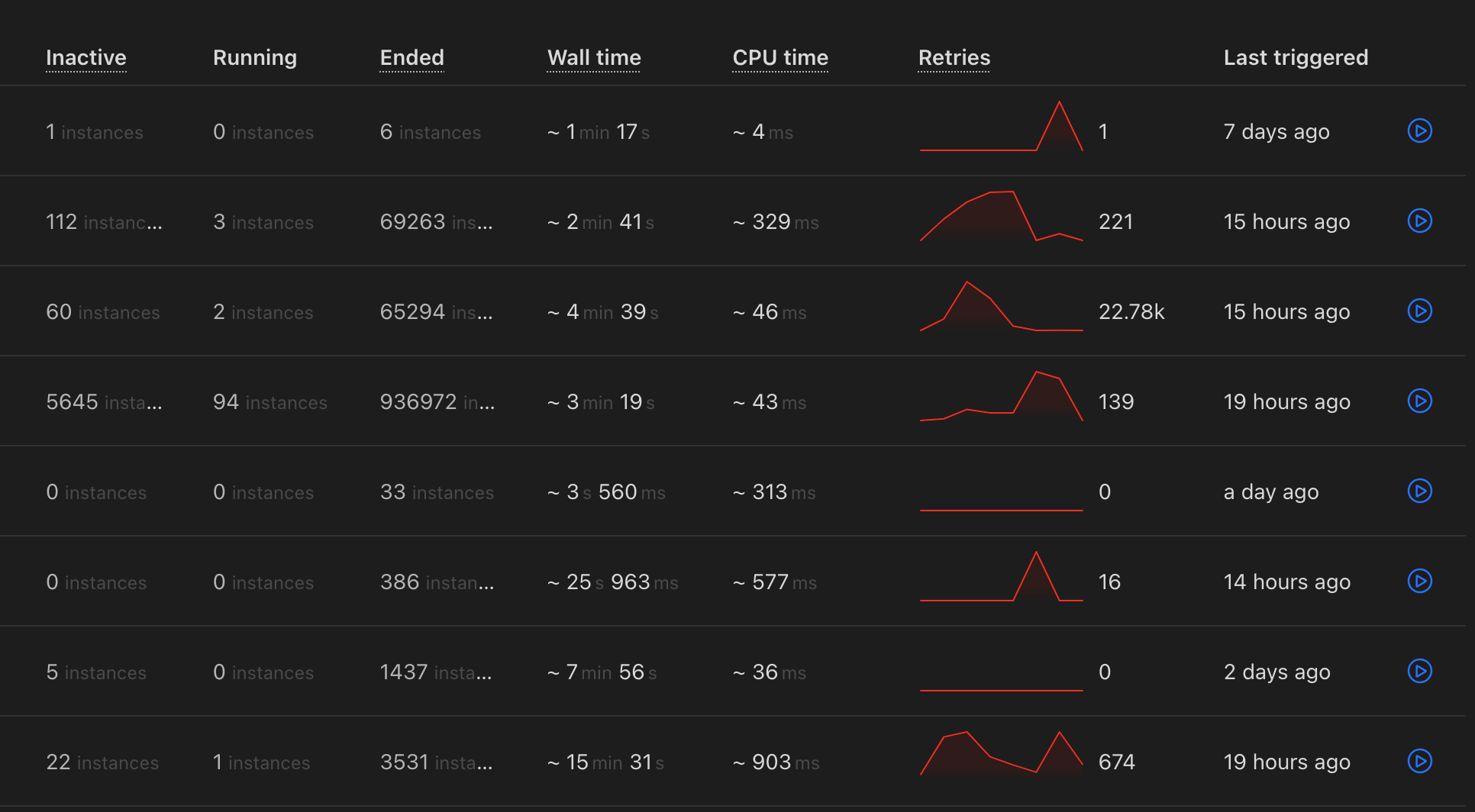

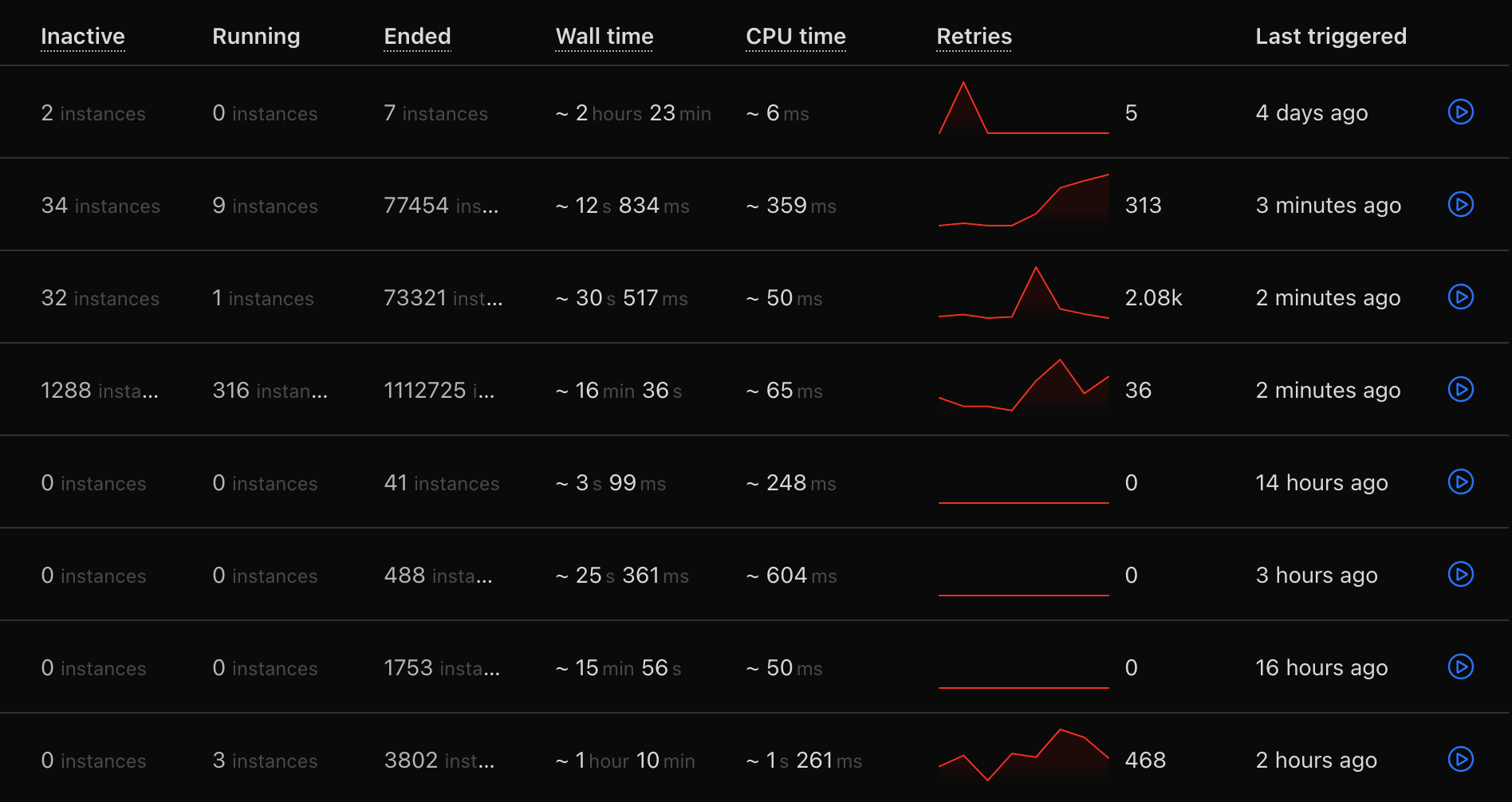

Just an update for any CF employees that are looking at workflows: thanks a lot for your help so far, but I am still facing issues. My company has for now turned off all workflows since yesterday; this is our latest state.

Any help would be appreciated to get us back to a state pre-last week where everything worked perfectly.

We are taking a look at it Ollie. Sorry for the inconvenience this causes you.

For what it's worth, I also still have a couple thousand invocations that have been stuck in queued since last week

@Diogo Ferreira so far everything seems to be working perfectly! I will ramp up slightly in workflow instances now just to push some things

Hey @Diogo Ferreira I'm still facing this issue myself, I have workflows that are "Queued" from 5 days ago and I can't get any new ones going

My account ID is 824c12820335788e8daf77dba7e7891e if that is helpful

For some reason I can't open a case even though I'm on a Pro account as well

@Ollie what ended up being your issue that got resolved? Was it anything to do with the amount of instances you were creating?

No, nothing at all really. Diogo said a fix was pushed, and all my instances started running and have not been getting stuck since

Fair enough. I currently have like 80k queued and building 😅

How are you queuing (using queues to trigger, for example)? And are you calling `createBatch` or creating the workflows individually?

It's not been done by me but by another dev, but I believe it's a Worker Request -> Workflow 1 -> Workflow 2, and no `createBatch`, as the worker request is a webhook I believe

Gotcha, I wonder how the worker request triggers workflow 1?

I basically use a lot of queues, so workflow A -> queue A -> workflow B, etc

And then a few endpoints on a worker that are hit to send messages into queues. I never trigger workflows from anything but queues

or crons
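A minimal TypeScript sketch of the queue -> workflow pattern described above, assuming a queue consumer that creates one workflow instance per message. The `QueueMessage` and `WorkflowBinding` shapes are declared locally and only mirror the parts of the Workers runtime this sketch relies on (`ack`, `retry` with `delaySeconds`, `attempts`, and `create`). `JobParams`, `handleBatch`, `backoffSeconds` and the 10 s / 300 s values are hypothetical choices for illustration, not anything confirmed in this thread.

```typescript
// Local stand-ins for the runtime types, so the sketch type-checks on its own.
interface QueueMessage<T> {
  body: T;
  attempts: number; // delivery attempt counter, assumed to start at 1
  ack(): void;
  retry(options?: { delaySeconds?: number }): void;
}

interface WorkflowBinding<P> {
  create(options: { params?: P }): Promise<unknown>;
}

// Hypothetical payload carried by each queue message.
type JobParams = { orderId: string };

// Exponential backoff in seconds: 10, 20, 40, ... capped at 300.
function backoffSeconds(attempts: number, baseSeconds = 10, capSeconds = 300): number {
  const exponent = Math.max(attempts, 1) - 1;
  return Math.min(baseSeconds * 2 ** exponent, capSeconds);
}

// Create one workflow instance per message. Acknowledge only after the
// create call succeeds; otherwise ask the queue to redeliver later.
async function handleBatch(
  messages: QueueMessage<JobParams>[],
  workflow: WorkflowBinding<JobParams>,
): Promise<void> {
  for (const msg of messages) {
    try {
      await workflow.create({ params: msg.body });
      msg.ack();
    } catch {
      // e.g. the create call failed with an internal_server error
      msg.retry({ delaySeconds: backoffSeconds(msg.attempts) });
    }
  }
}
```

Retrying with a growing delay is one way to keep messages out of the dead-letter queue during short bursts of `internal_server` errors; whether it helps during a longer outage depends on the retry limit configured for the queue.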

Yea, that's what I think we need to do here to help solve some of the issue. I suspect how it's being done is what's led to our workflows effectively stopping

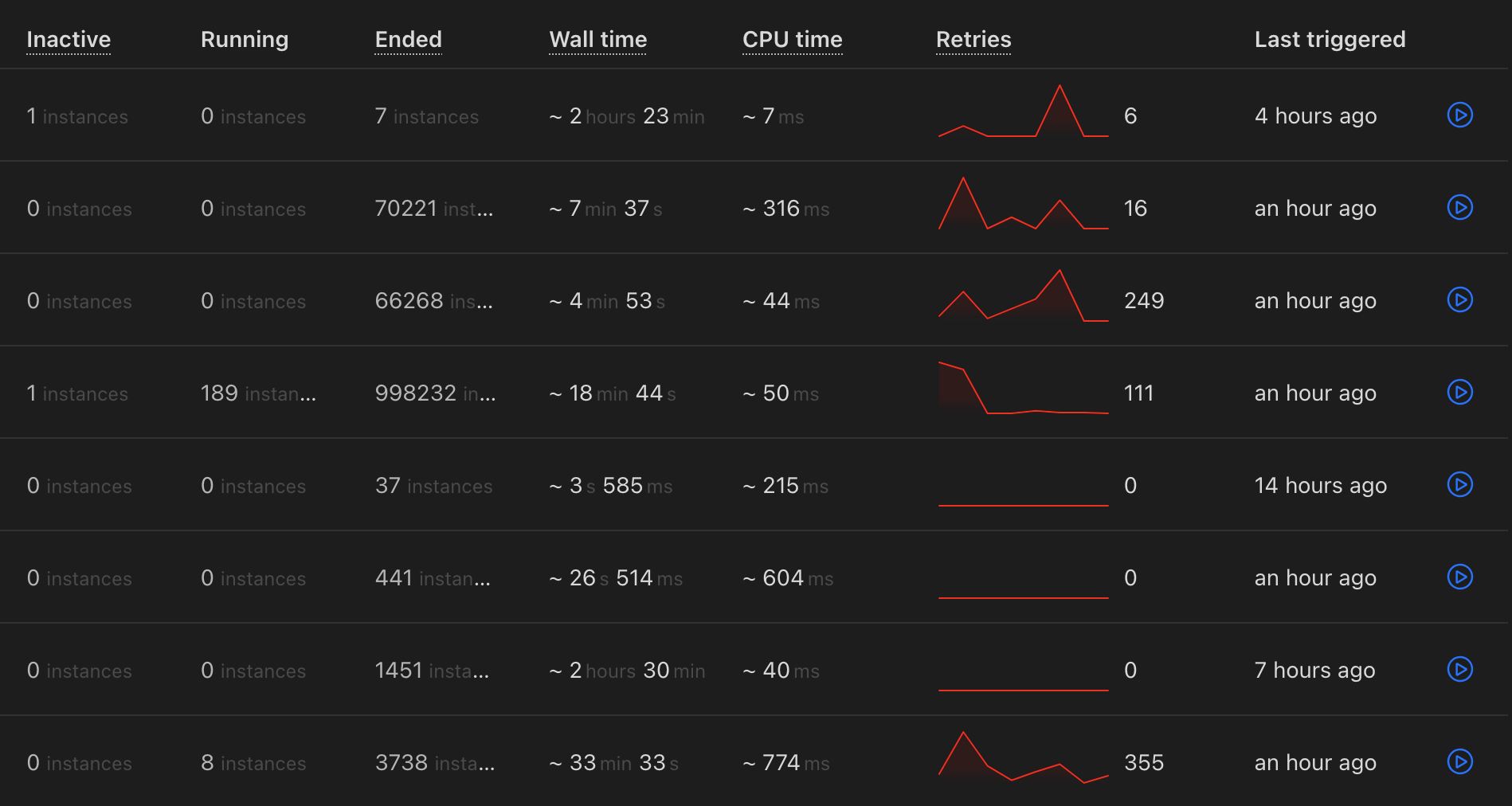

@Diogo Ferreira l don't want to sound the alarm too early, but a few workflows seem to be piling up:

Dan, as per my due diligence, your account is reaching the allowed entitlement limits, 4500 concurrent instances.

I am monitoring your account Ollie. Accumulation of Queued instances is normal, especially when Workflow instances are created via batch create. Nevertheless, I expect it to reach a steady state.

Yea, as we have a bunch stuck in "running". They've been stuck for ~5-7 days

I appreciate you looking Diogo 🙏

Yeah, I suspected so. Didn't want to flag anything too early etc, so thanks for looking

Allow me some time to assess them

@Diogo Ferreira just want to flag, I have some (not that many) that seem to be stuck in a running state from around 13hrs ago.

Nothing is generally getting stuck in "inactive" anymore; it's more that instances make it into running and then some get stuck

Also, I noticed anything that is stuck is within the range of 9hrs - 13hrs ago; since then it seems to be fine

Exact same as me but 5-6 days ago 😅 and have almost ~4k stuck in running

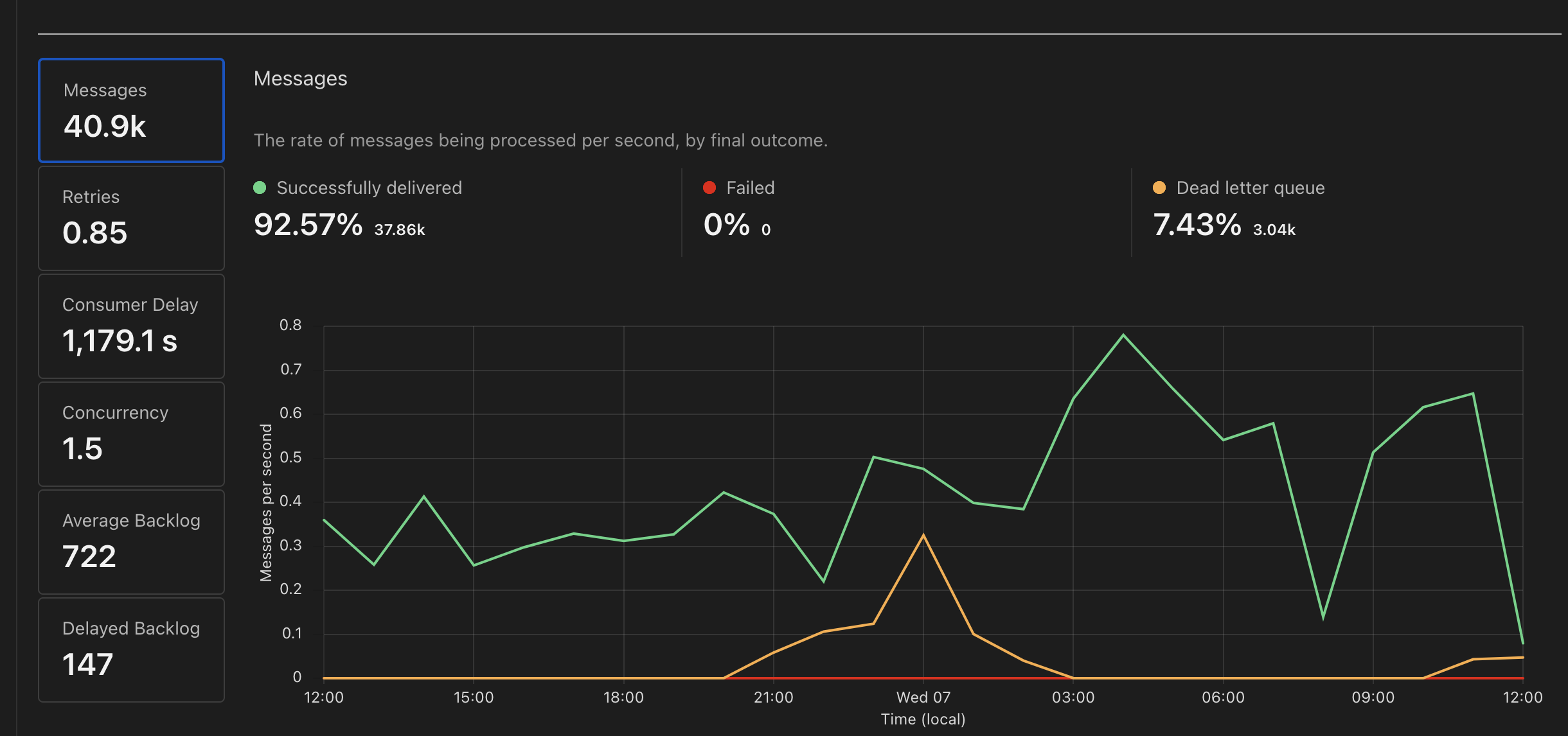

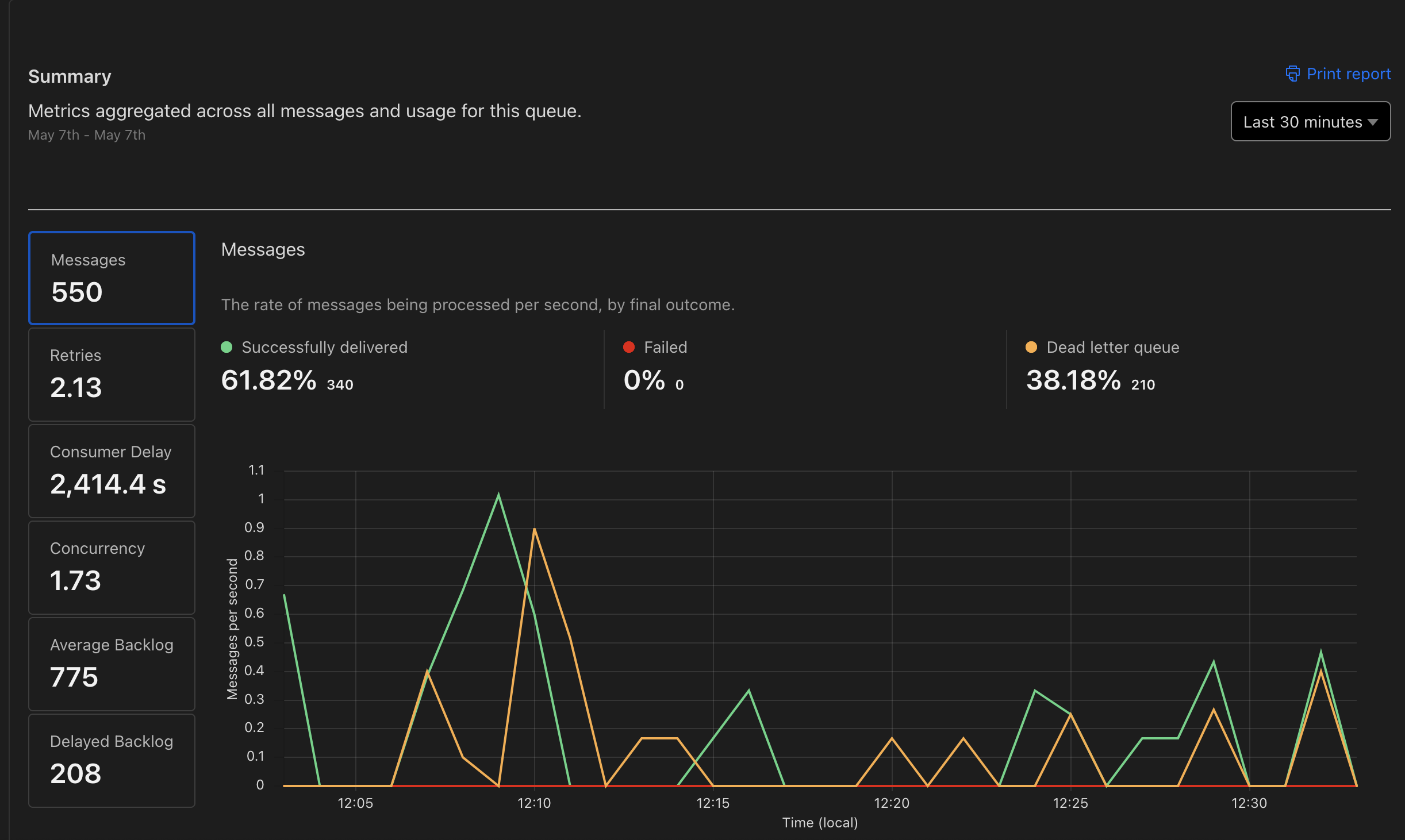

I think there are issues here and there. I can see from the queues that trigger my workflows that at certain points in time they fail to instantiate them:

within the last 1hr, the rate of dead-letter-queue messages has started to rise

A good example being the last 30mins, where there seems to be an issue with starting new workflows:

I think they fail because they can't create the new workflows; that's my guess for mine anyway

Yeah exactly, it's failing to create new ones 30%+ of the time right now

Also some new deployments seem to be failing with:

workflows.api.error.internal_server [code: 10001]

Yeah, I think there is now an active issue: I can't load the dashboard, I can't deploy any new worker with workflow updates, and I have a rising number of instances stuck in running or failing to even instantiate. We probs just need to wait for @Diogo Ferreira or someone to come online

Yea, that's what I was getting and have done for a week or so. I get that same error when checking the Network tab

@Ollie unfortunately I can see your account is reaching some limits. And the limits issue occurs at the same time you see the issues in the Queue metrics. Also, when it happens, the CF UI/API are also affected, which explains the `internal_server` errors. In a nutshell, your account is getting overloaded. When the overload reduces, or disappears, your account manages to move along and process instances.

@DanGamble your account is suffering from an identical issue as Ollie's. Even though the outcome is the same, the way your account is getting overloaded is a bit different.

Nevertheless, the team is actively working on ensuring that your use cases do not cause the observed overload

Perfect, thank you @Diogo Ferreira. I'm guessing the data in the current workflows wouldn't be lost with a resolution?

I appreciate this is really hard to answer but do you have any ETA? Just so I can pass on something loose to the merchant

Yeah, thanks for the info @Diogo Ferreira and really appreciate the help in all of this. In terms of overloads / limits, is there anything we can do to reduce this on our side, or is this purely a CF side of things?

Hey @Diogo Ferreira, my case number is 01518300. It's not been that helpful so far, so I'm going to reference it here just as an FYI

I can see what you mean by overloaded and then moving along: I had about a 4hr wait of nothing happening, and now they have started to process (well, at least 1 workflow has; the rest are still stuck)

@Diogo Ferreira I'm not sure if it's still due to overloading, but it seems to have stopped processing altogether now:

@DanGamble anything on yours today? I have not been able to start any workflows at all today so far

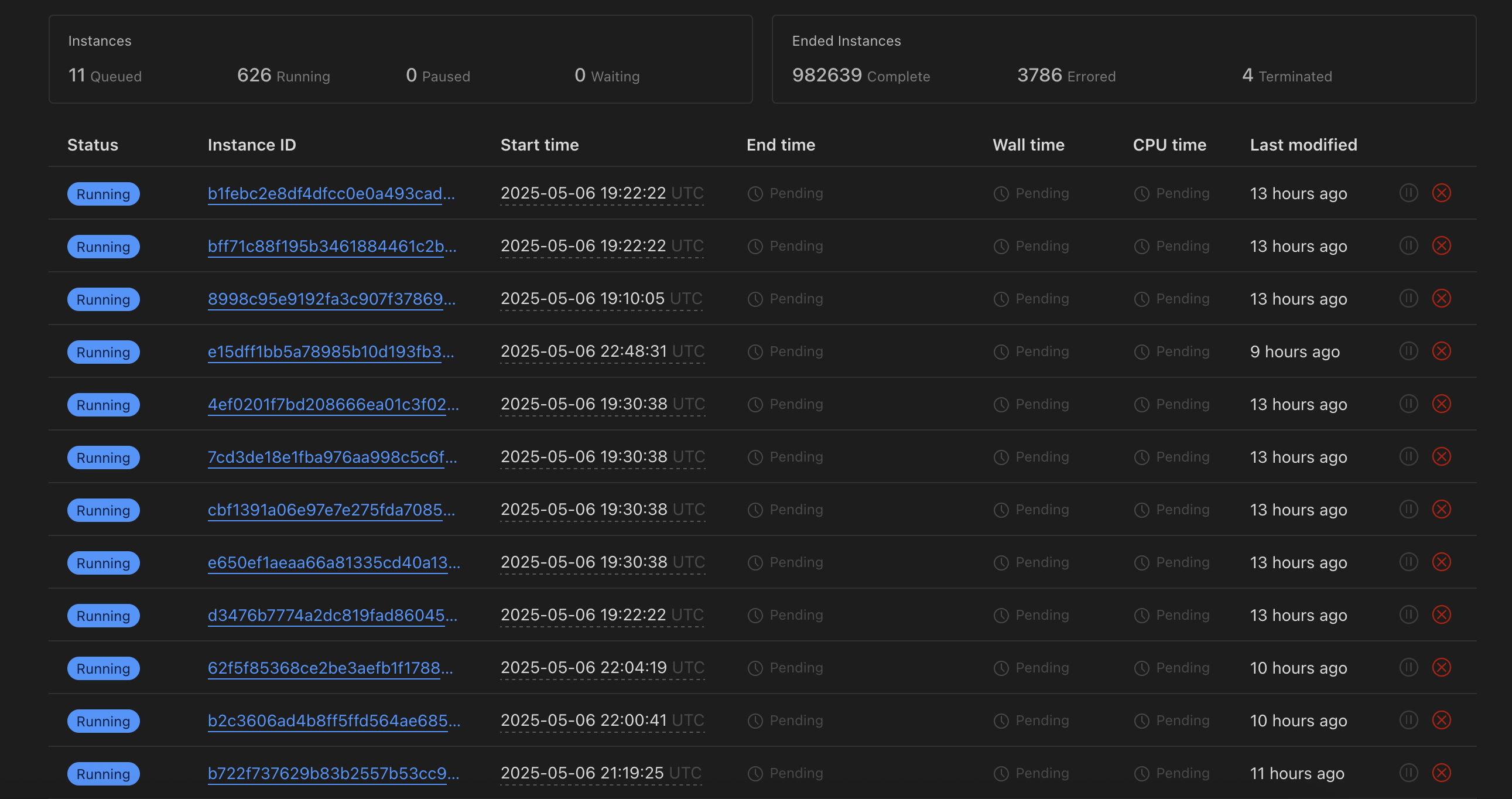

Hi @Ollie one of the "overload" side effects is precisely what you (and @DanGamble) are seeing: instances stay in the running state for a long period of time. Since you posted that screenshot, I can see the running instances decreased by 200 instances. This is exactly what we are trying to fix.

Ahh amazing, thanks @Diogo Ferreira

Just out of curiosity for maybe you and @Matt Silverlock does raising a support case aid this at all, or is it just more noise for you to deal with?

Just an update as of today: still a few hundred stuck in running, but otherwise I'm starting to kick off more runs and will slowly ramp up over the course of today

Mine's still just completely stuck, unfortunately

Yeah, I had a few stuck from 5 days ago that I just manually cancelled. So far things seem to be running now, but I'm increasing the amount ever-so-slowly to see if there is a point where it just gets stuck again.

Though yesterday I did have around ~100 workflows fail to start, and their messages went into a dead-letter-queue

Somehow we’re up to 32k running processes and they just keep getting stuck. I thought the limit was 4.5k

Yeah that's insane, I suspect it keeps trying to run new ones, and it hasn't registered the ones that are technically running

Wondering if I can try and use the API to find all the running ones, save the payload, stop them and then try again

But I do keep getting API errors when deploying, so I suspect I won’t be able to

Yeah, I thought the same with my dead letter queue messages, just to kill and reprocess, but half the time I try to deploy it fails, so I feel the same

Yea, get the same errors when trying to use the API

Sorry to chase @Diogo Ferreira but are there any more insights to this? We've been unable to use workflows for almost 2 weeks now

I feel like there is a live issue, struggling to instantiate new workflows, and the dashboard is failing to load now

@Diogo Ferreira FYI

Can't see a thing. I suspect it's the throttling on my account?:

From looking at my queues, since the dashboard is broken, everything seems to have stopped. Can't deploy any new worker code that has workflows attached to it

Responses via dashboard and wrangler CLI:

{ code: 10001, message: "workflows.api.error.internal_server" }

Dashboard finally loaded, looks like the same problem as before now, things getting stuck:

@DanGamble I assume yours are still stuck?

Si, still stuck for me

Hi guys. I am really sorry for the delay on this matter. In fact, we are still trying to understand what is causing this issue. It is quite similar to what Ollie described: "... it keeps trying to run new ones, and it hasn't registered the ones that are technically running". Given our reliance on Durable Objects to manage our whole system, it is making our life a bit harder.

As a recommendation/mitigation for the problem, try to create/trigger fewer than 40 instances per second.

Thanks for the update, will keep to that limit

Would you say the 40 is account wide or per workflow? Do you know what clogs up the API? Is it as the queued reduces or the running? As our running doesn’t seem to be getting lower

Account wide. The API clogs, because the DO used for the account management is overloaded with RPC calls from the Workflow instances and from the API.

Not that this is relevant for you, I know
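One way to stay under the 40-per-second guidance when instances are triggered from queue messages is to spread the messages out with per-message delays. The TypeScript sketch below is purely illustrative: `spreadDelays` and the budget of 30 per second are hypothetical, and it only controls when messages become deliverable, not how fast consumers actually create instances. Because the guidance is account wide, the budget would have to be shared across every producer on the account.

```typescript
// Hypothetical helper: give each message a whole-second delay so that no more
// than `maxPerSecond` messages become deliverable within the same second.
function spreadDelays(count: number, maxPerSecond: number): number[] {
  if (!Number.isInteger(maxPerSecond) || maxPerSecond < 1) {
    throw new RangeError("maxPerSecond must be a positive integer");
  }
  return Array.from({ length: count }, (_, i) => Math.floor(i / maxPerSecond));
}

// 30 per second is an arbitrary margin below the suggested 40.
const delays = spreadDelays(100, 30);

// Each entry is meant to be used as the delay (in seconds) for the message at
// the same index, assuming the producer can set a per-message delay on send.
console.log(delays.slice(28, 32)); // [ 0, 0, 1, 1 ]
```

With these numbers the 100 messages are spread over four seconds: 30, 30, 30 and 10.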

Yeah, I have slowed down how many I have been initiating per second, and things seem to be running a lot better now. I only have 99 that are stuck running, but that's from 10hrs ago.

I guess this is just while you are still investigating the issues, and then we should be able to ramp up volumes once it's all sorted?

Hopefully! 🙏

And once again, we really appreciate your consideration

No worries, and thanks a lot for all your help so far!

It looks like keeping well under 40 workflows per second is working from my side: no queued / stuck workflows today at all

Great to know @Ollie ! We have also released a new version that improves on the unstuck mechanism

Great to know!

Thanks for letting us know @Diogo Ferreira ❤️

An FYI from me for today, 0 stuck workflows all day, still keeping to the under 40 per sec limit, but have been running workflows pretty much non-stop all day

@Diogo Ferreira do you have any idea when we would be able to begin ramping up volumes of workflows?

Hi @Ollie you can ramp up your usage!

Perfect, will start to do so!

@Diogo Ferreira apologies for not doing this sooner, holidays got in the way.

I can confirm that everything seems to be fixed. I pushed the system as much as I could.

I queued up ~100,000 workflows within a few hrs. Got to around ~2,700 running at any time (bear in mind they are 2s workflows, so the number could be higher / lower).

And over the course of a few hrs the system would run through all workflows until the count reached 0.

I am now no longer seeing any issues with running workflows. (with volumes of around 2 million workflow runs every 30 days)

Thanks a lot to all of you and your team for your help in this matter!

Thank you for the feedback!

Hello guys,

I'm facing a similar problem where my workflow instances get queued but never start

This started on May 15th. At that time, I noticed that when we manually paused a queued wf instance, all instances above it were triggered,

so I published a worker that basically starts, pauses, and finishes a wf instance so my routines could be triggered

That worked until today (June 18th); this morning, none of my instances are being triggered, even with that start/pause “quick fix”

These are crucial routines for my company; I'm even thinking about trying to migrate them to another account or even another service

we're currently investing around 4.5k USD in Cloudflare,

could you please take a look?

@Diogo Ferreira