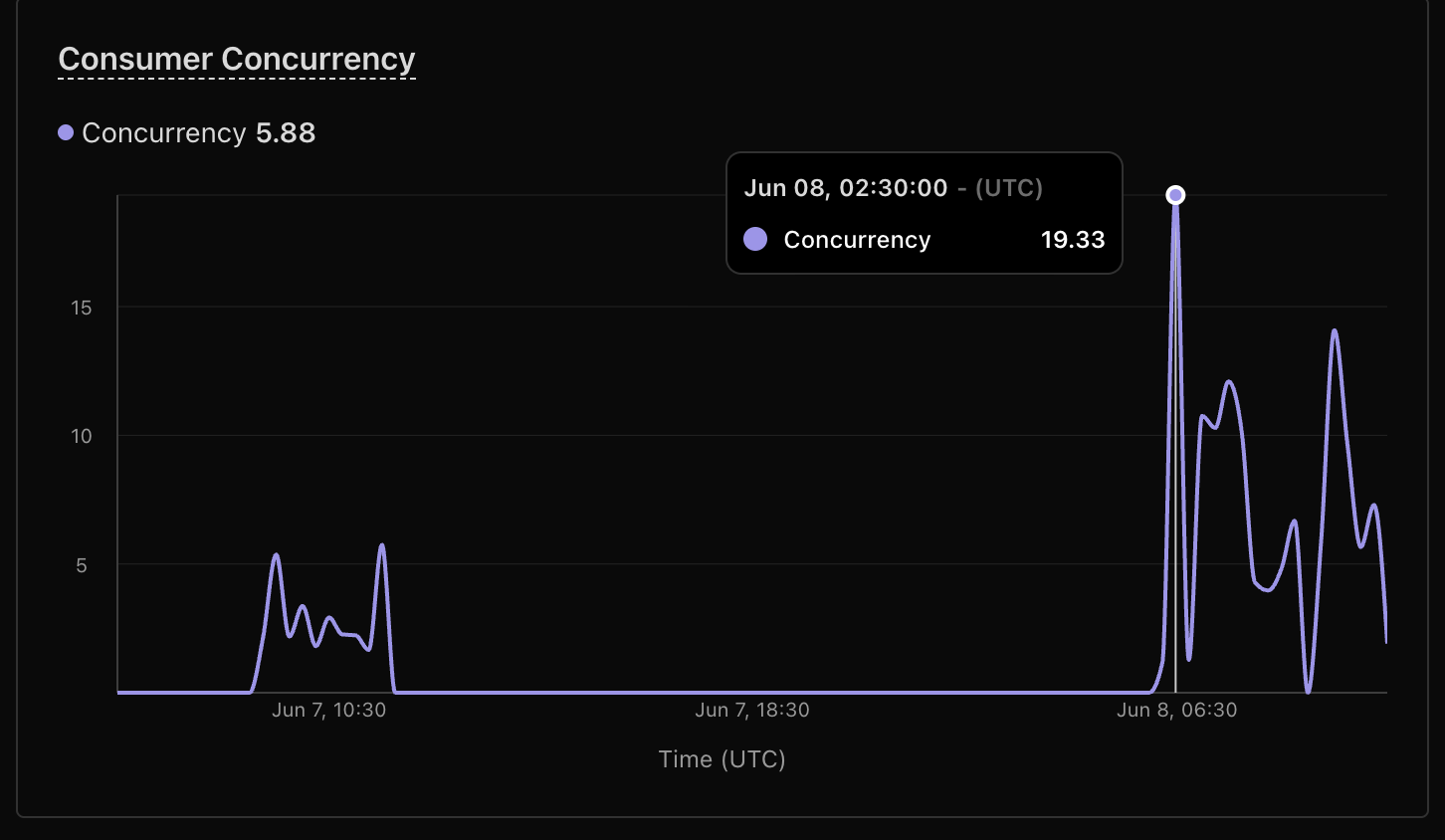

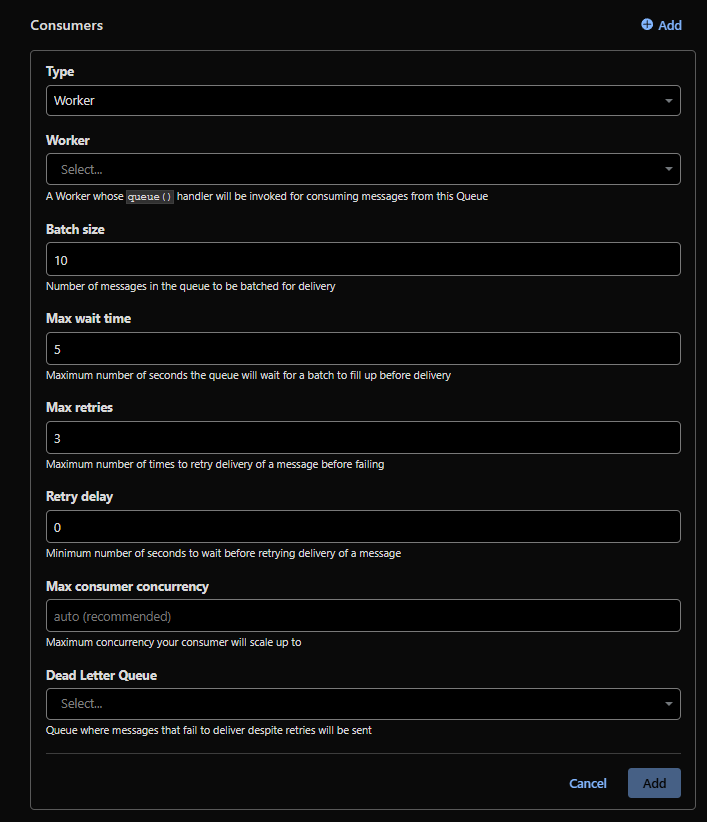

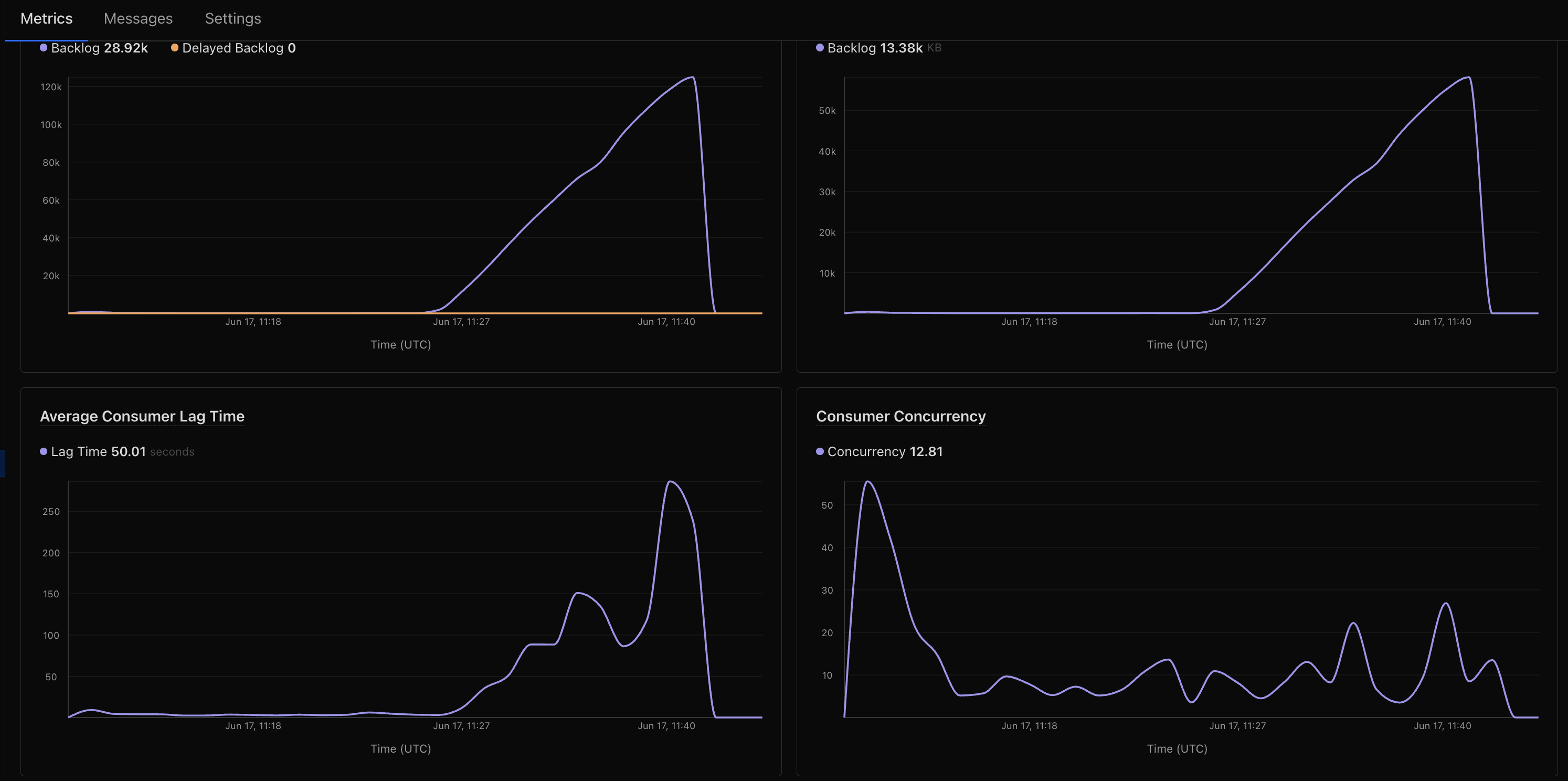

Hey team! I'm trying to edit the concurrency on some of my queues, but the option doesn't exist anymore:

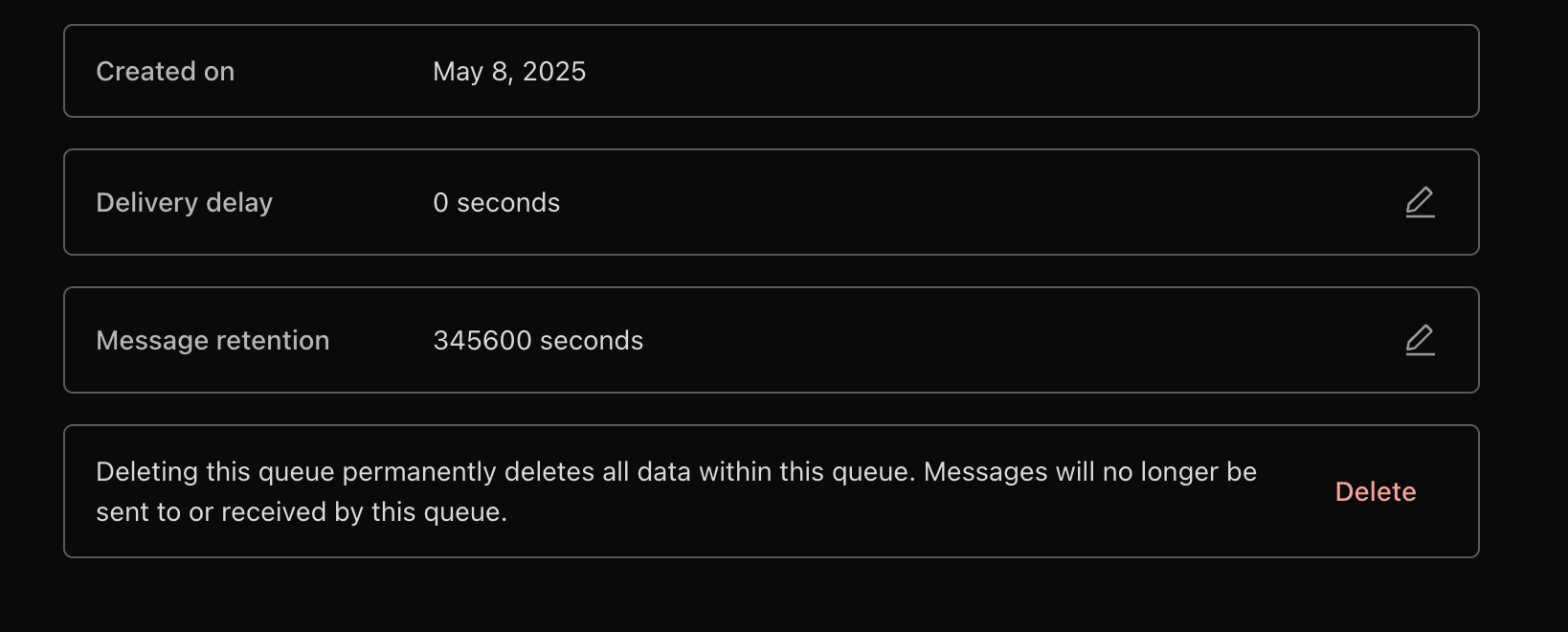

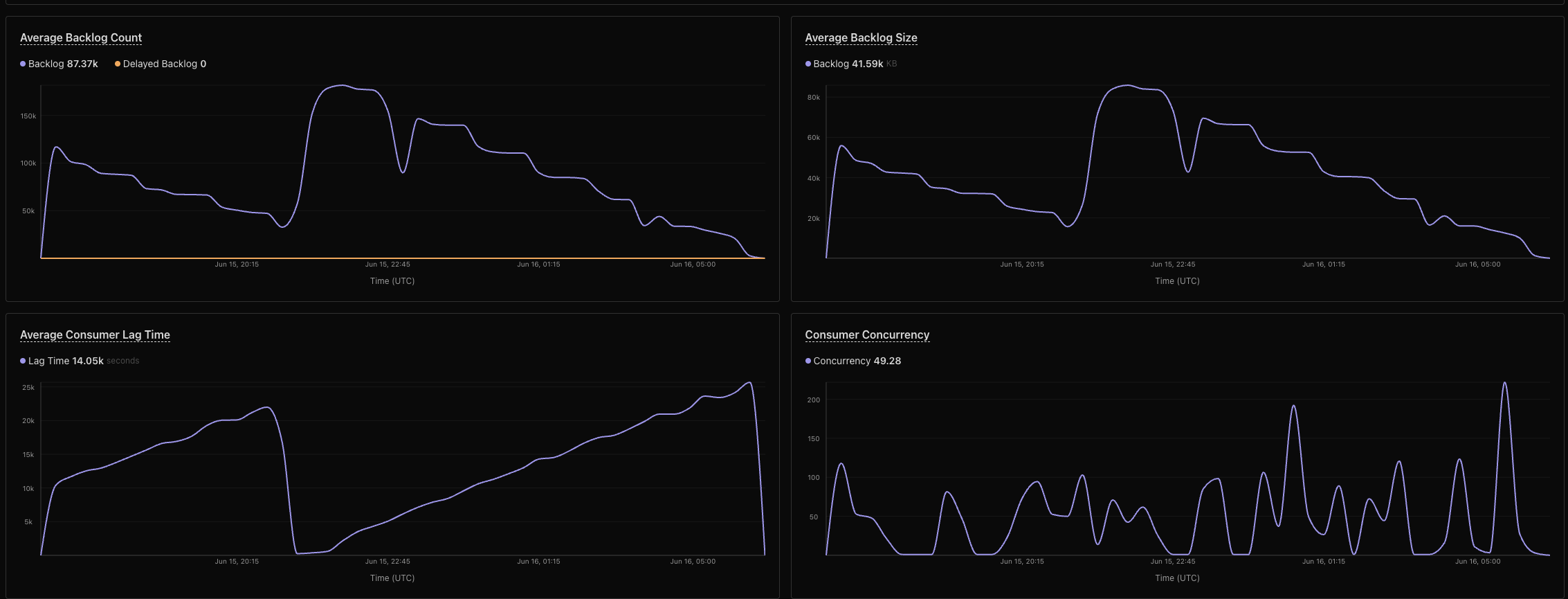

345600 seconds. I'll update the results in the thread

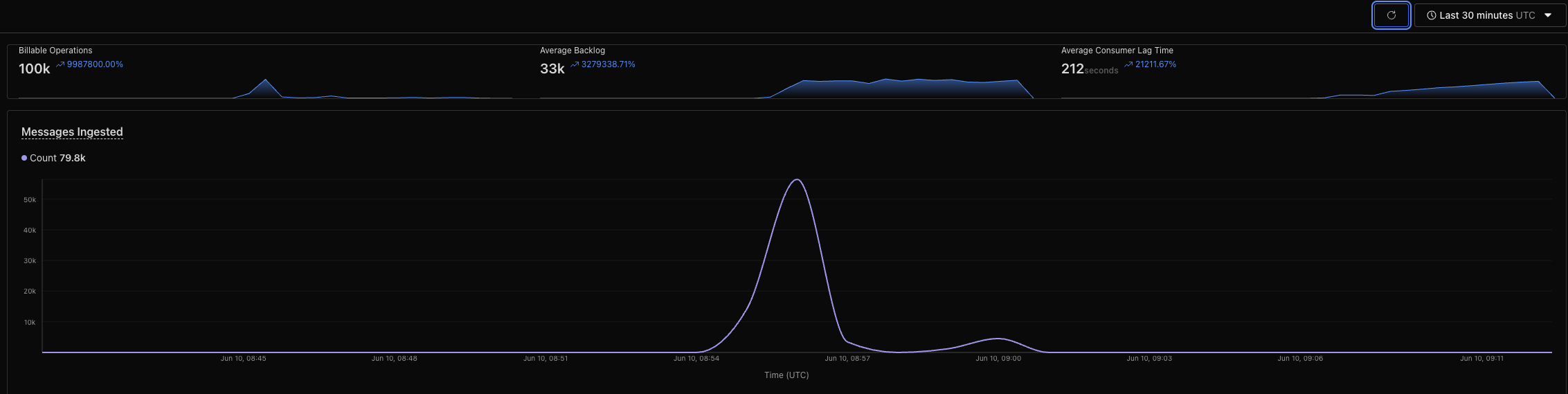

fa75809b889646e1bb7e87bd33fe735d (we created a new one in case it solved something haha)

Are you expecting the 5K seconds we predict to go much lower?

fa75809b889646e1bb7e87bd33fe735d? I don't see any traffic on this one for the last 7 days

f024d80269db4736a73e60db222dec7d

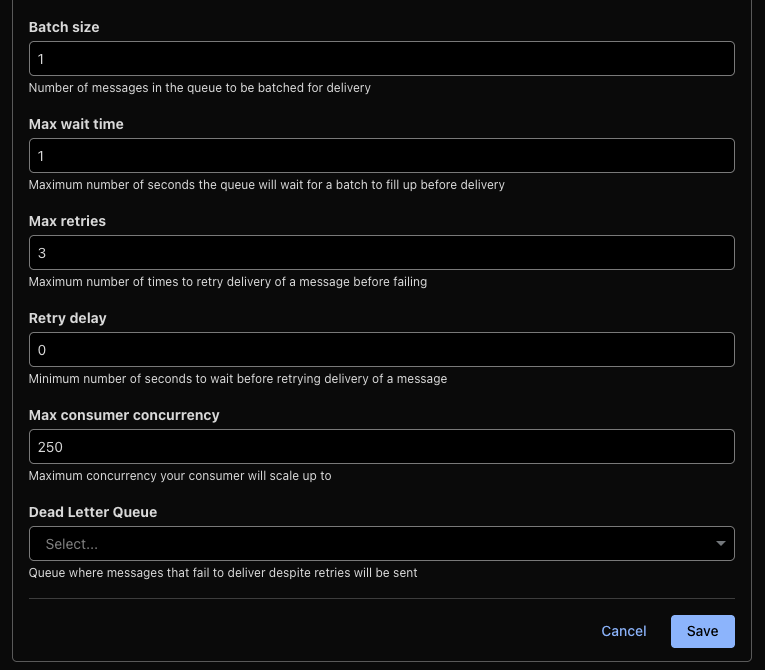

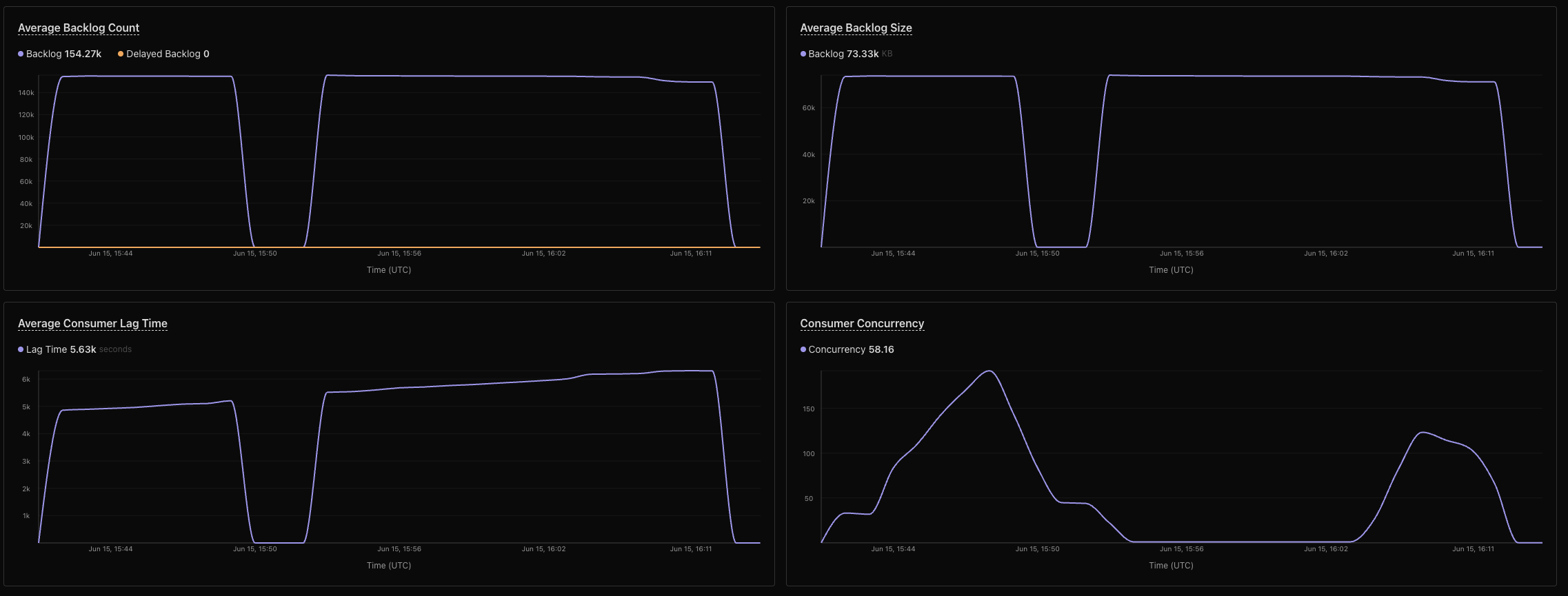

batch_size: 50,

max_concurrency: 250,

max_retries: 3,

max_wait_time_ms: 0
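
For a rough sanity check on the numbers in this thread, here is a minimal Python sketch. It only does arithmetic on the values quoted above. The helper names `max_messages_in_flight` and `seconds_to_days` are made up for illustration, and the "in flight" figure assumes every concurrent consumer is holding one full batch at the same time, which may not match how the queue actually schedules work.

```python
# Values copied from the settings quoted in the thread.
settings = {
    "batch_size": 50,
    "max_concurrency": 250,
    "max_retries": 3,
    "max_wait_time_ms": 0,
}

SECONDS_PER_DAY = 24 * 60 * 60  # 86400


def max_messages_in_flight(s: dict) -> int:
    """Upper bound, ASSUMING each concurrent consumer holds one full batch."""
    return s["batch_size"] * s["max_concurrency"]


def seconds_to_days(seconds: int) -> float:
    """Convert a duration in seconds to days."""
    return seconds / SECONDS_PER_DAY


print(max_messages_in_flight(settings))  # 12500
print(seconds_to_days(345600))           # 4.0
```

So under that assumption the settings allow at most 12,500 messages to be processed at once, and the 345600 seconds mentioned earlier works out to 4 days.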