Stop db connection usage from rising while avoiding "Cannot perform I/O of a different request"

I'm trying to create a db connection function that doesn't create a new db connection for every new request. I'm using Kysely and MySQL:

I've tried:

However, this leads to fatal errors in Workers with:

How do people handle db re-use?

5 Replies

There are only two real options for DB connection reuse in Workers:

- manually store and manage the connection in a Durable Object and run all queries there

- or, more easily and what I’d recommend, just use Hyperdrive: https://developers.cloudflare.com/hyperdrive/
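For the Hyperdrive option, a minimal sketch of mapping a Hyperdrive binding onto mysql2-style pool options might look like the following. The interface names and the function `poolOptionsFromHyperdrive` are hypothetical and declared locally so the snippet stands alone. The `connectionLimit: 1` value is an assumption, not a documented requirement. `disableEval: true` mirrors what Cloudflare's mysql2 example sets for Workers, as far as I know.

```typescript
// Subset of the fields a Hyperdrive binding exposes (declared locally for this sketch).
interface HyperdriveLike {
  host: string;
  port: number;
  user: string;
  password: string;
  database: string;
}

// Subset of mysql2 pool options used here (hypothetical local type, not imported).
interface PoolOptionsLike {
  host: string;
  port: number;
  user: string;
  password: string;
  database: string;
  connectionLimit: number;
  disableEval: boolean;
}

// Hypothetical helper: copies the binding's credentials into pool options.
function poolOptionsFromHyperdrive(hd: HyperdriveLike): PoolOptionsLike {
  return {
    host: hd.host,
    port: hd.port,
    user: hd.user,
    password: hd.password,
    database: hd.database,
    // Assumption: one connection per request is enough, since Hyperdrive
    // does the real pooling on its side.
    connectionLimit: 1,
    // Workers disallow eval-style code generation, which mysql2 otherwise uses.
    disableEval: true,
  };
}
```

The returned object would then be passed to mysql2's `createPool`, with `env.HYPERDRIVE` (or whatever the binding is named in your config) as the argument.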

Hyperdrive is a service that accelerates queries you make to existing databases, making it faster to access your data from across the globe from Cloudflare Workers, irrespective of your users' location.

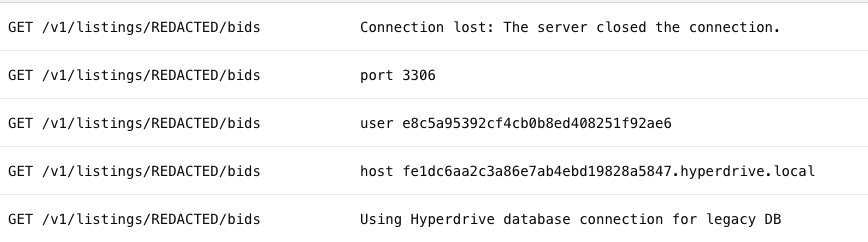

Thanks, I did give that a go but hit a wall with "Connection lost: The server closed the connection."

I console-logged the env values to confirm they were being read correctly.

"mysql2": "3.14.1"

I'm connecting to Hyperdrive using mysql2's createPool; is that an issue? It seems like Kysely only supports connecting with a pool.
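On the pool question, one pattern that sidesteps the "different request" error is to build the pool (and the Kysely instance wrapping it) inside each request and destroy it before returning, leaving the actual connection reuse to Hyperdrive. The sketch below shows only that lifecycle. `withRequestDb` and `Destroyable` are hypothetical names, and it is an assumption that this addresses the "Connection lost" error described above.

```typescript
// Minimal shape shared by a Kysely instance (which has destroy()) and test doubles.
interface Destroyable {
  destroy(): Promise<void>;
}

// Hypothetical helper: creates a client for one request and always tears it down.
async function withRequestDb<T extends Destroyable, R>(
  createDb: () => T,
  handler: (db: T) => Promise<R>,
): Promise<R> {
  // Created inside the request, never stored at module scope.
  const db = createDb();
  try {
    return await handler(db);
  } finally {
    // Runs on success and on error, so no socket outlives its request.
    await db.destroy();
  }
}
```

With Kysely, `createDb` could be something like `() => new Kysely({ dialect: new MysqlDialect({ pool: createPool(options) }) })`, called from inside the `fetch` handler so every I/O object belongs to the request that uses it.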

Hmm, I wouldn't expect it to be an issue. You might try posting in #hyperdrive - the team there is very active and receptive to questions and feedback.