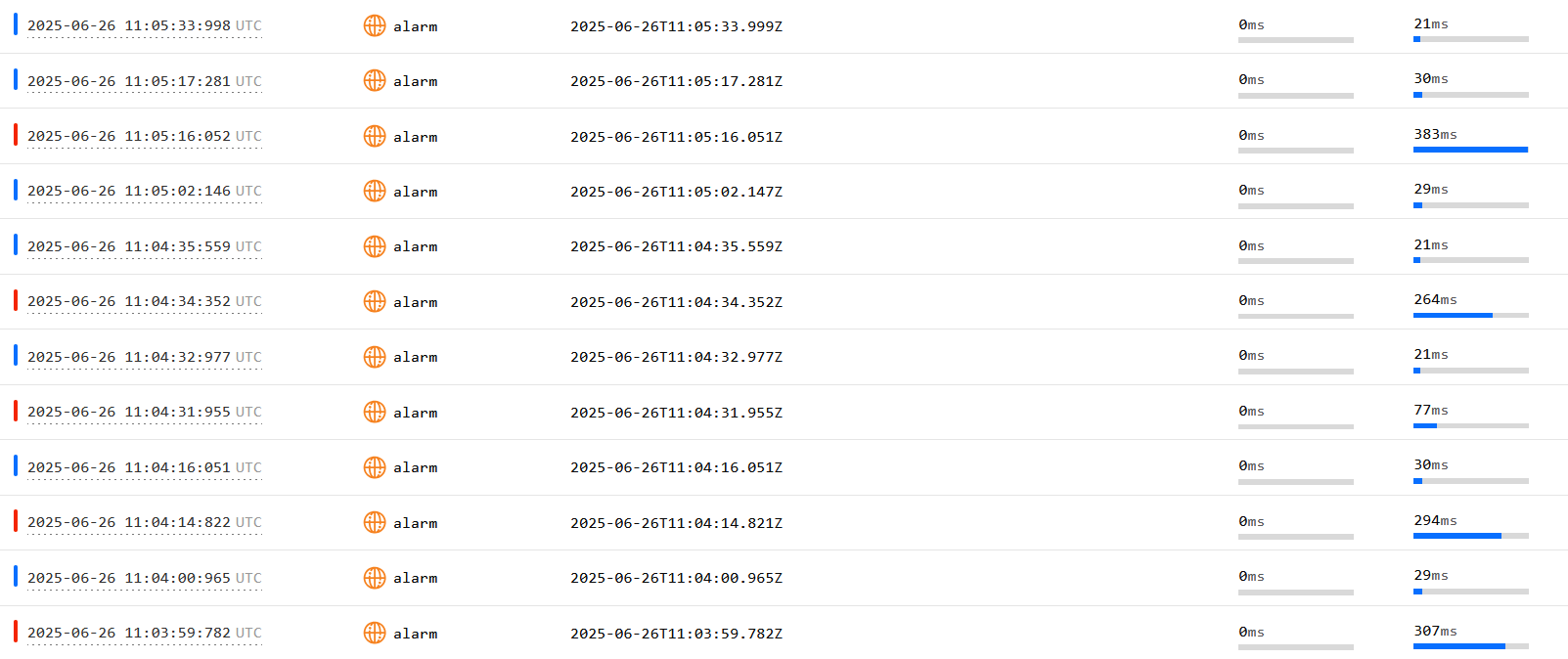

I don't quite understand what I did, but I seem to have an endless series of timers that constantly respawn my dead containers

when i `wrangler deploy` they all go inactive, but then they pop back up again, presumably because of the alarm triggers 💀

(just wanted to flush some logs to R2 every 60s)

i think my code was just dumb but i wanted to share because it was kind of a silly situation
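
For anyone who hits the same respawn loop: a minimal sketch of a 60s flush that only re-arms its timer while it is explicitly marked active, so a stopped instance is not woken again. This is not the code from the post. `LogFlusher`, `AlarmStorage`, and the `flush` callback are hypothetical names; only `setAlarm` and `deleteAlarm` are meant to mirror the Durable Object storage alarm methods, and the R2 write is left as an injected function.

```typescript
// Hypothetical slice of the storage API: only the two alarm calls this sketch needs.
interface AlarmStorage {
  setAlarm(scheduledTime: number): Promise<void>;
  deleteAlarm(): Promise<void>;
}

const FLUSH_INTERVAL_MS = 60_000;

class LogFlusher {
  private storage: AlarmStorage;
  // Assumed to wrap the actual upload (for example an R2 put); not shown here.
  private flush: (lines: string[]) => Promise<void>;
  private active = false;
  private buffer: string[] = [];

  constructor(storage: AlarmStorage, flush: (lines: string[]) => Promise<void>) {
    this.storage = storage;
    this.flush = flush;
  }

  // Arm the first alarm when the instance starts doing real work.
  async start(now: number): Promise<void> {
    this.active = true;
    await this.storage.setAlarm(now + FLUSH_INTERVAL_MS);
  }

  log(line: string): void {
    this.buffer.push(line);
  }

  // Mark the instance as done and cancel any pending alarm.
  async stop(): Promise<void> {
    this.active = false;
    await this.storage.deleteAlarm();
  }

  // Intended to be called from the object's alarm() handler.
  async onAlarm(now: number): Promise<void> {
    if (this.buffer.length > 0) {
      const lines = this.buffer;
      this.buffer = [];
      await this.flush(lines);
    }
    // Re-arm only while active; an unconditional setAlarm here is what
    // would keep waking an instance that should stay dead.
    if (this.active) {
      await this.storage.setAlarm(now + FLUSH_INTERVAL_MS);
    }
  }
}
```

One caveat with this sketch: `active` and `buffer` live in memory, so after an eviction the next alarm would find `active === false` and simply not re-arm. That stops the loop, but anything still buffered would be lost.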

2 Replies

This is actually super handy for me honestly - I have no other way of killing instances if I messed something up during dev

I'm using the standard containers with a 6-minute timeout, and I think I hit some limit at 4 containers. If I accidentally triggered all 4, I'd be stuck for 6 minutes before they died

And maybe I could get fully stuck if I accidentally trigger-looped the instances?

I only fixed this because I deployed and they took my fix, but maybe that would have still worked even if a deploy didn't kill them :shrug:

A button on the web UI to kill an instance (and any associated alarms / etc) would fix this I think