Has anyone solved this kind of error yet

Has anyone solved this kind of error yet? Would love ideas on what to try to get it working.

12 Replies

Hey @surgery18, did you by any chance change `CMD ["rails", "server", "-b", "0.0.0.0"]` in the Dockerfile to add 0.0.0.0?

I am asking because we discovered an issue yesterday where a Dockerfile-only change does not trigger a new image to be built and pushed by wrangler. The content of the image has to change.

So something to try, to make sure that's not the issue you are hitting, is to make a no-op change to a file that gets bundled in your container image.

I am using `npx wrangler containers build` to make and push the image. Also, the bind to 0.0.0.0 was already in my Dockerfile when I first sent it. I just tried changing the port to 8080 to see if 3000 somehow had an issue, and got the same results.

Thanks, and just to confirm: that port change in both your Workers script and container image resulted in an image push and deploy?

Yes, I pushed the docker port change manually by pushing the docker image and then deployed the worker script.

I am noticing in the logs that it doesn't always show the output of the server running either. Like it isn't fully running the docker image or something? Only sometimes do I see in the logs the server is running. But running the docker image locally works just fine.

And I have tried destroying the worker + containers and repushing them, with the same results.

By "same results" I mean this error: `The container is not listening in the TCP address 10.0.0.1:8080`

I'll try to repro with a very minimal rails app
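For anyone hitting the same "not listening" error, one way to rule out the bind address locally is to probe the published port from outside the container. The sketch below is only a generic TCP check and not anything wrangler provides; the host, the port, and the `docker run -p 8080:8080` invocation in the comment are assumptions for illustration. A server bound only to 127.0.0.1 inside the container would fail this probe through a published port, while one bound to 0.0.0.0 should pass.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Assumes the image was started locally with the port published,
    # e.g. `docker run -p 8080:8080 <image>`; host and port are illustrative.
    print(is_listening("127.0.0.1", 8080))
```

If this passes locally but the deployed container still reports the error, the process may not be staying up in the deployed environment, which is a different problem from the bind address.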

I am also getting this:

`A call to blockConcurrencyWhile() in a Durable Object waited for too long. The call was canceled and the Durable Object was reset.`

Just in case you need to know that as well.

I am able to repro with https://github.com/th0m/rails/commit/0e4e05d0d75e297da58c59fac9583702c424296d, let me see what is going on

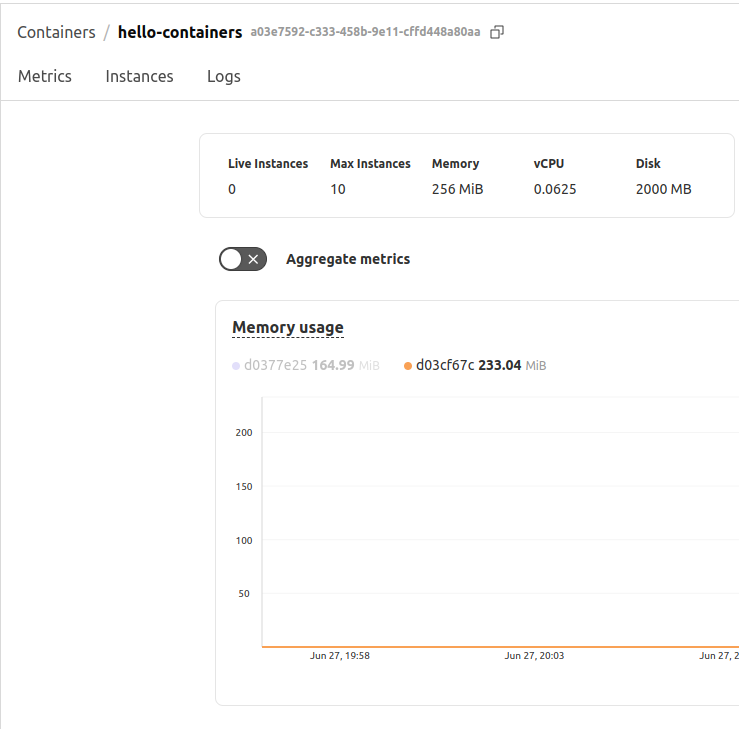

I think the app gets OOM killed on the dev instance

256 MiB is probably too low, let me see how much memory it's using locally
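A rough way to take that local measurement is to read a one-shot sample from `docker stats` and compare it with the 256 MiB figure mentioned above. This is a minimal sketch, assuming the Docker CLI is on the PATH and the app is already running locally; the container name `rails-app` is hypothetical, and local usage will not necessarily match what the app uses once deployed.

```python
import re
import subprocess

# Binary-unit multipliers, expressed in MiB.
_UNITS_TO_MIB = {"B": 1 / 1024**2, "KiB": 1 / 1024, "MiB": 1.0, "GiB": 1024.0}


def parse_mem_usage_mib(mem_usage: str) -> float:
    """Parse the 'used' half of a string like '312.4MiB / 7.67GiB' into MiB."""
    used = mem_usage.split("/")[0].strip()
    match = re.fullmatch(r"([0-9.]+)\s*(B|KiB|MiB|GiB)", used)
    if match is None:
        raise ValueError(f"unrecognized memory value: {used!r}")
    return float(match.group(1)) * _UNITS_TO_MIB[match.group(2)]


def container_mem_mib(container: str) -> float:
    """Ask the local Docker CLI for a one-shot memory reading of a container."""
    out = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{.MemUsage}}", container],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_mem_usage_mib(out.strip())


if __name__ == "__main__":
    LIMIT_MIB = 256  # dev instance memory mentioned in this thread
    used = container_mem_mib("rails-app")  # hypothetical container name
    print(f"{used:.0f} MiB used; fits in {LIMIT_MIB} MiB: {used < LIMIT_MIB}")
```

A single sample can miss the peak during boot, so it is worth sampling a few times while the server starts and handles its first requests.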

Yes, I can confirm it works on `"instance_type": "basic",`. Can you test it, @surgery18?

@Thomas Lefebvre Same issue. But let me try redeploying fresh. I will destroy the instances, redeploy, and see if it works.

Yes, basic type did work. I guess it was a memory issue or something then.

Thanks for the help @Thomas Lefebvre

No problem. We're wondering if there is something we can do to surface these better. I need to check exactly what's happening in this particular case, because I have seen OOM error logs printed in the container logs tab before.