Keeping memory under control

I'm hoping to launch a small private SMP where up to 10 players might play concurrently. I am testing the performance of DH-Plugin and noticing that even in a world where all LOD data was pregenerated, just flying around the map toward places where LODs haven't been sent to me yet makes the server gobble up my memory and cause significant GC lag. Why is just sending data to the client so resource-intensive? If this is how the server behaves with one player, I'm not sure whether it can handle five or ten people, especially 5-10 people who will actually be modifying terrain instead of just receiving LOD data. Everything is fine without DH-Plugin, by the by. I'm not running into the crashing mentioned in the FAQ either; it's just frequent lag spikes caused by GC.

https://spark.lucko.me/FcPfHRKFvM

- The server has two dedicated threads of an AMD Ryzen 9 5950X. I can get more threads if I really need them for this plugin, but ideally two should be enough.

- The server has 8GB of RAM, which is quite respectable for a Paper server and should be enough for DH-Plugin.

- I used the "low core count" flags from the plugin wiki, but they didn't make any difference, and my host advised me to switch back to their default (Aikar's) flags.

Running a bleeding-edge dev build to take advantage of a new feature. And here is my current config:


I've run the plugin on a Raspberry Pi without issues. Which part of this trace is problematic for you?

Currently on mobile, so navigating the trace is not very convenient

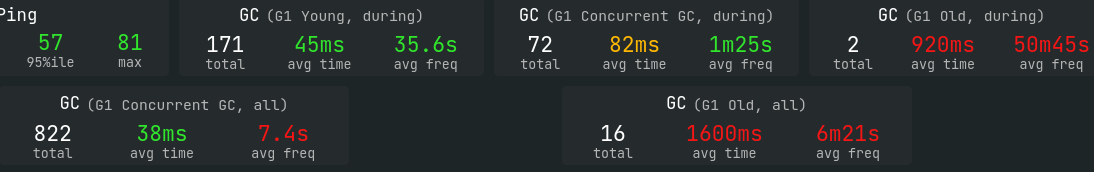

Also on mobile, so I'll send a screenshot when I get back, but a lot of the G1 times are whacked out.

The RAM inflates by almost 1GB per second, according to repeated runs of tps mem.

This is a report from a non-DH client joining the DH-Plugin server. The biggest lag spike happened when I approached a naturally generated sand structure that had been pregenned but never loaded by players. So I'm guessing it's not necessarily sending LODs to players, but updating LODs server-side?

https://spark.lucko.me/QDxSkKZNLR


And this is with DH-Plugin disabled server-side, which didn't lag at all, and memory pressure was <4GB per GC.

https://spark.lucko.me/ajbOohLzgr


All three reports were generated with --alloc, but I don't know enough about the JVM to understand the data. Hopefully I've sufficiently demonstrated that DH-P is the memory hog, though, and not something else.

Did you pregen LODs using /dhs pregen, or just pregen chunks?

The plugin will load 16 chunks per LOD to generate, and with the default thread count of 4, that's 64 chunks in parallel at any given moment.

LODs were pregenned, not just the chunks.
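The 64-chunk figure is just threads × 16, so chunks held at once scale linearly with the thread count. A trivial sketch, assuming each generator thread works on exactly one 4x4 LOD area at a time (the class and method names are made up for illustration):

```java
// Back-of-the-envelope estimate of chunks held in memory by LOD generation.
// Assumption (from the thread): one LOD = a 4x4 chunk area, and each
// generator thread builds one LOD at a time.
class LodChunkEstimate {
    static final int CHUNKS_PER_LOD = 4 * 4;

    // Chunks that may be loaded simultaneously across all generator threads.
    static int chunksInFlight(int generatorThreads) {
        return generatorThreads * CHUNKS_PER_LOD;
    }

    public static void main(String[] args) {
        for (int threads : new int[] {4, 2, 1}) {
            System.out.println(threads + " thread(s) -> " + chunksInFlight(threads) + " chunks");
        }
    }
}
```

Running it prints 64, 32 and 16 chunks for 4, 2 and 1 threads respectively.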

I'll see what decreasing threads to 2 does for me.

Do you think there's any merit in rate limiting the LODs being sent out to players?

There are a couple of ways to rate limit. The first is to limit the number of LOD requests clients can send in parallel; the other is to limit the bandwidth used. The bandwidth limit is not implemented in the plugin, though.

In any case, this would only be a band-aid, not a real fix.
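Limiting how many LOD requests a client can have in flight at once can be sketched with one java.util.concurrent.Semaphore per player. This is a hypothetical illustration of the idea behind a setting like full_data_request_concurrency_limit, not the plugin's implementation; LodRequestLimiter, tryStart, finish and forget are made-up names.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Semaphore;

// Hypothetical per-player cap on concurrent LOD requests (not the plugin's code).
class LodRequestLimiter {
    private final int limit;
    private final Map<UUID, Semaphore> permits = new ConcurrentHashMap<>();

    LodRequestLimiter(int limit) {
        this.limit = limit;
    }

    // true: the request may start now; false: the client must retry later.
    // tryAcquire() never blocks, so no server thread waits on a slow client.
    boolean tryStart(UUID player) {
        return permits.computeIfAbsent(player, p -> new Semaphore(limit)).tryAcquire();
    }

    // Call exactly once per successful tryStart, when that request finishes.
    void finish(UUID player) {
        Semaphore s = permits.get(player);
        if (s != null) {
            s.release();
        }
    }

    // Drop the player's state on disconnect.
    void forget(UUID player) {
        permits.remove(player);
    }
}
```

With a limit of 5, the sixth simultaneous request from the same player is rejected until one of the first five calls finish.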

Based on that intermediate spark report with the client disabled, I think regenerating LODs on update might be the issue?

You can increase the lod_refresh_interval setting to perform fewer updates. The default is 5 seconds.

I'll play around with the config in the morning (2:30am here), thanks.
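One way a refresh interval like lod_refresh_interval can cut work is by coalescing: events only mark an LOD as dirty, and each dirty LOD is rebuilt at most once per interval no matter how many block changes hit it. The sketch below assumes that model; it is not taken from the plugin, and LodUpdateCoalescer and its methods are hypothetical. Time is passed in explicitly so the behaviour is easy to check.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical coalescing of LOD updates: many changes inside one LOD during
// an interval collapse into a single rebuild.
class LodUpdateCoalescer {
    private final long intervalMillis;
    private final Set<Long> dirty = new HashSet<>();
    private long lastFlush;

    LodUpdateCoalescer(long intervalMillis, long nowMillis) {
        this.intervalMillis = intervalMillis;
        this.lastFlush = nowMillis;
    }

    // Pack an LOD's x/z coordinates into a single long key.
    static long key(int lodX, int lodZ) {
        return ((long) lodX << 32) | (lodZ & 0xFFFFFFFFL);
    }

    // Called from an event listener; cheap, no rebuild happens here.
    synchronized void markDirty(int lodX, int lodZ) {
        dirty.add(key(lodX, lodZ));
    }

    // Called periodically; returns the LOD keys to rebuild, or an empty
    // list if the interval has not elapsed yet.
    synchronized List<Long> pollDue(long nowMillis) {
        if (nowMillis - lastFlush < intervalMillis) {
            return List.of();
        }
        lastFlush = nowMillis;
        List<Long> due = new ArrayList<>(dirty);
        dirty.clear();
        return due;
    }
}
```

A longer interval means more events fold into the same rebuild, at the cost of LODs lagging further behind the real terrain.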

I have an idea to let server owners decide which events should trigger LOD updates. Right now the list is pretty long, and includes stuff like water/lava flowing and leaves decaying.

Limiting it to, for instance, only block placing/breaking should reduce the number of updates quite substantially.
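Restricting updates to a configured set of events can be as simple as a set lookup on the event's class name. A sketch, assuming names are matched by simple class name (the real plugin may match differently); LodEventFilter is a made-up name, and plain Java classes stand in for Bukkit events.

```java
import java.util.List;
import java.util.Set;

// Hypothetical filter: only events whose class name is in the configured
// list trigger an LOD update.
class LodEventFilter {
    private final Set<String> enabled;

    LodEventFilter(List<String> configuredEventNames) {
        this.enabled = Set.copyOf(configuredEventNames);
    }

    boolean shouldTrigger(Object event) {
        return enabled.contains(event.getClass().getSimpleName());
    }

    // Stand-ins for Bukkit event classes, for the demo only.
    static class BlockPlaceEvent {}
    static class LeavesDecayEvent {}

    public static void main(String[] args) {
        LodEventFilter filter = new LodEventFilter(List.of("BlockPlaceEvent", "BlockBreakEvent"));
        System.out.println(filter.shouldTrigger(new BlockPlaceEvent()));  // true
        System.out.println(filter.shouldTrigger(new LeavesDecayEvent())); // false
    }
}
```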

All right, so I decreased the number of scheduler threads to 1, bumped the update delay to 10 seconds, and kneecapped full_data_request_concurrency_limit to 5. The result is a vastly improved situation, but not necessarily a fixed one. Over about an hour of flying around, memory usage is still ballooning by 1GB+ per second in many situations, but less frequently, and this was almost always handled by G1 Young with no performance hit. However, the main source of my performance issue remains: during that hour there was a situation where RAM filled up too quickly for the normal GC, and G1 Old caused a 2300ms lag spike cleaning up the mess.

I consider the current situation playable. Not great, but I wouldn't blame you at all if you want to end support here. But, like you said, this is really a band-aid fix, and if you're still willing to work with me I'd like to get to the bottom of this, as the overall memory pressure caused by the plugin is quite high and will only get worse with more players, not better. I think a good step forward would be to have some way to figure out how many LOD update regenerations are happening per second, for which chunks, and why. The performance is especially bad when exploring, even though all LODs are pregenned, so I can't help but suspect something is bypassing the config limits somehow. Also, I don't think the bottleneck is having the chunks load on only one Paper async thread (basically required since I only have two threads total), because MSPT rarely rose above 10ms. This seems to be purely a RAM issue, not a CPU or disk issue.

Spark after config change:

https://spark.lucko.me/k0TzErZMrI


I am also interested in fixing this, so you won't be abandoned 😅 I'll add a debug log message to events that trigger LOD updates, and to the update process itself

Something like:

BlockPlaceEvent detected in LOD at x,y. Performing 7 LOD updates...

Of course you'd get 7 events if there really were 7 updates

awesome thanks

FWIW, this post possibly stems from the same underlying memory issue, albeit with a less capable garbage collector: https://discord.com/channels/881614130614767666/1397685457566961746

Pushed some new stuff to Gitlab now

Config file now has a list of events to observe for LOD updates, and the option to enable debug logging which will be very verbose about updates

I'll be able to test it out tomorrow, thank you!

update_log.txt, uploaded to mclo.gs by hjk321

So it looks like by far the noisiest events are things growing, decaying, and igniting. I'm going to try disabling those specifically and bumping the generation delay to 15 seconds. The main thing I'm curious about at this point, though, is which part of LOD generation is taking up the most memory. I'm estimating the footprint of a single LOD update approaches 100MB.

These are the ones I disabled (after the logs above), based on what triggers the most but is non-essential to the player experience. For instance, leaves decaying are unlikely to be player-facing unless the wood supporting them was broken or burned, likely by a player.

This is very useful for keeping the usage down. Thank you. I really can't think of anything else to do besides optimizing the LOD generation process itself to use less memory, which is probably too much of an undertaking and doesn't seem to be strictly necessary for my use case.

Thank you again for everything! Memory pressure seems reasonably low now

The way the LOD builders work is that they first load an area of 4x4 chunks, then take a snapshot of each chunk in order to work with it asynchronously, build the LOD and save it to the DB, and finally unload the 4x4 chunk area they loaded.

I suspect the raw chunks and snapshots are the bulk of the memory footprint

The process can probably be refined a bit to have a lower peak usage, but the total memory throughput (and hence the GC load) will be the same
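The load, snapshot, build, save, unload sequence can be outlined with CompletableFuture. Everything here is a stand-in (World, LodStore, and the String used as an "LOD" are hypothetical), and Bukkit's rules about which thread may load or unload chunks are ignored. The point it illustrates is that all 16 snapshots stay reachable until the async build finishes, which fits the suspicion that raw chunks and snapshots dominate the footprint.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;

// Hypothetical outline: load 4x4 chunks -> snapshot each -> build LOD async
// -> save -> unload the area. Not the plugin's API.
class LodBuildPipeline {
    interface World {
        Object loadChunk(int chunkX, int chunkZ);
        Object snapshot(Object chunk);           // copy that is safe to read off-thread
        void unloadChunk(int chunkX, int chunkZ);
    }

    interface LodStore {
        void save(int lodX, int lodZ, String lod);
    }

    static CompletableFuture<Void> build(World world, LodStore store,
                                         int lodX, int lodZ, Executor async) {
        // Step 1 (caller's thread): load the 4x4 area and snapshot every chunk.
        List<Object> snapshots = new ArrayList<>(16);
        for (int dx = 0; dx < 4; dx++) {
            for (int dz = 0; dz < 4; dz++) {
                Object chunk = world.loadChunk(lodX * 4 + dx, lodZ * 4 + dz);
                snapshots.add(world.snapshot(chunk));
            }
        }
        // Steps 2-3 (async): the snapshots stay reachable until the build is done.
        return CompletableFuture
                .supplyAsync(() -> "LOD built from " + snapshots.size() + " snapshots", async)
                .thenAccept(lod -> store.save(lodX, lodZ, lod))
                // Step 4: unload the area whether or not the build succeeded.
                .whenComplete((ignored, error) -> {
                    for (int dx = 0; dx < 4; dx++) {
                        for (int dz = 0; dz < 4; dz++) {
                            world.unloadChunk(lodX * 4 + dx, lodZ * 4 + dz);
                        }
                    }
                });
    }
}
```

Passing Runnable::run as the executor runs the build inline, which is handy for a quick test; a real plugin would presumably use a bounded pool sized by the thread-count setting discussed earlier.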

Thanks for the event list. I might set that as the default

BlockFadeEvent would be the only one I'd be iffy about disabling by default. I'm not sure exactly what's fading, just that it triggers a lot.

From the docs:

Called when a block fades, melts or disappears based on world conditions

Examples:

- Snow melting due to being near a light source.
- Ice melting due to being near a light source.
- Fire burning out after time, without destroying fuel block.
- Coral fading to dead coral due to lack of water
- Turtle Egg bursting when a turtle hatches

If you were seeing a lot of igniting, then it makes sense that you also got a lot of fading

I see. And I'm guessing the events in this list are the only ones supported, right? You're not doing some kind of dynamic registration? I can think of some instances where I'd want to trigger an LOD refresh through the API (e.g., after a FAWE operation).

You can enter any event class name

But the event must implement either blockList(), getBlocks(), or getBlock()

Might be worth mentioning in the config
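Accepting arbitrary event classes that expose blockList(), getBlocks(), or getBlock() is typically done with reflection. A hypothetical sketch (EventBlockExtractor and its fallback of returning an empty list are assumptions, not the plugin's code) that tries those three methods in order and returns whatever the first one found yields:

```java
import java.lang.reflect.Method;
import java.util.Collection;
import java.util.List;

// Hypothetical helper: pull affected blocks out of any event object by trying
// blockList(), then getBlocks(), then getBlock(). Returns an empty list when
// none of them exist or the call fails.
class EventBlockExtractor {
    private static final String[] ACCESSORS = {"blockList", "getBlocks", "getBlock"};

    static List<?> blocksOf(Object event) {
        for (String name : ACCESSORS) {
            Method accessor;
            try {
                accessor = event.getClass().getMethod(name); // public, no-arg
            } catch (NoSuchMethodException e) {
                continue; // try the next accessor name
            }
            try {
                Object result = accessor.invoke(event);
                if (result instanceof Collection<?> c) {
                    return List.copyOf(c); // blockList()/getBlocks() style
                }
                return result == null ? List.of() : List.of(result); // getBlock() style
            } catch (ReflectiveOperationException e) {
                return List.of();
            }
        }
        return List.of();
    }
}
```

In a real plugin the Method lookup would be cached per event class rather than repeated for every event.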

Thanks again for everything

I'm not sure if StructureGrowEvent actually works as anticipated for LODs.

I'm getting this a lot.

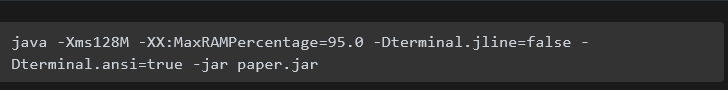

Hi, since you already have a thread for the same issue I have, I'm just going to ask my question here. Do you know how I can stop my server from running out of memory? I already have 1 scheduler thread, and the startup flags are set up correctly. The server is running on a Pterodactyl panel, and I am using Minecraft version 1.21.5. (By the way, the server has 7GB of memory, and I have already tried both the latest release and the nightly build.)

Try decreasing the percentage to 90

Just did that, but it still crashes after a while.

Crashes, or is killed? Please show a screenshot from Pterodactyl when it happens, including the out of memory error message.

Sorry for the late response; I thought I had enabled pings for every message here. But it just gets killed, with no errors from Distant Horizons.

Check the system logs on the host that is running Wings. It will tell you if the process was killed for attempting to over-allocate memory.

Mmh, I altered some startup flags and it seems to be stable, although the RAM stays at 7.5/8GB

even though nobody is on right now.

Depending on the exact flags, Java might allocate the entire heap size immediately.
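From inside the JVM you can tell "reserved" apart from "used": Runtime reports the heap limit, how much heap is currently committed, and how much of the committed heap is occupied. Assuming flags such as -Xms set equal to -Xmx plus -XX:+AlwaysPreTouch (both part of Aikar's commonly used flags), the committed heap is close to the maximum from startup, so a panel that shows the container's memory will look nearly full even on an empty server. HeapReport is a made-up name; this is just a quick diagnostic sketch.

```java
// Prints the heap limit, the heap the JVM has currently committed, and how
// much of that is occupied (live objects plus garbage not yet collected).
class HeapReport {
    static final long MIB = 1024L * 1024L;

    // Returns {max, committed, used} in bytes.
    static long[] snapshot() {
        Runtime rt = Runtime.getRuntime();
        long max = rt.maxMemory();                // upper bound, roughly -Xmx
        long committed = rt.totalMemory();        // heap currently reserved by the JVM
        long used = committed - rt.freeMemory();  // occupied part of the committed heap
        return new long[] {max, committed, used};
    }

    public static void main(String[] args) {
        long[] s = snapshot();
        System.out.printf("max=%d MiB, committed=%d MiB, used=%d MiB%n",
                s[0] / MIB, s[1] / MIB, s[2] / MIB);
    }
}
```

If "committed" sits near "max" while "used" is low, the high number in the panel is the reserved heap rather than a leak.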