Managed Rules block Google Crawls

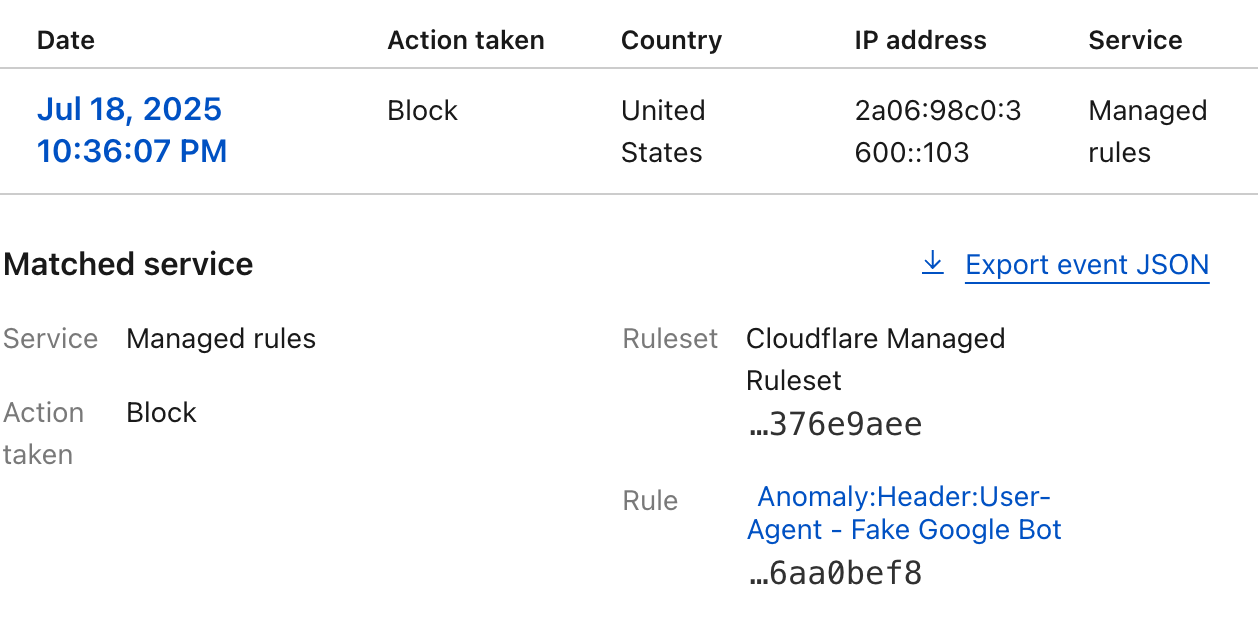

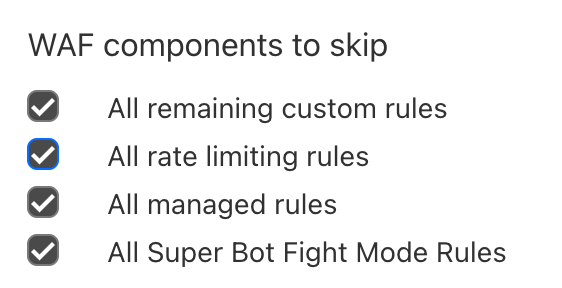

We've seen a significant increase in Google encountering 403 (permission denied) errors, which have climbed into the thousands in our Google Search Console. I investigated this in detail and found that Cloudflare's Managed Rules, in particular the "Fake Google User Agent" rule, are blocking genuine Google crawls. Has anyone else encountered this? Is there any solution other than turning off that managed rule?
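One way to confirm that the blocked requests really are genuine Google crawls is the reverse-then-forward DNS check Google describes for verifying Googlebot: look up the hostname for the IP, check that it is under a Google domain, then check that the hostname resolves back to the same IP. Below is a minimal Python sketch under stated assumptions. The function names are hypothetical, the domain list covers only Googlebot's own hostnames (other Google fetchers may use additional domains), and the IPs to test would come from the blocked events in your Cloudflare logs. It only verifies requests; it does not change the Cloudflare rule.

```python
import ipaddress
import socket
from typing import Callable, Iterable

# Domains Google documents for Googlebot's reverse-DNS hostnames.
# Assumption: other Google fetchers may use additional domains.
GOOGLEBOT_DOMAINS = ("googlebot.com", "google.com")


def is_google_hostname(hostname: str) -> bool:
    """Return True if hostname is a Googlebot domain or a subdomain of one."""
    host = hostname.rstrip(".").lower()
    return any(host == d or host.endswith("." + d) for d in GOOGLEBOT_DOMAINS)


def reverse_lookup(ip: str) -> str:
    """PTR lookup: the primary hostname registered for this IP."""
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname: str) -> Iterable[str]:
    """A/AAAA lookup: every address the hostname resolves to."""
    return {info[4][0] for info in socket.getaddrinfo(hostname, None)}


def is_genuine_googlebot(
    ip: str,
    reverse: Callable[[str], str] = reverse_lookup,
    forward: Callable[[str], Iterable[str]] = forward_lookup,
) -> bool:
    """Reverse-resolve ip, check the domain, then confirm the name maps back to ip."""
    try:
        addr = ipaddress.ip_address(ip)
        hostname = reverse(ip)
        if not is_google_hostname(hostname):
            return False
        # Normalise so IPv6 spellings like "2001:DB8::1" and "2001:db8::1" compare equal.
        return addr in {ipaddress.ip_address(a) for a in forward(hostname)}
    except (OSError, ValueError):
        # Invalid IP string, no PTR record, or the forward lookup failed.
        return False
```

The `reverse` and `forward` parameters default to real DNS lookups but can be swapped for stubs, which keeps the check testable without network access. If the client IPs behind the 403s pass this check, that supports the conclusion that the rule is flagging real Googlebot traffic rather than impostors.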