Yes. I just added logging and it

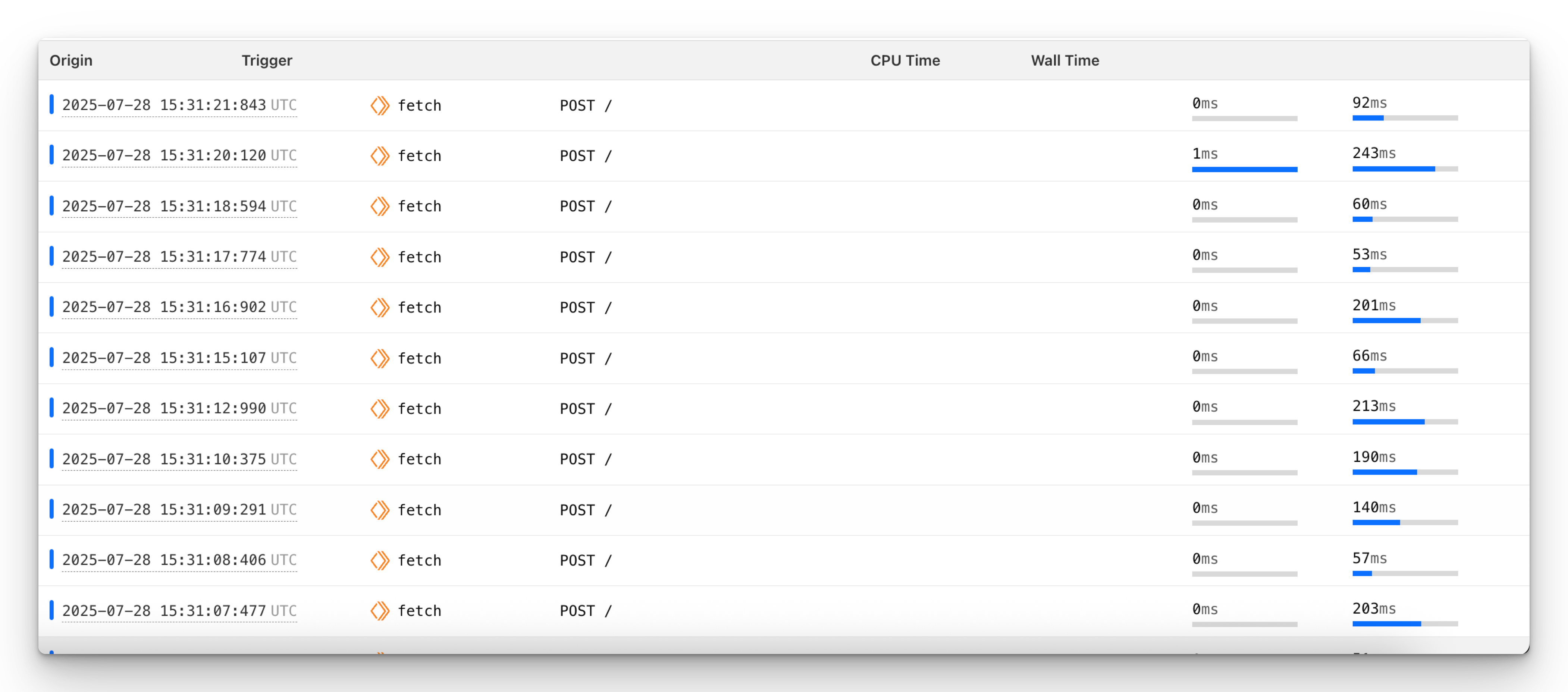

started fluctuating too. I tried multiple requests, with times ranging from 60ms to 700ms

saving to queue time is 294ms

saving to queue time is 198 ms

const startTime = Date.now()

await env.TRACKING_QUEUE.send(data);

console.log(`saving to queue time is ${Date.now() - startTime} ms`);
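To compare runs more easily than by reading individual log lines, here is a rough sketch of how the timings could be collected and summarized. `timeAsync` and `summarize` are hypothetical helper names, not part of the Workers or Queues API. The only call taken from my code is `env.TRACKING_QUEUE.send(data)`, and it appears only in the commented usage.

```javascript
// Hypothetical helper: times one async call and returns elapsed milliseconds.
async function timeAsync(fn) {
  const start = Date.now();
  await fn();
  return Date.now() - start;
}

// Hypothetical helper: reduces a list of millisecond samples to min/median/max.
function summarize(samples) {
  if (samples.length === 0) {
    throw new Error("summarize needs at least one sample");
  }
  // Copy before sorting so the caller's array is left untouched.
  const sorted = [...samples].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  const median =
    sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
  return { min: sorted[0], median, max: sorted[sorted.length - 1] };
}

// Assumed usage inside the Worker handler (env and data come from my code above):
// const ms = await timeAsync(() => env.TRACKING_QUEUE.send(data));
// console.log(`saving to queue time is ${ms} ms`);
```

Logging a min/median/max over a batch of requests should make it clearer whether the 700ms readings are occasional outliers or the typical case.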