Hi team,

We're facing an interesting problem with Cloudflare Queues.

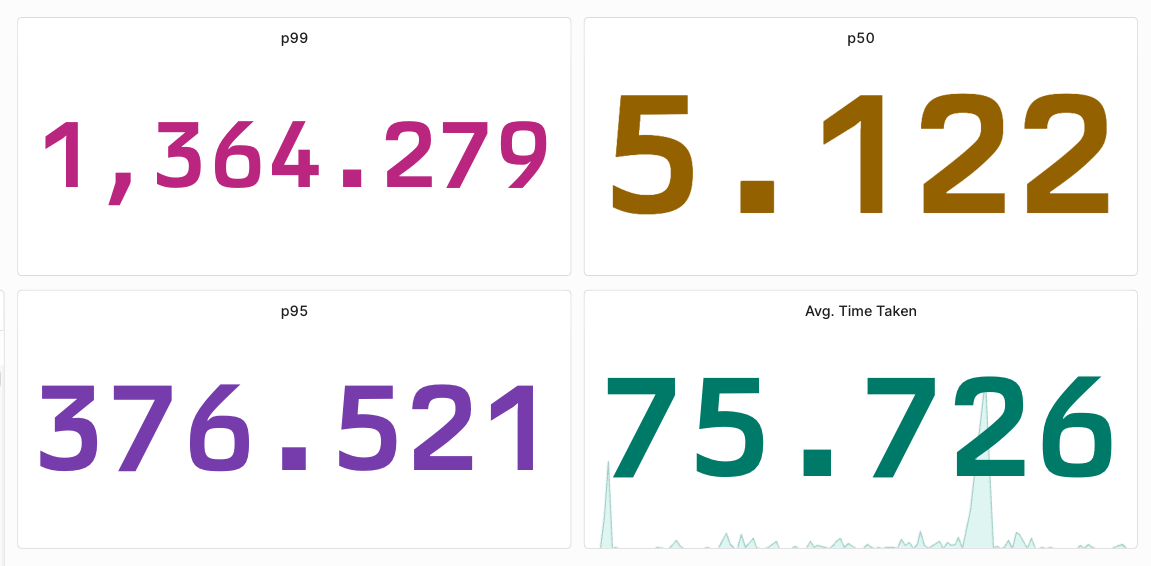

We're seeing wait times of up to 22 minutes before the queue delivers a message to a consumer.

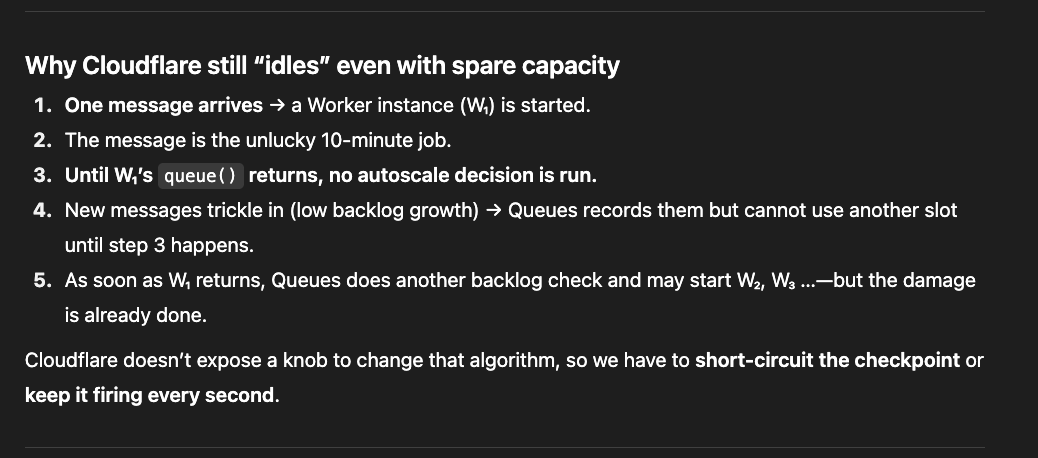
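To confirm where the time goes, a consumer can log how long each message sat in the queue before it was delivered. This is a minimal sketch, assuming the Workers Queues `Message` object exposes `timestamp` (a `Date` set when the message was sent). The small interfaces are local stand-ins for the real `@cloudflare/workers-types` definitions so the snippet runs on its own.

```typescript
// Local stand-ins for the Workers Queues types (the real definitions come from
// @cloudflare/workers-types); only the fields used here are declared.
interface QueueMessage<T> {
  readonly id: string;
  readonly timestamp: Date; // when the message was sent to the queue
  readonly body: T;
}

interface QueueBatch<T> {
  readonly messages: readonly QueueMessage<T>[];
}

// Milliseconds each message spent in the queue before this delivery,
// measured against the consumer's clock at `now`.
function queueWaitTimes<T>(
  batch: QueueBatch<T>,
  now: number = Date.now(),
): { id: string; waitMs: number }[] {
  return batch.messages.map((msg) => ({
    id: msg.id,
    waitMs: now - msg.timestamp.getTime(),
  }));
}

// e.g. inside the consumer's queue() handler:
// for (const w of queueWaitTimes(batch)) console.log(`${w.id} waited ${Math.round(w.waitMs / 1000)}s`);
```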

Each job can take around 5 minutes, and the Queues scaling algorithm waits for a batch to finish before deciding, based on the backlog and its growth rate, whether to scale up more consumers.
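The effect described here can be illustrated with a deliberately simplified toy model. It is hypothetical and not Cloudflare's actual autoscaler: all messages are enqueued at once, each consumer handles one message per round, a round lasts one job duration, and the consumer count is only re-evaluated, via a caller-supplied `grow` function, when a round ends.

```typescript
// Toy model only: one message per consumer per round, every round lasting
// `jobMinutes`, and the consumer count changing only between rounds via `grow`.
// Returns the minute at which the last message in the backlog starts processing.
function minutesUntilLastMessageStarts(
  backlog: number,
  jobMinutes: number,
  grow: (consumers: number) => number,
): number {
  let consumers = 1;
  let remaining = backlog;
  let elapsed = 0;
  while (remaining > consumers) {
    remaining -= consumers; // this round's messages start now
    elapsed += jobMinutes; // the next scaling decision waits for the round to finish
    consumers = Math.max(1, grow(consumers));
  }
  return elapsed; // the last message starts in the current round
}
```

Under these assumptions, a backlog of 15 five-minute jobs with one consumer added per round leaves the last message waiting 20 minutes, and doubling consumers per round only brings that down to 15. The exact numbers mean little; the point is that every scaling step costs a full job duration.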

This is our current config:

I discussed it with ChatGPT, and I thought that calling `waitUntil` together with the `queue_consumer_no_wait_for_wait_until` flag would let the queue's scaling algorithm check the backlog sooner and add more consumers. But in the consumer logs, every queue invocation shows a maximum duration of ~30s, and the job never completes, so the consumer is probably being killed for some reason, even when we have
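The approach described above, handing each job to `waitUntil` so the handler returns right away, looks roughly like the sketch below. It is a minimal illustration under assumptions: the interfaces are local stand-ins for the Workers types, and `Job` and `processJob` are hypothetical placeholders. One thing worth checking against the Queues documentation: messages that are neither acked nor retried are normally acknowledged implicitly once the invocation finishes, so if the flag lets the invocation finish before the deferred work does, a job that is later cut off may not be redelivered.

```typescript
// Local stand-ins for the Workers types (real ones: @cloudflare/workers-types).
interface QueueMessage<T> {
  readonly id: string;
  readonly body: T;
  ack(): void;
  retry(): void;
}

interface QueueBatch<T> {
  readonly messages: readonly QueueMessage<T>[];
}

interface WaitUntilContext {
  waitUntil(promise: Promise<unknown>): void;
}

// Hypothetical message payload.
type Job = { jobId: string };

// Hypothetical placeholder for the real ~5-minute job.
async function processJob(job: Job): Promise<void> {
  console.log(`processing ${job.jobId}`);
}

// Returns as soon as every job has been handed to waitUntil; each message is
// acked or retried only when its deferred job settles.
async function queueHandler(
  batch: QueueBatch<Job>,
  ctx: WaitUntilContext,
  run: (job: Job) => Promise<void> = processJob,
): Promise<void> {
  for (const msg of batch.messages) {
    ctx.waitUntil(
      run(msg.body).then(
        () => msg.ack(),
        () => msg.retry(),
      ),
    );
  }
}
```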

Any help is appreciated.

As a last resort, I'm thinking of moving to Upstash Queue + Workers, so that the consumer Worker is called immediately with each message via an HTTP request and consumers scale with the number of incoming messages.
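For comparison, the push model described above can be sketched as a Worker `fetch` handler that processes one message per HTTP request. This is a minimal sketch under assumptions: the `{ jobId }` payload, `processJob`, and the status-code convention are hypothetical, and it does not use any Upstash SDK or verify the sender's request signature, which a real deployment would need.

```typescript
// Hypothetical message payload.
type Job = { jobId: string };

// Hypothetical placeholder for the real ~5-minute job.
async function processJob(job: Job): Promise<void> {
  console.log(`processing ${job.jobId}`);
}

// Push-style consumer: each message arrives as its own HTTP POST, so the number of
// concurrent invocations follows the incoming request rate rather than batch boundaries.
// Verifying the sender's request signature is deliberately left out of this sketch.
async function handlePush(
  request: Request,
  run: (job: Job) => Promise<void> = processJob,
): Promise<Response> {
  if (request.method !== "POST") {
    return new Response("method not allowed", { status: 405 });
  }
  let job: Job;
  try {
    job = (await request.json()) as Job;
  } catch {
    return new Response("invalid JSON", { status: 400 });
  }
  if (!job || typeof job.jobId !== "string") {
    return new Response("missing jobId", { status: 400 });
  }
  try {
    await run(job);
    return new Response("ok", { status: 200 });
  } catch {
    // A non-2xx status signals a failed delivery to the pushing service.
    return new Response("job failed", { status: 500 });
  }
}
```

In a Worker this would be exposed as `export default { fetch: (request: Request) => handlePush(request) };`. A single HTTP request that runs for ~5 minutes is also subject to the Worker's own request limits, which are worth checking before committing to this design.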