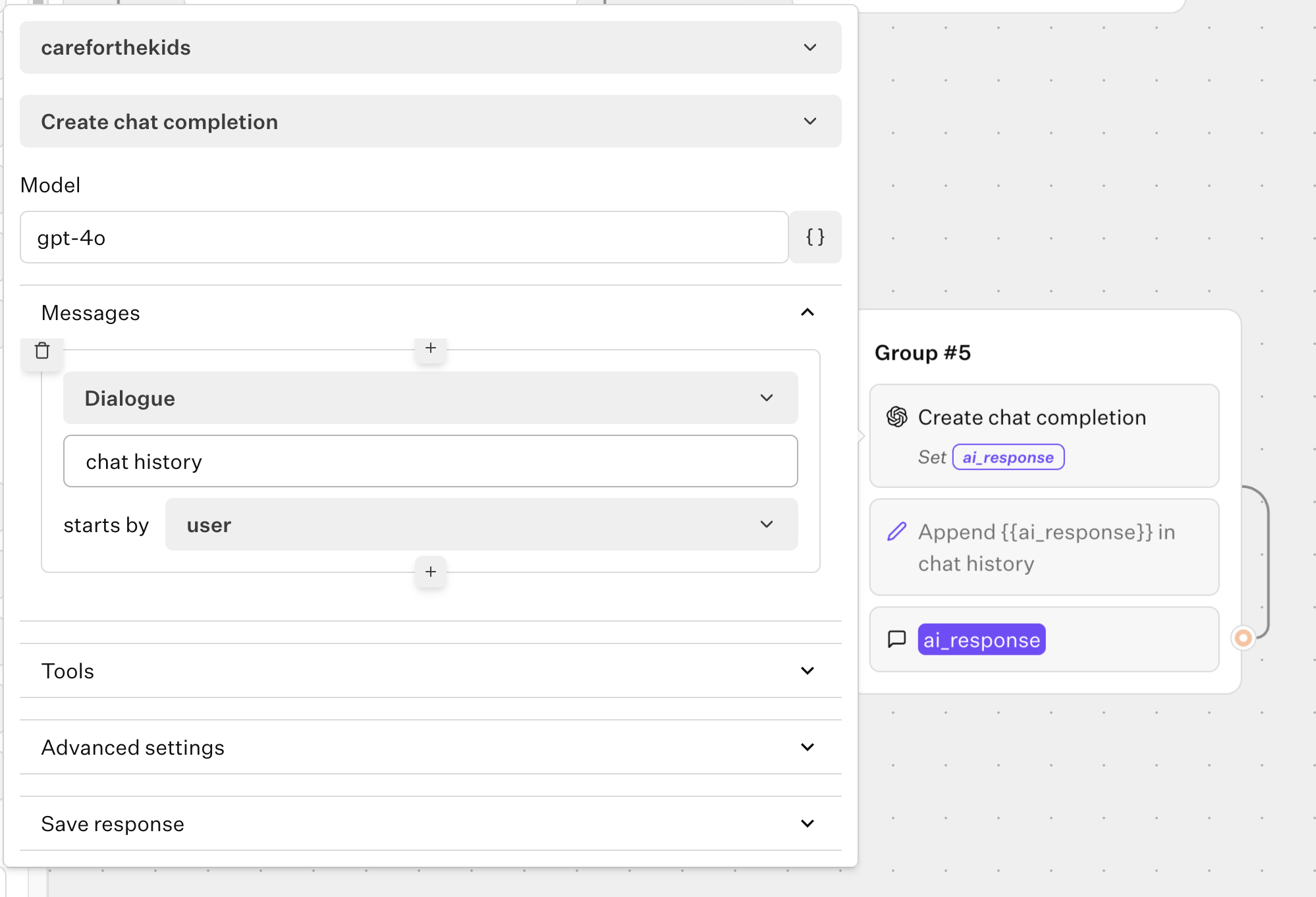

Dialog not working

Is it possible that the dialog option is no longer working? I created a very simple flow following https://www.loom.com/share/df5d64dd01ca47daa5b7acd18b05a725?sid=8dab03d1-f4c2-45eb-8dd6-aaf5b08ef16b and it doesn't seem to be working. I am, however, churning through a ton of tokens.

One thing I noticed is that the logs are not showing up in OpenAI.

This is what OpenAI says about that:

I checked Typebot’s documentation and changelog, and here’s what I found:

• The OpenAI block in Typebot uses the standard chat completion endpoint (/v1/chat/completions) and doesn’t support the newer Responses API endpoint (/v1/responses).

• The Typebot changelog notes a fix related to custom base URLs for model fetching, but it doesn’t mention support for the Responses API.

⸻

Can you simply change the Base URL?

Unlikely. While Typebot lets you specify a custom base URL, it generally expects to build requests using the old API path. Changing the base URL to /v1/responses is unlikely to work unless Typebot also adapts the request payload format — which it currently doesn’t.
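To make the base URL point concrete, here is a minimal sketch of how many OpenAI-compatible clients build the request URL: they append a fixed endpoint path to whatever base URL is configured. Whether Typebot does exactly this is an assumption, and `build_request_url` is a hypothetical helper, not part of Typebot or the OpenAI SDK.

```python
def build_request_url(base_url: str, path: str = "/chat/completions") -> str:
    """Join a base URL and a fixed endpoint path (assumed client behaviour)."""
    return base_url.rstrip("/") + path


# Default base URL: the request goes to the Chat Completions endpoint.
default_url = build_request_url("https://api.openai.com/v1")

# Base URL overridden to end in /responses: the fixed path is still appended,
# so the result is not the Responses endpoint at all.
overridden_url = build_request_url("https://api.openai.com/v1/responses")

print(default_url)     # https://api.openai.com/v1/chat/completions
print(overridden_url)  # https://api.openai.com/v1/responses/chat/completions
```

Under that assumption, the override produces a path that OpenAI does not serve, and even with a correct URL the request body would still be in the Chat Completions format.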

⸻

What are your options?

1. Wait for Typebot to officially support the Responses API — keep an eye on their changelog or documentation updates.

2. Use a proxy server — you can route your Typebot requests through a small intermediary service that:

• Receives requests from Typebot to /chat/completions

• Forwards them to OpenAI’s /v1/responses endpoint

• Returns the response back to Typebot

This setup lets you populate the Logs tab via the Responses API without waiting for Typebot to natively support it.

3. Track requests yourself — log request and response data at your application level if that meets your needs.
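For option 2, the core of such a proxy is two translation steps: turning the Chat Completions request into a Responses request, and turning the Responses reply back into the shape Typebot expects. The sketch below covers only those two steps as plain functions, with no HTTP server, authentication, or streaming support. The field names reflect my understanding of the two public API formats and should be checked against OpenAI's API reference; the function names and sample payloads are hypothetical.

```python
from typing import Any


def to_responses_request(chat_body: dict[str, Any]) -> dict[str, Any]:
    """Translate a Chat Completions request body into a Responses request body."""
    body: dict[str, Any] = {
        "model": chat_body["model"],
        # The Responses API takes the role/content message list as "input".
        "input": chat_body["messages"],
    }
    # Assumed mapping: max_tokens becomes max_output_tokens.
    if "max_tokens" in chat_body:
        body["max_output_tokens"] = chat_body["max_tokens"]
    if "temperature" in chat_body:
        body["temperature"] = chat_body["temperature"]
    return body


def to_chat_completion(resp: dict[str, Any]) -> dict[str, Any]:
    """Translate a Responses reply into a Chat Completions style reply."""
    # Concatenate every output_text part found in assistant message items.
    text = "".join(
        part.get("text", "")
        for item in resp.get("output", [])
        if item.get("type") == "message"
        for part in item.get("content", [])
        if part.get("type") == "output_text"
    )
    usage = resp.get("usage", {})
    return {
        "id": resp.get("id"),
        "object": "chat.completion",
        "created": resp.get("created_at"),
        "model": resp.get("model"),
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": text},
                "finish_reason": "stop",  # simplification: always "stop"
            }
        ],
        "usage": {
            "prompt_tokens": usage.get("input_tokens", 0),
            "completion_tokens": usage.get("output_tokens", 0),
            "total_tokens": usage.get("total_tokens", 0),
        },
    }


# Hypothetical payloads, for illustration only.
chat_request = {
    "model": "gpt-4o-mini",
    "messages": [{"role": "user", "content": "Hello"}],
    "max_tokens": 50,
}
sample_reply = {
    "id": "resp_123",
    "created_at": 1700000000,
    "model": "gpt-4o-mini",
    "output": [
        {
            "type": "message",
            "role": "assistant",
            "content": [{"type": "output_text", "text": "Hi there!"}],
        }
    ],
    "usage": {"input_tokens": 8, "output_tokens": 3, "total_tokens": 11},
}

responses_request = to_responses_request(chat_request)
chat_reply = to_chat_completion(sample_reply)
print(responses_request)
print(chat_reply["choices"][0]["message"]["content"])
```

A real proxy would wrap these functions in a small HTTP handler that Typebot's custom base URL points to, forward the translated body to /v1/responses with the API key, and return the translated reply. If the Typebot block streams its responses, the proxy would also have to translate the streaming events, which this sketch does not attempt.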

Loom

In this video, I explain the deprecation of the message sequence option in the Open AI block and introduce the new dialogue option in Typebot. I discuss how the dialogue option is more flexible and less magical than the previous option. I provide a step-by-step explanation of how to use the new dialogue option, including creating a chat history ...