Memory leak?

Multiplayer, 1.21.8

"DistantHorizons-2.3.4-b-1.21.8-fabric-neoforge.jar"

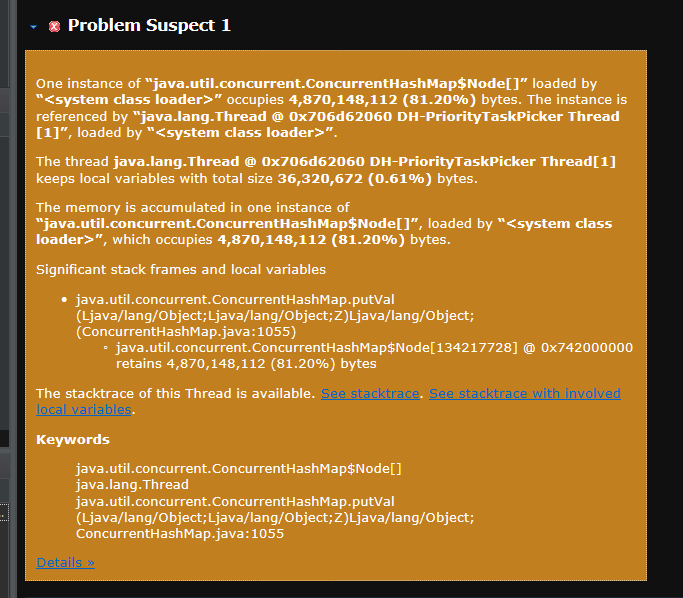

Hi, I'm not an expert at debugging memory issues. About all I know how to do is run the heap dump command and load the result into a tool like Eclipse Memory Analyzer.

I recently ran into a memory leak after upgrading from 1.21.5 -> 1.21.8. On 1.21.5 I had no memory issues, but I did a clean install of Windows right before upgrading to 1.21.8, and I have not tested whether the issue also shows up on 1.21.5 with a clean install.

I ran `/sparkc heapdump` to capture a heap dump.

I disabled the mod (by removing it; just disabling rendering did nothing) and now Minecraft runs smoothly without any memory leak. Could other mods be interfering with DH, or is this a DH issue?