Streaming reasoning w/ 0.14.1 and ai v5 SDK

I'm using o3 and added

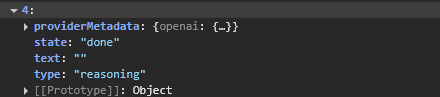

See the screenshot for the in-browser console of the part for reasoning.

I did see this post, but it makes it seem the issue is resolved.

help-bugs-problemsReasoning part not saved in Mastra Memory message history?

sendReasoning: true to the toUIMessageStreamResponse and do not get any actual reasoning text.See the screenshot for the in-browser console of the part for reasoning.
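
To make the symptom easier to check than by reading the console, here is a minimal sketch that lists the reasoning parts of a message whose text is empty. It assumes the parts look roughly like `{ type: "reasoning", text: "..." }`. The `Part` type, the `emptyReasoningParts` helper, and the sample data are all hypothetical and defined locally; none of it is taken from the screenshot or from any library.

```typescript
// Hypothetical minimal part shape; only the fields used here are modeled.
type Part = { type: string; text?: string };

// Returns the reasoning parts whose text is missing or only whitespace.
function emptyReasoningParts(parts: Part[]): Part[] {
  return parts.filter(
    (p) => p.type === "reasoning" && (p.text ?? "").trim() === ""
  );
}

// Made-up message parts for illustration only.
const sampleParts: Part[] = [
  { type: "reasoning", text: "" },
  { type: "text", text: "Hello" },
];

// Logs how many reasoning parts arrived without any text.
console.log(emptyReasoningParts(sampleParts).length);
```

If this reports a non-zero count for a real message, the reasoning part is reaching the client but its text is empty, which matches what I'm seeing.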