Timeout errors

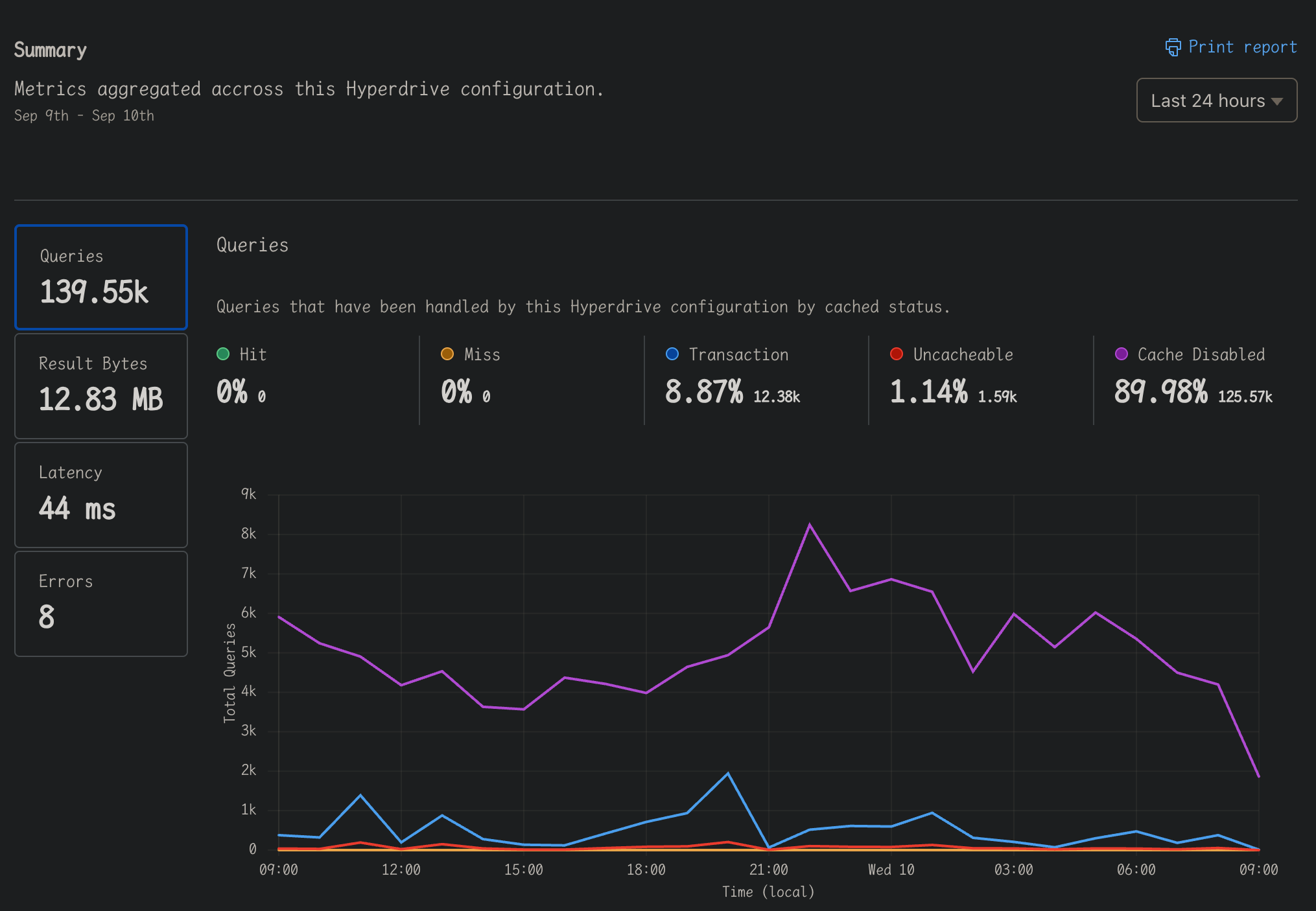

Additionally, I used node-postgres but disabled Query caching in Hyperdrive, not sure if this has any impact.


Moving to a thread

So, I have both Hyperdrive IDs. It sounds like the error rate for @max is about 0.5%, which is far too high. I expect that the retries in our next release should drive that down substantially.
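For anyone following along: the retries mentioned here happen inside Hyperdrive, and their implementation is not shown in this thread. As a rough client-side illustration of the same idea, here is a minimal sketch of retrying a failed operation with capped exponential backoff. The names `backoffDelayMs` and `withRetry`, and the default delays, are hypothetical and not part of Hyperdrive or node-postgres.

```typescript
// Hypothetical helpers for illustration only; not a Hyperdrive or node-postgres API.

// Delay before retry number `attempt` (0-based): baseMs * 2^attempt, capped at capMs.
function backoffDelayMs(attempt: number, baseMs = 50, capMs = 1000): number {
  return Math.min(capMs, baseMs * 2 ** attempt);
}

const sleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Runs `op`, retrying up to `maxRetries` times while `shouldRetry`
// reports the error as transient. Rethrows the last error otherwise.
async function withRetry<T>(
  op: () => Promise<T>,
  shouldRetry: (err: unknown) => boolean,
  maxRetries = 3,
): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await op();
    } catch (err) {
      if (attempt >= maxRetries || !shouldRetry(err)) throw err;
      await sleep(backoffDelayMs(attempt));
    }
  }
}
```

A wrapper like this is only safe around idempotent queries, since a statement may already have run on the database before the connection dropped.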

@rxliuli has issues that span a range of error messages, but all seem to route back to connections getting stuck, causing the pool to be saturated with half-open unusable connections at times

This is across both our supported JS drivers, so is not strictly driver-specific

That all sound correct?

Yes.

Sounds right to me.

I've done some work to reduce load from our app (though we're scaling up, so what my code giveth, company growth taketh away).

Hopefully will reduce significantly when we do some releases next week too.

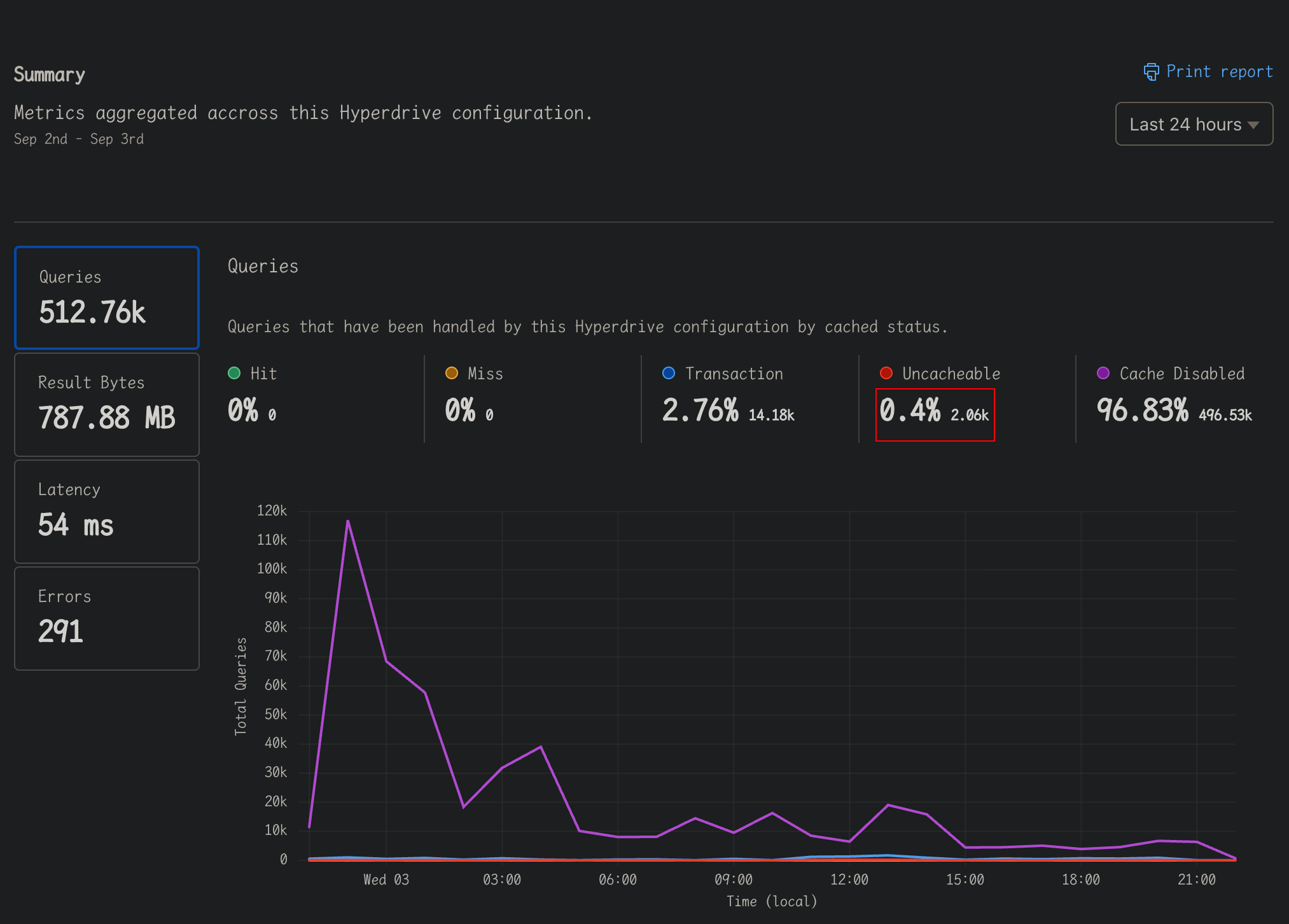

Additionally, my error rate is also at 0.4%.

@rxliuli what's your observed error rate, give or take?

Okay. Also way too high. Our goal is to keep these at 0.01% or lower.

Thanks, folks. I've pinged in my PM, and we'll be focusing on addressing this with our next release. We've got some work already done, and will continue pushing on this until our stability is back where we want it.

We appreciate you letting us know, and your understanding.

No worries. Thanks for looking into it.

Do you have a rough ETA for your next release, or is it hard to say?

Hard to say, but the goal is next week.

Thanks. Will keep trying to make improvements on my end in the meantime. 🙂

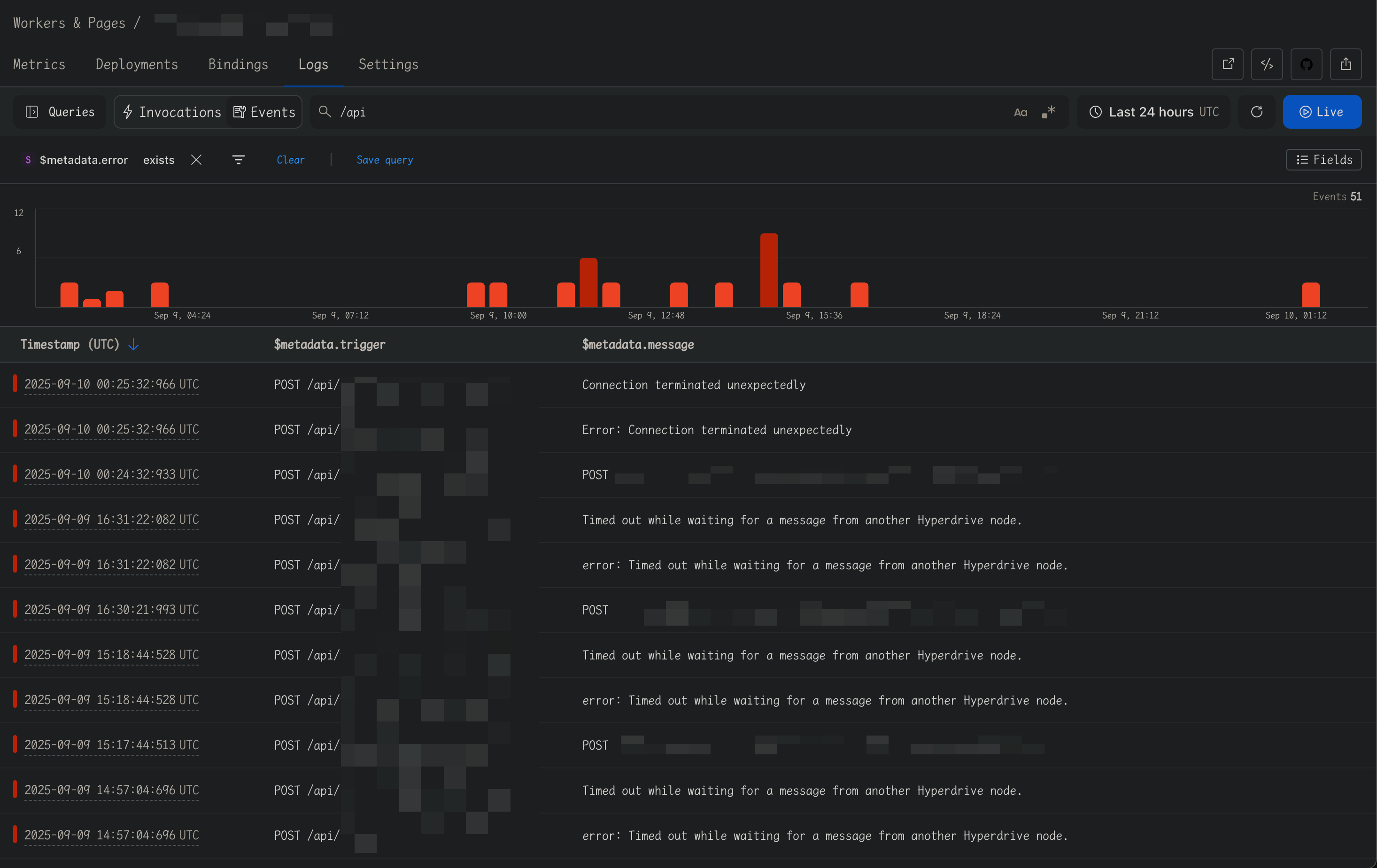

@AJR I just encountered another different error message while importing a large amount of data, just wanted to let you know.

remaining connection slots are reserved for roles with the SUPERUSER attribute -- (new error)

Timed out while waiting for an open slot in the pool.

Timed out while waiting for a message from another Hyperdrive node.

Connection terminated unexpectedly

Okay, so you're definitely using up all the connections in your pool. That error is just passed back from your database; it's not one Hyperdrive generates.
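To tell this database-side error apart from the other messages in logs, a small check like the one below could help. It is a sketch under assumptions: `isConnectionLimitError` and `ErrorLike` are hypothetical names, and whether the SQLSTATE `code` field survives the trip through Hyperdrive is not confirmed in this thread, so the function also falls back to matching the message text.

```typescript
// Only the fields we inspect. node-postgres server errors carry `message`
// and a SQLSTATE in `code`; that `code` is passed through here is an assumption.
interface ErrorLike {
  message?: string;
  code?: string;
}

// PostgreSQL SQLSTATE for too_many_connections.
const TOO_MANY_CONNECTIONS = "53300";

// True when the error looks like the database refusing a new connection
// because its connection slots are exhausted.
function isConnectionLimitError(err: ErrorLike): boolean {
  if (err.code === TOO_MANY_CONNECTIONS) return true;
  return (err.message ?? "").includes("remaining connection slots are reserved");
}
```

Counting these separately from the timeout messages shows whether the database's connection limit or something upstream of it is the bottleneck.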

I have just upgraded the database memory and will continue monitoring for a while to see if this error still occurs.

Memory may or may not be the limiting factor. Most hosting providers let you set maximum connections explicitly, and that is the setting you are bumping into with that error message.
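One way to see how close the database is to that limit is to compare `max_connections` with the number of sessions in `pg_stat_activity`. The sketch below assumes a direct connection to the database (not through Hyperdrive) and a client with a node-postgres-style `query` method; `Queryable`, `connectionHeadroom`, and `checkConnections` are hypothetical names.

```typescript
// Minimal structural type, intended to be compatible with a node-postgres Client or Pool.
interface Queryable {
  query(text: string): Promise<{ rows: any[] }>;
}

interface Headroom {
  max: number;
  inUse: number;
  free: number;
  percentUsed: number;
}

// Pure arithmetic, kept separate so it can be checked without a database.
function connectionHeadroom(max: number, inUse: number): Headroom {
  return {
    max,
    inUse,
    free: Math.max(0, max - inUse),
    percentUsed: (inUse * 100) / max,
  };
}

async function checkConnections(db: Queryable): Promise<Headroom> {
  // SHOW returns the setting as text in a column named after the setting.
  const maxRes = await db.query("SHOW max_connections");
  // Counts every row, including background processes, so this slightly overcounts.
  const usedRes = await db.query(
    "SELECT count(*)::int AS in_use FROM pg_stat_activity",
  );
  return connectionHeadroom(
    Number(maxRes.rows[0].max_connections),
    usedRes.rows[0].in_use,
  );
}
```

Note that PostgreSQL holds back a few slots for superusers (`superuser_reserved_connections`, default 3), so ordinary roles hit the error shortly before `free` reaches zero.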

The release with handling for severed connections is now out. Please monitor your error rates for a bit, and let me know if they don't drop.

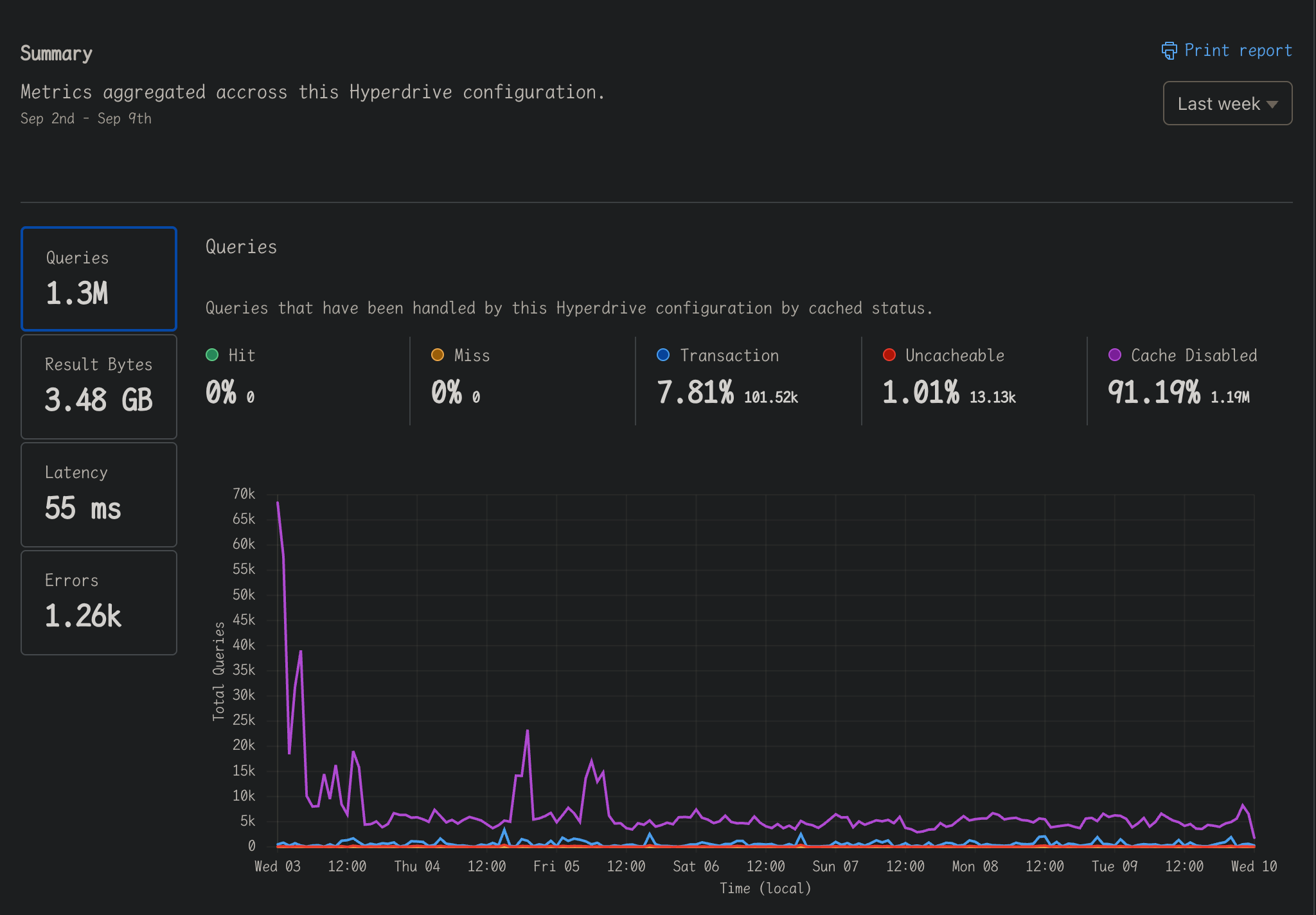

Since the last report, the error rate increased last week. I will continue to monitor it for a while.

It has not decreased since yesterday?

On the contrary, it has actually increased.

Okay, I'm escalating this to our leadership. This isn't a good state.

cc @thomasgauvin

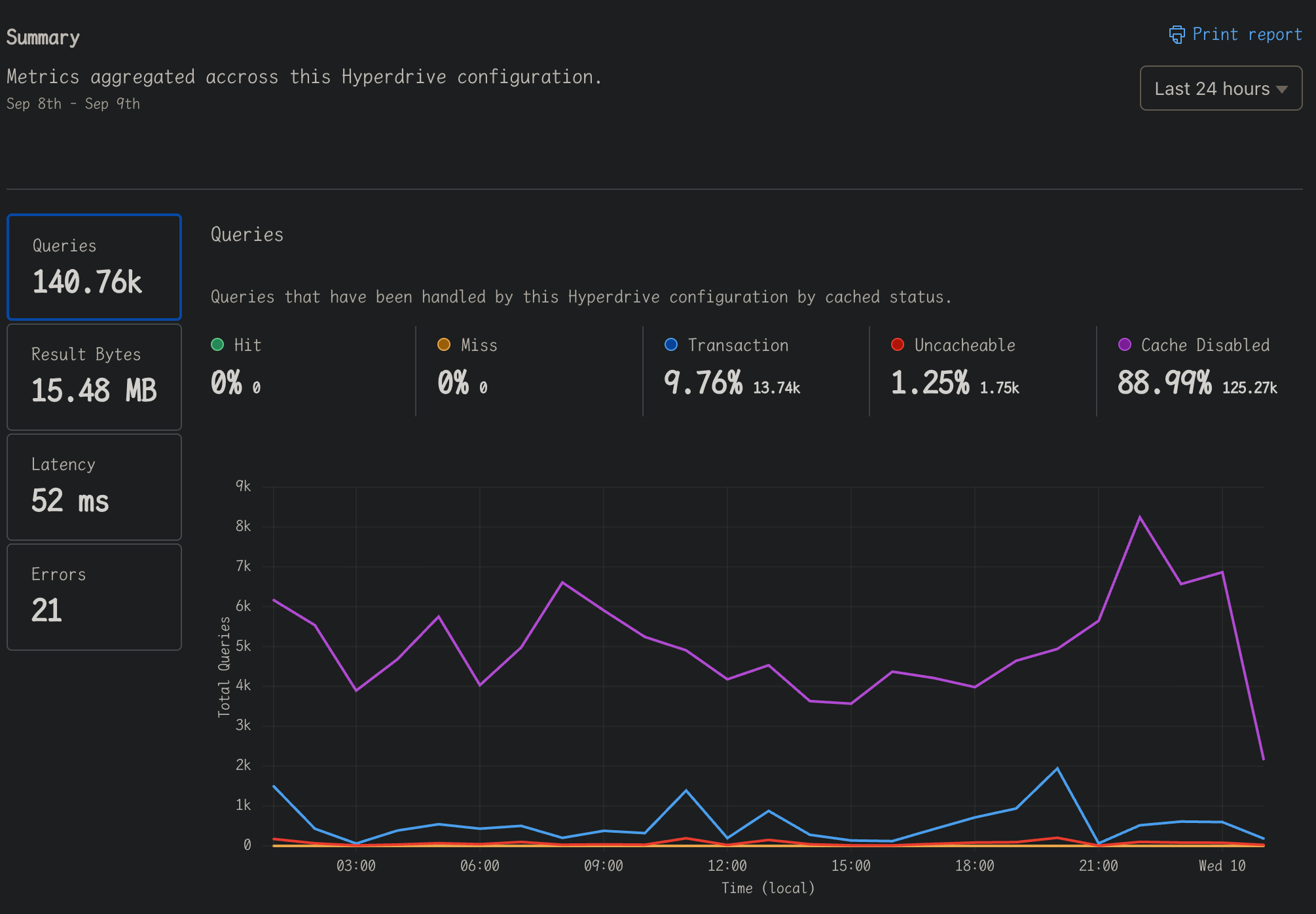

@rxliuli can you share your error metrics page?

Is this it?

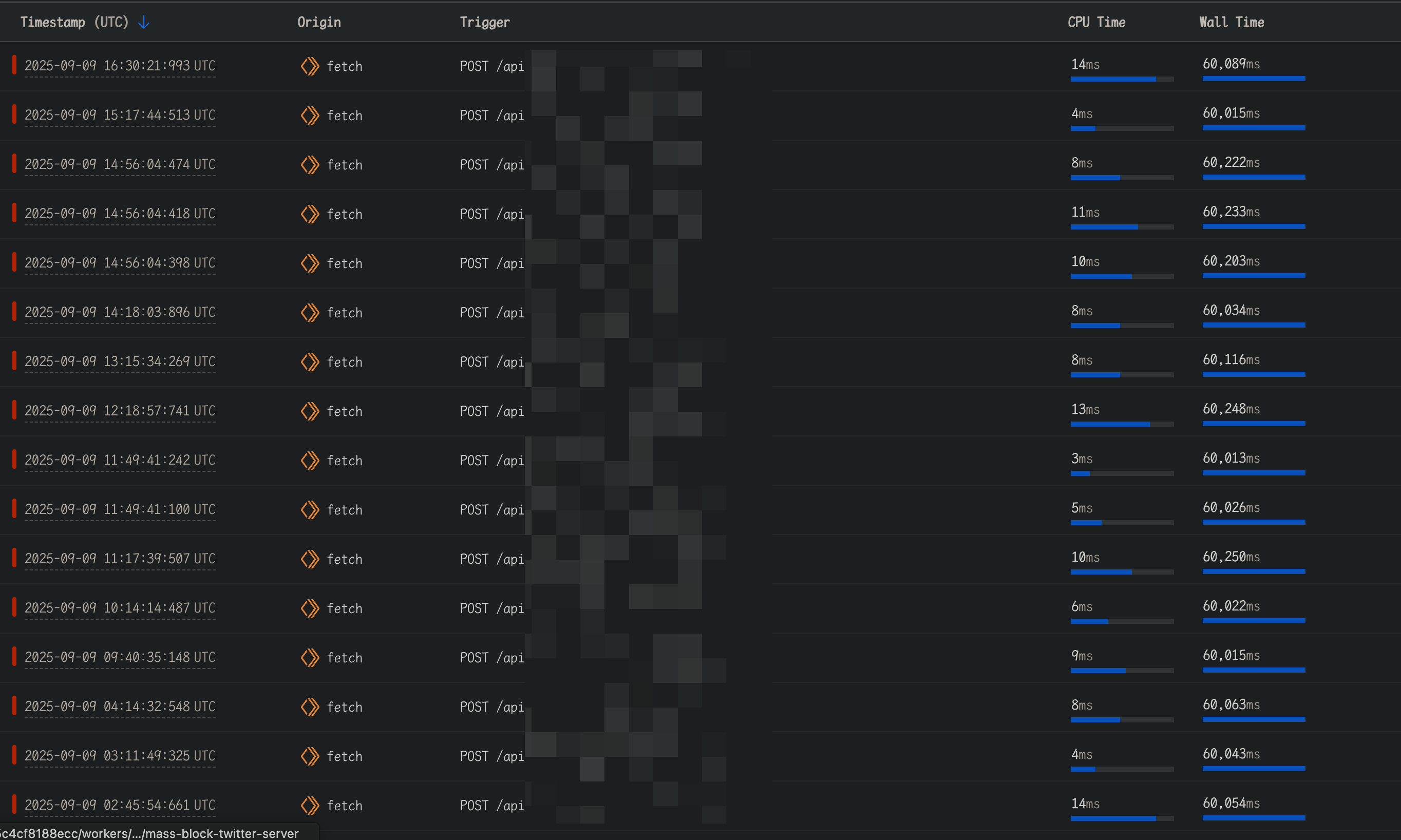

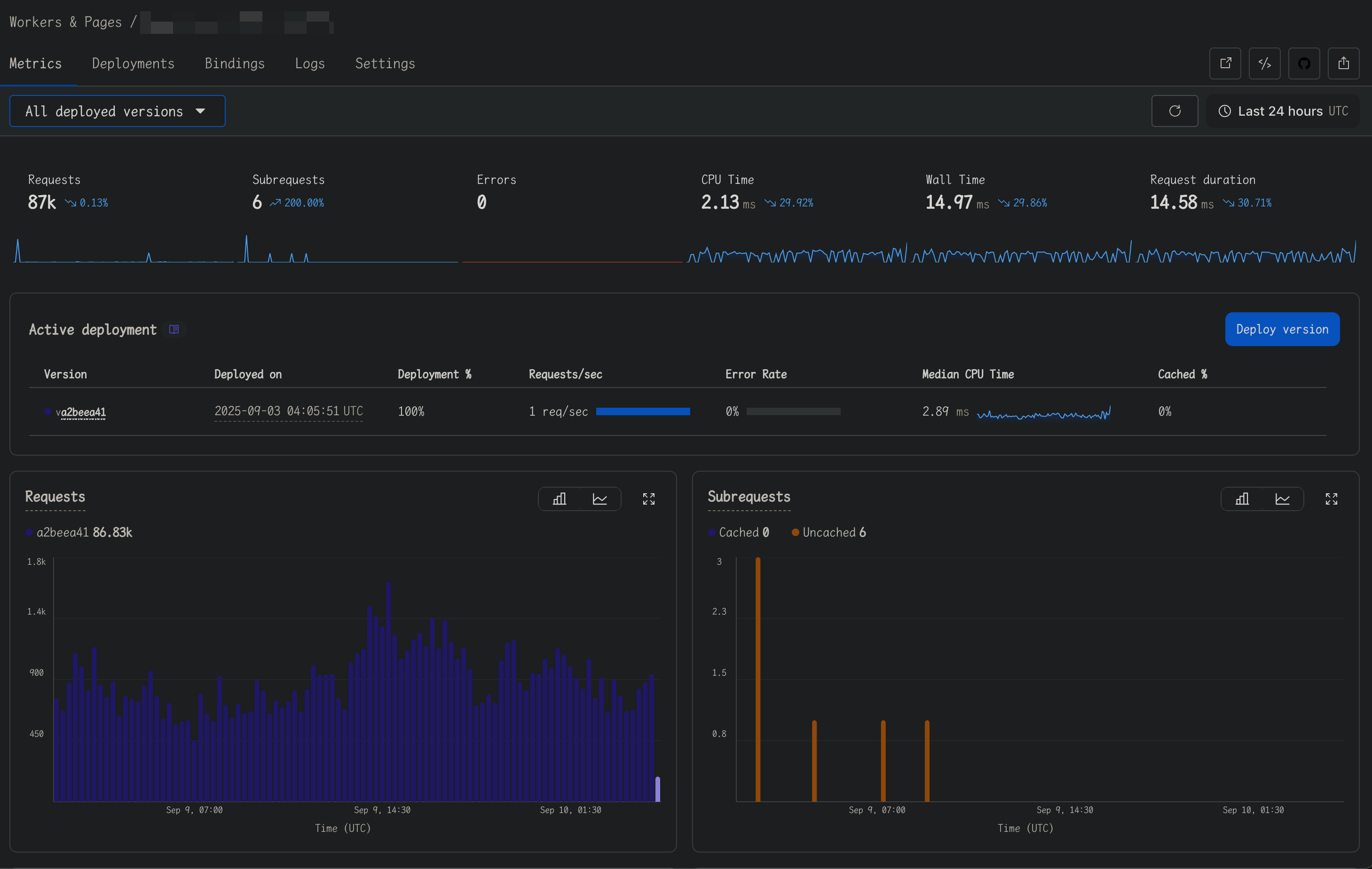

@thomasgauvin If you're asking about the Workers > Metrics page, it has some issues - it doesn't track errors, but I can see the error logs in Workers > Logs.

You can click on errors on the left of the Hyperdrive metrics to see more of the errors (with graph)

Sorry, it looks like the problem has eased quite a bit. I read it wrong.

Do I correctly understand, then, that this response was incorrect and instead the error rate did drop post the last release?

Yep.

Love to hear that. I was really trying to get my head around what was left that we'd missed.

Thank you for bearing with us on this