Echo when implementing the Membrane OpenAI example in Phoenix LiveView

Hi there!

I tried to implement the OpenAI realtime example available here and integrate it into a LiveView example app.

To integrate Membrane into LiveView, I followed this example: https://github.com/membraneframework/membrane_demo/tree/master/webrtc_live_view

In my implementation, the audio is captured and sent to OpenAI, and I do receive the response. The problem is that, together with the response, I also hear all the audio that mediaCapture sent as input (i.e. an echo).

Do you know what could cause this issue?

this is the liveview implementation: pento/lib/pento_web/live/call.ex at main · tomashco/pento · GitHub

this is the pipeline that gets called: https://github.com/tomashco/pento/blob/main/lib/pento/call/pipeline.ex

this is the integration with the openai endpoint: pento/lib/openai_endpoint.ex at main · tomashco/pento · GitHub

this is the integration of the websocket: pento/lib/openai_websocket.ex at main · tomashco/pento · GitHub

I’m pretty sure the problem lies in the pipeline, but I don’t understand what is wrong.

Thank you to everyone who can help!

16 Replies

Hi @tomashco, briefly looking at the pipeline and liveview, they seem fine (except that you have both audio and video set to false in the capture https://github.com/tomashco/pento/blob/main/lib/pento_web/live/call.ex#L25)

what may happen is that the output from openAI is captured by your mic and sent again, but as I understand it's not the case

you can try separating the input and output and opening them on different devices, to make sure that something else isn't causing the echo

yes having both audio and video false in the capture is a typo, this is the actual configuration:

For the test I just did, I removed the audioPlayer in the render:

and I can still hear the echo. So I think it is the mediaCapture that plays the audio back?

Now you have preview? set to true, so it may be it 😉

unfortunately also disabling preview is the same. Should I try to check the implementation of the Membrane.WebRTC.Live.Capture.live_render component?

Please do, maybe disabling the preview doesn't work 🤔

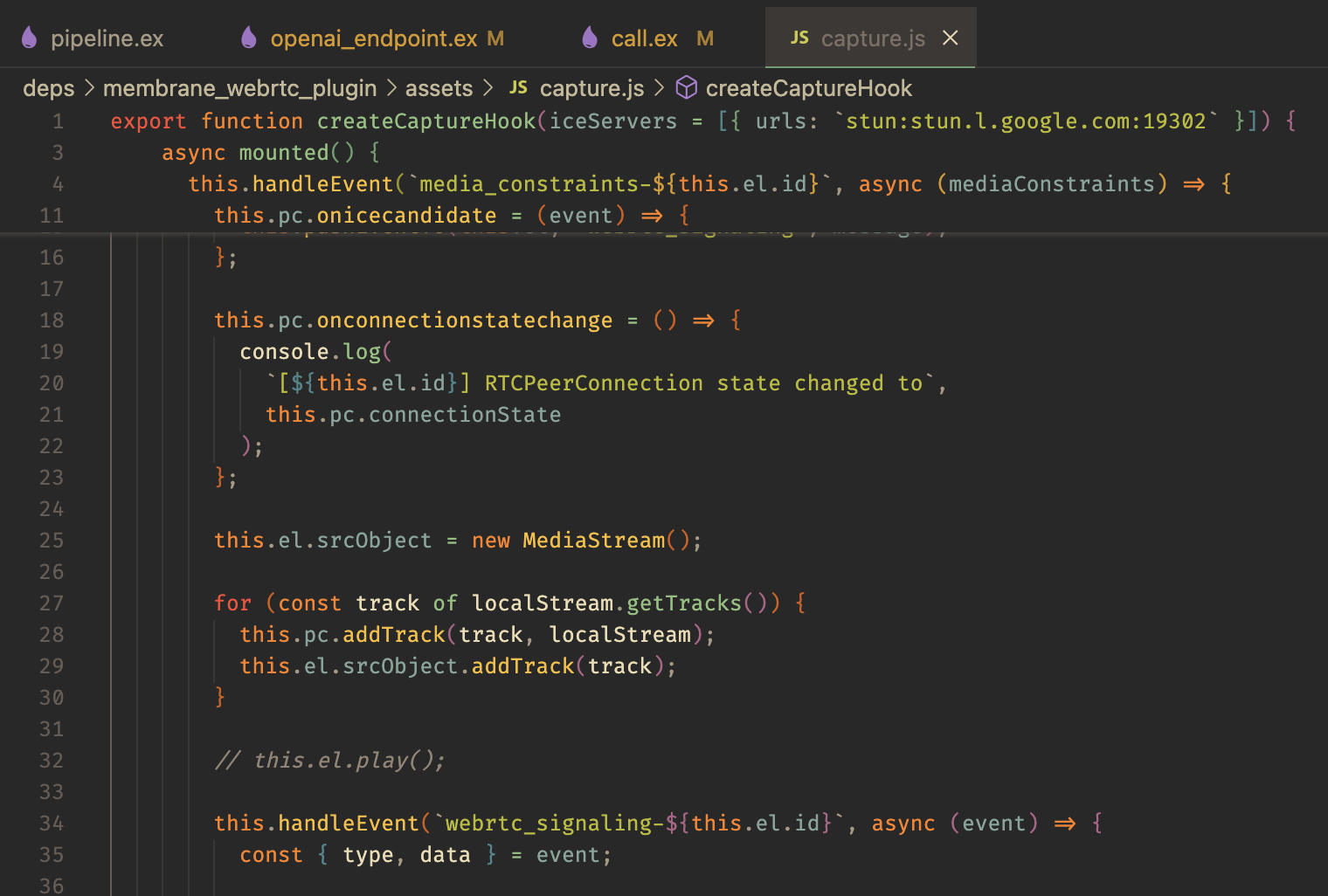

I probably found the error: in capture.js it starts playing by default. With this line commented out, I don't hear the echo anymore and it now works as expected!

great! we’ll look into it, cc @Feliks

may I ask you if you have any example showing STT and TTS made using membrane?

Here's an example of STT with Boombox: https://github.com/membraneframework/boombox/blob/master/examples.livemd#read-speech-audio-from-mp4-chunk-by-chunk-generate-transcription

you can wrap it into a membrane element too

super, I'll try that thank you!

Regarding TTS, I don't know any good OSS model

For TTS, you can check out Kokoro. I think there are better models now but maybe a good starting point: https://github.com/samrat/kokoro/blob/main/kokoro_player.livemd

@tomashco what browser did you use? Do you have a demo that I could use to reproduce your issue without needing an OPENAI_API_KEY?

I have created a PR that is supposed to fix your issue, but when I run our demo I cannot hear any echo, so it is hard for me to test it

https://github.com/membraneframework/membrane_webrtc_plugin/pull/31

hi @Feliks I just pushed a commit in which I commented out the live audioPlayer and the part of the pipeline that calls OpenAI.

It is true that the pipeline now sends back what it hears, but I don't mount the audioPlayer component in the page, so that shouldn't be a problem

https://github.com/tomashco/pento/commit/c24df8c17825bb405c23ee57cbf65f36c35fe2ae

I also retested uncommenting the line

// this.el.play();

and I can hear the echo again. I stop hearing it the moment I comment the line out again
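To make it clearer what I mean, here is a minimal sketch of the kind of guard I would expect around that call. This is not the actual capture.js code and I have not checked what the PR does: attachStream and the preview option are hypothetical names, and el stands for the media element the hook is mounted on.

```javascript
// Hypothetical helper, NOT the real capture.js from membrane_webrtc_plugin.
// Attaches a captured MediaStream to a media element and starts local
// playback only when a preview was explicitly requested.
function attachStream(el, stream, opts = {}) {
  const preview = opts.preview === true;
  el.srcObject = stream;
  // A muted local preview keeps the microphone track out of the speakers,
  // so the user does not hear their own voice.
  el.muted = true;
  if (preview) {
    // play() returns a Promise in browsers; an autoplay rejection is ignored here.
    const result = el.play();
    if (result && typeof result.catch === "function") {
      result.catch(() => {});
    }
  }
  return preview;
}
```

With something like this, attachStream(el, stream, { preview: false }) never calls play(), which matches what I get by commenting the line out.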

I'm using Firefox 142.0.1 (aarch64) but I've the same problem on Chrome Version 139.0.7258.155 (Official Build) (arm64)

I'm using a MacBook Air M3

let me know if I can be of any help!

thank you!