Compute Exhausted Before Official Launch

Hi Neon Team,

We have built an application with two initial customers signed up. For now, there is only development traffic and some test traffic to the website (it's a Next.js app).

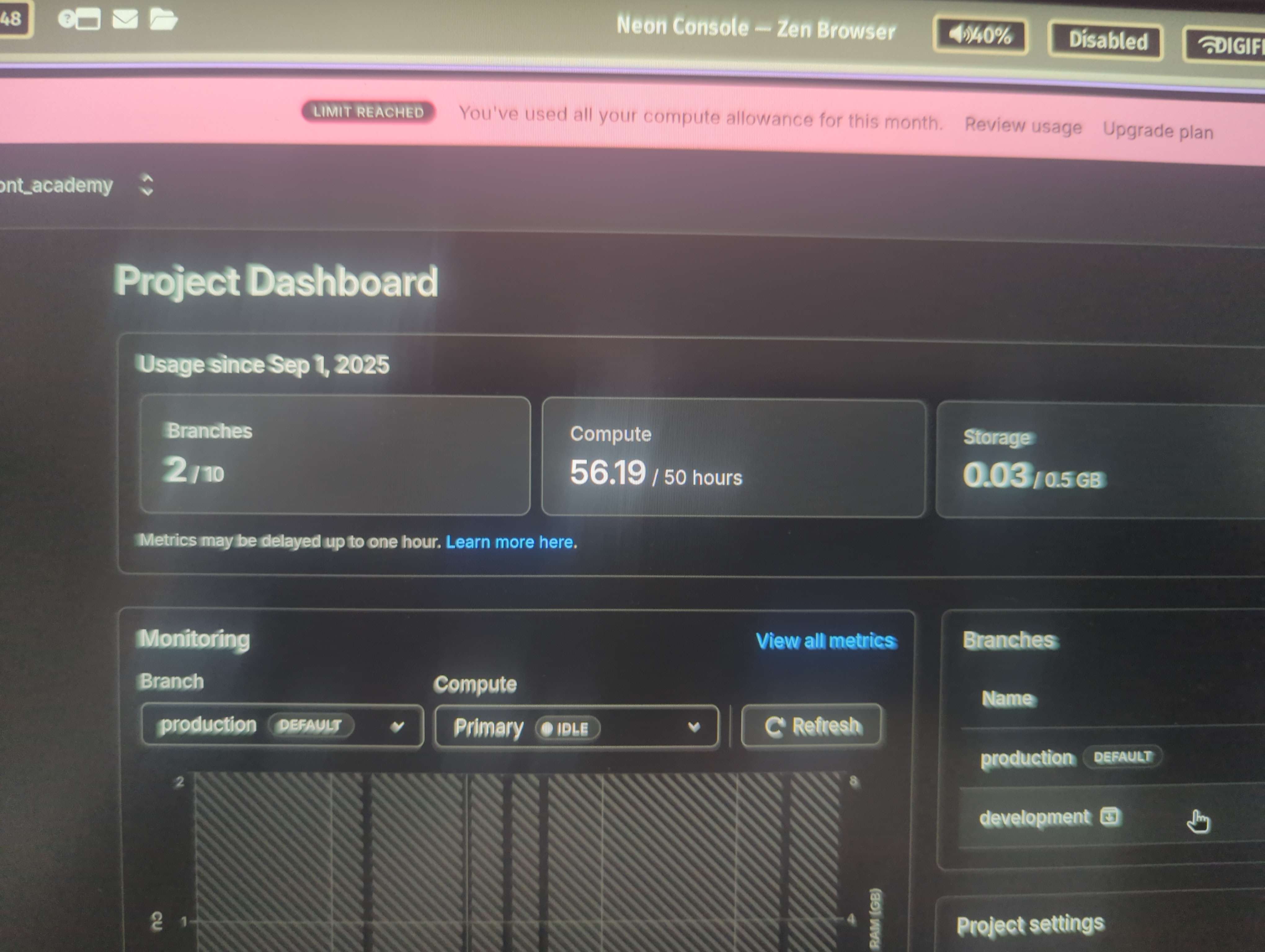

I just got a notification that we surpassed the 50 hours included in the free plan. This happened before we had any real customer traffic.

So I am wondering whether we are doing something wrong, and how all the compute could be consumed only 12 days into the month, without any material usage (real visitors) yet.

Thank you in advance for the help.

--

EDIT:

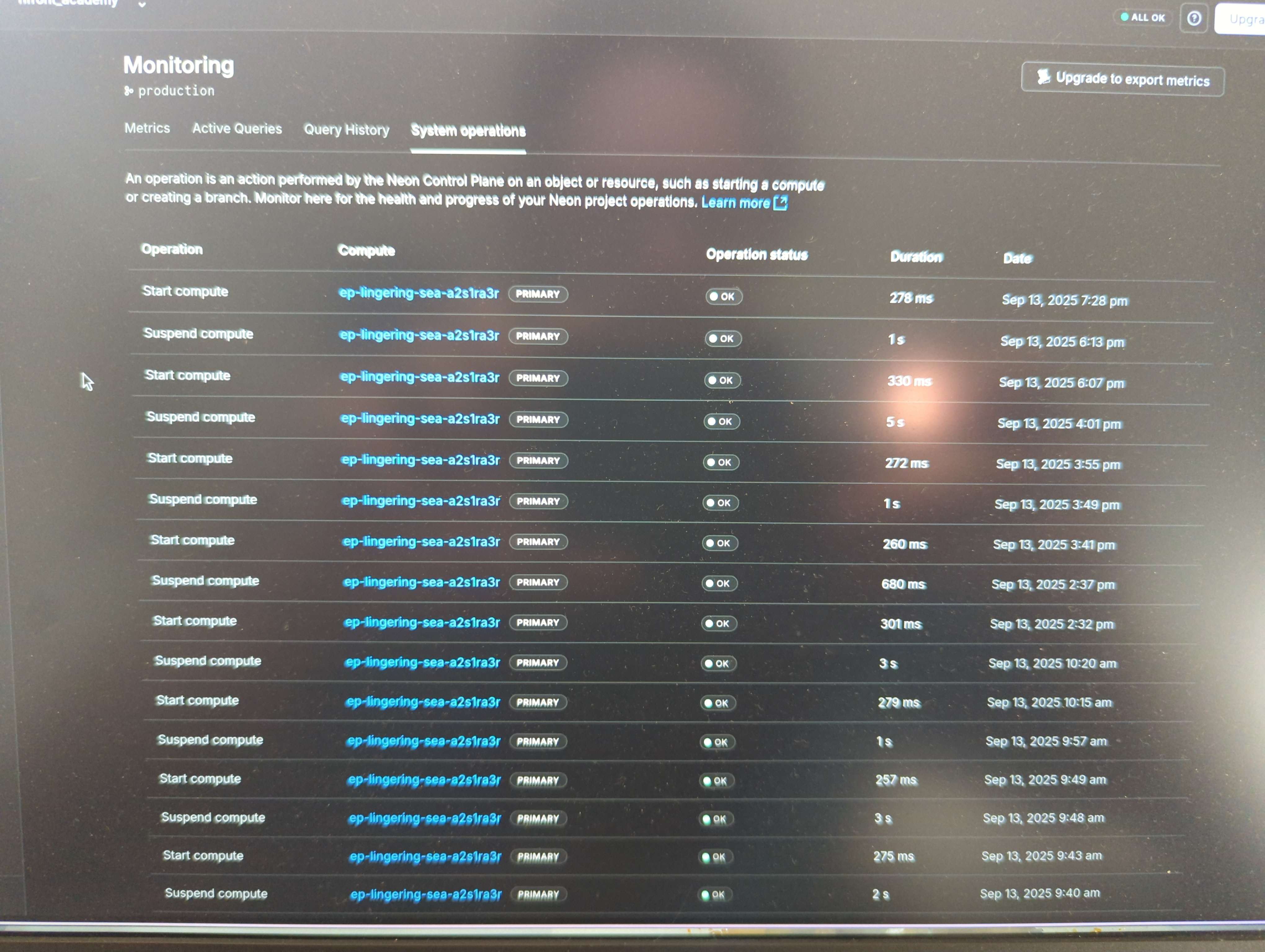

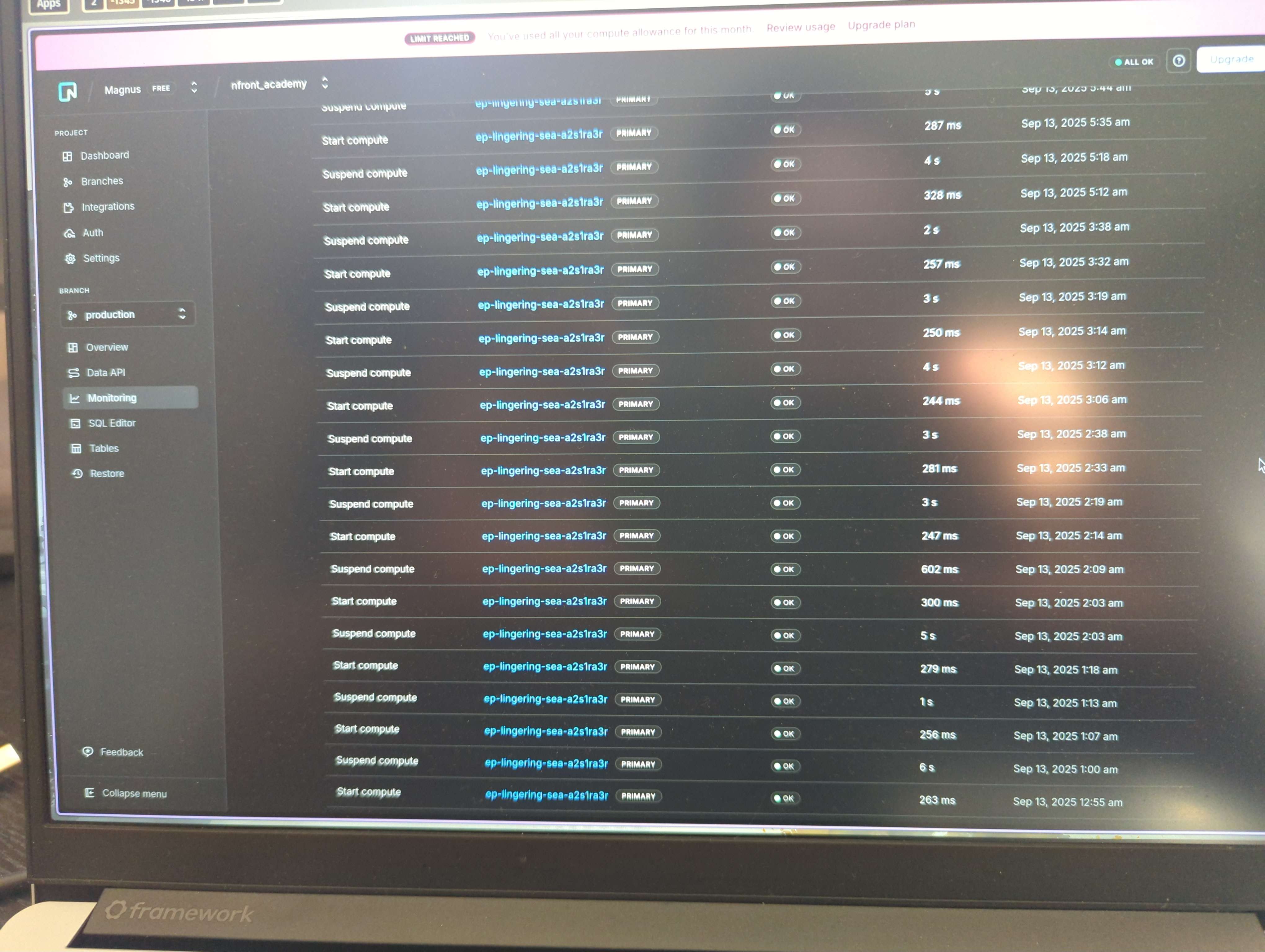

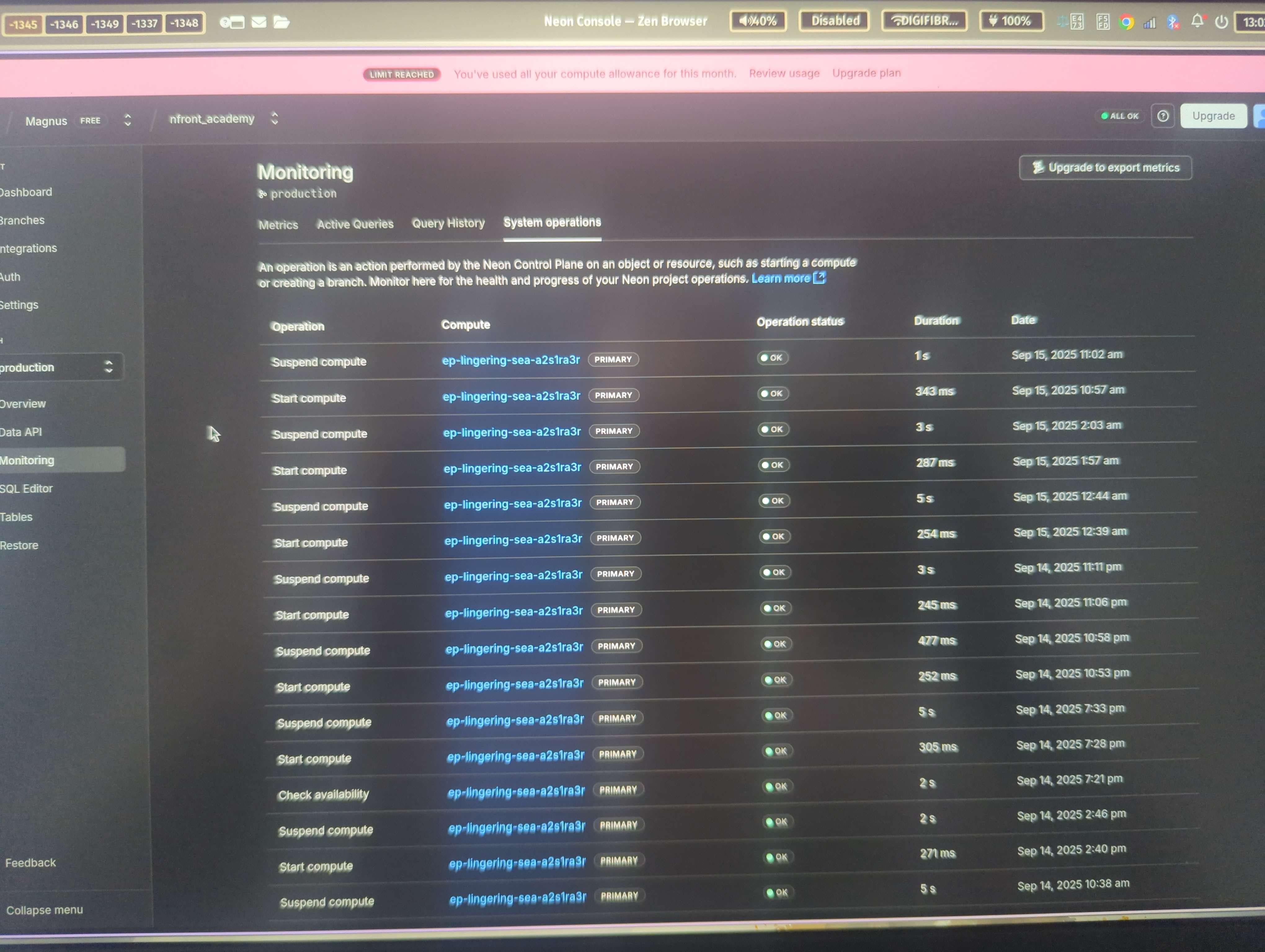

I checked the "Monitoring > System operations" tab to try to figure out when and how the compute was activated.

I noticed it turned on several times throughout the night. In fact, counting from midnight, I see 43 lines of compute activity up until 9am this morning (roughly half are suspends, half are starts).

This pattern of turning on and off (each time staying on for ~250ms) repeats every few minutes. No more than 15 minutes pass between consecutive "start" events.
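A rough way to sanity-check this pattern is to copy the start/suspend rows into a small script and measure the gaps, then estimate how many active hours a given wake-up frequency would add up to. The sketch below is only illustrative: the `ComputeEvent` shape, the function names, and the sample timestamps are made up, and how long the compute actually stays active after each start is an assumption that should come from the project's own suspend settings, not from this code.

```typescript
// Hypothetical shape for one row copied by hand from "Monitoring > System operations".
type ComputeEvent = { action: "start" | "suspend"; at: string }; // ISO timestamp

// Longest gap, in minutes, between consecutive "start" events.
function maxStartGapMinutes(events: ComputeEvent[]): number {
  const starts = events
    .filter((e) => e.action === "start")
    .map((e) => Date.parse(e.at))
    .sort((a, b) => a - b);
  let maxGap = 0;
  for (let i = 1; i < starts.length; i++) {
    maxGap = Math.max(maxGap, (starts[i] - starts[i - 1]) / 60000);
  }
  return maxGap;
}

// Rough active hours per day if every wake-up keeps the compute on for
// `activeMinutesPerWake` minutes. That figure is an ASSUMPTION (it depends on
// the project's idle/suspend timeout), not something read from the dashboard.
function estimateActiveHoursPerDay(
  wakeEveryMinutes: number,
  activeMinutesPerWake: number,
): number {
  const wakesPerDay = (24 * 60) / wakeEveryMinutes;
  // A wake-up cannot count for longer than the interval until the next one.
  const minutesPerWake = Math.min(activeMinutesPerWake, wakeEveryMinutes);
  return (wakesPerDay * minutesPerWake) / 60;
}

// Made-up sample rows, only to show the call shape.
const sample: ComputeEvent[] = [
  { action: "start", at: "2025-01-12T00:05:00Z" },
  { action: "suspend", at: "2025-01-12T00:10:00Z" },
  { action: "start", at: "2025-01-12T00:19:00Z" },
  { action: "suspend", at: "2025-01-12T00:24:00Z" },
  { action: "start", at: "2025-01-12T00:31:00Z" },
];

console.log(maxStartGapMinutes(sample)); // 14
console.log(estimateActiveHoursPerDay(15, 5)); // 8
```

Under those assumed numbers (a wake-up every 15 minutes, 5 active minutes per wake-up), the compute would be active about 8 hours a day even with no visitors, which is why the source of the periodic wake-ups matters more than the amount of real traffic.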

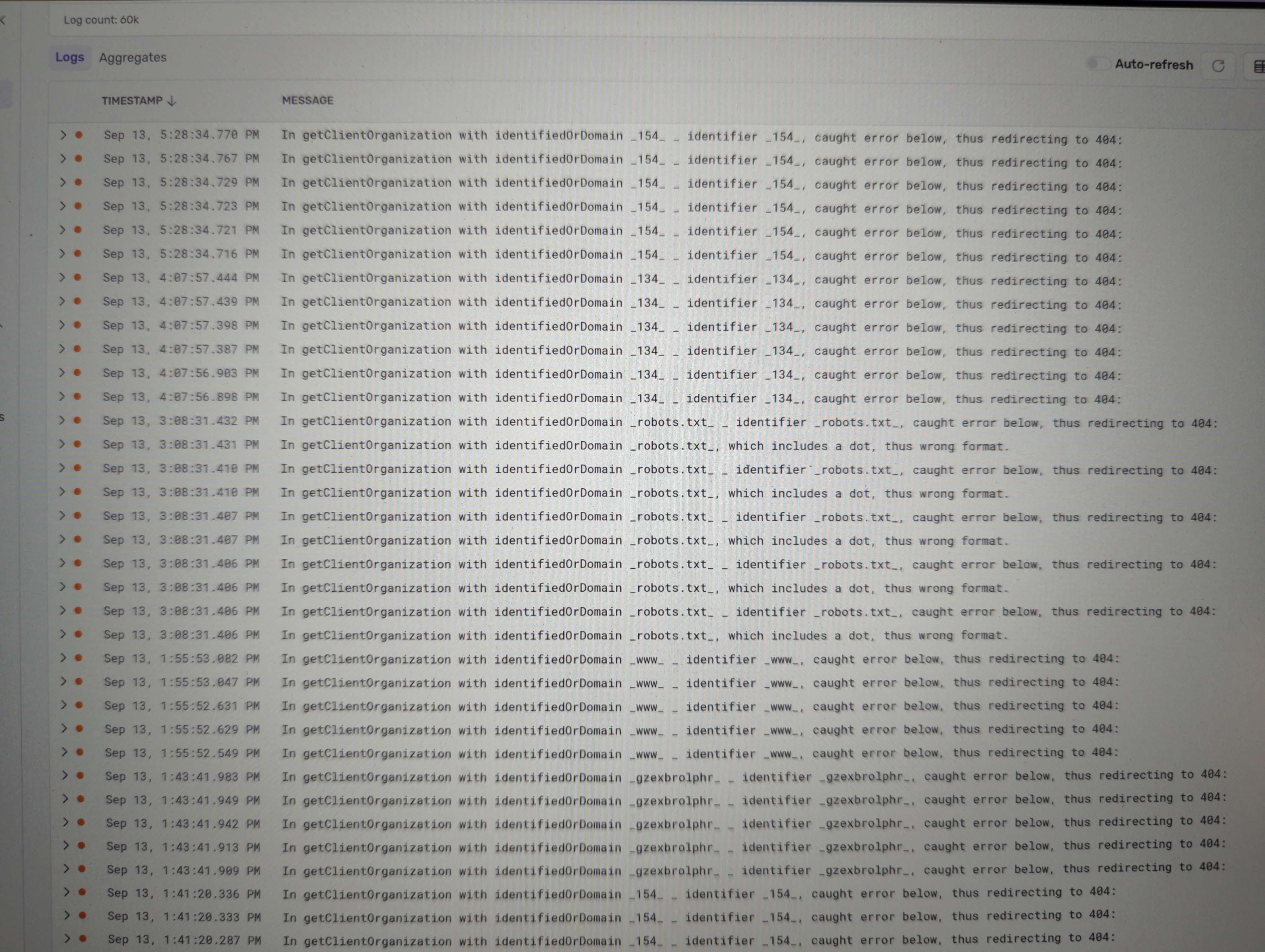

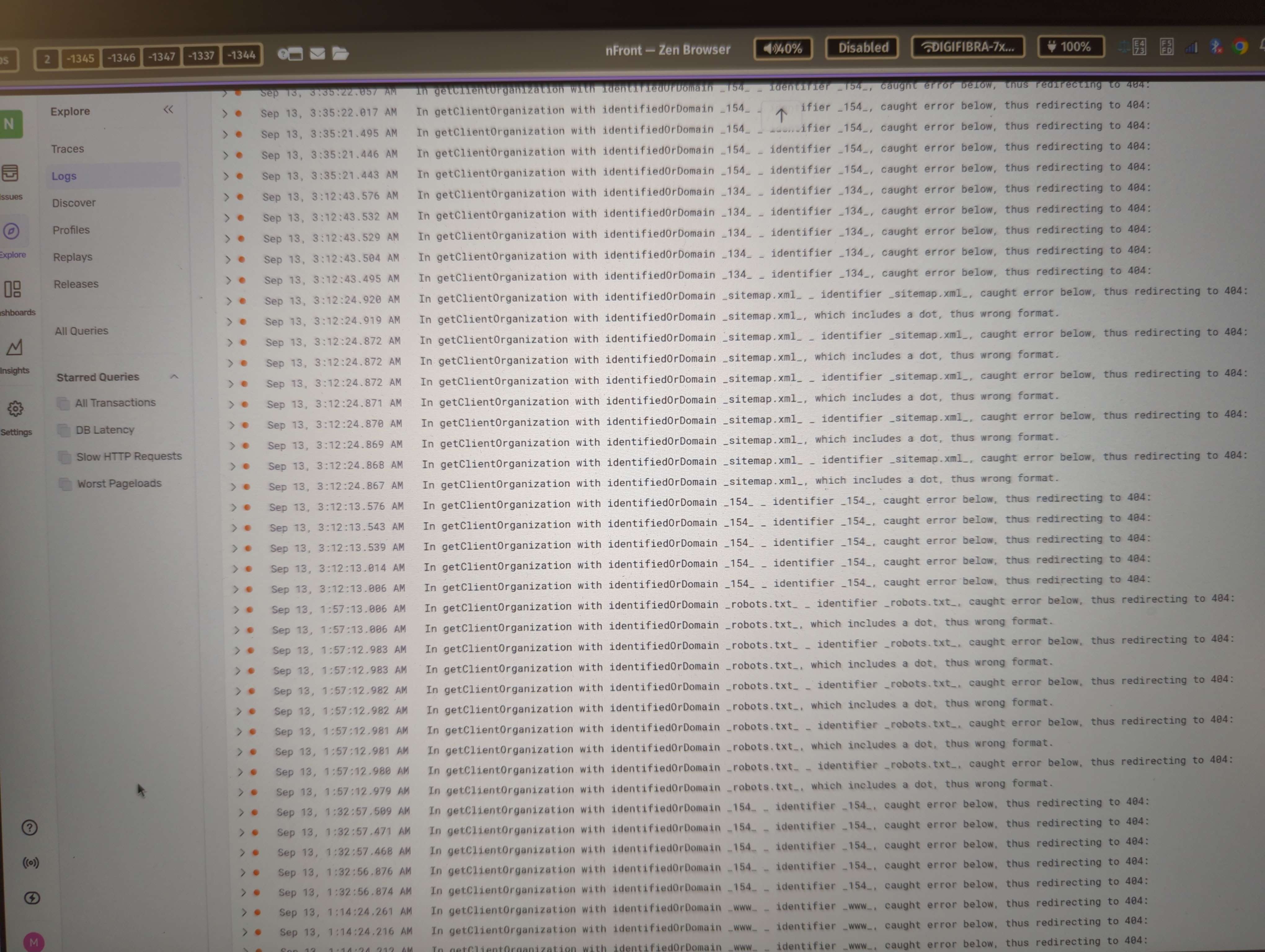

I am certain that neither of our two clients was up all night testing the app, and neither was I, so something really odd is going on here. I'm unsure how to debug this.

I also see exactly 901 connections (Max) every 15 minutes or so. It goes up to exactly 901, then drops to 0.

In case useful:

- We are storing the connection on `globalThis` in production, to avoid any unlikely reconnections.
- We are not calling any regular ping to keep the database active.
- We are using Drizzle, with `@neondatabase/serverless` version `^1.0.1`.
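For reference, the `globalThis` caching mentioned above looks roughly like the sketch below. It is not our exact code: `DbClient`, `createDbClient`, `getDb`, and the `__dbClient` key are hypothetical stand-ins, and the real factory would build the Drizzle instance on top of `@neondatabase/serverless` instead of returning a plain object.

```typescript
// Stand-in for the real client. In the app this would be the Drizzle instance;
// it is not imported here so the sketch stays self-contained.
type DbClient = { createdAt: number };

// Hypothetical key name; any unique property on globalThis would do.
const globalForDb = globalThis as unknown as { __dbClient?: DbClient };

// Counter used only to show how often the factory actually runs.
let timesCreated = 0;

// Hypothetical factory: replace the body with the real client construction.
function createDbClient(): DbClient {
  timesCreated += 1;
  return { createdAt: Date.now() };
}

// Returns the client cached in this process, creating it on first use.
function getDb(): DbClient {
  if (!globalForDb.__dbClient) {
    globalForDb.__dbClient = createDbClient();
  }
  return globalForDb.__dbClient;
}

const first = getDb();
const second = getDb();
console.log(first === second, timesCreated); // true 1
```

Note that this cache lives per JavaScript runtime instance, so each separate server process or serverless instance still creates its own client once.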